https://github.com/mahmoudnafifi/Exposure_Correction

Project page of the paper "Learning Multi-Scale Photo Exposure Correction" (CVPR 2021).

- Host: GitHub

- URL: https://github.com/mahmoudnafifi/Exposure_Correction

- Owner: mahmoudnafifi

- License: other

- Created: 2020-04-19T18:51:03.000Z (about 4 years ago)

- Default Branch: master

- Last Pushed: 2023-12-24T01:15:11.000Z (7 months ago)

- Last Synced: 2024-02-26T22:40:42.536Z (4 months ago)

- Topics: coarse-to-fine, color-correction, computational-photography, cvpr, cvpr2021, dataset, datasets, deep-learning, deeplearning, exposure-correction, image-enhancement, low-light-enhance, low-light-image, multi-scale, overexposure-correction, underexposure-correction

- Language: MATLAB

- Homepage:

- Size: 30.9 MB

- Stars: 466

- Watchers: 32

- Forks: 59

- Open Issues: 4

Metadata Files:

- Readme: README.md

- License: LICENSE.md

Lists

- Awesome-Low-Light-Enhancement

README

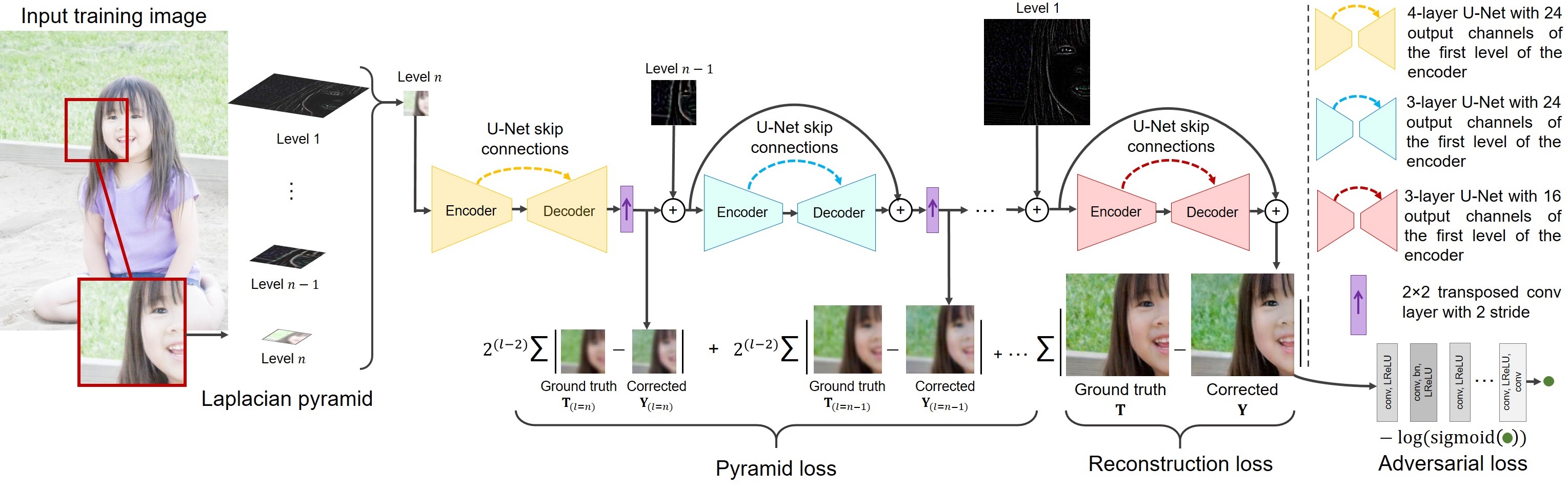

# Learning Multi-Scale Photo Exposure Correction

*[Mahmoud Afifi](https://sites.google.com/view/mafifi)*<sup>1,2</sup>,
*[Konstantinos G. Derpanis](https://www.cs.ryerson.ca/kosta/)*<sup>1</sup>,
*[Björn Ommer](https://hci.iwr.uni-heidelberg.de/Staff/bommer)*<sup>3</sup>,
and *[Michael S. Brown](http://www.cse.yorku.ca/~mbrown/)*<sup>1</sup>

<sup>1</sup>Samsung AI Center (SAIC) - Toronto, <sup>2</sup>York University, <sup>3</sup>Heidelberg University

Project page of the paper [Learning Multi-Scale Photo Exposure Correction.](https://arxiv.org/pdf/2003.11596.pdf) Mahmoud Afifi, Konstantinos G. Derpanis, Björn Ommer, and Michael S. Brown. In CVPR, 2021. If you use this code or our dataset, please cite our paper:

```
@inproceedings{afifi2021learning,
  title={Learning Multi-Scale Photo Exposure Correction},
  author={Afifi, Mahmoud and Derpanis, Konstantinos G and Ommer, Bj{\"o}rn and Brown, Michael S},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},
  year={2021}
}
```

## Dataset

Download our dataset from the following links:

[Training](https://ln2.sync.com/dl/141f68cf0/mrt3jtm9-ywbdrvtw-avba76t4-w6fw8fzj) ([mirror](https://drive.google.com/file/d/1YtsTeUThgD2tzF6RDwQ7Ol9VTSwqFHc_/view?usp=sharing)) | [Validation](https://ln2.sync.com/dl/49a6738c0/3m3imxpe-w6eqiczn-vripaqcf-jpswtcfr) ([mirror](https://drive.google.com/file/d/1k_L2I63NpjDbhFFfHinwF7_2KjTIiipk/view?usp=sharing)) | [Testing](https://ln2.sync.com/dl/098a6c5e0/cienw23w-usca2rgh-u5fxikex-q7vydzkp) ([mirror](https://drive.google.com/file/d/1uxiD6-DOeLnLyI_51DUHMRxORHmUWtgz/view?usp=sharing)) | [Our results](https://ln2.sync.com/dl/36fe0c4e0/d5buy3rd-gkhbcv78-qjj7c2kx-j25u9qk9)

As the dataset was originally rendered using raw images taken from the MIT-Adobe FiveK dataset, our dataset follows the original license of the MIT-Adobe FiveK dataset.

## Code

### Prerequisite

1. MATLAB R2019b or higher (tested on MATLAB R2019b)

2. Deep Learning Toolbox

### Get Started

Run `install_.m`

#### Demos:

1. Run `demo_single_image.m` or `demo_image_directory.m` to process a single image or an image directory, respectively. Running `demo_single_image.m` saves the result in `../result_images` and displays the output figure.

2. Run `demo_GUI.m` for a GUI demo.

We provide a way to interactively control the output by scaling each level of the input image's Laplacian pyramid before the levels are fed to the network. The scale factors are set through the `S` variable in `demo_single_image.m` or `demo_image_directory.m`, or from the GUI demo. Each scale factor in the `S` vector multiplies the corresponding pyramid level.

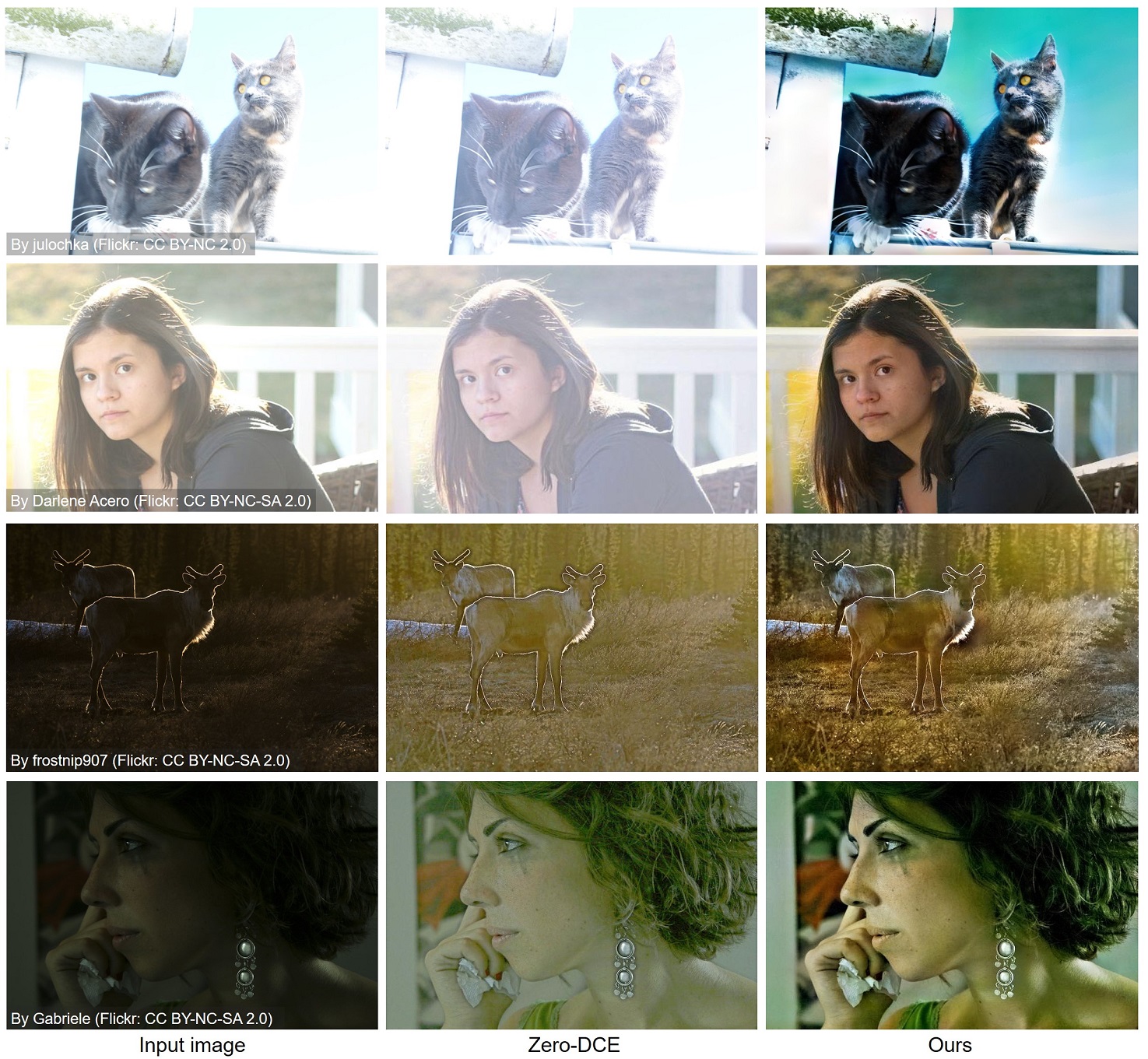
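The per-level scaling can be illustrated with a small Python sketch (the repository itself is MATLAB). This is a simplified stand-in, not the project's code: it downsamples with a 2×2 box filter and upsamples by pixel replication instead of using a Gaussian kernel, works on single-channel images whose sides are divisible by 2^(levels - 1), and orders levels finest-first, which may not match the ordering of `S` in the MATLAB demos. All helper names are hypothetical.

```python
from typing import List

Image = List[List[float]]  # single-channel image stored as rows of floats


def downsample(img: Image) -> Image:
    """Halve the resolution by averaging each non-overlapping 2x2 block."""
    h, w = len(img), len(img[0])
    return [
        [
            (img[2 * i][2 * j] + img[2 * i][2 * j + 1]
             + img[2 * i + 1][2 * j] + img[2 * i + 1][2 * j + 1]) / 4.0
            for j in range(w // 2)
        ]
        for i in range(h // 2)
    ]


def upsample(img: Image) -> Image:
    """Double the resolution by repeating every pixel in a 2x2 block."""
    out: Image = []
    for row in img:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def combine(a: Image, b: Image, sign: float) -> Image:
    """Element-wise a + sign * b."""
    return [[x + sign * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def build_laplacian_pyramid(img: Image, levels: int) -> List[Image]:
    """Finest detail band first; the last entry is the coarse low-pass residual."""
    pyramid: List[Image] = []
    current = img
    for _ in range(levels - 1):
        smaller = downsample(current)
        pyramid.append(combine(current, upsample(smaller), -1.0))  # detail band
        current = smaller
    pyramid.append(current)
    return pyramid


def scale_pyramid(pyramid: List[Image], S: List[float]) -> List[Image]:
    """Multiply pyramid level k by S[k] (hypothetical finest-first ordering)."""
    if len(S) != len(pyramid):
        raise ValueError("S must have one factor per pyramid level")
    return [[[s * v for v in row] for row in level] for level, s in zip(pyramid, S)]


def reconstruct(pyramid: List[Image]) -> Image:
    """Collapse the pyramid: upsample the coarse level and add each band back."""
    current = pyramid[-1]
    for band in reversed(pyramid[:-1]):
        current = combine(upsample(current), band, 1.0)
    return current
```

With every factor equal to 1, `reconstruct` returns the original image. In this box-filter construction each detail band averages to zero, so scaling only the coarsest level by `s` scales the image mean by `s`. In the actual method the scaled levels are passed to the network rather than collapsed directly; `reconstruct` is included here only to make the effect visible.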

Additional post-processing options include fusion and histogram adjustment, which can be turned on using the `fusion` and `pp` variables, respectively, in `demo_single_image.m` or `demo_image_directory.m`. These options are also available in the GUI demo. Note that neither the `fusion` nor the `pp` option was used to produce the results in our paper, but they can improve the results in some cases.

#### Training:

We train our model end-to-end to minimize a reconstruction loss, a Laplacian pyramid loss, and an adversarial loss. We train on patches randomly extracted from the training images at different dimensions: first on 128×128-pixel patches, then on 256×256 patches, and finally on 512×512 patches.
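How the three loss terms combine can be sketched in Python (the repository itself is MATLAB). Every detail here is an assumption for illustration: images are single-channel lists of floats, the reconstruction and pyramid terms use L1 distance, the adversarial term is the standard non-saturating generator loss computed from hypothetical discriminator probabilities, and the weights `w_rec`, `w_pyr`, `w_adv`, and `level_weights` are hypothetical placeholders, not the values used in the paper or in `main_training.m`.

```python
import math
from typing import List, Optional, Sequence

Image = List[List[float]]  # single-channel image stored as rows of floats


def l1(a: Image, b: Image) -> float:
    """Mean absolute error between two equally sized images."""
    diffs = [abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    return sum(diffs) / len(diffs)


def pyramid_loss(outputs: Sequence[Image], targets: Sequence[Image],
                 level_weights: Optional[Sequence[float]] = None) -> float:
    """Weighted L1 between per-scale network outputs and matching target levels."""
    if len(outputs) != len(targets):
        raise ValueError("need one target per output scale")
    weights = list(level_weights) if level_weights is not None else [1.0] * len(outputs)
    if len(weights) != len(outputs):
        raise ValueError("need one weight per output scale")
    return sum(w * l1(o, t) for w, o, t in zip(weights, outputs, targets))


def adversarial_loss(disc_probs: Sequence[float], eps: float = 1e-12) -> float:
    """Non-saturating generator loss: -mean(log D(output)), clamped for stability."""
    return -sum(math.log(max(p, eps)) for p in disc_probs) / len(disc_probs)


def total_loss(output: Image, target: Image,
               scale_outputs: Sequence[Image], scale_targets: Sequence[Image],
               disc_probs: Sequence[float],
               w_rec: float = 1.0, w_pyr: float = 1.0, w_adv: float = 1.0) -> float:
    """Hypothetical weighted sum of the three terms named in the README."""
    return (w_rec * l1(output, target)
            + w_pyr * pyramid_loss(scale_outputs, scale_targets)
            + w_adv * adversarial_loss(disc_probs))
```

Keeping each term in its own function makes it easy to switch the adversarial term off (set `w_adv = 0`) or reweight pyramid levels without touching the rest of the objective.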

Before starting, run `src/patches_extraction.m` to extract random patches of different dimensions; adjust the training/validation image directories in the script before running it. In the given code, the dataset is expected to be located in the `exposure_dataset` folder in the root directory, which should include the following directories:

```
- exposure_dataset/
    training/
        INPUT_IMAGES/
        GT_IMAGES/
    validation/
        INPUT_IMAGES/
        GT_IMAGES/
```

`src/patches_extraction.m` creates subdirectories containing the patches extracted from each image and its corresponding ground-truth image at different resolutions.
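Paired random patch extraction can be sketched in Python as follows (the repository's `src/patches_extraction.m` is MATLAB and may work differently). The essential point is that each input patch and its ground-truth patch are cropped from the same window, so the pair stays pixel-aligned. The number of patches per image (`count`), the seed, and all function names are hypothetical; the sizes 128, 256, and 512 come from the training description in this README.

```python
import random
from typing import Dict, List, Sequence, Tuple

Image = List[List[float]]   # single-channel image stored as rows of floats
Pair = Tuple[Image, Image]  # (input patch, ground-truth patch)


def crop(img: Image, top: int, left: int, size: int) -> Image:
    """Return the size x size window whose top-left corner is (top, left)."""
    return [row[left:left + size] for row in img[top:top + size]]


def extract_paired_patches(inp: Image, gt: Image, size: int, count: int,
                           rng: random.Random) -> List[Pair]:
    """Crop `count` random windows, using the same window for input and ground truth."""
    h, w = len(inp), len(inp[0])
    if (len(gt), len(gt[0])) != (h, w):
        raise ValueError("input and ground-truth images must have the same size")
    if size > h or size > w:
        raise ValueError("patch is larger than the image")
    pairs: List[Pair] = []
    for _ in range(count):
        top = rng.randint(0, h - size)   # randint is inclusive on both ends
        left = rng.randint(0, w - size)
        pairs.append((crop(inp, top, left, size), crop(gt, top, left, size)))
    return pairs


def extract_multi_scale(inp: Image, gt: Image,
                        sizes: Sequence[int] = (128, 256, 512),
                        count: int = 4, seed: int = 0) -> Dict[int, List[Pair]]:
    """Patches for every size that fits inside the image, keyed by patch size."""
    rng = random.Random(seed)
    limit = min(len(inp), len(inp[0]))
    return {s: extract_paired_patches(inp, gt, s, count, rng)
            for s in sizes if s <= limit}
```

Using a seeded `random.Random` instance makes the extracted patch set reproducible across runs; sizes that do not fit the image are skipped rather than padded.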

After extracting the training patches, run `main_training.m` to start training; adjust the training/validation image directories before running the code. All training options are available in `main_training.m`.

## Results

This software is provided for research purposes only and CANNOT be used for commercial purposes.

Maintainer: Mahmoud Afifi

## Related Research Projects

- [Deep White-Balance Editing](https://github.com/mahmoudnafifi/Deep_White_Balance): A deep learning multi-task framework for white-balance editing (CVPR 2020).