Ecosyste.ms: Awesome

An open API service indexing awesome lists of open source software.

https://github.com/Luolc/AdaBound

An optimizer that trains as fast as Adam and as good as SGD.


- Host: GitHub

- URL: https://github.com/Luolc/AdaBound

- Owner: Luolc

- License: apache-2.0

- Created: 2019-02-15T18:05:20.000Z (over 5 years ago)

- Default Branch: master

- Last Pushed: 2023-07-23T10:44:20.000Z (11 months ago)

- Last Synced: 2024-03-25T20:01:45.055Z (2 months ago)

- Language: Python

- Homepage: https://www.luolc.com/publications/adabound/

- Size: 2.59 MB

- Stars: 2,905

- Watchers: 74

- Forks: 327

- Open Issues: 19


Metadata Files:

- Readme: README.md

- License: LICENSE

Lists

- Awesome-pytorch-list - AdaBound

- awesome-stars - AdaBound - An optimizer that trains as fast as Adam and as good as SGD. (Python)

- awesome-stars - Luolc/AdaBound - An optimizer that trains as fast as Adam and as good as SGD. (Python)

- my-awesome-stars - Luolc/AdaBound - An optimizer that trains as fast as Adam and as good as SGD. (Python)

- Awesome-pytorch-list-CNVersion - AdaBound

- awesome-stars - AdaBound

- awesome-computer-vision-papers - 2019ICLR - [example](https://github.com/taki0112/AdaBound-Tensorflow) (DeepCNN / Optimization)

- awesome-python-machine-learning-resources - GitHub - 72% open · ⏱️ 06.03.2019 (PyTorch utilities)

README

# AdaBound

[](https://pypi.org/project/adabound/)

[](https://pypi.org/project/adabound/)

[](https://pypi.org/project/adabound/)

[](./LICENSE)

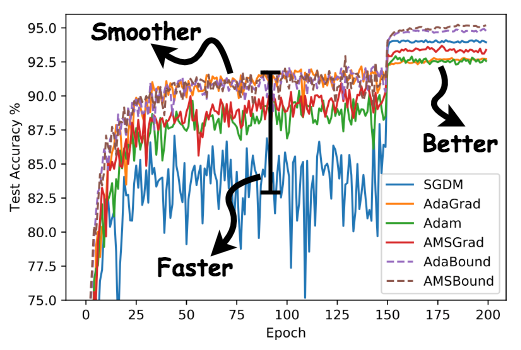

An optimizer that trains as fast as Adam and as well as SGD, for developing state-of-the-art deep learning models on a wide variety of popular tasks in fields such as CV and NLP.

Based on Luo et al. (2019), [Adaptive Gradient Methods with Dynamic Bound of Learning Rate](https://openreview.net/forum?id=Bkg3g2R9FX), in *Proc. of ICLR 2019*.

## Quick Links

- [Website](https://www.luolc.com/publications/adabound/)

- [Demos](./demos)

## Installation

AdaBound requires Python 3.6.0 or later.

We currently provide a PyTorch version; a TensorFlow version is coming soon.

### Installing via pip

The preferred way to install AdaBound is via `pip` with a virtual environment.

Just run

```bash
pip install adabound
```

in your Python environment and you are ready to go!

### Using source code

Since AdaBound is a single Python class of only 100+ lines, an alternative is to download [adabound.py](./adabound/adabound.py) directly and copy it into your project.

## Usage

You can use AdaBound just like any other PyTorch optimizer (here `model` is your `torch.nn.Module`):

```python3
import adabound
optimizer = adabound.AdaBound(model.parameters(), lr=1e-3, final_lr=0.1)
```

As described in the paper, AdaBound behaves like Adam at the beginning of training and gradually transforms into SGD toward the end.
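To make the Adam-to-SGD transition concrete, the sketch below computes a pair of dynamic bounds that clip the per-parameter step size. The helper `lr_bounds` is hypothetical, and the exact formulas (and the `gamma` parameter controlling how fast the interval tightens) are an assumption modeled on the example bound functions discussed in the paper, not a copy of `adabound.py`. Early on, the interval is so wide that it barely constrains Adam's adaptive step size; as the step count grows, both bounds converge to `final_lr`, which leaves plain SGD.

```python
def lr_bounds(step: int, final_lr: float = 0.1, gamma: float = 1e-3):
    """Hypothetical helper: (lower, upper) clip range for the step size at `step` (1-based).

    Assumed form: both bounds converge to `final_lr` as `step` grows,
    and `gamma` controls how quickly the interval tightens.
    """
    if step < 1:
        raise ValueError("step must be >= 1")
    lower = final_lr * (1.0 - 1.0 / (gamma * step + 1.0))
    upper = final_lr * (1.0 + 1.0 / (gamma * step))
    return lower, upper


# Print how the clip interval narrows over training.
for step in (1, 1_000, 1_000_000):
    lower, upper = lr_bounds(step)
    print(f"step={step:>9}: lower={lower:.4f}, upper={upper:.4f}")
```

With these assumed defaults the interval is roughly [0.0001, 100.1] at step 1, [0.05, 0.2] at step 1,000, and [0.0999, 0.1001] at step 1,000,000.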

The `final_lr` parameter sets the learning rate of the SGD that AdaBound gradually transforms into.
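The role of `final_lr` can be illustrated with a plain-Python sketch of one AdaBound-style update on a flat list of floats: compute Adam's per-coordinate step size, clip it into bounds that converge to `final_lr`, then scale the first moment by the clipped value. This is a minimal illustration under stated assumptions, not the code in `adabound.py`: the function `adabound_step` and its `state` dict are hypothetical, the bound formulas are assumed (see the comments), and the defaults for `betas`, `gamma`, and `eps` are illustrative choices.

```python
import math


def adabound_step(param, grad, state, lr=1e-3, final_lr=0.1,
                  betas=(0.9, 0.999), gamma=1e-3, eps=1e-8):
    """Hypothetical sketch: one AdaBound-style update of `param` (list of floats), in place."""
    beta1, beta2 = betas
    state["step"] = state.get("step", 0) + 1
    t = state["step"]
    m = state.setdefault("m", [0.0] * len(param))  # first moment (EMA of gradients)
    v = state.setdefault("v", [0.0] * len(param))  # second moment (EMA of squared gradients)

    # Assumed dynamic bounds: both converge to final_lr as t grows.
    lower = final_lr * (1.0 - 1.0 / (gamma * t + 1.0))
    upper = final_lr * (1.0 + 1.0 / (gamma * t))

    # Bias-corrected base step size, as in Adam.
    base = lr * math.sqrt(1.0 - beta2 ** t) / (1.0 - beta1 ** t)

    for i, g in enumerate(grad):
        m[i] = beta1 * m[i] + (1.0 - beta1) * g
        v[i] = beta2 * v[i] + (1.0 - beta2) * g * g
        # Adam's per-coordinate step size, clipped into [lower, upper].
        step_size = min(max(base / (math.sqrt(v[i]) + eps), lower), upper)
        param[i] -= step_size * m[i]
    return param


# Usage: minimize f(x) = x^2 (gradient 2x), starting from x = 5.
x = [5.0]
state = {}
for _ in range(2000):
    adabound_step(x, [2.0 * x[0]], state)
print(x)
```

In this toy problem Adam's own step size quickly falls below the rising lower bound, so the update effectively becomes momentum SGD whose learning rate approaches `final_lr`.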

In typical cases, the default final learning rate of `0.1` can achieve relatively good and stable results on unseen data, and AdaBound is not very sensitive to its hyperparameters. See Appendix G of the paper for more details.

Despite its robust performance, we must still stress that **there is no silver bullet**. Using AdaBound does not free you from tuning hyperparameters. The performance of a model depends on many factors, including the task, the model architecture, and the data distribution.

**You still need to decide which hyperparameters to use based on your specific situation, but you will probably spend much less time on it than before!**

## Demos

Thanks to the awesome work by the GitHub and Jupyter teams, Jupyter notebook (`.ipynb`) files render directly on GitHub. We provide several notebooks (like [this one](./demos/cifar10/visualization.ipynb)) for better visualization, and we hope these examples illustrate the robust performance of AdaBound.

For the full list of demos, please refer to [this page](./demos).

## Citing

If you use AdaBound in your research, please cite [Adaptive Gradient Methods with Dynamic Bound of Learning Rate](https://openreview.net/forum?id=Bkg3g2R9FX).

```text
@inproceedings{Luo2019AdaBound,
  author = {Luo, Liangchen and Xiong, Yuanhao and Liu, Yan and Sun, Xu},
  title = {Adaptive Gradient Methods with Dynamic Bound of Learning Rate},
  booktitle = {Proceedings of the 7th International Conference on Learning Representations},
  month = {May},
  year = {2019},
  address = {New Orleans, Louisiana}
}
```

## Contributors

[@kayuksel](https://github.com/kayuksel)

## License

[Apache 2.0](./LICENSE)