Ecosyste.ms: Awesome

An open API service indexing awesome lists of open source software.

https://github.com/openbmb/agentverse

🤖 AgentVerse 🪐 is designed to facilitate the deployment of multiple LLM-based agents in various applications, which primarily provides two frameworks: task-solving and simulation


agent ai gpt gpt-4 llm

Last synced: about 1 month ago



- Host: GitHub

- URL: https://github.com/openbmb/agentverse

- Owner: OpenBMB

- License: apache-2.0

- Created: 2023-05-06T01:43:19.000Z (about 1 year ago)

- Default Branch: main

- Last Pushed: 2024-04-25T01:52:27.000Z (2 months ago)

- Last Synced: 2024-04-28T05:53:26.340Z (2 months ago)

- Topics: agent, ai, gpt, gpt-4, llm

- Language: JavaScript

- Homepage:

- Size: 238 MB

- Stars: 3,648

- Watchers: 56

- Forks: 342

- Open Issues: 18


Metadata Files:

- Readme: README.md

- License: LICENSE

Lists

- awesome-langchain - AgentVerse - agent environments for LLMs (Other LLM Frameworks / Videos Playlists)

- awesome-langchain-zh - AgentVerse

README

🤖 AgentVerse 🪐

【Paper】

【English | Chinese】

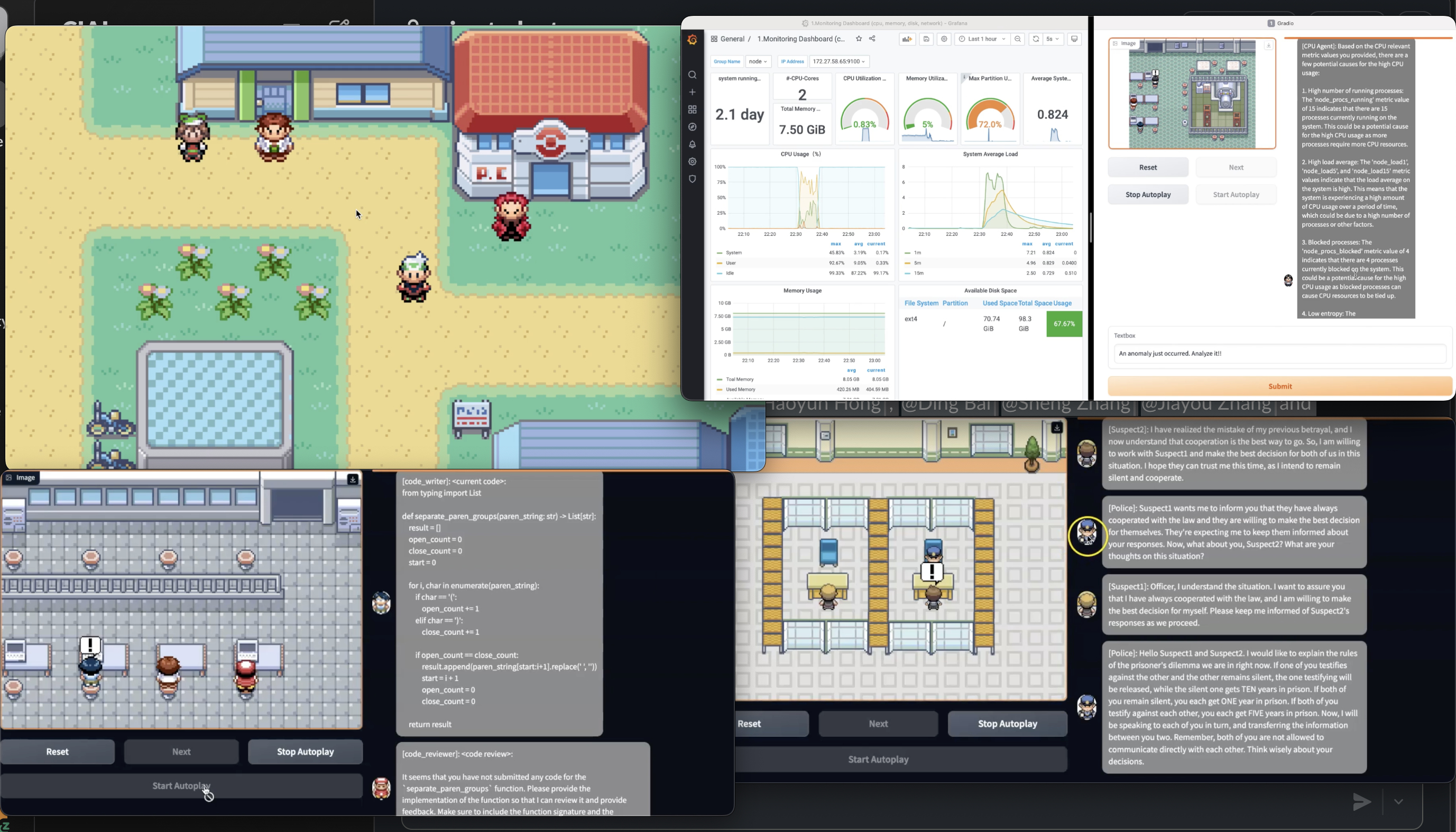

**AgentVerse** is designed to facilitate the deployment of multiple LLM-based agents in various applications. AgentVerse primarily provides two frameworks: **task-solving** and **simulation**.

- Task-solving: This framework assembles multiple agents as an automatic multi-agent system ([AgentVerse-Tasksolving](https://arxiv.org/pdf/2308.10848.pdf), [Multi-agent as system](https://arxiv.org/abs/2309.02427)) to collaboratively accomplish the corresponding tasks.

Applications: software development system, consulting system, etc.

- Simulation: This framework allows users to set up custom environments to observe behaviors among, or interact with, multiple agents. ⚠️⚠️⚠️ We're refactoring the code. If you need a stable version that supports only the simulation framework, use the [`release-0.1`](https://github.com/OpenBMB/AgentVerse/tree/release-0.1) branch.

Applications: games, social behavior research of LLM-based agents, etc.

---

# 📰 What's New

- [2024/3/17] AgentVerse was introduced in NVIDIA's blog - [Building Your First LLM Agent Application](https://developer.nvidia.com/blog/building-your-first-llm-agent-application/).

- [2024/1/17] We're super excited to announce that our paper has been accepted at ICLR 2024. More updates are coming soon!

- [2023/10/17] We're super excited to share our demo on the open-source AI community Hugging Face: [`AgentVerse`](https://huggingface.co/spaces/AgentVerse/agentVerse). You can try out the two simulation applications, NLP Classroom and Prisoner's Dilemma, with your own OpenAI API key and OpenAI organization. Have fun!

- [2023/10/5] We refactored our codebase to support deploying both the simulation and task-solving frameworks! The code for the Minecraft example in the paper is on the [`minecraft`](https://github.com/OpenBMB/AgentVerse/tree/minecraft) branch. Our tool-using example will soon be updated to the `main` branch. Stay tuned!

- [2023/8/22] We're excited to share our paper [AgentVerse: Facilitating Multi-Agent Collaboration and Exploring Emergent Behaviors in Agents](https://arxiv.org/abs/2308.10848), which illustrates the task-solving framework of AgentVerse in detail.

- [2023/6/5] We are thrilled to present an array of [demos](#-simple-demo-video), including [NLP Classroom](#nlp-classroom), [Prisoner's Dilemma](#prisoner-dilemma), [Software Design](#software-design), [Database Administrator](#database-administrator-dba), and a simple [H5 Pokemon Game](#pokemon) that lets you interact with the characters in Pokemon! Try out these demos and have fun!

- [2023/5/1] 🚀 [AgentVerse](https://github.com/OpenBMB/AgentVerse) is officially launched!

# 🗓 Coming Soon

- [x] Code release of our [paper](https://arxiv.org/abs/2308.10848)

- [x] Add support for local LLMs (LLaMA, Vicuna, etc.)

- [ ] Add documentation

- [ ] Support more sophisticated memory for conversation history

# Contents

- [📰 What's New](#-whats-new)
- [🗓 Coming Soon](#-coming-soon)
- [Contents](#contents)
- [🚀 Getting Started](#-getting-started)
  - [Installation](#installation)
  - [Environment Variables](#environment-variables)
  - [Simulation](#simulation)
    - [Framework Required Modules](#framework-required-modules)
    - [CLI Example](#cli-example)
    - [GUI Example](#gui-example)
  - [Task-Solving](#task-solving)
    - [Framework Required Modules](#framework-required-modules-1)
    - [CLI Example](#cli-example-1)
  - [Local Model Support](#local-model-support)
    - [vLLM Support](#vllm-support)
    - [FSChat Support](#fschat-support)
      - [1. Install the Additional Dependencies](#1-install-the-additional-dependencies)
      - [2. Launch the Local Server](#2-launch-the-local-server)
      - [3. Modify the Config File](#3-modify-the-config-file)
- [AgentVerse Showcases](#agentverse-showcases)
  - [Simulation Showcases](#simulation-showcases)
  - [Task-Solving Showcases](#task-solving-showcases)
- [🌟 Join Us!](#-join-us)
  - [Leaders](#leaders)
  - [Contributors](#contributors)
  - [How Can You Contribute?](#how-can-you-contribute)
  - [Social Media and Community](#social-media-and-community)
- [Star History](#star-history)
- [Citation](#citation)
- [Contact](#contact)

# 🚀 Getting Started

## Installation

**Manually Install (Recommended!)**

**Make sure you have Python >= 3.9**
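A quick way to confirm this requirement from the shell is sketched below. `version_ge` is a hypothetical helper (not part of AgentVerse) that compares only the major and minor version numbers, and the snippet assumes `python3` is the interpreter you will install into.

```bash
#!/usr/bin/env bash
# version_ge HAVE NEED: succeed if dotted version HAVE (e.g. 3.10.4) is >= NEED (e.g. 3.9).
# Hypothetical helper: only the major and minor components are compared.
version_ge() {
  local have_major have_minor need_major need_minor rest
  IFS=. read -r have_major have_minor rest <<< "$1"
  IFS=. read -r need_major need_minor rest <<< "$2"
  (( have_major > need_major || (have_major == need_major && have_minor >= need_minor) ))
}

# Ask the interpreter for its version; empty output means python3 was not found.
py_version="$(python3 -c 'import platform; print(platform.python_version())' 2>/dev/null)"
if version_ge "${py_version:-0.0}" 3.9; then
  echo "Python ${py_version} satisfies the >= 3.9 requirement"
else
  echo "AgentVerse needs Python >= 3.9 (found: ${py_version:-none})" >&2
fi
```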

```bash
git clone https://github.com/OpenBMB/AgentVerse.git --depth 1
cd AgentVerse
pip install -e .
```

If you want to use AgentVerse with local models such as LLaMA, you also need to install some additional dependencies:

```bash
pip install -r requirements_local.txt
```

**Install with pip**

Alternatively, you can install AgentVerse through pip:

```bash
pip install -U agentverse
```

## Environment Variables

You need to export your OpenAI API key as follows:

```bash
# Export your OpenAI API key
export OPENAI_API_KEY="your_api_key_here"
```

If you want to use Azure OpenAI services, export your Azure OpenAI API key and API base as follows:

```bash
export AZURE_OPENAI_API_KEY="your_api_key_here"
export AZURE_OPENAI_API_BASE="your_api_base_here"
```
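Before launching anything, you can sanity-check which credentials are exported. A minimal sketch: `check_llm_env` is a hypothetical helper that only tests whether the variables above are non-empty, not whether the keys are valid, and it warns when only one of the two Azure variables is set.

```bash
#!/usr/bin/env bash
# check_llm_env: print each backend whose credentials are exported, one per line.
# Returns non-zero when neither OpenAI nor a complete Azure pair is configured.
check_llm_env() {
  local found=0
  if [[ -n "${OPENAI_API_KEY:-}" ]]; then
    echo "openai"
    found=1
  fi
  if [[ -n "${AZURE_OPENAI_API_KEY:-}" && -n "${AZURE_OPENAI_API_BASE:-}" ]]; then
    echo "azure"
    found=1
  elif [[ -n "${AZURE_OPENAI_API_KEY:-}${AZURE_OPENAI_API_BASE:-}" ]]; then
    # Exactly one of the two Azure variables is set.
    echo "warning: Azure needs both AZURE_OPENAI_API_KEY and AZURE_OPENAI_API_BASE" >&2
  fi
  (( found == 1 ))
}
```

For example, `check_llm_env || echo "Export OPENAI_API_KEY or both Azure variables first" >&2` stops you before a run fails on missing credentials.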

## Simulation

### Framework Required Modules

```
- agentverse
  - agents
    - simulation_agent
  - environments
    - simulation_env
```

### CLI Example

You can run one of the multi-agent environments we provide. Take the classroom scenario as an example: it has nine agents, one playing the role of a professor and the other eight playing students.

```shell
agentverse-simulation --task simulation/nlp_classroom_9players
```

### GUI Example

We also provide a local website demo for this environment. You can launch it with:

```shell
agentverse-simulation-gui --task simulation/nlp_classroom_9players
```

After successfully launching the local server, you can visit [http://127.0.0.1:7860/](http://127.0.0.1:7860/) to view the classroom environment.
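If you script the GUI launch, you may want to wait until the server accepts connections before opening a browser. A minimal sketch using Bash's built-in `/dev/tcp` redirection (so it requires Bash, not `sh`); `wait_for_port` is a hypothetical helper and its 60-attempt default is arbitrary:

```bash
#!/usr/bin/env bash
# wait_for_port HOST PORT [RETRIES]: try a TCP connection once per second;
# succeed on the first connection, fail after RETRIES attempts (hypothetical helper).
wait_for_port() {
  local host="$1" port="$2" retries="${3:-60}" attempt
  for (( attempt = 1; attempt <= retries; attempt++ )); do
    # Open fd 3 to HOST:PORT in a subshell; the connection closes when the subshell exits.
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      return 0
    fi
    if (( attempt < retries )); then
      sleep 1
    fi
  done
  return 1
}

# Example:
#   agentverse-simulation-gui --task simulation/nlp_classroom_9players &
#   wait_for_port 127.0.0.1 7860 && echo "GUI ready at http://127.0.0.1:7860/"
```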

If you want to run the simulation cases with tools (e.g., simulation/nlp_classroom_3players_withtool), you need to install BMTools as follows:

```bash
git clone https://github.com/OpenBMB/BMTools.git
cd BMTools
pip install -r requirements.txt
python setup.py develop
```

This is optional. If you do not install BMTools, the simulation cases without tools can still run normally.

## Task-Solving

### Framework Required Modules

```
- agentverse
  - agents
    - tasksolving_agent
  - environments
    - tasksolving_env
```

### CLI Example

To run the experiments with the task-solving environment proposed in our [paper](https://arxiv.org/abs/2308.10848), use the commands below. To run AgentVerse on a benchmark dataset, you can try:

```shell
# Run the HumanEval benchmark using gpt-3.5-turbo (config file `agentverse/tasks/tasksolving/humaneval/gpt-3.5/config.yaml`)
agentverse-benchmark --task tasksolving/humaneval/gpt-3.5 --dataset_path data/humaneval/test.jsonl --overwrite
```

To run AgentVerse on a specific problem, you can try:

```shell
# Run a single query (config file `agentverse/tasks/tasksolving/brainstorming/gpt-3.5/config.yaml`). The task is specified in the config file.
agentverse-tasksolving --task tasksolving/brainstorming
```

To run the tool-using cases presented in our paper, i.e., multiple agents using tools such as a web browser, Jupyter notebook, and Bing search, you first need to build the ToolServer provided by [XAgent](https://github.com/OpenBMB/XAgent). Follow their [instructions](https://github.com/OpenBMB/XAgent#%EF%B8%8F-build-and-setup-toolserver) to build and run the ToolServer.

After building and launching the ToolServer, you can use the following command to run the task-solving cases with tools:

```shell
agentverse-tasksolving --task tasksolving/tool_using/24point
```

We provide more tasks in `agentverse/tasks/tasksolving/tool_using/` that show how multiple agents can use tools to solve problems.

Also, you can take a look at `agentverse/tasks/tasksolving` for more experiments we have done in our paper.
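To see which `--task` values are available, you can list the task directories that contain a `config.yaml`. A minimal sketch, run from the repository root; `list_tasks` is a hypothetical helper, and it assumes the task-to-config mapping shown in the HumanEval example (`--task tasksolving/humaneval/gpt-3.5` reads `agentverse/tasks/tasksolving/humaneval/gpt-3.5/config.yaml`). The brainstorming example suggests not every task follows that mapping exactly, so treat the output as a starting point.

```bash
#!/usr/bin/env bash
# list_tasks ROOT [SUBDIR]: print a candidate --task value for every config.yaml
# found under ROOT/SUBDIR, with paths made relative to ROOT (hypothetical helper).
list_tasks() {
  local root="${1%/}" sub="${2:-}" cfg rel
  local start="${root}${sub:+/$sub}"
  find "$start" -type f -name config.yaml | while IFS= read -r cfg; do
    rel="${cfg#"$root"/}"                 # drop the ROOT/ prefix
    printf '%s\n' "${rel%/config.yaml}"   # drop the trailing /config.yaml
  done | sort
}

# Example (from the repository root):
#   list_tasks agentverse/tasks tasksolving/tool_using
```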

## Local Model Support

### vLLM Support

If you want to use vLLM, follow the [vLLM quickstart guide](https://docs.vllm.ai/en/latest/getting_started/quickstart.html) to install and set up a vLLM server, which can handle larger inference workloads. Then set the following environment variables to connect to the vLLM server:

```bash
export VLLM_API_KEY="your_api_key_here"
export VLLM_API_BASE="http://your_vllm_url_here"
```

Then modify the `model` in the task config file so that it matches the model name in the vLLM server. For example:

```yaml
model_type: vllm
model: llama-2-7b-chat-hf
```
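The `model` value has to match a name the server actually serves. Assuming your vLLM server runs its OpenAI-compatible API, which lists served models at `GET /v1/models`, a minimal sketch for querying it with `curl` follows. Both helper names are hypothetical, and whether `VLLM_API_BASE` should already end in `/v1` is an assumption the URL builder tolerates either way.

```bash
#!/usr/bin/env bash
# vllm_models_url BASE: build the model-listing URL of an OpenAI-compatible server,
# appending /v1 when BASE does not already end with it (hypothetical helper).
vllm_models_url() {
  local base="${1%/}"          # drop a single trailing slash
  case "$base" in
    */v1) ;;                   # already the versioned API root
    *) base="${base}/v1" ;;
  esac
  printf '%s/models\n' "$base"
}

# list_vllm_models: print the JSON model list from the server in VLLM_API_BASE (needs curl).
list_vllm_models() {
  if [[ -z "${VLLM_API_BASE:-}" ]]; then
    echo "VLLM_API_BASE is not set" >&2
    return 1
  fi
  curl -fsS -H "Authorization: Bearer ${VLLM_API_KEY:-}" "$(vllm_models_url "$VLLM_API_BASE")"
}
```

Running `list_vllm_models` prints a JSON object whose `data[].id` fields are the model names the server accepts; the `model` in your task config should match one of them.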

### FSChat Support

This section provides a step-by-step guide to integrating FSChat into AgentVerse. FSChat is a framework that supports running local models such as LLaMA and Vicuna on your local machine.

#### 1. Install the Additional Dependencies

To use local models such as LLaMA, you also need to install some additional dependencies:

```bash
pip install -r requirements_local.txt
```

#### 2. Launch the Local Server

Next, modify `MODEL_PATH` and `MODEL_NAME` to suit your needs, then launch the local server with the following command:

```bash
bash scripts/run_local_model_server.sh
```

By default, the script launches a service for the Llama 2 7B chat model.

The `MODEL_NAME` in AgentVerse currently supports several models including `llama-2-7b-chat-hf`, `llama-2-13b-chat-hf`, `llama-2-70b-chat-hf`, `vicuna-7b-v1.5`, and `vicuna-13b-v1.5`. If you wish to integrate additional models that are [compatible with FastChat](https://github.com/lm-sys/FastChat/blob/main/docs/model_support.md), you need to:

1. Add the new `MODEL_NAME` to `LOCAL_LLMS` in `agentverse/llms/__init__.py`.

2. Add a mapping from the new `MODEL_NAME` to its corresponding Hugging Face identifier in `LOCAL_LLMS_MAPPING` in the same `agentverse/llms/__init__.py` file.

#### 3. Modify the Config File

In your config file, set `llm_type` to `local` and `model` to your `MODEL_NAME`. For example:

```yaml
llm:
  llm_type: local
  model: llama-2-7b-chat-hf
  ...
```

You can refer to `agentverse/tasks/tasksolving/commongen/llama-2-7b-chat-hf/config.yaml` for a more detailed example.
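Before launching, you can check that the `model` in your config is one of the supported names listed above. A minimal sketch: the helper names are hypothetical, the supported list is copied from this README (the authoritative list is `LOCAL_LLMS` in `agentverse/llms/__init__.py`, so keep the two in sync if you add models), and the `sed` extraction assumes a simple, unquoted `model: <name>` line like the example above.

```bash
#!/usr/bin/env bash
# Names copied from this README; LOCAL_LLMS in agentverse/llms/__init__.py is authoritative.
SUPPORTED_LOCAL_MODELS=(
  llama-2-7b-chat-hf
  llama-2-13b-chat-hf
  llama-2-70b-chat-hf
  vicuna-7b-v1.5
  vicuna-13b-v1.5
)

# config_model FILE: print the first token after the first "model:" key in FILE.
config_model() {
  sed -n 's/^[[:space:]]*model:[[:space:]]*\([^[:space:]#]*\).*/\1/p' "$1" | head -n 1
}

# is_supported_local_model NAME: succeed if NAME appears in SUPPORTED_LOCAL_MODELS.
is_supported_local_model() {
  local name
  for name in "${SUPPORTED_LOCAL_MODELS[@]}"; do
    [[ "$name" == "$1" ]] && return 0
  done
  return 1
}

# Example:
#   model="$(config_model agentverse/tasks/tasksolving/commongen/llama-2-7b-chat-hf/config.yaml)"
#   is_supported_local_model "$model" || echo "unsupported local model: $model" >&2
```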

# AgentVerse Showcases

## Simulation Showcases

Refer to [simulation showcases](README_simulation_cases.md)

## Task-Solving Showcases

Refer to [tasksolving showcases](README_tasksolving_cases.md)

# 🌟 Join Us!

AgentVerse is on a mission to revolutionize the multi-agent environment for large language models, and we're eagerly looking for passionate collaborators to join us on this exciting journey.

## How Can You Contribute?

- **Issues and Pull Requests**: If you encounter any problems when using AgentVerse, you can open an issue in English. You can also ask us to assign an issue to you and, once you've solved it, send a PR (please follow the [PULL_REQUEST_TEMPLATE](https://github.com/OpenBMB/AgentVerse/blob/main/PULL_REQUEST_TEMPLATE.md)).

- **Code Development**: If you're an engineer, help us refine, optimize, and expand the current framework. We're always looking for talented developers to enhance our existing features and develop new modules.

- **Documentation and Tutorials**: If you have a knack for writing, help us improve our documentation, create tutorials, or write blog posts to make AgentVerse more accessible to the broader community.

- **Application Exploration**: If you're intrigued by multi-agent applications and are eager to experiment using AgentVerse, we'd be thrilled to support your journey and see what you create!

- **Feedback and Suggestions**: Use AgentVerse and provide us with feedback. Your insights can lead to potential improvements and ensure that our framework remains top-notch.

Also, if you're passionate about advancing the frontiers of multi-agent applications, want to become a core AgentVerse team member, or are eager to dive deeper into agent research, please reach out to the [AgentVerse Team](mailto:[email protected]?subject=[GitHub]%20AgentVerse%20Project) and CC [Weize Chen](mailto:[email protected]?subject=[GitHub]%20AgentVerse%20Project) and [Yusheng Su](mailto:[email protected]?subject=[GitHub]%20AgentVerse%20Project). We're keen to welcome motivated individuals like you to our team!

## Social Media and Community

- Twitter: https://twitter.com/Agentverse71134

- Discord: https://discord.gg/gDAXfjMw

- Hugging Face: https://huggingface.co/spaces/AgentVerse/agentVerse

# Star History

[Star History Chart](https://star-history.com/#OpenBMB/AgentVerse&Date)

## Citation

If you find this repo helpful, feel free to cite us.

```
@article{chen2023agentverse,
  title={Agentverse: Facilitating multi-agent collaboration and exploring emergent behaviors in agents},
  author={Chen, Weize and Su, Yusheng and Zuo, Jingwei and Yang, Cheng and Yuan, Chenfei and Qian, Chen and Chan, Chi-Min and Qin, Yujia and Lu, Yaxi and Xie, Ruobing and others},
  journal={arXiv preprint arXiv:2308.10848},
  year={2023}
}
```

# Contact

AgentVerse Team: [email protected]

Project leaders:

- Weize Chen: [email protected]

- [Yusheng Su](https://yushengsu-thu.github.io/): [email protected]