https://github.com/sigoden/aichat

All-in-one CLI tool for 10+ AI platforms, including OpenAI(ChatGPT), Gemini, Claude, Mistral, LocalAI, Ollama, VertexAI, Ernie, Qianwen...

ai all-in-one azure-openai chat chatbot chatgpt chatgpt-cli claude ernie gemini llama llm localai mistral ollama openai qianwen repl vertexai

- Host: GitHub

- URL: https://github.com/sigoden/aichat

- Owner: sigoden

- License: apache-2.0

- Created: 2023-03-03T00:29:39.000Z (over 1 year ago)

- Default Branch: main

- Last Pushed: 2024-03-30T02:44:48.000Z (3 months ago)

- Last Synced: 2024-03-30T03:29:04.278Z (3 months ago)

- Topics: ai, all-in-one, azure-openai, chat, chatbot, chatgpt, chatgpt-cli, claude, ernie, gemini, llama, llm, localai, mistral, ollama, openai, qianwen, repl, vertexai

- Language: Rust

- Homepage:

- Size: 2.17 MB

- Stars: 2,518

- Watchers: 22

- Forks: 170

- Open Issues: 1

Metadata Files:

- Readme: README.md

- License: LICENSE-APACHE

Lists

- plurality - AIChat

- cli-apps - AIChat - Using ChatGPT/GPT-3.5/GPT-4 in the terminal. (AI / ChatGPT)

- awesome - sigoden/aichat - All-in-one AI CLI tool that integrates 20+ AI platforms, including OpenAI, Azure-OpenAI, Gemini, Claude, Mistral, Cohere, VertexAI, Bedrock, Ollama, Ernie, Qianwen, Deepseek... (Rust)

- awesome-stars - sigoden/aichat - Use GPT-4(V), Gemini, LocalAI, Ollama and other LLMs in the terminal. (Rust)

- awesome-ChatGPT-repositories - aichat - Using ChatGPT/GPT-3.5/GPT-4 in the terminal. (CLIs)

- awesome-chatgpt - sigoden/aichat - Use GPT-4(V), LocalAI and other LLMs in the terminal. (UIs / CLI)

- awesome-rust-llm - aichat - a pure Rust CLI implementing AI chat, with advanced features such as real-time streaming, text highlighting and more (Projects)

- awesome-llm-and-aigc - sigoden/aichat - Using ChatGPT/GPT-3.5/GPT-4 in the terminal. (Applications / Prompts (Magic))

- awesome-open-gpt - aichat - GPT-3.5/GPT-4 | 1. Supports role presets; 2. Syntax highlighting for markdown and 200 other languages | (Curated open source projects / GPT mirror alternatives)

- awesome-cuda-tensorrt-fpga - sigoden/aichat - Using ChatGPT/GPT-3.5/GPT-4 in the terminal. (Frameworks)

- awesome-cli-apps - AIChat - Using ChatGPT/GPT-3.5/GPT-4 in the terminal. (AI / ChatGPT)

- awesome-stars - sigoden/aichat - All-in-one CLI tool for 10+ AI platforms, including OpenAI(ChatGPT), Gemini, Claude, Mistral, LocalAI, Ollama, VertexAI, Ernie, Qianwen... (Rust)

- awesome-stars - sigoden/aichat - `★3105` All-in-one AI CLI tool that integrates 20+ AI platforms, including OpenAI, Azure-OpenAI, Gemini, Claude, Mistral, Cohere, VertexAI, Bedrock, Ollama, Ernie, Qianwen, Deepseek... (Rust)

- awesome-stars - aichat - All-in-one AI CLI tool that integrates 20+ AI platforms, including OpenAI, Azure-OpenAI, Gemini, Claude, Mistral, Cohere, VertexAI, Bedrock, Ollama, Ernie, Qianwen, Deepseek... | sigoden | 3088 | (Rust)

- awesome-stars - sigoden/aichat - All-in-one AI CLI tool that integrates 20+ AI platforms, including OpenAI, Azure-OpenAI, Gemini, Claude, Mistral, Cohere, VertexAI, Bedrock, Ollama, Ernie, Qianwen, Deepseek... (openai)

README

# AIChat

Use GPT-4(V), Gemini, LocalAI, Ollama and other LLMs in the terminal.

AIChat in chat REPL mode:

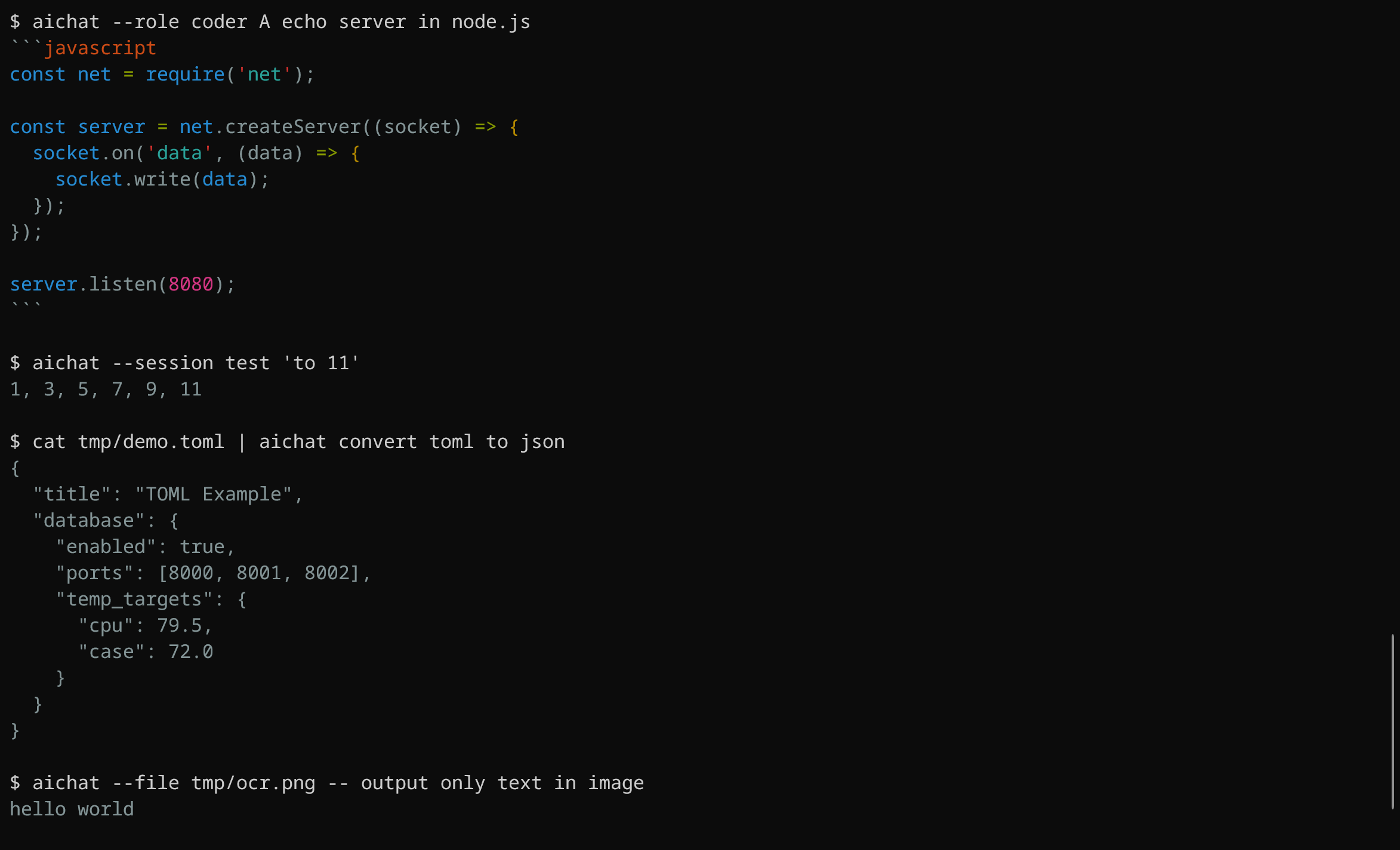

AIChat in command mode:

## Install

### Use a package management tool

For Rust programmers

```sh

cargo install aichat

```

For macOS Homebrew or Linuxbrew users

```sh

brew install aichat

```

For Windows Scoop users

```sh

scoop install aichat

```

For Android Termux users

```sh

pkg install aichat

```

### Binaries for macOS, Linux, Windows

Download it from [GitHub Releases](https://github.com/sigoden/aichat/releases), unzip and add aichat to your $PATH.
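
As a minimal sketch of the "add aichat to your $PATH" step, the snippet below prepends a directory to `PATH` only when it is not already listed. The install location `~/.local/bin` and the helper name `add_to_path` are assumptions for illustration, not something the project prescribes.

```sh
# Assumption: the release archive was unpacked into ~/.local/bin (hypothetical location).
install_dir="$HOME/.local/bin"

# Hypothetical helper: prepend a directory to PATH unless it is already listed.
add_to_path() {
  case ":$PATH:" in
    *":$1:"*) ;;                # already present, nothing to do
    *) PATH="$1:$PATH" ;;
  esac
}

add_to_path "$install_dir"
export PATH

# Report whether the binary can now be found.
command -v aichat >/dev/null 2>&1 || echo "aichat not found in PATH" >&2
```

To make the change permanent, the same lines can go into your shell's startup file.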

## Supported LLMs

- OpenAI: gpt-3.5/gpt-4/gpt-4-vision

- Gemini: gemini-pro/gemini-pro-vision/gemini-ultra

- LocalAI: open-source LLMs and other OpenAI-compatible LLMs

- Ollama: open-source LLMs

- Azure-OpenAI: user deployed gpt-3.5/gpt-4

- Ernie: ernie-bot-turbo/ernie-bot/ernie-bot-8k/ernie-bot-4

- Qianwen: qwen-turbo/qwen-plus/qwen-max/qwen-max-longcontext/qwen-vl-plus

## Features

- Two modes: [REPL](#chat-repl) and [Command](#command)

- Support [Roles](#roles)

- Support context-aware conversation (session)

- Support multimodal models (vision)

- Syntax highlighting for markdown and 200+ languages in code blocks

- Stream output

- Support proxy

- Dark/light theme

- Save messages/sessions

## Config

On first launch, aichat will guide you through the configuration.

```

> No config file, create a new one? Yes

> AI Platform: openai

> API Key: sk-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

```

Feel free to adjust the configuration according to your needs.

```yaml
model: openai:gpt-3.5-turbo      # LLM model
temperature: 1.0                 # GPT temperature, between 0 and 2
save: true                       # Whether to save the message
highlight: true                  # Set false to turn off highlighting
light_theme: false               # Whether to use a light theme
wrap: no                         # Specify the text-wrapping mode (no, auto, )
wrap_code: false                 # Whether to wrap code blocks
auto_copy: false                 # Automatically copy the last output to the clipboard
keybindings: emacs               # REPL keybindings. values: emacs, vi
prelude: ''                      # Set a default role or session (role:, session:)

clients:
  - type: openai
    api_key: sk-xxx
    organization_id:

  - type: localai
    api_base: http://localhost:8080/v1
    models:
      - name: gpt4all-j
        max_tokens: 8192
```

Take a look at the [config.example.yaml](config.example.yaml) for the complete configuration details.

Some settings can also be set through environment variables. For more information, please refer to the [Environment Variables](https://github.com/sigoden/aichat/wiki/Environment-Variables) page.

### Roles

We can define a batch of roles in `roles.yaml`.

> We can get the location of `roles.yaml` through the REPL's `.info` command or the CLI's `--info` option.

For example, we can define a role:

```yaml
- name: shell
  prompt: >
    I want you to act as a Linux shell expert.
    I want you to answer only with bash code.
    Do not provide explanations.
```

Let ChatGPT answer questions in the role of a Linux shell expert.

```

> .role shell

shell> extract encrypted zipfile app.zip to /tmp/app

mkdir /tmp/app

unzip -P PASSWORD app.zip -d /tmp/app

```

Combined with roles, AIChat becomes a general-purpose command-line tool.

```

$ aichat --role shell extract encrypted zipfile app.zip to /tmp/app

unzip -P password app.zip -d /tmp/app

$ cat README.md | aichat --role spellcheck

```
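
Because the `shell` role returns commands rather than explanations, it can be wrapped in a small helper that shows the suggestion before anything runs. This is only a sketch: it assumes a `shell` role defined as above, the function names `suggest_cmd` and `run_suggested` are hypothetical, and the flags used (`-S`, `-H`, `-r`) are the ones listed in the Command section.

```sh
# Hypothetical helper: ask the `shell` role for a command.
# -S disables streaming and -H disables highlighting, so the reply is plain text.
suggest_cmd() {
  aichat -S -H -r shell "$@"
}

# Hypothetical helper: print the suggestion and run it only after confirmation.
run_suggested() {
  cmd=$(suggest_cmd "$@") || return 1
  printf 'Suggested: %s\n' "$cmd"
  printf 'Run it? [y/N] '
  read -r answer
  case "$answer" in
    y|Y) sh -c "$cmd" ;;        # execute the suggested command
    *) echo "Skipped." ;;       # anything else leaves the system untouched
  esac
}
```

For example, `run_suggested extract encrypted zipfile app.zip to /tmp/app` would print the proposed command and wait for a `y` before executing it. Reviewing model-generated commands before running them is a deliberate choice here.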

For more details about roles, please visit [Role Guide](https://github.com/sigoden/aichat/wiki/Role-Guide).

## Chat REPL

aichat has a powerful Chat REPL.

The Chat REPL supports:

- Emacs/Vi keybinding

- [Custom REPL Prompt](https://github.com/sigoden/aichat/wiki/Custom-REPL-Prompt)

- Tab Completion

- Edit/paste multiline text

- Undo support

### `.help` - print help message

```

> .help

.help Print this help message

.info Print system info

.model Switch LLM model

.role Use a role

.info role Show role info

.exit role Leave current role

.session Start a context-aware chat session

.info session Show session info

.exit session End the current session

.file Attach files to the message and then submit it

.set Modify the configuration parameters

.copy Copy the last reply to the clipboard

.exit Exit the REPL

Type ::: to begin multi-line editing, type ::: to end it.

Press Ctrl+C to abort readline, Ctrl+D to exit the REPL

```

### `.info` - view information

```

> .info

model openai:gpt-3.5-turbo

temperature -

dry_run false

save true

highlight true

light_theme false

wrap no

wrap_code false

auto_copy false

keybindings emacs

prelude -

config_file /home/alice/.config/aichat/config.yaml

roles_file /home/alice/.config/aichat/roles.yaml

messages_file /home/alice/.config/aichat/messages.md

sessions_dir /home/alice/.config/aichat/sessions

```

### `.model` - choose a model

```

> .model openai:gpt-4

> .model localai:gpt4all-j

```

> You can easily enter the model name using autocomplete.

### `.role` - let the AI play a role

Select a role:

```

> .role emoji

```

Send message with the role:

```

emoji> hello

👋

```

Leave current role:

```

emoji> .exit role

> hello

Hello there! How can I assist you today?

```

Show role info:

```

emoji> .info role

name: emoji

prompt: I want you to translate the sentences I write into emojis. I will write the sentence, and you will express it with emojis. I just want you to express it with emojis. I don't want you to reply with anything but emoji. When I need to tell you something in English, I will do it by wrapping it in curly brackets like {like this}.

temperature: null

```

Temporarily use a role to send a message.

```

> ::: .role emoji

hello world

:::

👋🌍

>

```

### `.session` - context-aware conversation

By default, aichat handles each message as a one-off request/response, with no memory of earlier messages.

To keep context across messages, run aichat with `-s/--session` or use the `.session` command to start a session.

```
> .session

temp) 1 to 5, odd only                                      0
1, 3, 5

temp) to 7                                          19(0.46%)
1, 3, 5, 7

temp) .exit session                                 42(1.03%)
? Save session? (y/N)
```

The prompt on the right side shows the number of tokens used so far and, in parentheses, that number as a percentage of the maximum number of tokens allowed by the model.
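
As a worked check of the figures in the session example, the sketch below recomputes the right-hand prompt. The 4096-token limit is an assumption inferred from the numbers shown (19 tokens at 0.46%, 42 tokens at 1.03%), and the `usage` helper is hypothetical.

```sh
# Assumption: a 4096-token limit, which is consistent with the figures
# in the session example (19 tokens -> 0.46%, 42 tokens -> 1.03%).
max_tokens=4096

# Hypothetical helper: print "used(percent%)" in the same form as the right-hand prompt.
usage() {
  awk -v used="$1" -v max="$2" 'BEGIN { printf "%d(%.2f%%)\n", used, used / max * 100 }'
}

usage 19 "$max_tokens"   # prints 19(0.46%)
usage 42 "$max_tokens"   # prints 42(1.03%)
```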

### `.file` - attach files to the message

```

Usage: .file ... [-- text...]

.file message.txt

.file config.yaml -- convert to toml

.file a.jpg b.jpg -- What’s in these images?

.file https://ibb.co/a.png https://ibb.co/b.png -- what is the difference?

```

> Images submitted through the `.file` command can only be processed if the current model supports vision.

### `.set` - modify the configuration temporarily

```

> .set temperature 1.2

> .set dry_run true

> .set highlight false

> .set save false

> .set auto_copy true

```

## Command

```

Usage: aichat [OPTIONS] [TEXT]...

Arguments:

[TEXT]... Input text

Options:

-m, --model Choose a LLM model

-r, --role Choose a role

-s, --session [] Create or reuse a session

-f, --file ... Attach files to the message to be sent

-H, --no-highlight Disable syntax highlighting

-S, --no-stream No stream output

-w, --wrap Specify the text-wrapping mode (no*, auto, )

--light-theme Use light theme

--dry-run Run in dry run mode

--info Print related information

--list-models List all available models

--list-roles List all available roles

--list-sessions List all available sessions

-h, --help Print help

-V, --version Print version

```

Here are some practical examples:

```sh

aichat -s # Start REPL with a new temp session

aichat -s temp # Reuse temp session

aichat -r shell -s # Create a session with a role

aichat -m openai:gpt-4-32k -s # Create a session with a model

aichat -s sh unzip a file # Run session in command mode

aichat -r shell unzip a file # Use role in command mode

aichat -s shell unzip a file # Use session in command mode

cat config.json | aichat convert to yaml # Read stdin

cat config.json | aichat -r convert:yaml # Read stdin with a role

cat config.json | aichat -s i18n # Read stdin with a session

aichat --file a.png b.png -- diff images # Attach files

aichat --file screenshot.png -r ocr # Attach files with a role

aichat --list-models # List all available models

aichat --list-roles # List all available roles

aichat --list-sessions # List all available sessions

aichat --info # system-wide information

aichat -s temp --info # Show session details

aichat -r shell --info # Show role info

$(echo "$data" | aichat -S -H to json) # Use aichat in a script

```
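
The last example above embeds aichat in a script. A slightly more defensive sketch of the same idea follows. The helper names `to_json` and `convert` are hypothetical, and the error handling assumes that aichat exits with a non-zero status on failure, which this README does not state.

```sh
# Hypothetical helper: convert text on stdin to JSON.
# -S (no stream) and -H (no highlight) keep the reply free of terminal escapes.
to_json() {
  aichat -S -H to json
}

# Hypothetical helper: convert "$1" and fail if aichat errors or returns nothing.
# Assumption: aichat exits non-zero when the request fails.
convert() {
  result=$(printf '%s\n' "$1" | to_json) || return 1
  [ -n "$result" ] || return 1
  printf '%s\n' "$result"
}
```

A caller could then write `convert "$data" > out.json || echo "conversion failed" >&2`, so an empty or failed reply does not silently produce a broken file downstream.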

## License

Copyright (c) 2023 aichat-developers.

aichat is made available under the terms of either the MIT License or the Apache License 2.0, at your option.

See the LICENSE-APACHE and LICENSE-MIT files for license details.