Ecosyste.ms: Awesome

An open API service indexing awesome lists of open source software.

https://github.com/mikeroyal/Photogrammetry-Guide

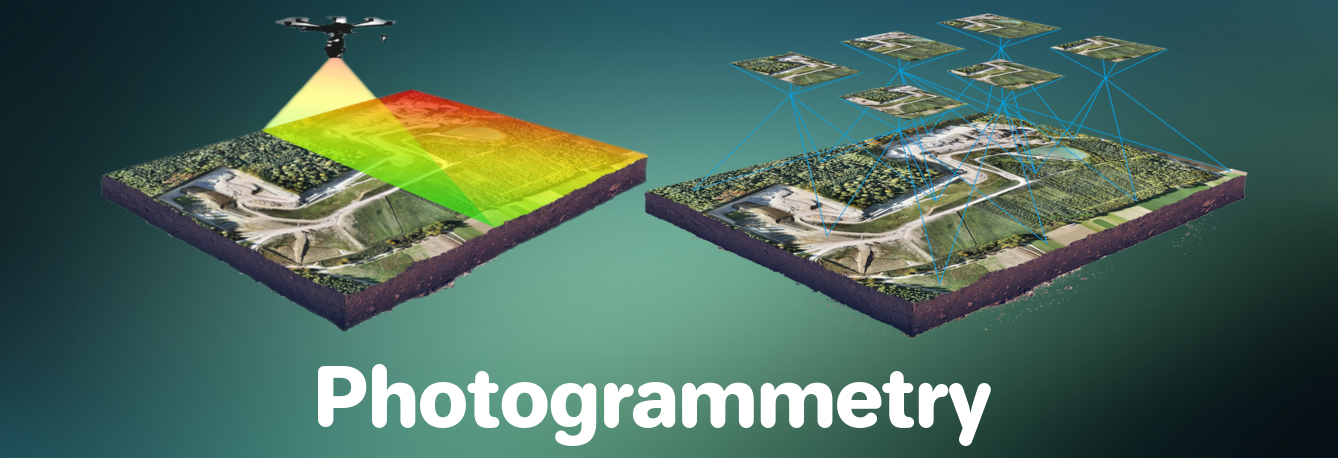

Photogrammetry Guide. Photogrammetry is widely used for Aerial surveying, Agriculture, Architecture, 3D Games, Robotics, Archaeology, Construction, Emergency management, and Medical.



Last synced: about 2 months ago



- Host: GitHub

- URL: https://github.com/mikeroyal/Photogrammetry-Guide

- Owner: mikeroyal

- Created: 2021-08-15T22:33:57.000Z (almost 3 years ago)

- Default Branch: main

- Last Pushed: 2024-01-04T22:53:39.000Z (6 months ago)

- Last Synced: 2024-02-03T07:38:31.908Z (5 months ago)

- Topics: 3d-reconstruction, camera, cesium, drones, geometry, geospatial, gis, image-processing, lidar, map, multiview-stereo, photogrammetry, point-cloud, pointcloud, rendering, sfm, slam, spatial-data, structure-from-motion, unreal-engine

- Language: Python

- Homepage:

- Size: 1.19 MB

- Stars: 950

- Watchers: 22

- Forks: 61

- Open Issues: 0


Metadata Files:

- Readme: README.md

- Contributing: CONTRIBUTING.md

Lists

- Awesome-Gamedev - link

- awesome-blender - Photogrammetry Guide

- awesome-stars - Photogrammetry-Guide

- awesome-photogrammetry - Photogrammetry-Guide (Related awesome lists)

- awesome-stars - mikeroyal/Photogrammetry-Guide - Photogrammetry Guide. Photogrammetry is widely used for Aerial surveying, Agriculture, Architecture, 3D Games, Robotics, Archaeology, Construction, Emergency management, and Medical. (Python)


README

Photogrammetry Guide

#### A guide covering Photogrammetry, including the applications, libraries, and tools that will make you a better and more efficient Photogrammetry developer.

**Note: You can easily convert this markdown file to a PDF in [VSCode](https://code.visualstudio.com/) using this handy extension [Markdown PDF](https://marketplace.visualstudio.com/items?itemName=yzane.markdown-pdf).**

# Table of Contents

1. [Getting Started with Photogrammetry](https://github.com/mikeroyal/Photogrammetry-Guide#getting-started-with-photogrammetry)

   - [Types of Photogrammetry](#types-of-photogrammetry)

   - [Photogrammetry Techniques](#photogrammetry-techniques)

   - [Cameras For Photogrammetry](#cameras)

   - [Types of Drones](#drones)

   - [Geographic Information System](#geographic-information-system)

   - [Remote Sensing](#remote-sensing)

   - [Point Cloud Processing](#point-cloud-processing)

   - [LiDAR](#lidar)

   - [Basic matching algorithms](#basic-matching-algorithms)

   - [Semantic segmentation](#semantic-segmentation)

   - [Ground segmentation](#ground-segmentation)

   - [Simultaneous localization and mapping (SLAM) and LiDAR-based odometry and/or mapping (LOAM)](#simultaneous-localization-and-mapping-slam-and-lidar-based-odometry-and-or-mapping-loam)

   - [Object detection and object tracking](#object-detection-and-object-tracking)

   - [Neural Radiance Field (NeRF)](#neural-radiance-field-nerf)

   - [Certifications & Courses](https://github.com/mikeroyal/Photogrammetry-Guide#Certifications--Courses)

   - [Books/eBooks](https://github.com/mikeroyal/Photogrammetry-Guide#BookseBooks)

   - [YouTube Tutorials](https://github.com/mikeroyal/Photogrammetry-Guide#YouTube-Tutorials)

2. [Photogrammetry Tools, Libraries, and Frameworks](https://github.com/mikeroyal/Photogrammetry-Guide#photogrammetry-tools-libraries-and-frameworks)

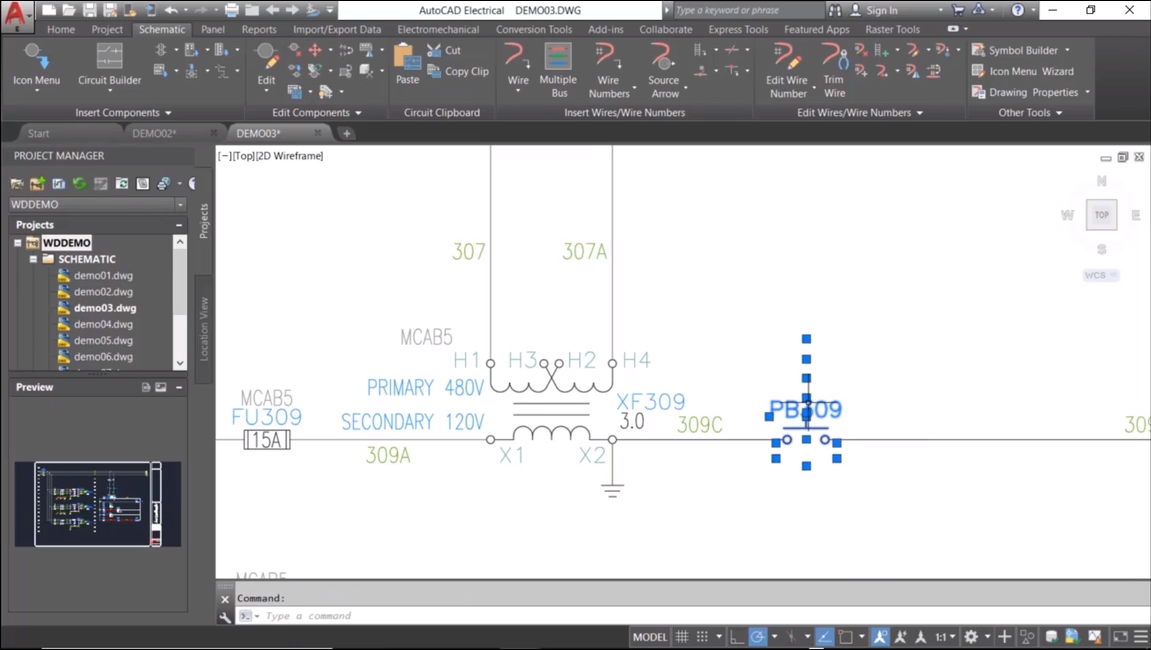

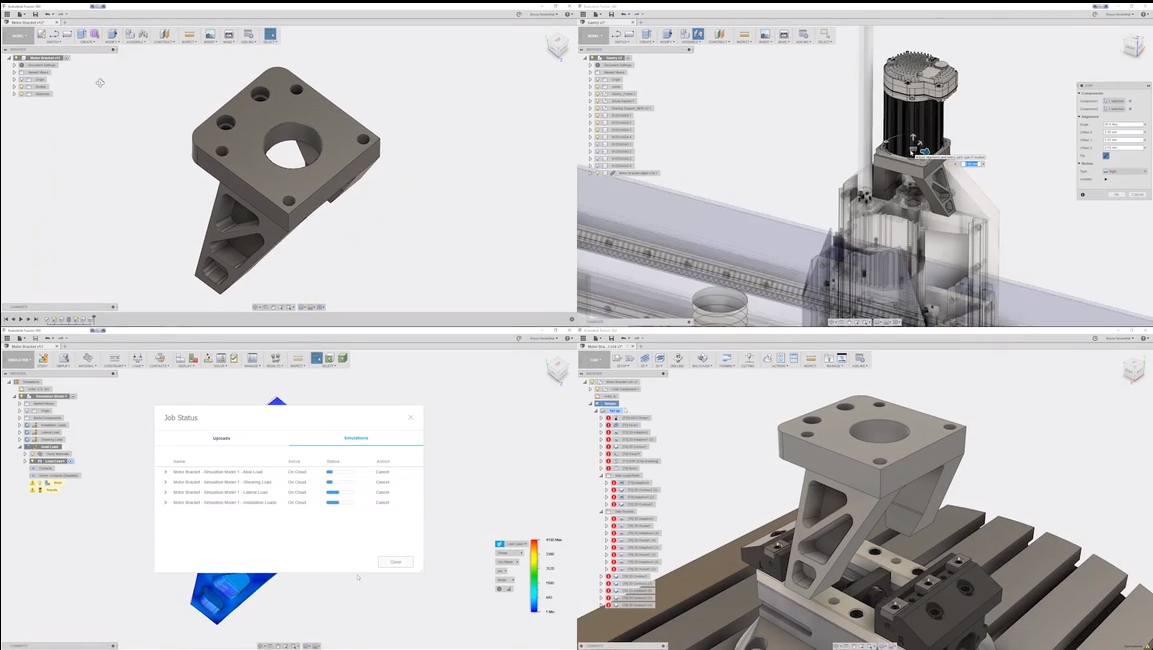

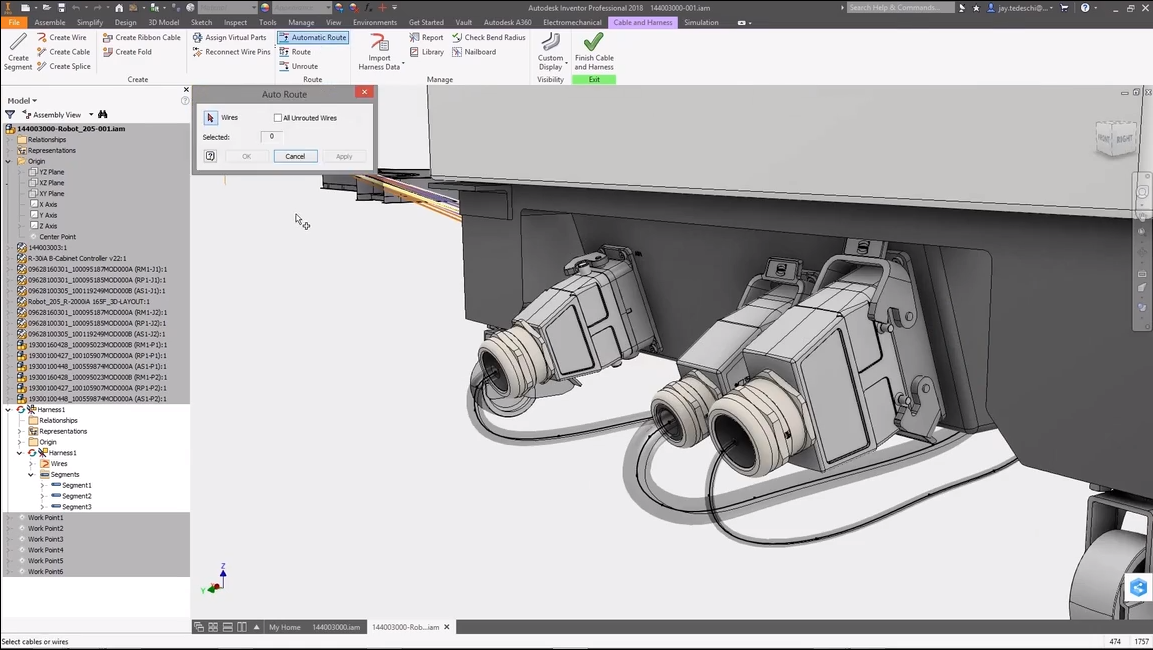

3. [Autodesk Development](https://github.com/mikeroyal/Photogrammetry-Guide#autodesk-development)

4. [LiDAR Development](https://github.com/mikeroyal/Photogrammetry-Guide#lidar-development)

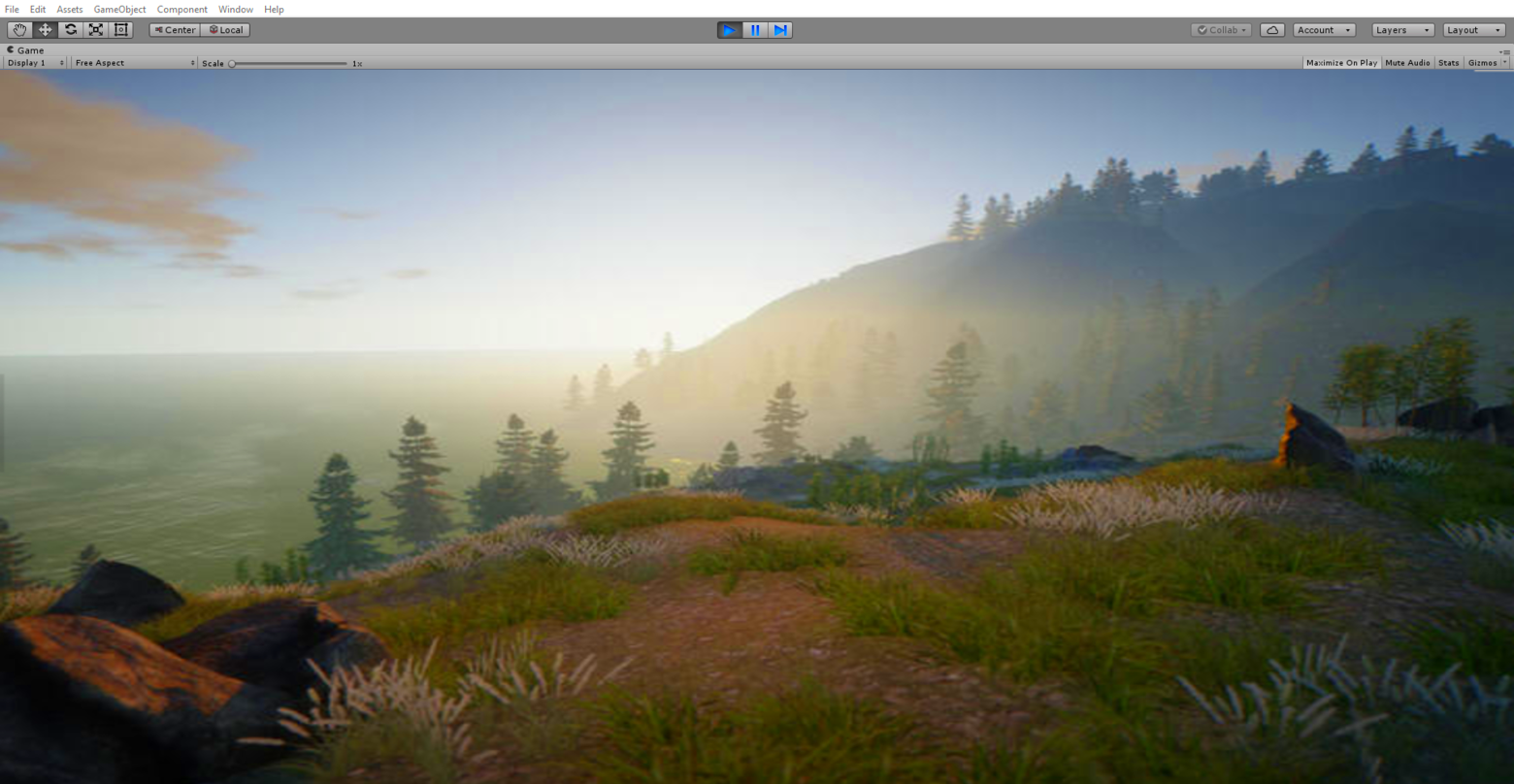

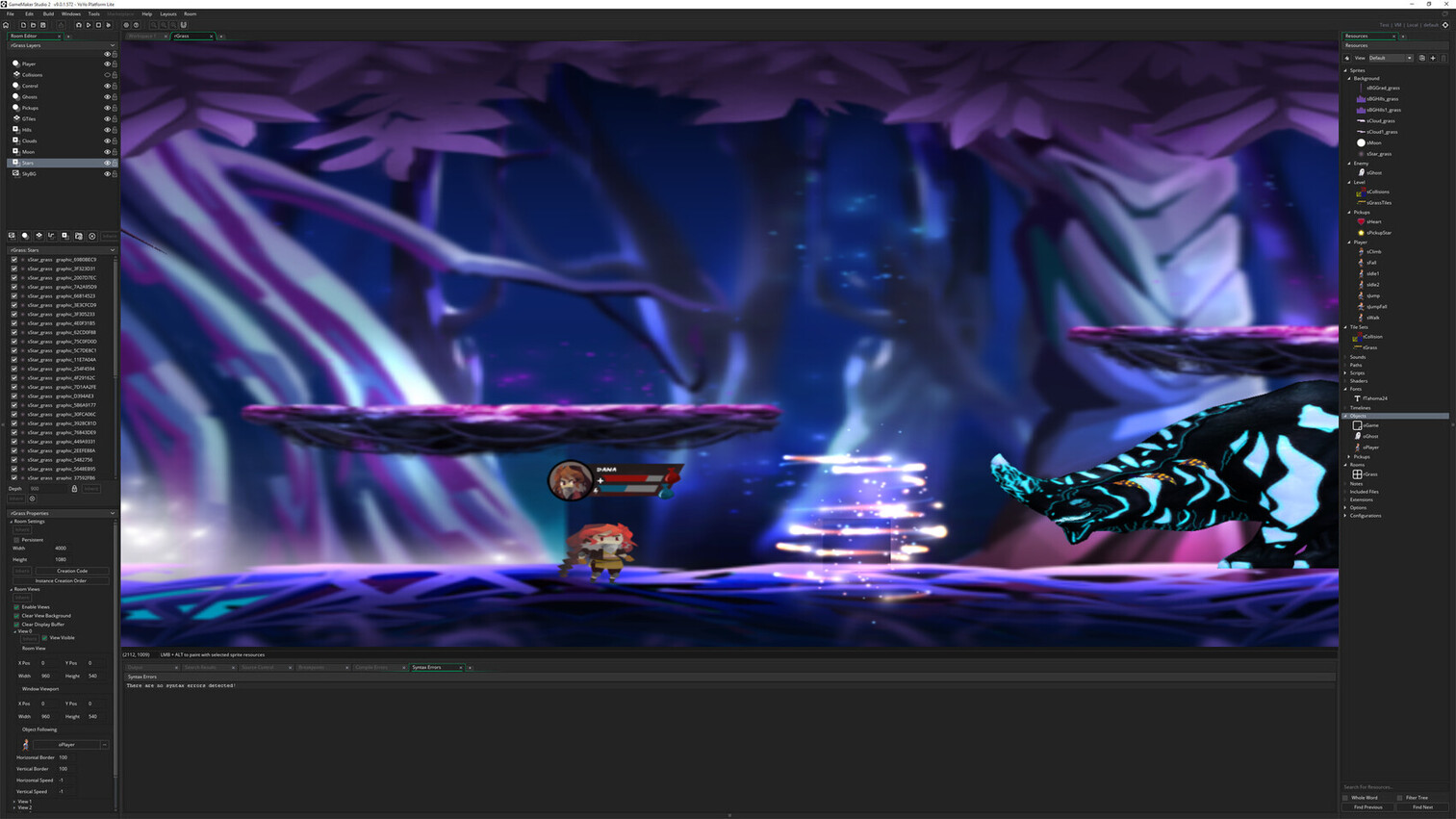

5. [Game Development](https://github.com/mikeroyal/Photogrammetry-Guide#game-development)

6. [Machine Learning](https://github.com/mikeroyal/Photogrammetry-Guide#machine-learning)

7. [Python Development](https://github.com/mikeroyal/Photogrammetry-Guide#python-development)

8. [R Development](https://github.com/mikeroyal/Photogrammetry-Guide#r-development)

# Getting Started with Photogrammetry

[Back to the Top](#table-of-contents)

[Photogrammetry](https://www.autodesk.com/solutions/photogrammetry-software) is the art and science of extracting 3D information from photographs. The process involves taking overlapping photographs of an object, structure, or space, and converting them into 2D or 3D digital models. Photogrammetry is often used by surveyors, architects, engineers, and contractors to create topographic maps, meshes, point clouds, or drawings based on the real world.

## Types of Photogrammetry

* [Aerial photogrammetry](https://www.autodesk.com/solutions/photogrammetry-software) is the process of using aircraft to capture aerial photographs that can be turned into a 3D model or mapped digitally. Today, the same work can also be done with a drone.

Image credit: [Forestrypedia](https://forestrypedia.com/photogrammetry-definitions/)

* [Terrestrial (Close-range) photogrammetry](https://www.autodesk.com/solutions/photogrammetry-software) is when images are captured using a handheld camera or a camera mounted on a tripod. The goal of this method is not to create topographic maps, but rather to make 3D models of smaller objects.

Image credit: [TLT Photography](https://www.tlt.photography/2018/06/trail-mapping-with-terrestrial-photogrammetry-a-funny-tale/)

* [Stereo Photogrammetry](https://pro.arcgis.com/en/pro-app/latest/help/analysis/image-analyst/introduction-to-stereo-mapping.htm) is the estimation of the 3D coordinates of points on an object from measurements made in two or more images taken from different positions. The sensor views the subject at horizontal or oblique angles to collect accurate, detailed information, primarily for engineering purposes such as mapping the infrastructure of bridges, buildings, and dams.

Image credit: Stereo Photogrammetry Capture. [HELImetrex](https://www.helimetrex.com.au/aerial-survey-mapping/stereo-photogrammetry-capture/)
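The core of stereo photogrammetry, recovering a 3D point from its positions in two images, can be sketched with linear (DLT) triangulation. This is a minimal NumPy sketch, not the method used by any particular tool: it assumes the 3x4 camera projection matrices are already known (in practice they come from calibration or structure from motion) and uses a hypothetical, noise-free camera setup. The helper names `project` and `triangulate_dlt` are illustrative. Real pipelines add lens-distortion correction, outlier rejection, and nonlinear refinement.

```python
import numpy as np


def project(P, X):
    """Project a 3D point X (shape (3,)) to pixel coordinates with a 3x4 camera matrix P."""
    x = P @ np.append(X, 1.0)  # homogeneous image point
    return x[:2] / x[2]


def triangulate_dlt(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one point observed in two images.

    Each observation (u, v) gives two linear equations in the homogeneous
    3D point X:  u * P[2] @ X - P[0] @ X = 0  and  v * P[2] @ X - P[1] @ X = 0.
    """
    A = np.vstack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # Least-squares solution of A @ X = 0 with ||X|| = 1: the right singular
    # vector belonging to the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]  # back from homogeneous to Euclidean coordinates


# Hypothetical calibrated setup: identical intrinsics K, no rotation,
# second camera center placed 1 m along the x axis (baseline B = 1 m).
K = np.array([[1000.0, 0.0, 640.0],
              [0.0, 1000.0, 360.0],
              [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])

X_true = np.array([0.5, -0.2, 10.0])
x1, x2 = project(P1, X_true), project(P2, X_true)
X_est = triangulate_dlt(P1, P2, x1, x2)
print(x1, x2, X_est)
```

With these numbers the point appears at (690, 340) in the first image and (590, 340) in the second. The 100-pixel disparity agrees with the classic rectified-stereo relation Z = f · B / d = 1000 · 1 / 100 = 10 m, and the DLT solution recovers `X_true` up to floating-point error.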

## Photogrammetry Techniques

[Back to the Top](#table-of-contents)

* [Structure from motion (SfM)](https://en.wikipedia.org/wiki/Structure_from_motion) is a photogrammetry range imaging technique for estimating 3D structures from 2D image sequences that may be coupled with local motion signals.

* [Scale-Invariant Feature Transform (SIFT)](https://en.wikipedia.org/wiki/Scale-invariant_feature_transform) is a computer vision algorithm to detect, describe, and match local features in images. Its applications include object recognition, robotic mapping and navigation, image stitching, and 3D modeling.

* [Digital Outcrop Model (DOM)](https://en.wikipedia.org/wiki/Digital_outcrop_model) is a digital 3D representation of an outcrop surface, mostly in the form of a textured polygon mesh. It allows interpretation and reproducible measurement of geological features, such as the orientation of geological surfaces and the width and thickness of layers.

* [DEM (Digital Elevation Models)](https://gisgeography.com/dem-dsm-dtm-differences/) is a bare-earth raster grid referenced to a vertical datum. When you filter out non-ground points such as bridges and roads, you get a smooth digital elevation model. Built features (power lines, buildings, and towers) and natural features (trees and other types of vegetation) aren’t included in a DEM.

* [DSM (Digital Surface Models)](https://gisgeography.com/dem-dsm-dtm-differences/) is an elevation model that captures both the natural and built/artificial features of the Earth’s surface.

* [DTM (Digital Terrain Models)](https://gisgeography.com/dem-dsm-dtm-differences/) has two definitions depending on where you live (see the [USGS LiDAR Base Specification](http://pubs.usgs.gov/tm/11b4/pdf/tm11-B4.pdf)):

- In some countries, a DTM is actually synonymous with a DEM. This means that a DTM is simply an elevation surface representing the bare earth referenced to a common vertical datum.

- In the United States and other countries, a DTM has a slightly different meaning. A DTM is a vector data set composed of regularly spaced points and natural features such as ridges and breaklines. A DTM augments a DEM by including linear features of the bare-earth terrain.

* [TIN (Triangular Irregular Networks)](https://desktop.arcgis.com/en/arcmap/latest/manage-data/tin/fundamentals-of-tin-surfaces.htm) is a representation of a continuous surface consisting entirely of triangular facets (a triangle mesh), used mainly as a discrete global grid in primary elevation modeling. TINs are a form of vector-based digital geographic data and are constructed by triangulating a set of vertices (points). The vertices are connected with a series of edges to form a network of triangles. There are different methods of interpolation to form these triangles, such as Delaunay triangulation or distance ordering.

* [Canopy Height Model (CHM)](https://geodetics.com/dem-dsm-dtm-digital-elevation-models/) is a separate model derived from elevation data in the point cloud. In forested areas, the difference between the DSM and the DEM can be viewed as the CHM, representing the height of trees above ground level. Software utilizing CHMs can also derive individual tree data, such as crown diameter, crown area, and tree boundaries.

* [Ground Control Points (GCPs)](https://www.usgs.gov/landsat-missions/ground-control-points) are points with known real-world coordinates that can be clearly identified in an image. GCPs are used in the orthorectification process to augment the geometric parameters embedded in the raw image and improve the accuracy of the resulting orthorectification.

* [Orthorectification](https://locationiq.com/glossary/orthorectification) is a process of removing distortion from an image caused by the curvature of the Earth and changes in terrain. It involves correcting the perspective of the image to align it with a map or a coordinate system, resulting in a more accurate representation of the Earth's surface.

* [Orthorectified imagery](https://www.esri.com/about/newsroom/insider/what-is-orthorectified-imagery/) is a type of imagery that has been processed to apply corrections for optical distortions of satellite/aerial imagery caused by topography and sensor geometry errors.

* [Real Time Kinematic (RTK)](https://en.wikipedia.org/wiki/Real-time_kinematic_positioning) is a satellite navigation technique used to enhance the precision of position data derived from satellite-based positioning systems (global navigation satellite systems, GNSS) such as GPS, GLONASS, Galileo, NavIC and BeiDou.

* [Post Processing Kinematic (PPK)](https://docs.emlid.com/reach/tutorials/basics/ppk-introduction/) is a satellite navigation technique used in surveying as an alternative to Real-Time Kinematic (RTK). It corrects the location data after it is collected and uploaded. This technique is commonly used in drone mapping and land surveying.

* [Gaussian Splatting](https://huggingface.co/blog/gaussian-splatting) is a rasterization technique, described in the paper “3D Gaussian Splatting for Real-Time Radiance Field Rendering”, that allows real-time rendering of photorealistic scenes learned from small samples of images. See also the [Polycam Gaussian splat viewer and creator](https://poly.cam/gaussian-splatting).

* [DynIBaR (Neural Dynamic Image-Based Rendering)](https://dynibar.github.io/) is a new method that generates photorealistic free-viewpoint renderings from a single video of a complex, dynamic scene. It can be used to generate a range of video effects, such as “bullet time” effects (where time is paused and the camera is moved at a normal speed around a scene), video stabilization, depth of field, and slow motion, from a single video taken with a phone’s camera. [DynIBaR: Space-time view synthesis from videos of dynamic scenes | Google Research](https://blog.research.google/2023/09/dynibar-space-time-view-synthesis-from.html)
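The DEM, DSM, and CHM definitions above combine into a single raster operation: CHM = DSM - DEM. The sketch below is a minimal NumPy illustration, assuming two co-registered grids (same extent, cell size, and vertical datum) already loaded as arrays. The function name `canopy_height_model` and the toy elevation values are hypothetical; real workflows would read the rasters with a GIS library and handle no-data cells.

```python
import numpy as np


def canopy_height_model(dsm, dem, min_height=0.0):
    """Return CHM = DSM - DEM for two co-registered elevation rasters.

    Both grids must share the same extent, cell size, and vertical datum.
    Small negative differences (noise where surface and ground coincide)
    are clipped to `min_height`.
    """
    dsm = np.asarray(dsm, dtype=float)
    dem = np.asarray(dem, dtype=float)
    if dsm.shape != dem.shape:
        raise ValueError("DSM and DEM must have the same shape")
    return np.maximum(dsm - dem, min_height)


# Toy 3x3 rasters in meters (hypothetical values).
dem = np.array([[100.0, 100.5, 101.0],
                [100.2, 100.7, 101.2],
                [100.4, 100.9, 101.4]])
dsm = dem + np.array([[0.0, 12.0, 15.5],
                      [0.0, 0.0, 18.0],
                      [-0.1, 3.2, 0.0]])
chm = canopy_height_model(dsm, dem)
print(chm.round(2))
```

Clipping negative values to zero is a design choice for this sketch: on open ground the DSM and DEM should coincide, so slightly negative heights are usually noise rather than real features.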

## Cameras

[Back to the Top](#table-of-contents)

DJI Zenmuse P1 Full-frame 45MP camera.

**Links to Helpful Resources:**

* A great resource for everything to do with cameras: https://www.dpreview.com/.

* [Automatic 360° HDRI camera for photogrammetry | Civetta - Weiss AG](https://weiss-ag.com/civetta360camera/)

* [The Best Photogrammetry Solutions in 2023 - XR Today](https://www.xrtoday.com/mixed-reality/the-best-photogrammetry-solutions-in-2023/)

* [Low-Cost Cameras for Photogrammetry and Measurement (Price range: $750 to $1200)](https://www.photomodeler.com/low-cost-cameras-for-photogrammetry/)

* [Which Camera to Use with Photogrammetry and PhotoModeler](https://www.photomodeler.com/products/about_cameras/)

* [How To Pick The Best Camera For Drone Photogrammetry](https://www.heliguy.com/blogs/posts/how-to-pick-the-best-camera-for-drone-photogrammetry)

* [Best Camera For Photogrammetry In 2023 (Top 10 Models) - GetPhotography](https://getphotokits.com/best-camera-for-photogrammetry/)

#### General camera-type recommendations

**Here are some categories in which you should start your research:**

Camera Photogrammetry Nikon D300/5mm precision GPS setup for VORTEX2. Image credit: NOAA

#### Fully automatic operation:

* **Default:** high-end phone.

* **Some more flexibility in a zoom range:** high-end compact camera (as in the Sony RX100 line).

* **More flexibility, including distant subjects:** high-end superzoom camera (as in the Sony RX10 and Panasonic FZ1000 lines).

* **Extremely distant subjects in daylight:** consumer superzoom camera (as in the Nikon P line, Panasonic FZ80, etc.).

#### Manual/creative control:

* **Default:** mirrorless camera.

* **Cheaper alternative (if no mirrorless camera that suits your needs is available within budget):** DSLR.

* **If you’re absolutely sure a single lens is all you’ll need:** fixed prime lens camera (as in the [Fujifilm X100](https://fujifilm-x.com/products/cameras/x100v/) and [Ricoh GR](https://us.ricoh-imaging.com/product/gr-iii/) lines), high-end compact camera, or high-end superzoom camera.

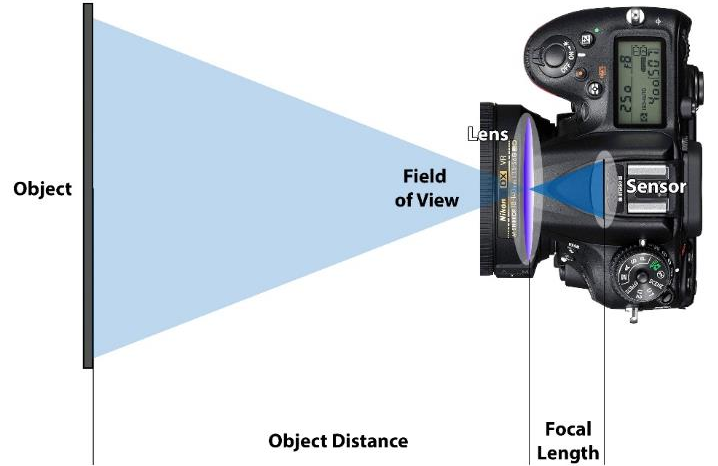

#### Key concepts and terminology

There are some concepts, terms and features of a camera that you’ll need to learn about to really understand camera reviews and see how one camera differs from another. The following is a list of such terms for you to look up if needed.

Camera Basics. Image credit: Geodetic Systems, Inc

* Exposure, noise, dynamic range (SDR and HDR).

* **Camera design:** interchangeable-lens cameras and fixed-lens cameras, mirrorless and DSLR.

* **Image sensor:** size and surface area, resolution.

* **Lens:** focal length and angle/field of view, maximum aperture, lens mount and format coverage.

* Autofocus.

* Continuous/burst shooting, buffer depth.

* Viewfinder and display.

* Image stabilization.

* Weather resistance.

Remember that a camera’s age is irrelevant. Cameras don’t age like smartphones or computers do, because they have no increasingly demanding software to keep up with. As long as a camera is in good working order, it should work as well as it did when it was brand new.

Make sure to shop at sellers that are well accepted and well established in the camera market, even at the high end. Try reputable used-gear outlets ([KEH](https://www.keh.com/) and [mpb](https://www.mpb.com/)), the used sections of big retailers ([B&H](https://www.bhphotovideo.com/) and [Adorama](https://www.adorama.com/) in the US), and local camera stores. You can also find refurbished cameras sold directly by the manufacturers’ distributors.

## Drones

[Back to the Top](#table-of-contents)

**Links for Drone photography:**

* [Drone photography: A beginner's guide - Adobe](https://www.adobe.com/creativecloud/photography/discover/drone-photography.html)

* [Drone Photography: Beginner's Guide to Getting Started - Droneblog](https://www.droneblog.com/drone-photography/)

* [The complete beginner's guide to drone photography - Canva](https://www.canva.com/learn/the-complete-beginners-guide-to-drone-photography/)

* [Drone Photography: The Definitive Guide (2023) | PhotoPills](https://www.photopills.com/articles/drone-photography-guide)

* [19 Drone Photography Tips to Improve Your Aerial Shots in 2023](https://shotkit.com/drone-photography-tips/)

* [Drone photography: Tips and tricks | Space](https://www.space.com/guide-to-drone-photography)

A drone is basically an Unmanned Aerial Vehicle (UAV). Before the rise in consumer interest in UAVs, the word “drone” was primarily used to refer to the UAVs used by the military.

Now, though, intelligent quadcopters that have UAV-like features are more popular among consumers than ever before. And while they technically aren’t as advanced as military drones, we refer to them as “drones” because they are similar in nature: both allow you to operate an aerial vehicle to perform a particular task, which, in the case of consumer drones, is typically shooting video or capturing still images.

There are different types of drones for all kinds of situations, so make sure to consider the main purpose for your drone purchase. Even though most drones fall under the categories of consumer or professional grade drones, there are now UAVs geared towards traveling and selfies.

* **Camera drones:** These drones typically range from $100 to $1000 and offer features, such as obstacle avoidance sensors, that have made them market leaders among professional drones.

* **Compact drones:** These drones are specially designed for panoramic images and landscape shots. Their best feature is the ability to switch the image format from JPEG to raw. Raw images retain more detail and can produce 4K-quality pictures and videos. For example, the [DJI Inspire 2](https://www.dji.com/inspire-2), which falls in the category of professional drones, has a top speed of 60+ miles per hour (97+ kilometers per hour) and dual batteries providing up to 30 minutes of flight time. Use these drones in places you cannot reach on foot, and frame your shots so they don’t need cropping, since cropping can reduce picture quality.

**The difference between RTF, BNF, and ARF:**

* **RTF stands for Ready-To-Fly** - Usually an RTF quadcopter doesn’t require any assembly or setup, but you may have to do some simple things like charge up the battery, install the propellers or bind the controller to the quadcopter (get them talking to each other).

* **BNF stands for Bind-And-Fly** - A BNF quadcopter usually comes completely assembled, but without a controller. With BNF models, you’ll have to use the controller that you already have (if it’s compatible) or find a controller sold separately. One thing you should know is that just because a transmitter and receiver are on the same frequency that doesn’t mean that they’ll work together.

* **ARF stands for Almost-Ready-to-Fly** - ARF drones are usually like quadcopter kits. They usually don’t come with a transmitter or receiver and might require partial assembly. An ARF drone kit might also leave out components like motors, ESCs, or even the flight controller and battery. The definition of an ARF drone kit is very broad, so whenever you see ARF in the title, you should read the description thoroughly.

### Taking care of legal considerations

Before you get started with your drone (I know you must be excited), make sure you are up to date on the rules and regulations covering what you can and cannot do with it, restricted areas, and permission to fly near private property.

* Each country has its own rules and regulations for flying drones. For example, in the UK the Civil Aviation Authority (CAA) prohibits flying drones near airports or aircraft and requires you to keep the drone below 400 feet. For details, see the [UK Drone Code](http://dronesafe.uk/drone-code/).

* In the US, the Federal Aviation Administration (FAA) rules include not flying over groups of people and never flying under the influence of drugs or alcohol. See the [FAA guidance for recreational flyers](https://www.faa.gov/uas/getting_started/fly_for_fun/).

* Other countries have similarly strict rules, so check them before deciding to buy a drone. That way you won’t be disappointed to find out that you can’t use the drone for the purpose you bought it for.

### Improve your drone flying skills

A lot of people think that drones are hard to fly, but the truth is, they’re really not. Anyone capable of using an [iPhone](https://apps.apple.com/us/app/dronedeploy-flight-app/id971358101) or [Android](https://play.google.com/store/apps/details?id=com.dronedeploy.beta) device is more than capable of flying a drone. However, this does not mean that drones are foolproof. Even the most advanced drones require some general knowledge if you want to avoid crashing or, worse, losing your drone forever, so it pays to practice and improve your flying skills.

### Where To Buy A Drone?

If you don’t know where to buy a drone, don’t worry. There are tons of online stores for drones that will ship to just about any major country. If you’re buying a toy drone, the best place to go is Amazon or one of the other stores below.

The main websites for buying drones:

* **[dji.com](https://www.dji.com/)**: The #1 drone brand in popularity and name recognition.

* **[Amazon.com](https://www.amazon.com/drones/s?k=drones)**: A little bit of everything.

* **[horizonhobby.com](https://www.horizonhobby.com/helicopters/drones/)**: The leader in radio control airplanes, cars, quads, radios and more.

* **[amainhobbies.com](https://www.amainhobbies.com/drones/c3347)**: A great selection of RC hobby products.

## Geographic Information System

[Back to the Top](#table-of-contents)

[Geographic Information System (GIS)](https://www.usgs.gov/faqs/what-geographic-information-system-gis) is an information system able to encode, store, transform, analyze and display geospatial information.

Image credit: [geo.university](https://www.geo.university/courses/environmental-modelling-and-analysis-in-gis)

### Geographic Information System Software

- [ArcGIS Desktop](https://www.esri.com/en-us/arcgis/products/arcgis-desktop/overview) - Extendable desktop suite to manage, visualize, and analyze GIS data in 2D and 3D, including image processing. Includes ArcGIS Pro, ArcMap, ArcCatalog, and ArcGIS Online.

- [DIVA-GIS](https://www.diva-gis.org/) - DIVA-GIS is a free geographic information system software program used for the analysis of geographic data, in particular point data on biodiversity.

- [GeoDa](http://geodacenter.github.io/) - A free and open source software tool that serves as an introduction to spatial data analysis.

- [GISInternals](http://www.gisinternals.com/) - Provides daily build packages and software development kits for GDAL and MapServer.

- [Global Mapper](http://www.bluemarblegeo.com/products/global-mapper.php) - An easy-to-use, robust, and genuinely affordable GIS application that combines a wide array of spatial data processing tools with access to an unparalleled variety of data formats.

- [GRASS GIS](https://grass.osgeo.org/) - A free and open source GIS software suite used for geospatial data management and analysis, image processing, graphics and maps production, spatial modeling, and visualization.

- [gvSIG](http://www.gvsig.com/en) - A powerful, user-friendly, interoperable GIS.

- [JUMP GIS](http://jump-pilot.sourceforge.net/) - An open source GIS written in Java.

- [MapInfo Pro](https://www.pitneybowes.com/us/location-intelligence/geographic-information-systems/mapinfo-pro.html) - A full-featured desktop solution to prepare data for web mapping applications and create presentation quality maps that combines data analysis, visual insights, and map publishing.

- [Marble](https://marble.kde.org/) - A virtual globe and world atlas.

- [OpenOrienteering Mapper](https://github.com/openorienteering/mapper) - A software for creating maps for the orienteering sport.

- [QGIS](http://qgis.org/en/site/) - A free and open source GIS.

- [SAGA](http://www.saga-gis.org/en/index.html) - Open source system for automated geoscientific analyses.

- [SharpMap](https://github.com/SharpMap/SharpMap) - An easy-to-use mapping library for use in web and desktop applications.

- [TileMill](https://tilemill-project.github.io/tilemill/) - An open source map design studio, developed by a community of volunteer open source contributors.

- [Whitebox GAT](http://www.uoguelph.ca/~hydrogeo/Whitebox/) - An open source desktop GIS and remote sensing software package for general applications of geospatial analysis and data visualization.


- [Abc-Map](https://abc-map.fr/) - A lightweight and user-friendly Web GIS. Create, import data from various sources, export maps or share them online freely and easily.

## Remote Sensing

[Back to the Top](#table-of-contents)

[Remote Sensing](https://www.earthdata.nasa.gov/learn/backgrounders/remote-sensing) is a set of techniques used to gather and process information about an object without direct physical contact.

* **Active remote sensing** instruments operate with their own source of emission or light, while passive ones rely on reflected natural energy. Radiation also differs by wavelength, falling into short (visible, NIR, MIR) and long (microwave) ranges. Active remote sensing techniques differ by what they transmit (light or waves) and what they determine (distance, height, atmospheric conditions, etc.).

* **Passive remote sensing** instruments depend on natural energy (sunlight) reflected by the target. For this reason, they can be used only with adequate sunlight; otherwise there is nothing to reflect. They employ multispectral or hyperspectral sensors that measure the acquired quantity in multiple band combinations. These combinations differ by the number of channels (two wavelengths or more) and by the range of bands covered (visible, IR, NIR, TIR, microwave).


Image credit: [mdpi](https://www.mdpi.com/2072-4292/12/18/3053)
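Band combinations from passive multispectral sensors are often turned into indices. One widely used example is the Normalized Difference Vegetation Index, NDVI = (NIR - Red) / (NIR + Red), which contrasts the near-infrared and red bands: healthy vegetation reflects strongly in NIR and absorbs red light. The NumPy sketch below assumes the two bands are already co-registered reflectance arrays; the function name `ndvi` and the sample values are hypothetical.

```python
import numpy as np


def ndvi(nir, red, eps=1e-12):
    """Normalized Difference Vegetation Index, (NIR - Red) / (NIR + Red).

    Inputs are reflectance bands of the same shape. Pixels where NIR + Red
    is (near) zero are returned as NaN instead of dividing by zero.
    """
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    denom = nir + red
    out = np.full(denom.shape, np.nan)
    valid = np.abs(denom) > eps
    out[valid] = (nir[valid] - red[valid]) / denom[valid]
    return out


# Hypothetical reflectances: dense vegetation, bare soil, water, no-data.
nir = np.array([0.50, 0.30, 0.02, 0.0])
red = np.array([0.08, 0.25, 0.05, 0.0])
print(ndvi(nir, red))
```

Values near +1 indicate dense vegetation, values near 0 bare soil, and negative values typically water; the same function works unchanged on full 2D band rasters.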

### Remote Sensing Software

- [eCognition](http://www.ecognition.com/suite/ecognition-developer) - A powerful development environment for object-based image analysis.

- [ENVI](https://www.harris.com/solution/envi) :star2: - A geospatial imagery analysis and processing software.

- [ERDAS IMAGINE](https://www.hexagongeospatial.com/products/power-portfolio/erdas-imagine) :star2: - A geospatial imagery analysis and processing software.

- [Google Earth](https://www.google.com/earth/) - A computer program that renders a 3D representation of Earth based on satellite imagery.

- [Google Earth Studio](https://www.google.com/earth/studio/) - An animation tool for Google Earth’s satellite and 3D imagery.

- [GRASS GIS](https://grass.osgeo.org/) - A free and open source GIS software suite used for geospatial data management and analysis, image processing, graphics and maps production, spatial modeling, and visualization.

- [Opticks](https://opticks.org/) - An expandable remote sensing and imagery analysis software platform that is free and open source.

- [Orfeo toolbox](https://www.orfeo-toolbox.org/) - An open-source project for state-of-the-art remote sensing, including a fast image viewer, apps callable from Bash, Python or QGIS, and a powerful C++ API.

- [PANOPLY](https://www.giss.nasa.gov/tools/panoply/) - Panoply plots geo-referenced and other arrays from netCDF, HDF, GRIB, and other datasets.

- [PCI Geomatica](http://www.pcigeomatics.com/software/geomatica/professional) - A remote sensing desktop software package for processing earth observation data.

- [SNAP](http://step.esa.int/main/toolboxes/snap/) - A common architecture for all Sentinel Toolboxes.

## Point Cloud Processing

[Back to the Top](#table-of-contents)

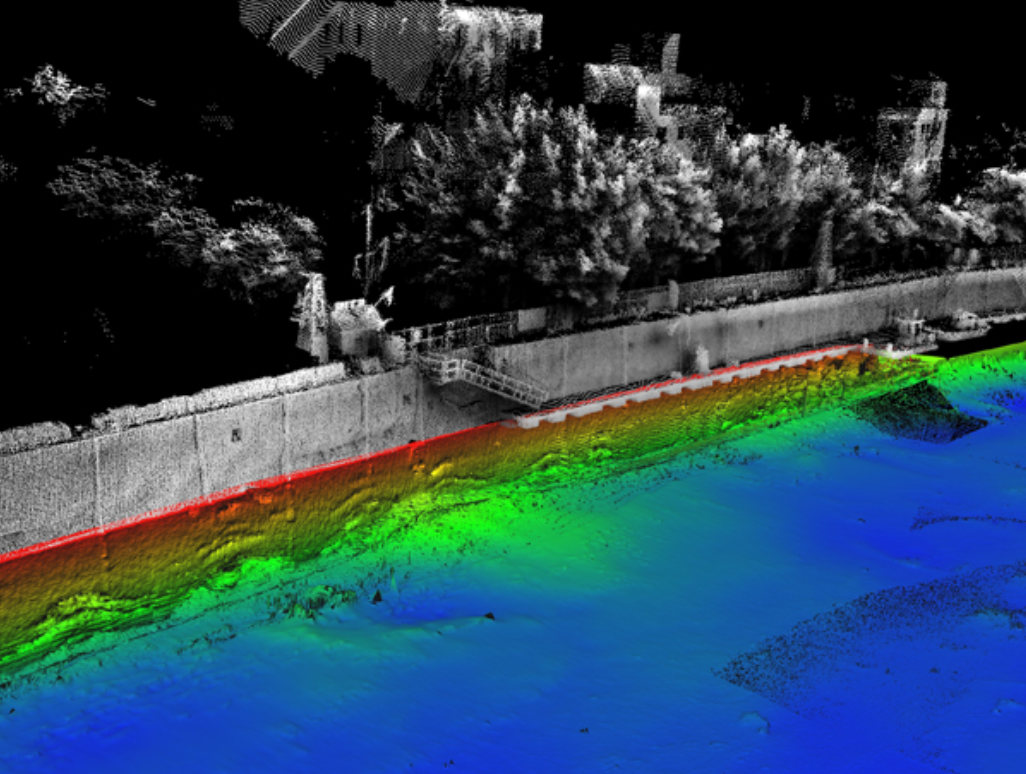

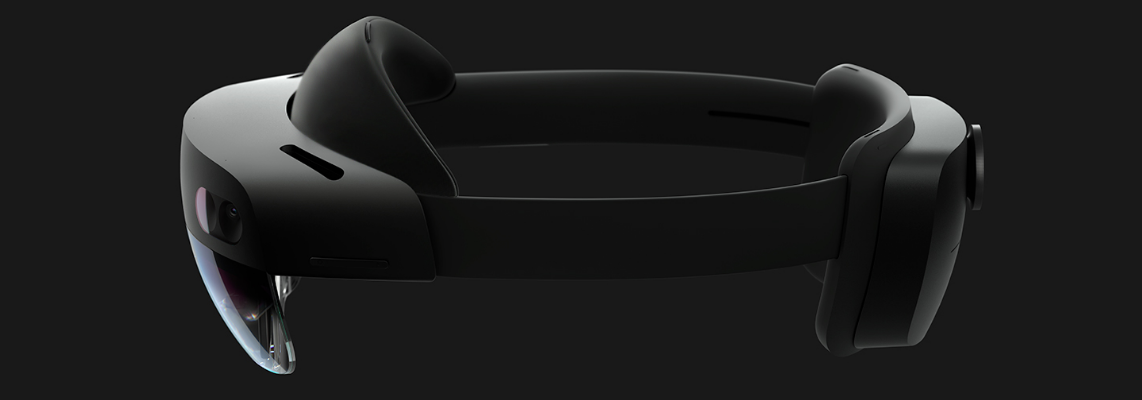

A point cloud is a huge number of tiny data points that exist in three dimensions (3D), and [Point Cloud Processing](https://www.mathworks.com/discovery/point-cloud.html) is the set of techniques for working with them. If you could see the points produced by a scanner floating in space, they would appear as a cloud. Point clouds can be used for mapping, perception, and navigation in robotics and autonomous systems, as well as in augmented reality (AR) and virtual reality (VR) applications.

**Functions of point cloud processing software include:**

- Manual editing of the point cloud.

- Automatic cleanup and filtering.

- Offloading tasks to the cloud.

- Rendering.

- Point distance calculation.

- Registration.

- Conversion to mesh.

- Conversion to [NURBS](https://en.wikipedia.org/wiki/Non-uniform_rational_B-spline).

Point Cloud Processing & Data Management. Image credit: [H2H Associates](http://h2hassociates.com/services/point-cloud-processing-data-management/)
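Two of the functions listed above, automatic cleanup/filtering and registration, can be illustrated with NumPy alone. This is a minimal sketch under stated assumptions rather than how any of the tools below implement them: downsampling uses a simple voxel-grid centroid filter, and registration uses the Kabsch (SVD) method, which assumes point correspondences are already known. The function names `voxel_downsample` and `kabsch` and the synthetic cloud are hypothetical.

```python
import numpy as np


def voxel_downsample(points, voxel_size):
    """Replace all points that fall in the same cubic voxel by their centroid."""
    points = np.asarray(points, dtype=float)
    # Integer voxel index of every point along x, y and z.
    keys = np.floor(points / voxel_size).astype(np.int64)
    # `inverse` maps each point to the row of its (unique) voxel.
    _, inverse = np.unique(keys, axis=0, return_inverse=True)
    inverse = inverse.ravel()  # keep it 1-D across NumPy versions
    n_voxels = inverse.max() + 1
    sums = np.zeros((n_voxels, 3))
    np.add.at(sums, inverse, points)  # accumulate coordinates per voxel
    counts = np.bincount(inverse, minlength=n_voxels)
    return sums / counts[:, None]


def kabsch(source, target):
    """Rigid transform (R, t) minimizing sum ||R @ source_i + t - target_i||^2.

    Assumes correspondences are known: row i of `source` matches row i of `target`.
    """
    src = np.asarray(source, dtype=float)
    tgt = np.asarray(target, dtype=float)
    src_c, tgt_c = src.mean(axis=0), tgt.mean(axis=0)
    H = (src - src_c).T @ (tgt - tgt_c)  # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    # Correct for a possible reflection so that det(R) = +1.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_c - R @ src_c
    return R, t


rng = np.random.default_rng(0)
cloud = rng.uniform(0.0, 1.0, size=(1000, 3))

# Cleanup/filtering: voxels of size 0.25 over the unit cube give at most 4**3 = 64 points.
down = voxel_downsample(cloud, voxel_size=0.25)
print(down.shape)

# Registration: recover a known rotation (30 degrees about z) and translation.
angle = np.deg2rad(30.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle), np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([2.0, -1.0, 0.5])
moved = cloud @ R_true.T + t_true
R_est, t_est = kabsch(cloud, moved)
print(np.round(R_est, 3), np.round(t_est, 3))
```

Iterative Closest Point (ICP), the registration method offered by many point cloud libraries, repeats a Kabsch-style step while re-estimating correspondences from nearest neighbors; the fixed correspondences here keep the example short.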

### Point Cloud Processing Software

- [**CloudCompare**](https://www.cloudcompare.org/) is a 3D point cloud processing software. It can also handle triangular meshes and calibrated images.

- [**PCL - Point Cloud Library**](https://pointclouds.org/) is a standalone, large scale, open project for 2D/3D image and point cloud processing.

- [**Leica Cyclone**](https://leica-geosystems.com/products/laser-scanners/software/leica-cyclone?redir=w226) is the market-leading point cloud processing software. It is a family of software modules that provides the widest set of work process options for 3D laser scanning projects in engineering, surveying, construction and related applications.

- [**FARO SCENE**](https://www.faro.com/en/Products/Software/SCENE-Software) is software that allows you to capture, process, and register real-world objects and environments as 3D point clouds. You can create stunning visualizations, share and collaborate, and access virtual reality (VR) views of your scans.

- [**Autodesk ReCap**](https://www.autodesk.com/support/technical/product/recap) is software that lets you create accurate 3D models with reality capture using laser scanning. This software, which lets you convert reality into a 3D model or 2D drawing, comes in two flavors: ReCap and ReCap Pro.

- [**Trimble RealWorks**](https://geospatial.trimble.com/en/products/software/trimble-realworks) is a powerful office software suite to integrate 3D point cloud and survey data. It effectively manages large scan datasets to register, analyze, model, collaborate and produce compelling deliverables.

- [**PDAL - Point Data Abstraction Library**](http://www.pdal.io/) is a C++/Python BSD library for translating and manipulating point cloud data.

- [**libLAS**](http://liblas.org/) is a C/C++ library for reading and writing the very common LAS LiDAR format (Legacy. Replaced by PDAL).

- [**entwine**](https://github.com/connormanning/entwine/) is a data organization library for massive point clouds, designed to conquer datasets of hundreds of billions of points as well as desktop-scale point clouds.

- [**PotreeConverter**](https://github.com/potree/PotreeConverter) is another data organisation library, generating data for use in the Potree web viewer.

- [**lidR**](https://github.com/Jean-Romain/lidR) is an R package for airborne LiDAR data manipulation and visualization for forestry applications.

- [**pypcd**](https://github.com/dimatura/pypcd) is a Python module to read and write point clouds stored in the PCD file format, used by the Point Cloud Library.

- [**Open3D**](https://github.com/intel-isl/Open3D) is an open-source library that supports rapid development of software that deals with 3D data. It has Python and C++ frontends.

- [**cilantro**](https://github.com/kzampog/cilantro) is a lean and efficient C++ library for point cloud data processing.

- [**PyVista**](https://github.com/pyvista/pyvista/) provides 3D plotting and mesh analysis through a streamlined interface for the Visualization Toolkit (VTK).

- [**pyntcloud**](https://github.com/daavoo/pyntcloud) is a Python library for working with 3D point clouds.

- [**pylas**](https://github.com/tmontaigu/pylas) is a Python library for reading LAS (LiDAR) files.

- [**PyTorch Geometric**](https://github.com/rusty1s/pytorch_geometric) (PyG) is a geometric deep learning extension library for PyTorch.

- [**ParaView**](http://www.paraview.org/) is an open-source, multi-platform data analysis and visualization application.

- [**MeshLab**](http://meshlab.sourceforge.net/) is an open source, portable, and extensible system for processing and editing unstructured 3D triangular meshes.

- [**OpenFlipper**](http://www.openflipper.org/) is an open source geometry processing and rendering framework.

- [**PotreeDesktop**](https://github.com/potree/PotreeDesktop) is a desktop/portable version of the web-based point cloud viewer [**Potree**](https://github.com/potree/potree)

- [**3d-annotation-tool**](https://github.com/StrayRobots/3d-annotation-tool) is a lightweight desktop application to annotate pointclouds for machine learning.
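The PCD format mentioned in the pypcd entry stores a short plain-text header (fields, types, point count) followed by the point data. As a minimal sketch, assuming an uncompressed `DATA ascii` file with one value per field (every `COUNT` equal to 1), it can be parsed with the standard library alone. `read_ascii_pcd` is a hypothetical helper, not pypcd's API; binary or compressed PCD files need a real library such as pypcd or Open3D.

```python
from typing import Dict, List


def read_ascii_pcd(text: str) -> Dict[str, object]:
    """Parse an ASCII PCD file into {'fields': [...], 'points': [dict, ...]}.

    Sketch only: assumes DATA ascii and COUNT 1 for every field.
    """
    header: Dict[str, List[str]] = {}
    lines = text.splitlines()
    data_start = None
    for i, raw in enumerate(lines):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and the optional comment line
        key, *values = line.split()
        header[key.upper()] = values
        if key.upper() == "DATA":
            data_start = i + 1  # point rows start right after the DATA line
            break
    if data_start is None or header["DATA"][0].lower() != "ascii":
        raise ValueError("only 'DATA ascii' PCD files are supported by this sketch")
    if any(count != "1" for count in header.get("COUNT", [])):
        raise ValueError("fields with COUNT > 1 are not supported by this sketch")

    fields = header["FIELDS"]
    types = header["TYPE"]  # F = float, I = signed int, U = unsigned int
    points = []
    for raw in lines[data_start:]:
        if not raw.strip():
            continue
        point = {}
        for name, kind, token in zip(fields, types, raw.split()):
            point[name] = float(token) if kind == "F" else int(token)
        points.append(point)

    expected = int(header["POINTS"][0])
    if len(points) != expected:
        raise ValueError(f"header says {expected} points, found {len(points)}")
    return {"fields": fields, "points": points}


sample = """# .PCD v0.7 - Point Cloud Data file format
VERSION 0.7
FIELDS x y z
SIZE 4 4 4
TYPE F F F
COUNT 1 1 1
WIDTH 3
HEIGHT 1
VIEWPOINT 0 0 0 1 0 0 0
POINTS 3
DATA ascii
0.0 0.0 0.0
1.0 0.0 0.0
0.0 1.0 0.5
"""
cloud = read_ascii_pcd(sample)
print(cloud["fields"], len(cloud["points"]))
```

Checking the `POINTS` count against the rows actually read is a cheap guard against truncated files.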

## LiDAR

[Back to the Top](#table-of-contents)

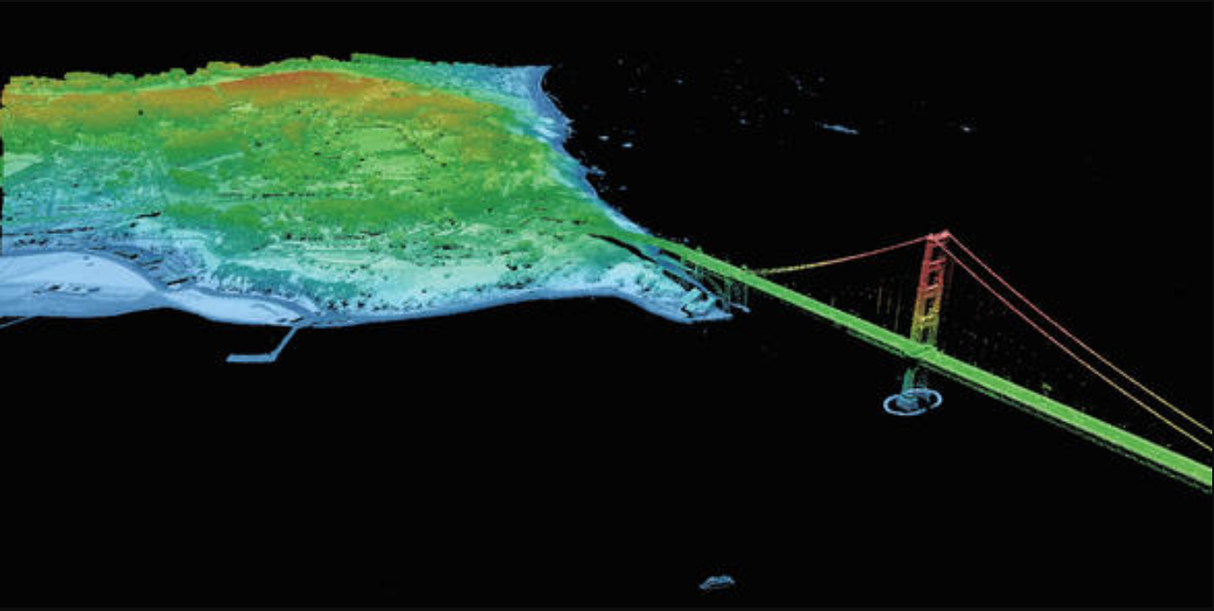

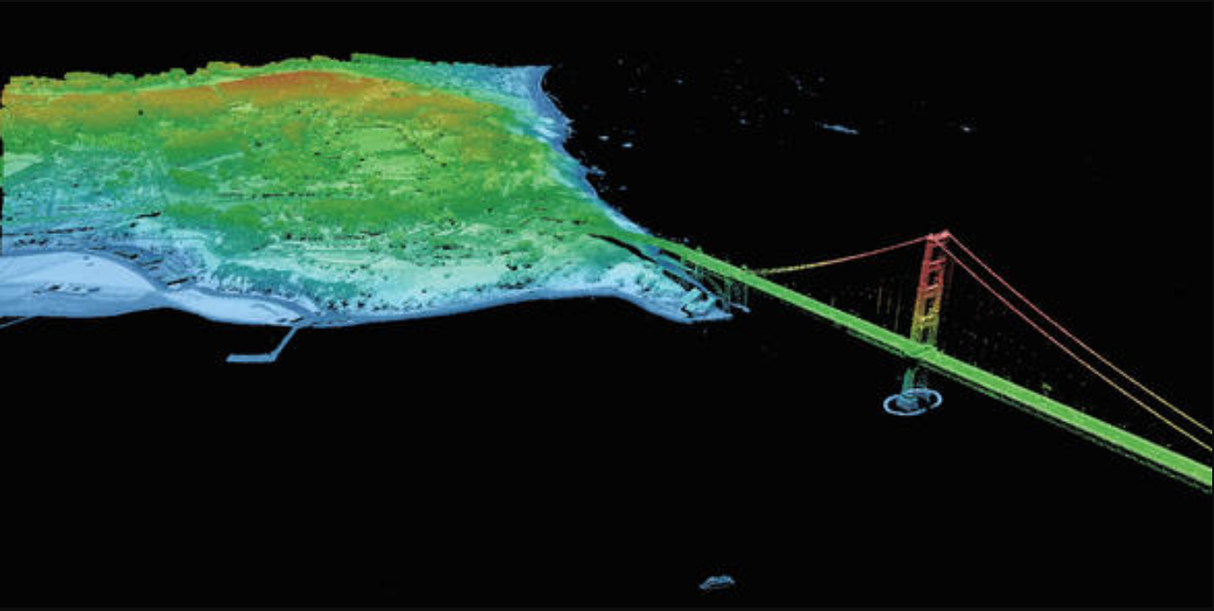

[Light Detection and Ranging (LiDAR)](https://www.usgs.gov/news/earthword-lidar) is a technology used to create high-resolution models of ground elevation with a vertical accuracy of 10 centimeters (4 inches). Lidar equipment, which includes a laser scanner, a Global Positioning System (GPS), and an Inertial Navigation System (INS), is typically mounted on a small aircraft. The laser scanner transmits brief pulses of light to the ground surface. Those pulses are reflected or scattered back and their travel time is used to calculate the distance between the laser scanner and the ground. Lidar data is initially collected as a “point cloud” of individual points reflected from everything on the surface, including structures and vegetation. To produce a “bare earth” Digital Elevation Model (DEM), structures and vegetation are stripped away.
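The range measurement described above follows from the round-trip travel time: the pulse covers the scanner-to-ground distance twice, so range = c·t/2. The sketch below shows that calculation plus a deliberately crude "bare earth" proxy that keeps the lowest return in each grid cell. Real DEM production, including USGS workflows, uses far more robust ground classification, so treat `lowest_return_grid` and its `cell_size` parameter as hypothetical illustrations only.

```python
import math
from typing import Dict, Iterable, Tuple

SPEED_OF_LIGHT = 299_792_458.0  # meters per second (exact, by definition)


def range_from_round_trip(seconds: float) -> float:
    """Distance to the target from a pulse's round-trip travel time."""
    return SPEED_OF_LIGHT * seconds / 2.0


def lowest_return_grid(
    points: Iterable[Tuple[float, float, float]], cell_size: float
) -> Dict[Tuple[int, int], float]:
    """Very rough bare-earth proxy: minimum elevation per (x, y) grid cell.

    Roofs and canopy usually sit above the lowest return in a cell, so taking
    the minimum strips many of them, but cells covered entirely by a building
    or dense vegetation will still be wrong.
    """
    grid: Dict[Tuple[int, int], float] = {}
    for x, y, z in points:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        if cell not in grid or z < grid[cell]:
            grid[cell] = z
    return grid


# A return arriving 2 microseconds after emission came from roughly 300 m away.
print(range_from_round_trip(2e-6))

# Two returns in the same 1 m cell: a tree top (12 m) and the ground (3 m).
print(lowest_return_grid([(0.2, 0.4, 12.0), (0.7, 0.1, 3.0)], cell_size=1.0))
```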

**3D Data Visualization of Golden Gate Bridge. Source: [USGS](https://www.usgs.gov/core-science-systems/ngp/tnm-delivery)**

[MOLA](https://docs.mola-slam.org/latest/) is a Modular Optimization framework for Localization and mApping.

**3D LiDAR SLAM from KITTI dataset. Source: [MOLA](https://docs.mola-slam.org/latest/demo-kitti-lidar-slam.html)**

### Basic matching algorithms

- [Iterative closest point (ICP)](https://www.youtube.com/watch?v=uzOCS_gdZuM) - The must-have algorithm for aligning (registering) point clouds.

- [GitHub repository](https://github.com/pglira/simpleICP) - simpleICP, implemented in C++, Julia, Matlab, Octave, and Python.

- [GitHub repository](https://github.com/ethz-asl/libpointmatcher) - libpointmatcher, a modular library implementing the ICP algorithm.

- [Normal distributions transform](https://www.youtube.com/watch?v=0YV4a2asb8Y) - A more recent, massively parallel approach to scan registration (NDT).

- [KISS-ICP](https://www.youtube.com/watch?v=kMMH8rA1ggI) - In Defense of Point-to-Point ICP – Simple, Accurate, and Robust Registration If Done the Right Way.

- [GitHub repository](https://github.com/PRBonn/kiss-icp)
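Point-to-point ICP, the variant KISS-ICP argues for, alternates two steps: match every source point to its nearest target point, then solve in closed form for the rigid motion that best aligns those pairs, and repeat until the error stops changing. The minimal 2D sketch below assumes a small initial misalignment and uses brute-force nearest-neighbor search; the libraries above add k-d trees, outlier rejection, 3D solvers and other refinements. All function names are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def apply_rigid(theta: float, tx: float, ty: float, pts: List[Point]) -> List[Point]:
    """Rotate points by theta (radians) about the origin, then translate."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in pts]


def best_rigid_2d(src: List[Point], dst: List[Point]) -> Tuple[float, float, float]:
    """Closed-form least-squares rotation + translation taking src[i] onto dst[i]."""
    n = len(src)
    scx, scy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dcx, dcy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    cross = dot = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay, bx, by = px - scx, py - scy, qx - dcx, qy - dcy
        cross += ax * by - ay * bx
        dot += ax * bx + ay * by
    theta = math.atan2(cross, dot)  # maximizes the sum of b . R(theta) a
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the target centroid.
    return theta, dcx - (c * scx - s * scy), dcy - (s * scx + c * scy)


def icp_2d(source: List[Point], target: List[Point], max_iter: int = 50, tol: float = 1e-12):
    """Point-to-point ICP; returns (theta, tx, ty, mean squared error)."""
    theta, tx, ty = 0.0, 0.0, 0.0
    current = list(source)
    prev_err = err = float("inf")
    for _ in range(max_iter):
        # 1) Correspondences: brute-force nearest neighbor in the target.
        matches = [min(target, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
                   for p in current]
        # 2) Best incremental motion for these pairs, applied to the source.
        d_theta, d_tx, d_ty = best_rigid_2d(current, matches)
        current = apply_rigid(d_theta, d_tx, d_ty, current)
        # 3) Compose with the running estimate: T_total <- T_delta * T_total.
        c, s = math.cos(d_theta), math.sin(d_theta)
        tx, ty = c * tx - s * ty + d_tx, s * tx + c * ty + d_ty
        theta += d_theta
        err = sum((p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2
                  for p, q in zip(current, matches)) / len(current)
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return theta, tx, ty, err


grid = [(float(x), float(y)) for x in range(5) for y in range(3)]
moved = apply_rigid(0.05, 0.1, -0.05, grid)
print(icp_2d(grid, moved))  # recovers roughly (0.05, 0.1, -0.05, ~0)
```

Because the correspondences are re-estimated on every pass, ICP only converges to the right answer when the initial misalignment is small relative to the point spacing; larger offsets need a coarse initial alignment first.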

### Semantic segmentation

- [RangeNet++](https://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/milioto2019iros.pdf) - Fast and Accurate LiDAR Semantic Segmentation with a fully convolutional network.

- [GitHub repository](https://github.com/PRBonn/rangenet_lib)

- [YouTube video](https://www.youtube.com/watch?v=uo3ZuLuFAzk)

- [PolarNet](https://arxiv.org/pdf/2003.14032.pdf) - An Improved Grid Representation for Online LiDAR Point Clouds Semantic Segmentation.

- [GitHub repository](https://github.com/edwardzhou130/PolarSeg)

- [YouTube video](https://www.youtube.com/watch?v=iIhttRSMqjE)

- [Frustum PointNets](https://arxiv.org/pdf/1711.08488.pdf) - Frustum PointNets for 3D Object Detection from RGB-D Data.

- [GitHub repository](https://github.com/charlesq34/frustum-pointnets)

- [Study of LIDAR Semantic Segmentation](https://larissa.triess.eu/scan-semseg/) - Scan-based Semantic Segmentation of LiDAR Point Clouds: An Experimental Study IV 2020.

- [Paper](https://arxiv.org/abs/2004.11803)

- [GitHub repository](http://ltriess.github.io/scan-semseg)

- [LIDAR-MOS](https://www.ipb.uni-bonn.de/pdfs/chen2021ral-iros.pdf) - Moving Object Segmentation in 3D LIDAR Data

- [GitHub repository](https://github.com/PRBonn/LiDAR-MOS)

- [YouTube video](https://www.youtube.com/watch?v=NHvsYhk4dhw)

- [SuperPoint Graph](https://arxiv.org/pdf/1711.09869.pdf) - Large-scale Point Cloud Semantic Segmentation with Superpoint Graphs

- [GitHub repository](https://github.com/loicland/superpoint_graph)

- [YouTube video](https://www.youtube.com/watch?v=Ijr3kGSU_tU)

- [RandLA-Net](https://arxiv.org/pdf/1911.11236.pdf) - Efficient Semantic Segmentation of Large-Scale Point Clouds

- [GitHub repository](https://github.com/QingyongHu/RandLA-Net)

- [YouTube video](https://www.youtube.com/watch?v=Ar3eY_lwzMk)

- [Automatic labelling](https://arxiv.org/pdf/2108.13757.pdf) - Automatic labelling of urban point clouds using data fusion

- [GitHub repository](https://github.com/Amsterdam-AI-Team/Urban_PointCloud_Processing)

- [YouTube video](https://www.youtube.com/watch?v=qMj_WM6D0vI)
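Range-image methods such as RangeNet++ first project each LiDAR scan onto a 2D image so that an ordinary 2D convolutional network can segment it. The sketch below shows a common spherical projection of this kind. The 64 x 1024 image size and the +3° / -25° vertical field of view are assumptions that roughly match the 64-beam Velodyne used for KITTI, and `spherical_projection` is a hypothetical helper, not RangeNet++'s code.

```python
import math
from typing import List, Sequence, Tuple


def spherical_projection(
    points: Sequence[Tuple[float, float, float]],
    width: int = 1024,
    height: int = 64,
    fov_up_deg: float = 3.0,
    fov_down_deg: float = -25.0,
) -> List[List[float]]:
    """Project (x, y, z) points into a height x width range image.

    Pixels with no return hold -1.0; when several points land in the same
    pixel, the closest one is kept. Assumes fov_up >= 0 >= fov_down.
    """
    fov_up = math.radians(fov_up_deg)
    fov_down = math.radians(fov_down_deg)
    fov = abs(fov_up) + abs(fov_down)  # total vertical field of view
    image = [[-1.0] * width for _ in range(height)]
    for x, y, z in points:
        r = math.sqrt(x * x + y * y + z * z)
        if r == 0.0:
            continue
        yaw = math.atan2(y, x)    # horizontal angle in [-pi, pi]
        pitch = math.asin(z / r)  # vertical angle
        u = 0.5 * (1.0 - yaw / math.pi) * width             # column
        v = (1.0 - (pitch + abs(fov_down)) / fov) * height  # row, top = fov_up
        col = min(width - 1, max(0, math.floor(u)))
        row = min(height - 1, max(0, math.floor(v)))
        if image[row][col] < 0.0 or r < image[row][col]:
            image[row][col] = r
    return image


img = spherical_projection([(10.0, 0.0, 0.0), (20.0, 0.0, 0.0)])
print(img[6][512])  # both points hit this pixel; the nearer return is kept
```

Keeping the per-pixel mapping lets a network's 2D predictions be copied back onto the original 3D points afterwards.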

### Ground segmentation

- [Plane Seg](https://github.com/ori-drs/plane_seg) - ROS-compatible ground plane segmentation; a library for fitting planes to LiDAR data.

- [YouTube video](https://www.youtube.com/watch?v=YYs4lJ9t-Xo)

- [LineFit Graph](https://ieeexplore.ieee.org/abstract/document/5548059) - Line fitting-based fast ground segmentation for horizontal 3D LiDAR data

- [GitHub repository](https://github.com/lorenwel/linefit_ground_segmentation)

- [Patchwork](https://arxiv.org/pdf/2108.05560.pdf) - Region-wise plane fitting-based robust and fast ground segmentation for 3D LiDAR data

- [GitHub repository](https://github.com/LimHyungTae/patchwork)

- [YouTube video](https://www.youtube.com/watch?v=rclqeDi4gow)

- [Patchwork++](https://arxiv.org/pdf/2207.11919.pdf) - An improved version of Patchwork that also provides Python bindings for deep learning users.

- [GitHub repository](https://github.com/url-kaist/patchwork-plusplus-ros)

- [YouTube video](https://www.youtube.com/watch?v=fogCM159GRk)
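The tools above fit planes region by region with carefully tuned rules. A much simpler baseline that conveys the core idea is a single RANSAC plane fit: repeatedly sample three points, keep only near-horizontal candidate planes, and retain the one with the most inliers. The sketch below is that baseline, not the Plane Seg, LineFit or Patchwork algorithm; `threshold`, `max_tilt_deg` and the iteration count are illustrative assumptions, and one global plane fails on sloped or uneven terrain, which is exactly the problem region-wise methods address.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Point3 = Tuple[float, float, float]
Plane = Tuple[float, float, float, float]  # unit normal (nx, ny, nz) and offset d


def plane_from_points(p1: Point3, p2: Point3, p3: Point3) -> Optional[Plane]:
    """Plane through three points, or None if they are (nearly) collinear."""
    ux, uy, uz = p2[0] - p1[0], p2[1] - p1[1], p2[2] - p1[2]
    vx, vy, vz = p3[0] - p1[0], p3[1] - p1[1], p3[2] - p1[2]
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx  # u x v
    norm = math.sqrt(nx * nx + ny * ny + nz * nz)
    if norm < 1e-12:
        return None
    nx, ny, nz = nx / norm, ny / norm, nz / norm
    return nx, ny, nz, -(nx * p1[0] + ny * p1[1] + nz * p1[2])


def ransac_ground(points: Sequence[Point3], threshold: float = 0.1, iterations: int = 200,
                  max_tilt_deg: float = 15.0, seed: int = 0) -> List[int]:
    """Indices of points on the best near-horizontal plane found by RANSAC."""
    rng = random.Random(seed)
    pts = list(points)
    min_abs_nz = math.cos(math.radians(max_tilt_deg))
    best: List[int] = []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(pts, 3))
        if plane is None:
            continue
        nx, ny, nz, d = plane
        if abs(nz) < min_abs_nz:
            continue  # too steep to be ground (e.g. a wall or a pole)
        inliers = [i for i, (x, y, z) in enumerate(pts)
                   if abs(nx * x + ny * y + nz * z + d) <= threshold]
        if len(inliers) > len(best):
            best = inliers
    return best


ground = [(float(x), float(y), 0.0) for x in range(10) for y in range(10)]
pole = [(5.0, 5.0, 1.0 + 0.1 * k) for k in range(20)]
inliers = ransac_ground(ground + pole)
print(len(inliers))  # the 100 flat points; the pole is rejected
```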

### Simultaneous localization and mapping (SLAM) and LiDAR-based odometry and/or mapping (LOAM)

- [LOAM J. Zhang and S. Singh](https://youtu.be/8ezyhTAEyHs) - LOAM: Lidar Odometry and Mapping in Real-time.

- [LeGO-LOAM](https://github.com/RobustFieldAutonomyLab/LeGO-LOAM) - A lightweight and ground optimized lidar odometry and mapping (LeGO-LOAM) system for ROS compatible UGVs.

- [YouTube video](https://www.youtube.com/watch?v=7uCxLUs9fwQ)

- [Cartographer](https://github.com/cartographer-project/cartographer) - Cartographer is a ROS-compatible system that provides real-time simultaneous localization and mapping (SLAM) in 2D and 3D across multiple platforms and sensor configurations.

- [YouTube video](https://www.youtube.com/watch?v=29Knm-phAyI)

- [SuMa++](http://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/chen2019iros.pdf) - LiDAR-based Semantic SLAM.

- [GitHub repository](https://github.com/PRBonn/semantic_suma/)

- [YouTube video](https://youtu.be/uo3ZuLuFAzk)

- [OverlapNet](http://www.ipb.uni-bonn.de/wp-content/papercite-data/pdf/chen2020rss.pdf) - Loop Closing for LiDAR-based SLAM.

- [GitHub repository](https://github.com/PRBonn/OverlapNet)

- [YouTube video](https://www.youtube.com/watch?v=YTfliBco6aw)

- [LIO-SAM](https://arxiv.org/pdf/2007.00258.pdf) - Tightly-coupled Lidar Inertial Odometry via Smoothing and Mapping.

- [GitHub repository](https://github.com/TixiaoShan/LIO-SAM)

- [YouTube video](https://www.youtube.com/watch?v=A0H8CoORZJU)

- [Removert](http://ras.papercept.net/images/temp/IROS/files/0855.pdf) - Remove, then Revert: Static Point cloud Map Construction using Multiresolution Range Images.

- [GitHub repository](https://github.com/irapkaist/removert)

- [YouTube video](https://www.youtube.com/watch?v=M9PEGi5fAq8)
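LOAM-style pipelines begin by splitting each scan ring into sharp "edge" points and flat "planar" points using a local smoothness score: the norm of the summed differences between a point and its neighbors on the same ring, normalized by the neighborhood size and the point's range. High scores suggest edges, low scores suggest planar surfaces. The sketch below computes a simplified version of that score; the window size is an assumption, and the real systems add further rules (rejecting occluded or near-parallel points, per-sector feature quotas) that are not shown here.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point3 = Tuple[float, float, float]


def smoothness(scan: Sequence[Point3], half_window: int = 5) -> List[Optional[float]]:
    """LOAM-style smoothness for each point of one ordered scan ring.

    Points closer than half_window to either end get None (too few neighbors).
    """
    values: List[Optional[float]] = [None] * len(scan)
    for i in range(half_window, len(scan) - half_window):
        xi = scan[i]
        dist = math.sqrt(xi[0] ** 2 + xi[1] ** 2 + xi[2] ** 2)
        if dist == 0.0:
            continue
        sx = sy = sz = 0.0
        for j in range(i - half_window, i + half_window + 1):
            if j == i:
                continue
            sx += xi[0] - scan[j][0]
            sy += xi[1] - scan[j][1]
            sz += xi[2] - scan[j][2]
        # On a straight, evenly sampled line the differences cancel out (score ~0).
        values[i] = math.sqrt(sx * sx + sy * sy + sz * sz) / (2 * half_window * dist)
    return values


# Two walls meeting at a corner: along y at x = 10, then back along x at y = 0.
wall_a = [(10.0, -1.0 + 0.1 * k, 0.0) for k in range(11)]  # ends at (10, 0, 0)
wall_b = [(10.0 - 0.1 * k, 0.0, 0.0) for k in range(1, 11)]
scan = wall_a + wall_b
c = smoothness(scan)
corner = max((i for i, v in enumerate(c) if v is not None), key=lambda i: c[i])
print(corner, scan[corner])  # the sharpest point is the corner
```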

### Object detection and object tracking

- [Learning to Optimally Segment Point Clouds](https://arxiv.org/abs/1912.04976) - By Peiyun Hu, David Held, and Deva Ramanan at Carnegie Mellon University. IEEE Robotics and Automation Letters, 2020.

- [YouTube video](https://www.youtube.com/watch?v=wLxIAwIL870)

- [GitHub repository](https://github.com/peiyunh/opcseg)

- [Leveraging Heteroscedastic Aleatoric Uncertainties for Robust Real-Time LiDAR 3D Object Detection](https://arxiv.org/pdf/1809.05590.pdf) - By Di Feng, Lars Rosenbaum, Fabian Timm, Klaus Dietmayer. 30th IEEE Intelligent Vehicles Symposium, 2019.

- [YouTube video](https://www.youtube.com/watch?v=2DzH9COLpkU)

- [What You See is What You Get: Exploiting Visibility for 3D Object Detection](https://arxiv.org/pdf/1912.04986.pdf) - By Peiyun Hu, Jason Ziglar, David Held, Deva Ramanan, 2019.

- [YouTube video](https://www.youtube.com/watch?v=497OF-otY2k)

- [GitHub repository](https://github.com/peiyunh/WYSIWYG)

- [urban_road_filter](https://doi.org/10.3390/s22010194) - Real-Time LIDAR-Based Urban Road and Sidewalk Detection for Autonomous Vehicles

- [GitHub repository](https://github.com/jkk-research/urban_road_filter)

- [YouTube video](https://www.youtube.com/watch?v=T2qi4pldR-E)
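Before learned detectors like the ones above, a common classical baseline for LiDAR object detection was to remove the ground and then group the remaining points by Euclidean distance, treating each cluster as an object candidate. The sketch below is that baseline with brute-force neighbor search, which is fine for a few thousand points but far too slow for a full scan without a k-d tree or voxel grid. `euclidean_clusters`, `radius` and `min_points` are hypothetical names and values, not taken from any of the listed papers.

```python
import math
from collections import deque
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def euclidean_clusters(points: Sequence[Point3], radius: float = 0.5,
                       min_points: int = 3) -> List[List[int]]:
    """Group point indices connected by chains of neighbors within `radius`."""
    unvisited = set(range(len(points)))
    clusters: List[List[int]] = []
    while unvisited:
        start = unvisited.pop()
        queue = deque([start])
        cluster = [start]
        while queue:
            i = queue.popleft()
            # Brute-force radius search over points not yet assigned.
            neighbors = [j for j in unvisited if math.dist(points[i], points[j]) <= radius]
            for j in neighbors:
                unvisited.remove(j)
                queue.append(j)
                cluster.append(j)
        if len(cluster) >= min_points:  # tiny clusters are treated as noise
            clusters.append(sorted(cluster))
    return clusters


car = [(10.0 + 0.3 * i, 2.0, 0.5) for i in range(10)]
pedestrian = [(5.0, -3.0, 0.2 * k) for k in range(8)]
noise = [(30.0, 30.0, 1.0)]
clusters = euclidean_clusters(car + pedestrian + noise)
print(len(clusters))  # car and pedestrian; the lone noise point is dropped
```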

## Neural Radiance Field (NeRF)

[Back to the Top](#table-of-contents)

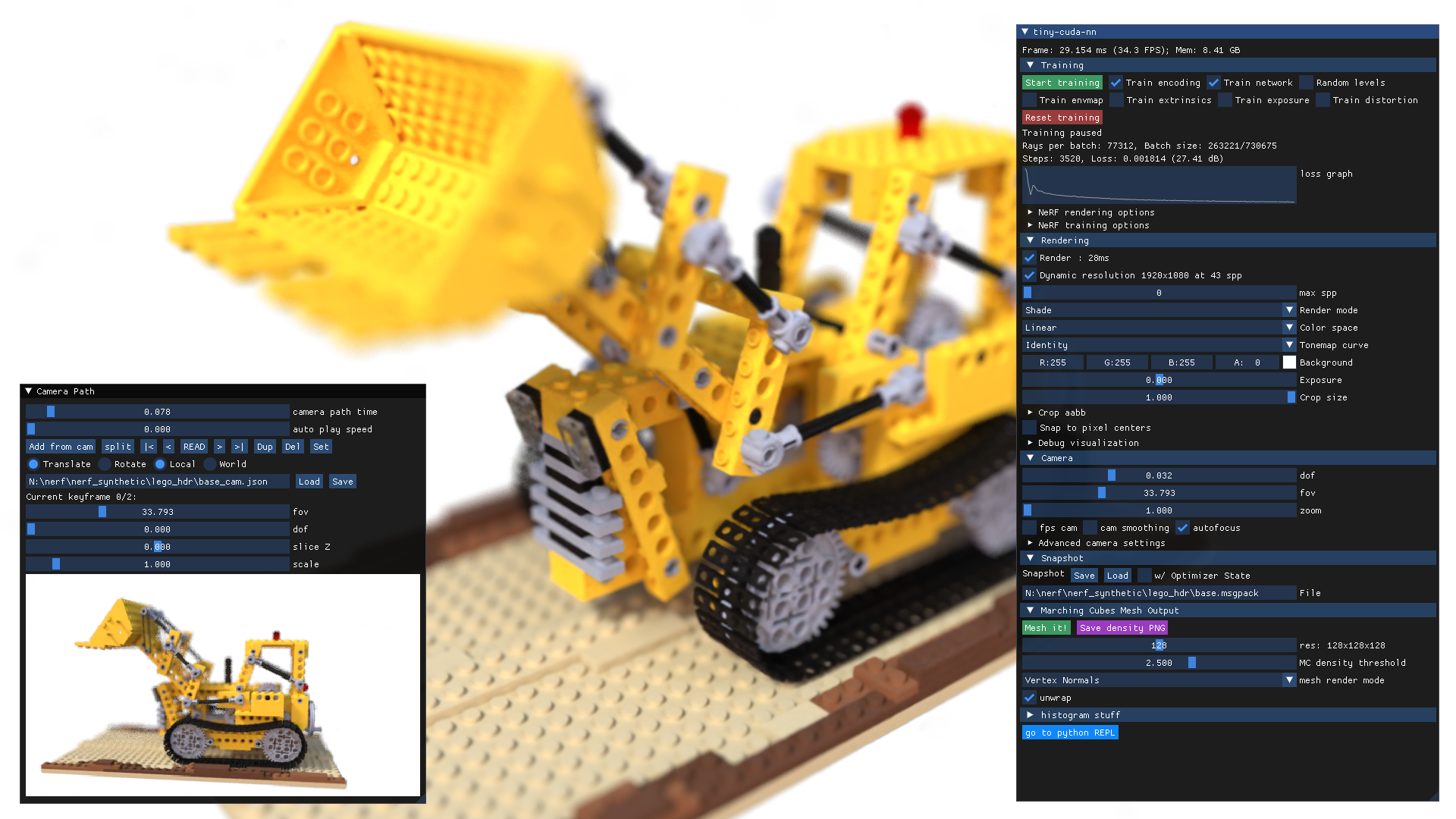

[NeRF (Neural Radiance Field)](https://developer.nvidia.com/blog/getting-started-with-nvidia-instant-nerfs/) is a neural rendering model that learns a 3D scene from a handful of 2D images taken from known camera positions. It represents the scene as a continuous function of color and density, which lets it render the scene from new viewpoints, in effect [interpolating](https://en.wikipedia.org/wiki/Interpolation) between the input views. NVIDIA's Instant NeRF can learn a high-resolution 3D scene in seconds and render images of it in a few milliseconds.
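Rendering one NeRF pixel comes down to sampling points along the camera ray, querying the network for a density σ and a color c at each sample, and alpha-compositing them front to back: sample i contributes T_i (1 - exp(-σ_i δ_i)) c_i, where δ_i is the spacing to the next sample and T_i is the transmittance accumulated so far. The sketch below implements only that compositing step for a single ray; the hand-picked densities and colors stand in for network outputs, since there is no trained model here.

```python
import math
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]


def composite_ray(sigmas: Sequence[float], colors: Sequence[RGB], deltas: Sequence[float]):
    """Front-to-back volume rendering of one ray; returns (rgb, weights)."""
    transmittance = 1.0  # T_i: fraction of light not yet absorbed
    rgb = [0.0, 0.0, 0.0]
    weights: List[float] = []
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha
        weights.append(weight)
        for k in range(3):
            rgb[k] += weight * color[k]
        transmittance *= 1.0 - alpha  # equals exp(-sum of sigma_j * delta_j so far)
    return rgb, weights


# Empty space, then a nearly opaque blue surface: the pixel comes out blue.
rgb, w = composite_ray([0.0, 1000.0], [(1.0, 0.0, 0.0), (0.0, 0.0, 1.0)], [0.1, 0.1])
print([round(v, 3) for v in rgb])
```

The same weights are what NeRF implementations typically reuse for depth estimates and for placing finer samples near surfaces.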

* [NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis](http://tancik.com/nerf)

* [Instant Neural Graphics Primitives with a Multiresolution Hash Encoding (PDF)](https://nvlabs.github.io/instant-ngp/assets/mueller2022instant.pdf)

* [Instant Neural Graphics Primitives](https://github.com/NVlabs/instant-ngp)

* [Neural Radiance Field (NeRF): A Gentle Introduction](https://datagen.tech/guides/synthetic-data/neural-radiance-field-nerf/)

NVIDIA Instant NeRFs. Image Credit: [NVIDIA](https://developer.nvidia.com/blog/getting-started-with-nvidia-instant-nerfs/)

[Tiny CUDA Neural Networks](https://github.com/NVlabs/tiny-cuda-nn) is a small, self-contained framework for training and querying neural networks. Most notably, it contains a lightning fast ["fully fused" multi-layer perceptron](https://raw.githubusercontent.com/NVlabs/tiny-cuda-nn/master/data/readme/fully-fused-mlp-diagram.png) ([technical paper](https://tom94.net/data/publications/mueller21realtime/mueller21realtime.pdf)), a versatile [multiresolution hash encoding](https://raw.githubusercontent.com/NVlabs/tiny-cuda-nn/master/data/readme/multiresolution-hash-encoding-diagram.png) ([technical paper](https://nvlabs.github.io/instant-ngp/assets/mueller2022instant.pdf)), as well as support for various other input encodings, losses, and optimizers.
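The multiresolution hash encoding used by tiny-cuda-nn and Instant NGP stores trainable feature vectors in one hash table per grid resolution. A 3D point is located in the enclosing voxel of each grid, the eight corner coordinates are hashed into that level's table, and the corner features are trilinearly interpolated and concatenated across levels before entering the small MLP. The pure-Python sketch below follows that structure under several assumptions: the features are random rather than trained, every level is hashed (the paper indexes coarse grids directly when they fit in the table), and Python's unbounded integers make the hash values differ from a 32-bit CUDA implementation. `HashEncoding` is a hypothetical class, not tiny-cuda-nn's API.

```python
import math
import random
from typing import List

# Per-dimension primes from the Instant NGP paper's spatial hash.
PRIMES = (1, 2654435761, 805459861)


def spatial_hash(ix: int, iy: int, iz: int, table_size: int) -> int:
    """XOR of coordinate * prime, folded into the table size."""
    return ((ix * PRIMES[0]) ^ (iy * PRIMES[1]) ^ (iz * PRIMES[2])) % table_size


class HashEncoding:
    """Toy multiresolution hash encoding of a point in the unit cube."""

    def __init__(self, levels: int = 8, features: int = 2, table_size: int = 2**14,
                 n_min: int = 16, n_max: int = 512, seed: int = 0):
        rng = random.Random(seed)
        # Geometric growth factor between the coarsest and finest grid.
        growth = math.exp((math.log(n_max) - math.log(n_min)) / (levels - 1))
        self.resolutions = [math.floor(n_min * growth ** level) for level in range(levels)]
        self.features = features
        self.table_size = table_size
        # Trainable in the real model; small random values stand in here.
        self.tables: List[List[List[float]]] = [
            [[rng.uniform(-1e-4, 1e-4) for _ in range(features)] for _ in range(table_size)]
            for _ in range(levels)
        ]

    def encode(self, x: float, y: float, z: float) -> List[float]:
        """Concatenate trilinearly interpolated features from every level."""
        out: List[float] = []
        for level, res in enumerate(self.resolutions):
            px, py, pz = x * res, y * res, z * res
            x0, y0, z0 = math.floor(px), math.floor(py), math.floor(pz)
            fx, fy, fz = px - x0, py - y0, pz - z0
            acc = [0.0] * self.features
            for dx in (0, 1):
                for dy in (0, 1):
                    for dz in (0, 1):
                        # Trilinear weight of this corner of the enclosing voxel.
                        w = ((fx if dx else 1 - fx) * (fy if dy else 1 - fy)
                             * (fz if dz else 1 - fz))
                        idx = spatial_hash(x0 + dx, y0 + dy, z0 + dz, self.table_size)
                        feat = self.tables[level][idx]
                        for k in range(self.features):
                            acc[k] += w * feat[k]
            out.extend(acc)
        return out


enc = HashEncoding(levels=4, features=2, table_size=1024)
print(len(enc.encode(0.3, 0.6, 0.9)), enc.resolutions)
```

Hash collisions are simply tolerated: during training, gradients from the points that matter most dominate each shared table entry, which is why no collision handling appears above.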

NVIDIA Instant NeRFs using tiny-cuda-nn (Tiny CUDA Neural Networks). Image Credit: [NVIDIA](https://github.com/NVlabs/instant-ngp/blob/master/docs/assets_readme/testbed.png)

Compression

- [Variable Bitrate Neural Fields](https://nv-tlabs.github.io/vqad/), Takikawa et al., SIGGRAPH 2022 | [github](https://github.com/nv-tlabs/vqad)

Unconstrained Images

- [NeRF in the Wild: Neural Radiance Fields for Unconstrained Photo Collections](https://nerf-w.github.io/), Martin-Brualla et al., CVPR 2021

- [Ha-NeRF: Hallucinated Neural Radiance Fields in the Wild](https://rover-xingyu.github.io/Ha-NeRF/), Chen et al., CVPR 2022 | [github](https://github.com/rover-xingyu/Ha-NeRF)

- [HDR-Plenoxels: Self-Calibrating High Dynamic Range Radiance Fields](https://hdr-plenoxels.github.io/), Jun-seong et al., ECCV 2022 | [github](https://github.com/postech-ami/HDR-Plenoxels)

Pose Estimation

- [iNeRF: Inverting Neural Radiance Fields for Pose Estimation](http://yenchenlin.me/inerf/), Yen-Chen et al., IROS 2021

- [A-NeRF: Surface-free Human 3D Pose Refinement via Neural Rendering](https://zollhoefer.com/papers/arXiv20_ANeRF/page.html), Su et al., Arxiv 2021

- [NeRF--: Neural Radiance Fields Without Known Camera Parameters](http://nerfmm.active.vision/), Wang et al., Arxiv 2021 | [github](https://github.com/ActiveVisionLab/nerfmm)

- [iMAP: Implicit Mapping and Positioning in Real-Time](https://edgarsucar.github.io/iMAP/), Sucar et al., ICCV 2021

- [NICE-SLAM: Neural Implicit Scalable Encoding for SLAM](https://pengsongyou.github.io/nice-slam), Zhu et al., Arxiv 2021

- [GNeRF: GAN-based Neural Radiance Field without Posed Camera](https://arxiv.org/abs/2103.15606), Meng et al., Arxiv 2021

- [BARF: Bundle-Adjusting Neural Radiance Fields](https://chenhsuanlin.bitbucket.io/bundle-adjusting-NeRF/), Lin et al., ICCV 2021

- [Self-Calibrating Neural Radiance Fields](https://postech-cvlab.github.io/SCNeRF/), Jeong et al., ICCV 2021 | [github](https://github.com/POSTECH-CVLab/SCNeRF)

- [L2G-NeRF: Local-to-Global Registration for Bundle-Adjusting Neural Radiance Fields](https://rover-xingyu.github.io/L2G-NeRF/), Chen et al., CVPR 2023 | [github](https://github.com/rover-xingyu/L2G-NeRF)

- [Loc-NeRF: Monte Carlo Localization using Neural Radiance Fields](https://arxiv.org/abs/2209.09050), Maggio et al., ICRA 2023 | [github](https://github.com/MIT-SPARK/Loc-NeRF)

- [Robust Camera Pose Refinement for Multi-Resolution Hash Encoding](http://arxiv.org/abs/2302.01571), Heo et al., ICML 2023.

Compositionality

- [NeRF++: Analyzing and Improving Neural Radiance Fields](https://arxiv.org/abs/2010.07492), Zhang et al., Arxiv 2020 | [github](https://github.com/Kai-46/nerfplusplus)

- [GIRAFFE: Representing Scenes as Compositional Generative Neural Feature Fields](https://arxiv.org/abs/2011.12100), Niemeyer et al., CVPR 2021.

- [Object-Centric Neural Scene Rendering](https://shellguo.com/osf/), Guo et al., Arxiv 2020

- [Learning Compositional Radiance Fields of Dynamic Human Heads](https://ziyanw1.github.io/hybrid_nerf/), Wang et al., Arxiv 2020.

- [Neural Scene Graphs for Dynamic Scenes](https://light.princeton.edu/neural-scene-graphs/), Ost et al., CVPR 2021.

- [Unsupervised Discovery of Object Radiance Fields](https://kovenyu.com/uorf/), Yu et al., Arxiv 2021.

- [Learning Object-Compositional Neural Radiance Field for Editable Scene Rendering](https://zju3dv.github.io/object_nerf/), Yang et al., ICCV 2021 | [github](https://github.com/zju3dv/object_nerf)

- [MoFaNeRF: Morphable Facial Neural Radiance Field](https://neverstopzyy.github.io/mofanerf/), Zhuang et al., Arxiv 2021 | [github](https://github.com/zhuhao-nju/mofanerf)

Scene Labelling and Understanding

- [In-Place Scene Labelling and Understanding with Implicit Scene Representation](https://shuaifengzhi.com/Semantic-NeRF/), Zhi et al., Arxiv 2021.

- [NeRF-SOS: Any-view Self-supervised Object Segmentation on Complex Real-world Scenes](https://zhiwenfan.github.io/NeRF-SOS/), Fan et al., ICLR 2023.

Object Category Modeling

- [FiG-NeRF: Figure Ground Neural Radiance Fields for 3D Object Category Modelling](https://fig-nerf.github.io/), Xie et al., Arxiv 2021.

- [NeRF-Tex: Neural Reflectance Field Textures](https://developer.nvidia.com/blog/nvidia-research-nerf-tex-neural-reflectance-field-textures/), Baatz et al., EGSR 2021.

Multi-scale

- [Mip-NeRF: A Multiscale Representation for Anti-Aliasing Neural Radiance Fields](https://jonbarron.info/mipnerf/), Barron et al., Arxiv 2021 | [github](https://github.com/google/mipnerf)

- [Mip-NeRF 360: Unbounded Anti-Aliased Neural Radiance Fields](https://jonbarron.info/mipnerf360/), Barron et al., Arxiv 2022.

Model Reconstruction

- [UNISURF: Unifying Neural Implicit Surfaces and Radiance Fields for Multi-View Reconstruction](https://arxiv.org/abs/2104.10078), Oechsle et al., ICCV 2021

- [NeuS: Learning Neural Implicit Surfaces by Volume Rendering for Multi-view Reconstruction](https://arxiv.org/abs/2106.10689), Wang et al., NeurIPS 2021 | [github](https://github.com/Totoro97/NeuS)

- [Volume Rendering of Neural Implicit Surfaces](https://arxiv.org/abs/2106.12052), Yariv et al., NeurIPS 2021 | [github](https://github.com/ventusff/neurecon)

- [NeAT: Learning Neural Implicit Surfaces with Arbitrary Topologies from Multi-view Images](https://arxiv.org/abs/2303.12012), Meng et al., CVPR 2023 | [github](https://github.com/xmeng525/NeAT)

Depth Estimation

- [NerfingMVS: Guided Optimization of Neural Radiance Fields for Indoor Multi-view Stereo](https://weiyithu.github.io/NerfingMVS/), Wei et al., ICCV 2021.

Robotics

- [3D Neural Scene Representations for Visuomotor Control](https://3d-representation-learning.github.io/nerf-dy/), Li et al., CoRL 2021 Oral.

- [Vision-Only Robot Navigation in a Neural Radiance World](https://arxiv.org/abs/2110.00168), Adamkiewicz et al., RA-L 2022 Vol.7 No.2.

Large-scale scene

- [Switch-NeRF: Learning Scene Decomposition with Mixture of Experts for Large-scale Neural Radiance Fields](https://mizhenxing.github.io/switchnerf), Mi et al., ICLR 2023 | [github](https://github.com/MiZhenxing/Switch-NeRF)

## LERF(Language Embedded Radiance Fields)

[Back to the Top](#table-of-contents)

[LERF (Language Embedded Radiance Fields)](https://www.lerf.io/) is an AI method that optimizes a dense, multi-scale 3D language field by volume rendering CLIP embeddings along training rays, supervising these embeddings with multi-scale CLIP features across the multi-view training images.

LERF Rendering. Image credit: [LERF.io](https://www.lerf.io/)
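To turn a rendered language embedding into a relevancy map for a text query, the LERF paper describes comparing the query's similarity against a few generic "canonical" phrases (such as "object", "things", "stuff" and "texture") with a pairwise softmax and keeping the worst case. The sketch below follows that description, but treat the exact formula as an assumption and check the paper; the toy 3-dimensional vectors stand in for real CLIP embeddings, and `relevancy` is a hypothetical helper.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def normalize(v: Vector) -> List[float]:
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def relevancy(rendered: Vector, query: Vector, canonical: Sequence[Vector]) -> float:
    """Worst-case pairwise softmax of the query against each canonical phrase."""
    e = normalize(rendered)
    s_query = math.exp(dot(e, normalize(query)))
    scores = []
    for phrase in canonical:
        s_canon = math.exp(dot(e, normalize(phrase)))
        scores.append(s_query / (s_query + s_canon))  # > 0.5 if query beats phrase
    return min(scores)


query = [1.0, 0.0, 0.0]                         # toy embedding of e.g. "mug"
canonical = [[0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # toy generic phrases
print(relevancy([0.9, 0.1, 0.1], query, canonical))  # pixel on the mug
print(relevancy([0.1, 0.9, 0.2], query, canonical))  # background pixel
```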

### Certifications & Courses

[Back to the Top](#table-of-contents)

- [ASPRS (American Society for Photogrammetry and Remote Sensing) Certification Program](https://www.asprs.org/certification)

- [Pix4D training and certification for mapping professionals](https://training.pix4d.com/)

- [Drone mapping and photogrammetry workshops with Pix4D](https://training.pix4d.com/pages/workshops)

- [Top Photogrammetry Courses Online | Udemy](https://www.udemy.com/topic/photogrammetry/)

- [Photogrammetry With Drones: In Mapping Technology | Udemy](https://www.udemy.com/course/essentials-of-photogrammetry/)

- [Introduction to Photogrammetry Course | Coursera](https://www.coursera.org/lecture/aerial-photography-with-uav/introduction-to-photogrammetry-KyP30)

- [Photogrammetry Online Classes and Training | Linkedin Learning](https://www.linkedin.com/learning/search?keywords=Photogrammetry&upsellOrderOrigin=default_guest_learning&trk=learning-course_learning-search-bar_search-submit)

- [Digital Photogrammetric Systems Course | Purdue Online Learning](https://engineering.purdue.edu/online/courses/digital-photogrammetric-systems)

- [Photogrammetry Training | Deep3D Photogrammetry](https://deep3d.co.uk/photogrammetry-training/)

- [Blender 3 + Reality Capture 5hr. Tutorial Course, with Futuristic Movie Scene & Files](https://blendermarket.com/products/blender-3--reality-capture-cinematic-render-course-futuristic-movie-film-scene)

- [Photogrammetry Course: Photoreal 3d With Blender And Reality Capture](https://blendermarket.com/products/photogrammetry-course)

- [Advanced PhotoModeler for Collision Reconstruction | LightPoint Course](https://lightpointdata.com/advanced-photogrammetry)

- [Motorcycle Collision Reconstruction | LightPoint Course](https://lightpointdata.com/motorcycle-collision-reconstruction)

- [Point Clouds in Collision Reconstruction | LightPoint Course](https://lightpointdata.com/point-clouds-in-collision-reconstruction-future)

- [Advanced Photogrammetry and Mapping with UAS Certificate | Michigan Tech University](https://www.mtu.edu/gradschool/programs/certificates/photogrammetry-mapping/)

- [Cesium Certified Developer Program](https://cesium.com/learn/certifications/)

### Books/eBooks

[Back to the Top](#table-of-contents)

- [73 Best Photogrammetry Books of All Time - BookAuthority](https://bookauthority.org/books/best-photogrammetry-books)

- [Photogrammetry E-books/tutorials | GIS Resources](https://gisresources.com/photogrammetry-e-books/)

- [Create photorealistic game assets with Unity Engine(eBook)](https://unity.com/solutions/photogrammetry)

- [Get ready for Photogrammetry: A beginner’s guide to scan clean-up with Unity ArtEngine (PDF)](https://content.cdntwrk.com/files/aT0xNDQ5MzA2JnY9MSZpc3N1ZU5hbWU9Z2V0LXJlYWR5LWZvci1waG90b2dyYW1tZXRyeSZjbWQ9ZCZzaWc9ZWZmOGJkNjJlOTA1Yzc2ZGFmMTc3MjVmODMzNDBjNTc%253D)

- [Introduction to Modern Photogrammetry by Edward M. Mikhail et al.](https://www.amazon.com/Introduction-Modern-Photogrammetry-Edward-Mikhail/dp/8126539984/ref=sr_1_5?crid=2ZTDXM9NZX73K&keywords=Photogrammetry&qid=1654415794&s=books&sprefix=photogrammetry%2Cstripbooks%2C157&sr=1-5)

- [UAV Photogrammetry and Remote Sensing by Fernando Carvajal-Ramírez, Francisco Agüera-Vega](https://www.amazon.com/Photogrammetry-Remote-Sensing-Fernando-Carvajal-Ram%C3%ADrez/dp/3036514546/ref=sr_1_4?crid=2ZTDXM9NZX73K&keywords=Photogrammetry&qid=1654415794&s=books&sprefix=photogrammetry%2Cstripbooks%2C157&sr=1-4)

- [Elements of Photogrammetry with Application in GIS, Fourth Edition by Paul Wolf and Bon DeWitt](https://www.amazon.com/Elements-Photogrammetry-Application-GIS-Fourth/dp/0071761128/ref=sr_1_2?crid=2ZTDXM9NZX73K&keywords=Photogrammetry&qid=1654415794&s=books&sprefix=photogrammetry%2Cstripbooks%2C157&sr=1-2)

- [OpenDroneMap: The Missing Guide: A Practical Guide To Drone Mapping Using Free and Open Source Software by Piero Toffanin](https://www.amazon.com/OpenDroneMap-Missing-Practical-Mapping-Software/dp/1086027566/ref=sr_1_16?crid=2ZTDXM9NZX73K&keywords=Photogrammetry&qid=1654415923&refinements=p_72%3A1250221011&rnid=1250219011&s=books&sprefix=photogrammetry%2Cstripbooks%2C157&sr=1-16)

- [Digital Photogrammetry by Yves Egels and Michel Kasser](https://www.amazon.com/Michel-Kasser/e/B001H6L0CS?ref=sr_ntt_srch_lnk_19&qid=1654415923&sr=1-19)

- [Digital Photogrammetry: Theory and Applications by Wilfried Linder](https://www.amazon.com/Digital-Photogrammetry-Applications-Wilfried-Linder-ebook/dp/B00FBVV9VM/ref=sr_1_22?crid=2ZTDXM9NZX73K&keywords=Photogrammetry&qid=1654415923&refinements=p_72%3A1250221011&rnid=1250219011&s=books&sprefix=photogrammetry%2Cstripbooks%2C157&sr=1-22)

- [Drone Technology in Architecture, Engineering and Construction: A Strategic Guide to Unmanned Aerial Vehicle Operation and Implementation by Daniel Tal and Jon Altschuld](https://www.amazon.com/Drone-Technology-Architecture-Engineering-Construction/dp/1119545889/ref=sr_1_35?crid=2ZTDXM9NZX73K&keywords=Photogrammetry&qid=1654416094&refinements=p_72%3A1250221011&rnid=1250219011&s=books&sprefix=photogrammetry%2Cstripbooks%2C157&sr=1-35)

- [Getting to Know ArcGIS Desktop 10.8 by Michael Law and Amy Collins](https://www.amazon.com/Getting-Know-ArcGIS-Desktop-10-8/dp/1589485777/ref=sr_1_2?keywords=Getting+to+Know+ArcGIS&qid=1659566233&sr=8-2)

- [Getting to Know ArcGIS Pro 2.8 by Michael Law and Amy Collins](https://www.amazon.com/Getting-Know-ArcGIS-Pro-2-8/dp/158948701X/ref=sr_1_5?keywords=Getting+to+Know+ArcGIS&qid=1659566233&sr=8-5)

- [Getting to Know Web GIS by Pinde Fu](https://www.amazon.com/Getting-Know-Web-GIS-Pinde-ebook/dp/B09Y68TXGF/ref=sr_1_3?crid=303PWBITFO5JT&keywords=web+GIS&qid=1659566920&sprefix=web+gis%2Caps%2C200&sr=8-3)

- [Geographical Data Science and Spatial Data Analysis: An Introduction in R (Spatial Analytics and GIS) by Lex Comber and Chris Brunsdon](https://www.amazon.com/Geographical-Data-Science-Spatial-Analysis/dp/1526449366/ref=sr_1_3?crid=38CZ6KLAF9GQ0&keywords=An+Introduction+to+R+for+Spatial+Analysis+and+Mapping&qid=1659566504&sprefix=an+introduction+to+r+for+spatial+analysis+and+mapping%2Caps%2C152&sr=8-3)

- [Fuzzy Machine Learning Algorithms for Remote Sensing Image Classification by Anil Kumar, Priyadarshi Upadhyay, et al.](https://www.amazon.com/Machine-Learning-Algorithms-Sensing-Classification-ebook/dp/B08C27QSSW/ref=sr_1_fkmr0_2?crid=O5TAKPDUTE86&keywords=Imagery+and+GIS%3A+Best+Practices+for+Extracting+Information+from+Imagery&qid=1659566587&sprefix=imagery+and+gis+best+practices+for+extracting+information+from+imagery%2Caps%2C159&sr=8-2-fkmr0)

- [GIS Applications in Agriculture, Volume Four by Tom Mueller, Gretchen F. Sassenrath](https://www.amazon.com/GIS-Applications-Agriculture-Four-Mueller/dp/1032098805/ref=sr_1_fkmr1_1?crid=O5TAKPDUTE86&keywords=Imagery+and+GIS%3A+Best+Practices+for+Extracting+Information+from+Imagery&qid=1659566587&sprefix=imagery+and+gis+best+practices+for+extracting+information+from+imagery%2Caps%2C159&sr=8-1-fkmr1)

- [Applications of Small Unmanned Aircraft Systems: Best Practices and Case Studies by J.B. Sharma](https://www.amazon.com/Applications-Small-Unmanned-Aircraft-Systems/dp/0367199246/ref=sr_1_fkmr1_2?crid=O5TAKPDUTE86&keywords=Imagery+and+GIS%3A+Best+Practices+for+Extracting+Information+from+Imagery&qid=1659566587&sprefix=imagery+and+gis+best+practices+for+extracting+information+from+imagery%2Caps%2C159&sr=8-2-fkmr1)

- [Free GIS Books](https://www.gislounge.com/free-gis-books/)

### YouTube Tutorials

[Back to the Top](#table-of-contents)

- [YouTube video](https://www.youtube.com/watch?v=SyB7Wg1e62A&list=RDCMUCi1TC2fLRvgBQNe-T4dp8Eg)

- [YouTube video](https://www.youtube.com/watch?v=j3lhPKF8qjU)

- [YouTube video](https://www.youtube.com/watch?v=JLdxBtECGuc)

- [YouTube video](https://www.youtube.com/watch?v=ZkZbVOdaXHs)

- [YouTube video](https://www.youtube.com/watch?v=2yuz4E1_T4o)

- [YouTube video](https://www.youtube.com/watch?v=bdL6J-q4Owk)

- [YouTube video](https://www.youtube.com/watch?v=dWuaIv6UiVQ)

- [YouTube video](https://www.youtube.com/watch?v=udXQHys50aA)

- [YouTube video](https://www.youtube.com/watch?v=L_SdlR57NtU)

- [YouTube video](https://www.youtube.com/watch?v=E17XQdC3DVU)

- [YouTube video](https://www.youtube.com/watch?v=WrCOhes1Zgg)

- [YouTube video](https://www.youtube.com/watch?v=gdd31rgS1q8)

- [YouTube video](https://www.youtube.com/watch?v=B5hBBFM2I_w)

- [YouTube video](https://www.youtube.com/watch?v=fRmGbM84aOw)

- [YouTube video](https://www.youtube.com/watch?v=TiSGfKm5cFQ)

- [YouTube video](https://www.youtube.com/watch?v=je79gV8HsZI)

- [YouTube video](https://www.youtube.com/watch?v=lfWHyi-3VKs)

- [YouTube video](https://www.youtube.com/watch?v=1eJhsTUEPEg)

- [YouTube video](https://www.youtube.com/watch?v=kI-jJUr9rH8)

- [YouTube video](https://www.youtube.com/watch?v=dPOldb5yTdg)

# Photogrammetry Tools, Libraries, and Frameworks

[Back to the Top](#table-of-contents)

[Autodesk® ReCap™](https://www.autodesk.com/products/recap/free-trial) is a software tool that converts reality captured from laser scans or photos, including UAV and drone imagery, into a 3D model or 2D drawing that is ready to use in your designs.

[Autodesk® ReCap™ Photo](http://blogs.autodesk.com/recap/introducing-recap-photo/) is a cloud-connected solution tailored for drone/UAV photo capturing workflows. Using ReCap Photo, you can create textured meshes, point clouds with geolocation, and high-resolution orthographic views with elevation maps.

[Pix4D](https://www.pix4d.com/) is a suite of photogrammetry software for drone mapping: images are captured with its mobile app, processed on the desktop or in the cloud, and turned into maps and 3D models.

[PIX4Dmapper](https://www.pix4d.com/product/pix4dmapper-photogrammetry-software) is Pix4D's photogrammetry software for professional drone mapping.
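A key planning quantity in drone-mapping tools such as these is the ground sampling distance (GSD), the ground footprint of one image pixel. For a nadir image over flat terrain, similar triangles give GSD = sensor width × flight height / (focal length × image width in pixels). The helpers below apply that relation; the camera values in the example (13.2 mm sensor width, 8.8 mm focal length, 5472 px image width) are illustrative assumptions for a typical 1-inch-sensor drone camera, not specifications of any product listed here.

```python
def ground_sampling_distance_cm(
    sensor_width_mm: float, focal_length_mm: float, flight_height_m: float, image_width_px: int
) -> float:
    """Centimeters of ground covered by one pixel (nadir view, flat terrain)."""
    # Similar triangles: pixel pitch / focal length = ground pixel size / height.
    pixel_pitch_mm = sensor_width_mm / image_width_px
    gsd_m = pixel_pitch_mm / focal_length_mm * flight_height_m
    return gsd_m * 100.0


def flight_height_for_gsd_m(
    target_gsd_cm: float, sensor_width_mm: float, focal_length_mm: float, image_width_px: int
) -> float:
    """Invert the relation to find the altitude that yields a target GSD."""
    return (target_gsd_cm / 100.0) * focal_length_mm * image_width_px / sensor_width_mm


gsd = ground_sampling_distance_cm(13.2, 8.8, 100.0, 5472)
print(f"{gsd:.2f} cm/px at 100 m")
print(f"{flight_height_for_gsd_m(2.0, 13.2, 8.8, 5472):.1f} m for 2 cm/px")
```

Because GSD scales linearly with height, halving the altitude halves the pixel footprint but also quarters the area each image covers, so more images are needed for the same site.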

[RealityCapture](https://www.capturingreality.com/) is a state-of-the-art photogrammetry software solution that creates virtual reality scenes, textured 3D meshes, orthographic projections, geo-referenced maps and much more from images and/or laser scans completely automatically.

[ArcGIS Reality](https://www.esri.com/arcgis/products/arcgis-reality/overview) is a suite of photogrammetry software products designed to enable reality capture workflows for sites, cities, and countries.

[ArcGIS Drone2Map](https://www.esri.com/en-us/arcgis/products/arcgis-drone2map/overview) is Esri's desktop app for drone mapping in GIS: a 2D and 3D photogrammetry app for creating the mapping outputs you need from drone imagery.

[Adobe Scantastic](https://labs.adobe.com/projects/scantastic/) is a tool that aims to make creating 3D assets accessible to everyone. Using just a mobile device (combined with Adobe's server-based photogrammetry pipeline), users can scan objects in their physical environment and turn them into 3D models, which can then be imported into tools like [Adobe Dimension](https://www.adobe.com/products/dimension.html) and [Adobe Aero](https://www.adobe.com/products/aero.html).

[Adobe Aero](https://www.adobe.com/products/aero.html) is a tool that helps you build, view, and share immersive AR experiences. Simply build a scene by bringing in 2D images from Adobe Photoshop and Illustrator, or 3D models from Adobe Dimension, Substance, third-party apps like Cinema 4D, or asset libraries like Adobe Stock and TurboSquid. Aero optimizes a wide array of assets, including OBJ, GLB, and glTF files, for AR, so you can visualize them in real time.

[Agisoft Metashape](https://www.agisoft.com/) is a stand-alone software product that performs photogrammetric processing of digital images and generates 3D spatial data to be used in GIS applications, cultural heritage documentation, and visual effects production as well as for indirect measurements of objects of various scales.

[MicroStation](https://www.bentley.com/en/products/brands/microstation) is a CAD software platform for 2D and 3D design and drafting, developed and sold by Bentley Systems. It generates 2D/3D vector graphics objects and elements and includes building information modeling (BIM) features.

[Leica Photogrammetry Suite (LPS)](https://support.hexagonsafetyinfrastructure.com/infocenter/index?page=product&facRef=LPS&facDisp=Leica%20Photogrammetry%20Suite%20(LPS)&landing=1) is a photogrammetry system that delivers full analytical triangulation, generation of digital terrain models, orthophoto production, mosaicking, and 3D feature extraction in a user-friendly environment designed to be accessible even to photogrammetry novices.

[DroneDeploy](https://www.dronedeploy.com/product/photogrammetry/) is a cloud photogrammetry platform designed to deliver fast, high-quality results at any scale. It lets you upload up to 10,000 images at once without specialized hardware or software, process hundreds of maps simultaneously across your organization, and generate precise 2D maps, 3D models, and 360 panoramas.

[Polycam](https://poly.cam/) is a popular LiDAR 3D scanning app for iOS (iPhone/iPad), the web, and Android. It lets you scan the world around you with your mobile device, DSLR camera, or drone to get accurate 3D models.

[Sanborn Prism4D™](https://www.sanborn.com/prism4d/) is a Real-Time Decision Support and Visualization Software that provides integrated command center operation functions within a single turnkey system and performs as a decision support and visualization system for analysis of geospatial information. It includes tools for 2D and 3D visualization that can be integrated with other applications providing a seamless ability to analyze, plan and react in real time to events.

[FlightGoggles](https://flightgoggles.mit.edu/) is a photorealistic sensor simulator for perception-driven robotic vehicles. FlightGoggles provides photorealistic exteroceptive sensor simulation using graphics assets generated with photogrammetry.

[ArcMap Raster Edit Suite](https://github.com/haoliangyu/ares) - is an ArcMap Add-in that enables manual editing of single pixels on raster layer.

[Bertin.js](https://github.com/neocarto/bertin) is a JavaScript library for visualizing geospatial data and making thematic maps for the web.

[CMV - The Configurable Map Viewer](https://github.com/cmv/cmv-app) is a community-supported open source mapping framework. CMV works with the Esri JavaScript API, ArcGIS Server, ArcGIS Online and more.

[ContextCapture](https://www.bentley.com/software/contextcapture/) is a tool that enables you to automatically generate multi-resolution 3D models at any scale and precision.

[Correlator3D](https://www.simactive.com/correlator3d-mapping-software-features) is a high-end photogrammetry suite.

[FlowEngine](https://aws.amazon.com/marketplace/seller-profile?id=bc1120fb-7263-4683-9301-4b7470f9a2d9) is a photogrammetry software development kit (SDK) built around a powerful, fully customizable photogrammetry reconstruction engine written in C++.

[FME Desktop](https://www.safe.com/fme/fme-desktop/) is an integrated collection of Spatial ETL tools for data transformation and data translation.

[Geomedia](https://hexagon.com/products/geomedia) is a commercial GIS software suite.

[GRASS (Geographic Resources Analysis Support System) GIS](https://grass.osgeo.org/) is a free and open source GIS software suite.

[SNAP](https://step.esa.int/main/download/snap-download/) is an open source common architecture for ESA Toolboxes ideal for the exploitation of Earth Observation data.

[TerrSet (formerly IDRISI)](https://clarklabs.org/terrset/) is an integrated geographic information system (GIS) and remote sensing software.

[The Sentinel Toolbox](https://sentinel.esa.int/web/sentinel/toolboxes) is a collection of processing tools, data product readers and writers and a display and analysis application to process Sentinel data.

[BoofCV](https://github.com/lessthanoptimal/BoofCV) is an open source library written from scratch for real-time computer vision. Its functionality covers a range of subjects, including low-level image processing, camera calibration, feature detection/tracking, structure-from-motion, fiducial detection, and recognition.

[OpenMVG (open Multiple View Geometry)](https://github.com/openMVG/openMVG) is a library for 3D computer vision and structure from motion. It is targeted at the multiple view geometry community.

[Mapnik](http://mapnik.org/) is an open source toolkit for developing mapping applications. It uses a C++ shared library providing algorithms and patterns for spatial data access and visualization.

[Cesium](https://www.cesium.com/) is the foundational open platform for creating powerful 3D geospatial applications.

[CesiumJS](https://cesium.com/cesiumjs/) is a JavaScript library for creating 3D globes and 2D maps in a web browser without a plugin. It uses WebGL for hardware-accelerated graphics, and is cross-platform, cross-browser, and tuned for dynamic-data visualization.

[Cesium ion](https://cesium.com/platform/cesium-ion/) is a robust, scalable, and secure platform for 3D geospatial data. Upload your content and Cesium ion will optimize it as 3D Tiles, host it in the cloud, and stream it to any device. It includes access to curated global 3D content including Cesium World Terrain, Bing Maps imagery, and Cesium OSM Buildings.

[Cesium for Unreal](https://cesium.com/cesium-for-unreal/) is a tool that brings the 3D geospatial ecosystem to Unreal Engine. By combining a high-accuracy full-scale WGS84 globe, open APIs and open standards for spatial indexing such as 3D Tiles, and cloud-based real-world content from Cesium ion with Unreal Engine, this project enables a new era of 3D geospatial software.

[Cesium for O3DE](https://cesium.com/platform/cesium-for-o3de/) is a tool that provides a full-scale, high-accuracy ([WGS84](https://csrc.nist.gov/glossary/term/world_geodetic_system_1984)) globe and runtime 3D Tiles engine for the open source Open 3D Engine (O3DE).
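
Tools built on a full-scale WGS84 globe, such as Cesium for Unreal and Cesium for O3DE, typically place geodetic coordinates (latitude, longitude, ellipsoidal height) into an Earth-centered, Earth-fixed (ECEF) Cartesian frame. The Python sketch below shows the standard closed-form conversion using the WGS84 ellipsoid constants. It illustrates the math only and is not code from any Cesium product (CesiumJS offers comparable functionality through `Cartesian3.fromDegrees`).

```python
import math

# WGS84 ellipsoid constants
WGS84_A = 6378137.0                    # semi-major axis in meters
WGS84_F = 1.0 / 298.257223563          # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)   # first eccentricity squared


def geodetic_to_ecef(lat_deg: float, lon_deg: float, height_m: float) -> tuple[float, float, float]:
    """Convert WGS84 latitude/longitude (degrees) and ellipsoidal height (meters) to ECEF X, Y, Z in meters."""
    lat = math.radians(lat_deg)
    lon = math.radians(lon_deg)
    sin_lat = math.sin(lat)
    # Prime vertical radius of curvature at this latitude
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * sin_lat * sin_lat)
    x = (n + height_m) * math.cos(lat) * math.cos(lon)
    y = (n + height_m) * math.cos(lat) * math.sin(lon)
    z = (n * (1.0 - WGS84_E2) + height_m) * sin_lat
    return x, y, z


if __name__ == "__main__":
    # The equator/prime meridian intersection lies on the +X axis at the semi-major axis
    print(geodetic_to_ecef(0.0, 0.0, 0.0))  # (6378137.0, 0.0, 0.0)
```

At the North Pole the same function returns a Z value equal to the semi-minor axis, `WGS84_A * (1 - WGS84_F)`, which is a quick sanity check for the constants.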

[3D Tiles](https://github.com/CesiumGS/3d-tiles) is an open specification for sharing, visualizing, fusing, and interacting with massive heterogeneous 3D geospatial content across desktop, web, and mobile applications.
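
3D Tiles organizes content into a hierarchy of tiles, each carrying a `geometricError` in meters, and a runtime decides whether to refine into child tiles by projecting that error into screen space. The sketch below shows this screen-space-error test for a perspective camera. The 1080 px viewport, 60° vertical field of view, and 16 px threshold are assumed defaults chosen for illustration, not values mandated by the specification.

```python
import math


def screen_space_error(geometric_error_m: float, distance_m: float,
                       screen_height_px: int, fov_y_rad: float) -> float:
    """Approximate how many pixels a tile's geometric error covers on screen (perspective camera)."""
    if distance_m <= 0.0:
        return math.inf  # camera is inside the tile's bounding volume: always refine
    return (geometric_error_m * screen_height_px) / (distance_m * 2.0 * math.tan(fov_y_rad / 2.0))


def should_refine(geometric_error_m: float, distance_m: float,
                  screen_height_px: int = 1080, fov_y_rad: float = math.radians(60.0),
                  max_sse_px: float = 16.0) -> bool:
    """Load the children of a tile when its projected error exceeds the pixel threshold."""
    sse = screen_space_error(geometric_error_m, distance_m, screen_height_px, fov_y_rad)
    return sse > max_sse_px
```

For example, a tile with a 10 m geometric error seen from 100 m away covers roughly 94 px with the defaults above, so it would be refined; the same tile seen from 10 km covers under 1 px and would be drawn as-is.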

[TeleSculptor](https://telesculptor.org/) is an open source, cross-platform desktop application for photogrammetry. It was designed specifically with a focus on aerial video processing leveraging video metadata standards ([MISB 0601](https://developer.ridgerun.com/wiki/index.php/LibMISB)) for geolocation, but it can handle both images and video either with or without metadata. TeleSculptor uses structure-from-motion techniques to estimate camera parameters and a sparse set of 3D landmarks.
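
Structure-from-motion tools such as TeleSculptor refine camera parameters and 3D landmarks by minimizing reprojection error: the pixel distance between where a feature was observed and where the current estimate projects it. The sketch below computes that quantity for an ideal pinhole camera without lens distortion. The function names and parameter layout are hypothetical and do not reflect TeleSculptor's API.

```python
from typing import Sequence

Vec3 = tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]


def project(point_world: Vec3, rotation: Mat3, translation: Vec3,
            fx: float, fy: float, cx: float, cy: float) -> tuple[float, float]:
    """Project a 3D landmark into pixel coordinates with an ideal pinhole camera (no distortion)."""
    # World -> camera coordinates: X_c = R * X_w + t
    xc = [sum(rotation[i][j] * point_world[j] for j in range(3)) + translation[i] for i in range(3)]
    if xc[2] <= 0.0:
        raise ValueError("landmark is behind the camera")
    # Perspective division, then apply focal lengths and principal point
    u = fx * xc[0] / xc[2] + cx
    v = fy * xc[1] / xc[2] + cy
    return u, v


def reprojection_error(observed_px: tuple[float, float], point_world: Vec3, rotation: Mat3,
                       translation: Vec3, fx: float, fy: float, cx: float, cy: float) -> float:
    """Euclidean distance in pixels between an observed feature and the projected landmark."""
    u, v = project(point_world, rotation, translation, fx, fy, cx, cy)
    return ((u - observed_px[0]) ** 2 + (v - observed_px[1]) ** 2) ** 0.5
```

Bundle adjustment sums the squared version of this error over every camera/landmark observation and adjusts all parameters jointly to minimize it.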

[Terramodel](https://heavyindustry.trimble.com/products/terramodel) is a powerful software package for the surveyor, civil engineer or contractor who requires a CAD and design package with integrated support for raw survey data.

[MicMac](https://github.com/micmacIGN/micmac) is a free and open-source suite of photogrammetry tools for 3D reconstruction.

[3DF Zephyr](https://www.3dflow.net/3df-zephyr-photogrammetry-software/) is a photogrammetry software solution by 3Dflow. It lets you automatically reconstruct 3D models from photos and handle any 3D reconstruction and scanning challenge, no matter which camera sensor, drone, or laser scanner you use.

[COLMAP](https://colmap.github.io/) is a general-purpose Structure-from-Motion (SfM) and Multi-View Stereo (MVS) pipeline with a graphical and command-line interface. It offers a wide range of features for reconstruction of ordered and unordered image collections.
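
Once an SfM pipeline such as COLMAP has estimated two camera poses and matched a feature between the images, the feature's 3D position can be recovered by triangulation. As a minimal sketch, the code below uses the simple midpoint method (the midpoint of the shortest segment between the two viewing rays). This is a swapped-in textbook technique for illustration; COLMAP's own, more robust triangulation is not reproduced here.

```python
Vec3 = tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3) -> Vec3:
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2.

    c1, c2 are camera centers; d1, d2 are viewing-ray directions toward the same feature.
    """
    w0 = _sub(c1, c2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; baseline too small to triangulate")
    # Ray parameters of the closest points on each ray
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = tuple(c1[i] + s * d1[i] for i in range(3))
    p2 = tuple(c2[i] + t * d2[i] for i in range(3))
    return tuple((p1[i] + p2[i]) / 2.0 for i in range(3))
```

With noise-free rays both closest points coincide with the true point; with real, noisy matches the rays miss each other slightly and the midpoint is a reasonable compromise.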

[Multi-View Environment (MVE)](https://www.gcc.tu-darmstadt.de/home/proj/mve/) is an effort to ease the work with multi-view datasets and to support the development of algorithms based on multiple views. It features Structure from Motion, Multi-View Stereo and Surface Reconstruction. MVE is developed at the TU Darmstadt.

[OpenMVG (open Multiple View Geometry)](https://github.com/openMVG/openMVG) is a photogrammetry framework that provides end-to-end 3D reconstruction from images. It is composed of libraries, binaries, and pipelines; the libraries provide easy access to features such as image manipulation, feature description and matching, feature tracking, camera models, multiple-view geometry, robust estimation, and structure-from-motion algorithms.

[DroneDeploy](https://www.dronedeploy.com/) is drone mapping software that covers everything from flying your drone to using your data to create interactive maps, 3D models, videos, and more.

[AliceVision](https://github.com/alicevision/AliceVision) is a photogrammetric computer vision framework that provides 3D reconstruction and camera tracking algorithms. AliceVision offers a strong software foundation and state-of-the-art computer vision algorithms that can be tested, analyzed, and reused.

[Meshroom](https://github.com/alicevision/meshroom) is a free, open-source 3D Reconstruction Software based on the AliceVision framework.

[PhotoModeler](https://www.photomodeler.com/) is software that extracts measurements and models from photographs taken with an ordinary camera. It offers a cost-effective way to perform accurate 2D or 3D measurement, photo-digitizing, surveying, 3D scanning, and reality capture.

[OpenDroneMap (ODM)](https://www.opendronemap.org/odm/) is an open source command-line toolkit to generate maps, point clouds, 3D models and DEMs from drone, balloon or kite images.

[WebODM](https://www.opendronemap.org/webodm/) is a user-friendly, commercial grade software for drone image processing. Generate georeferenced maps, point clouds, elevation models and textured 3D models from aerial images. It supports multiple engines for processing, currently [ODM](https://github.com/OpenDroneMap/ODM) and [MicMac](https://github.com/dronemapper-io/NodeMICMAC/).

[NodeODM](https://www.opendronemap.org/nodeodm/) is a [standard API specification](https://github.com/OpenDroneMap/NodeODM/blob/master/docs/index.adoc) for processing aerial images with engines such as [ODM](https://github.com/OpenDroneMap/ODM). The API is used by clients such as [WebODM](https://github.com/OpenDroneMap/WebODM), [CloudODM](https://github.com/OpenDroneMap/CloudODM) and [PyODM](https://github.com/OpenDroneMap/PyODM).

[ClusterODM](https://www.opendronemap.org/clusterodm/) is a reverse proxy, load balancer and task tracker with optional cloud autoscaling capabilities for NodeODM API compatible nodes. In a nutshell, it's a program to link together multiple NodeODM API compatible nodes under a single network address.
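
The central decision a load balancer like ClusterODM makes is which NodeODM-compatible node should receive the next task. The sketch below uses a hypothetical least-loaded policy (the lowest ratio of queued tasks to capacity among online nodes). The `ProcessingNode` class and `pick_node` function are illustrative only and do not describe ClusterODM's actual routing logic or API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProcessingNode:
    """Hypothetical record for one NodeODM-compatible node behind the proxy."""
    address: str
    queued_tasks: int
    max_parallel_tasks: int
    online: bool = True


def pick_node(nodes: list[ProcessingNode]) -> Optional[ProcessingNode]:
    """Return the online node with the lowest load ratio, or None if all are offline or full."""
    candidates = [n for n in nodes if n.online and n.queued_tasks < n.max_parallel_tasks]
    if not candidates:
        return None  # a real proxy might queue the task or autoscale a new node here
    return min(candidates, key=lambda n: n.queued_tasks / n.max_parallel_tasks)
```

Using a load ratio rather than a raw task count lets nodes with different capacities share work proportionally.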

[Mosaic](https://databrickslabs.github.io/mosaic/) is an extension to the Apache Spark framework that allows easy and fast processing of very large geospatial datasets.

[FIELDimageR](https://www.opendronemap.org/fieldimager/) is an R package to analyze orthomosaic images from agricultural field trials.
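
Orthomosaic analysis of field trials often reduces each plot to a vegetation index computed from the red, green, and blue bands. The plain-Python sketch below computes two common RGB indices, NGRDI and VARI, plus a per-plot mean. It illustrates the arithmetic only and is not FIELDimageR's R interface; returning 0.0 when a denominator is zero is an assumption made to keep the example simple.

```python
from statistics import fmean


def ngrdi(r: float, g: float) -> float:
    """Normalized Green-Red Difference Index: (G - R) / (G + R)."""
    total = g + r
    return 0.0 if total == 0 else (g - r) / total


def vari(r: float, g: float, b: float) -> float:
    """Visible Atmospherically Resistant Index: (G - R) / (G + R - B)."""
    denom = g + r - b
    return 0.0 if denom == 0 else (g - r) / denom


def plot_mean(pixels: list[tuple[float, float, float]], index: str = "ngrdi") -> float:
    """Average an index over the (R, G, B) pixels that fall inside one plot."""
    if not pixels:
        raise ValueError("plot contains no pixels")
    if index == "ngrdi":
        return fmean(ngrdi(r, g) for r, g, _b in pixels)
    if index == "vari":
        return fmean(vari(r, g, b) for r, g, b in pixels)
    raise ValueError(f"unknown index: {index}")
```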

[Regard3D](https://www.regard3d.org/) is a free and open source structure-from-motion program. It converts photos of an object, taken from different angles, into a 3D model of this object.

[PhotoCatch](https://apps.apple.com/us/app/photocatch/id1576081762) is the first app for [Apple's Object Capture API](https://developer.apple.com/news/?id=48xhsgu2), enabling anyone to create stunning 3D models in minutes, not days, with no code or 3D experience required.

[PhotoCatch Desktop](https://www.photocatch.app/desktop) is a professional photogrammetry workstation for exporting beautiful 3D assets ready for augmented reality and visual effects. It includes performance optimizations for M1 Max and M1 Ultra on MacBook Pro and Mac Studio, so you can process up to four 3D models at the same time.

[PhotoCatch Cloud](https://www.photocatch.app/cloud) is a professional service that brings desktop-class photogrammetry and 3D editing tools to mobile devices, so you can capture, edit, and share 3D content on-site. It syncs photo capture with your equipment and uses Depth Capture so that your 3D models stay the same size as the real-world objects.

[Martin](https://martin.maplibre.org/) is a tile server that can generate [vector tiles](https://github.com/mapbox/vector-tile-spec) on the fly from large [PostGIS](https://github.com/postgis/postgis) databases, or serve tiles from [PMTiles](https://protomaps.com/blog/pmtiles-v3-whats-new) and [MBTiles](https://github.com/mapbox/mbtiles-spec) files. Martin is optimized for speed and heavy traffic, and is written in Rust.
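
Tile servers such as Martin address vector tiles by zoom level, column, and row (`z/x/y`) in the Web Mercator XYZ tiling scheme. The sketch below computes which tile contains a given longitude/latitude at a zoom level. It shows the standard tiling math and makes no assumptions about Martin's URL layout.

```python
import math


def lonlat_to_tile(lon_deg: float, lat_deg: float, zoom: int) -> tuple[int, int]:
    """Return the (x, y) index of the Web Mercator XYZ tile containing a point; y counts down from the north."""
    n = 2 ** zoom  # number of tiles along each axis at this zoom
    lat_rad = math.radians(lat_deg)
    x = int((lon_deg + 180.0) / 360.0 * n)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp points on the antimeridian or beyond the Mercator latitude limit into the valid range
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)
```

At zoom 1, for example, a point in the northwest quadrant of the world falls in tile `1/0/0` and one in the southeast quadrant in tile `1/1/1`.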

[Headway](https://about.maps.earth/) is a maps stack in a box that makes it easy to take your location data into your own hands. With just a few commands you can bring up your own fully functional maps server. This includes a frontend, basemap, geocoder and routing engine. Choose one of the 200+ predefined cities or provide your own OpenStreetMap extract covering any area: from a neighborhood to the whole planet.

[OpenLayers](https://openlayers.org/) is a high-performance, feature-packed library for creating interactive maps on the web. It can display map tiles, vector data and markers loaded from any source on any web page.

[Supercluster](https://github.com/mapbox/supercluster) is a very fast JavaScript library for geospatial point clustering for browsers and Node.
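
Point clustering libraries merge nearby markers at low zoom levels so that a map stays readable. Supercluster itself uses a hierarchical greedy algorithm backed by a spatial index; the simpler grid-based approach below, which buckets points into fixed-size screen cells in Web Mercator pixel space, is swapped in purely to illustrate the idea. The 256 px tile size and 60 px cell size are assumptions.

```python
import math
from collections import defaultdict


def _world_pixels(lon: float, lat: float, zoom: int, tile_size: int = 256) -> tuple[float, float]:
    """Web Mercator world-pixel coordinates of a point at the given zoom."""
    scale = tile_size * 2 ** zoom
    x = (lon + 180.0) / 360.0 * scale
    y = (1.0 - math.asinh(math.tan(math.radians(lat))) / math.pi) / 2.0 * scale
    return x, y


def grid_cluster(points: list[tuple[float, float]], zoom: int, cell_px: float = 60.0) -> list[dict]:
    """Group (lon, lat) points into square screen cells; return one cluster per occupied cell."""
    cells = defaultdict(list)
    for lon, lat in points:
        px, py = _world_pixels(lon, lat, zoom)
        cells[(int(px // cell_px), int(py // cell_px))].append((lon, lat))
    clusters = []
    for members in cells.values():
        clusters.append({
            "count": len(members),
            # Plain lon/lat average: a simplification that is adequate for small cells
            "lon": sum(p[0] for p in members) / len(members),
            "lat": sum(p[1] for p in members) / len(members),
        })
    return clusters
```

Grid clustering is fast but can split two close points that straddle a cell boundary, which is one reason radius-based approaches like Supercluster's are preferred in practice.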

[MapLibre GL JS](https://github.com/maplibre/maplibre-gl-js) is an open-source library for publishing maps on your websites or webview based apps. Fast displaying of maps is possible thanks to GPU-accelerated vector tile rendering.

[MapLibre Native](https://maplibre.org/) is a library for interactive vector tile maps on iOS, Android, and other platforms.

[Maplibre-rs](https://github.com/maplibre/maplibre-rs) is an experimental map renderer for web, mobile, and desktop.

[Prettymaps](https://github.com/marceloprates/prettymaps) is a small set of Python functions for drawing pretty maps from OpenStreetMap data. It is based on the osmnx, matplotlib, and shapely libraries.

[OrientDB](https://github.com/orientechnologies/orientdb) is an open source multi-model NoSQL DBMS with support for native graphs, documents, full-text search, reactivity, geospatial data, and object-oriented concepts.

[PgRouting](https://pgrouting.org/) is a tool that extends the PostGIS / PostgreSQL geospatial database to provide geospatial routing functionality.
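
pgRouting's `pgr_dijkstra` function computes shortest paths over an SQL edge query with `source`, `target`, and `cost` columns. To illustrate what such a query computes, the Python sketch below runs Dijkstra's algorithm over an in-memory list of directed `(source, target, cost)` edges. It is a stand-alone illustration, not a wrapper around pgRouting, and it only models the directed case.

```python
import heapq
from typing import Optional


def dijkstra(edges: list[tuple[int, int, float]], source: int,
             target: int) -> Optional[tuple[float, list[int]]]:
    """Shortest path over directed (source, target, cost) edges; returns (total_cost, node_path) or None."""
    graph: dict[int, list[tuple[int, float]]] = {}
    for u, v, cost in edges:
        graph.setdefault(u, []).append((v, cost))
    dist = {source: 0.0}
    prev: dict[int, int] = {}
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == target:
            # First time the target is popped, its distance is final; walk predecessors back
            path = [u]
            while path[-1] != source:
                path.append(prev[path[-1]])
            return d, path[::-1]
        if d > dist.get(u, float("inf")):
            continue  # stale heap entry superseded by a shorter distance
        for v, cost in graph.get(u, []):
            nd = d + cost
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))
    return None  # target unreachable from source
```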

[PostGIS Vector Tile Utils](https://github.com/mapbox/postgis-vt-util) is a set of PostgreSQL functions that are useful when creating vector tile sources.
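
When building vector tile sources in PostGIS, each tile query usually clips geometry to the tile's bounding box in Web Mercator (EPSG:3857) meters. The sketch below computes that envelope from `z/x/y` with the standard formula. It mirrors the idea behind such helper functions (and PostGIS's own `ST_TileEnvelope`) but is a stand-alone Python illustration, not part of PostGIS Vector Tile Utils.

```python
import math

# Half the width of the Web Mercator world in meters (pi times the WGS84 semi-major axis)
ORIGIN_SHIFT = math.pi * 6378137.0


def tile_bbox(z: int, x: int, y: int) -> tuple[float, float, float, float]:
    """Return (xmin, ymin, xmax, ymax) in EPSG:3857 meters for XYZ tile z/x/y (y counted from the top)."""
    n = 2 ** z
    if not (0 <= x < n and 0 <= y < n):
        raise ValueError("tile index out of range for this zoom level")
    size = 2.0 * ORIGIN_SHIFT / n  # tile width and height in meters
    xmin = -ORIGIN_SHIFT + x * size
    ymax = ORIGIN_SHIFT - y * size
    return xmin, ymax - size, xmin + size, ymax
```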

[pyGEDI](https://github.com/EduinHSERNA/pyGEDI) is a Python package focused on high performance, lower cognitive load, and cleaner, more transparent code for data extraction, analysis, processing, and visualization of Global Ecosystem Dynamics Investigation (GEDI) products.

[rGEDI](https://github.com/carlos-alberto-silva/rGEDI) is an R Package for NASA's Global Ecosystem Dynamics Investigation (GEDI) Data Visualization and Processing.