
https://github.com/youngwanLEE/centermask2

[CVPR 2020] CenterMask : Real-time Anchor-Free Instance Segmentation

anchor-free centermask cvpr2020 detectron2 instance-segmentation object-detection pytorch real-time vovnet vovnetv2

- Host: GitHub

- URL: https://github.com/youngwanLEE/centermask2

- Owner: youngwanLEE

- License: other

- Created: 2020-02-20T08:39:46.000Z (over 4 years ago)

- Default Branch: master

- Last Pushed: 2021-12-27T06:21:35.000Z (over 2 years ago)

- Last Synced: 2024-02-17T18:35:44.432Z (5 months ago)

- Topics: anchor-free, centermask, cvpr2020, detectron2, instance-segmentation, object-detection, pytorch, real-time, vovnet, vovnetv2

- Language: Python

- Homepage:

- Size: 109 KB

- Stars: 764

- Watchers: 16

- Forks: 159

- Open Issues: 34

Metadata Files:

- Readme: README.md

- License: LICENSE

Lists

- awesome-anchor-free-object-detection - "CenterMask: Real-Time Anchor-Free Instance Segmentation". (**[CVPR 2020](https://openaccess.thecvf.com/content_CVPR_2020/html/Lee_CenterMask_Real-Time_Anchor-Free_Instance_Segmentation_CVPR_2020_paper.html)**) (Frameworks)

- my-awesome-stars - youngwanLEE/centermask2 - CenterMask : Real-time Anchor-Free Instance Segmentation, in CVPR 2020 (Python)

README

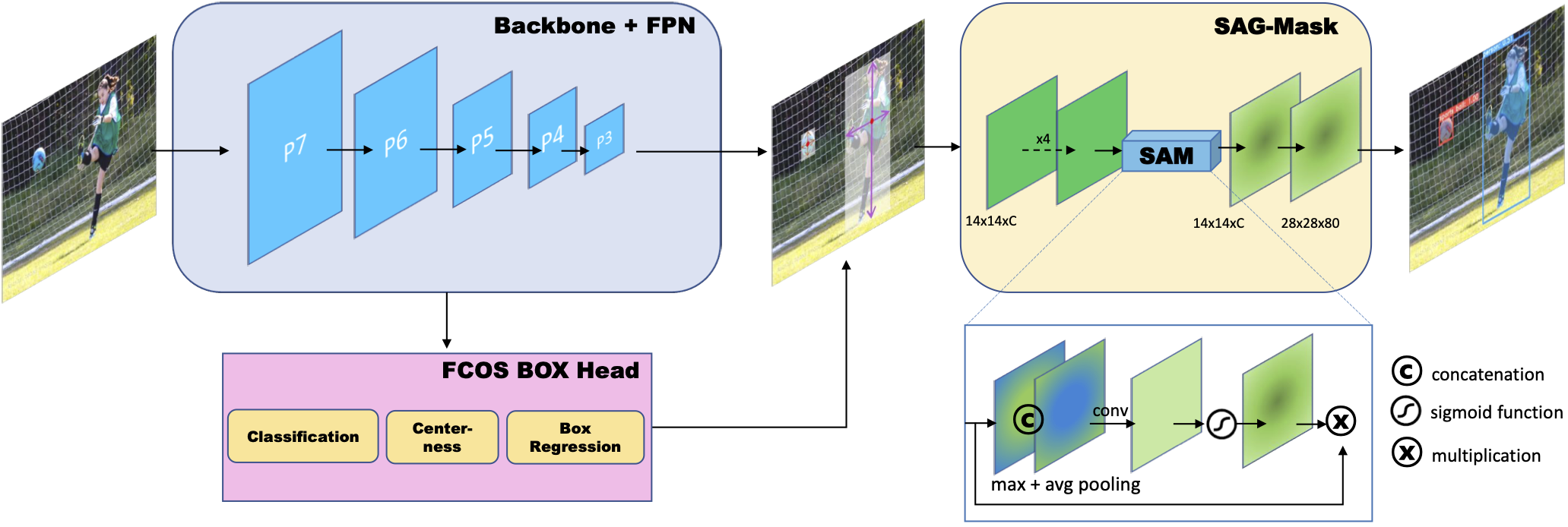

# [CenterMask](https://arxiv.org/abs/1911.06667)2

[[`CenterMask(original code)`](https://github.com/youngwanLEE/CenterMask)][[`vovnet-detectron2`](https://github.com/youngwanLEE/vovnet-detectron2)][[`arxiv`](https://arxiv.org/abs/1911.06667)] [[`BibTeX`](#CitingCenterMask)]

**CenterMask2** is an upgraded implementation built on [detectron2](https://github.com/facebookresearch/detectron2). It supersedes the original [CenterMask](https://github.com/youngwanLEE/CenterMask), which was based on [maskrcnn-benchmark](https://github.com/facebookresearch/maskrcnn-benchmark).

> **[CenterMask : Real-Time Anchor-Free Instance Segmentation](https://arxiv.org/abs/1911.06667) (CVPR 2020)**

> [Youngwan Lee](https://github.com/youngwanLEE) and Jongyoul Park

> Electronics and Telecommunications Research Institute (ETRI)

> pre-print : https://arxiv.org/abs/1911.06667

## Highlights

- ***First* anchor-free one-stage instance segmentation.** To the best of our knowledge, **CenterMask** is the first instance segmentation method built on top of anchor-free object detection (15/11/2019).

- **Toward Real-Time: CenterMask-Lite.** This work provides not only the large-scale CenterMask but also the lightweight CenterMask-Lite, which can run at real-time speed (> 30 fps).

- **State-of-the-art performance.** CenterMask outperforms Mask R-CNN, TensorMask, and ShapeMask at a much faster speed, and CenterMask-Lite models also surpass YOLACT and YOLACT++ by large margins.

- **Well-balanced (speed/accuracy) backbone network, VoVNetV2.** VoVNetV2 shows better performance and faster speed than ResNe(X)t or HRNet.

## Updates

- CenterMask2 has been released. (20/02/2020)

- Lightweight VoVNet has been released. (26/02/2020)

- Panoptic-CenterMask has been released. (31/03/2020)

- Code updated for compatibility with PyTorch 1.7 and the latest detectron2. (22/12/2020)

## Results on COCO val

### Note

We measure the inference time of all models with batch size 1 on the same V100 GPU machine.

- pytorch1.7.0

- CUDA 10.1

- cuDNN 7.3

- multi-scale augmentation

- Unless specified, no Test-Time Augmentation (TTA)

### CenterMask

|Method|Backbone|lr sched|inference time (s)|mask AP|box AP|download|
|:--------:|:--------:|:--:|:--:|:----:|:----:|:--------:|
|Mask R-CNN (detectron2)|R-50|3x|0.055|37.2|41.0|model \| metrics|
|Mask R-CNN (detectron2)|V2-39|3x|0.052|39.3|43.8|model \| metrics|
|CenterMask (maskrcnn-benchmark)|V2-39|3x|0.070|38.5|43.5|[link](https://github.com/youngwanLEE/CenterMask#coco-val2017-results)|
|**CenterMask2**|V2-39|3x|**0.050**|**39.7**|**44.2**|model \| metrics|
||
|Mask R-CNN (detectron2)|R-101|3x|0.070|38.6|42.9|model \| metrics|
|Mask R-CNN (detectron2)|V2-57|3x|0.058|39.7|44.2|model \| metrics|
|CenterMask (maskrcnn-benchmark)|V2-57|3x|0.076|39.4|44.6|[link](https://github.com/youngwanLEE/CenterMask#coco-val2017-results)|
|**CenterMask2**|V2-57|3x|**0.058**|**40.5**|**45.1**|model \| metrics|
||
|Mask R-CNN (detectron2)|X-101|3x|0.129|39.5|44.3|model \| metrics|
|Mask R-CNN (detectron2)|V2-99|3x|0.076|40.3|44.9|model \| metrics|
|CenterMask (maskrcnn-benchmark)|V2-99|3x|0.106|40.2|45.6|[link](https://github.com/youngwanLEE/CenterMask#coco-val2017-results)|
|**CenterMask2**|V2-99|3x|**0.077**|**41.4**|**46.0**|model \| metrics|
||
|**CenterMask2 (TTA)**|V2-99|3x|-|**42.5**|**48.6**|model \| metrics|

* TTA denotes Test-Time Augmentation (multi-scale test).

### CenterMask-Lite

|Method|Backbone|lr sched|inference time (s)|mask AP|box AP|download|
|:--------:|:--------:|:--:|:--:|:----:|:----:|:--------:|
|YOLACT550|R-50|4x|0.023|28.2|30.3|[link](https://github.com/dbolya/yolact)|
|CenterMask (maskrcnn-benchmark)|V-19|4x|0.023|32.4|35.9|[link](https://github.com/youngwanLEE/CenterMask#coco-val2017-results)|
|**CenterMask2-Lite**|V-19|4x|0.023|**32.8**|**35.9**|model \| metrics|
||
|YOLACT550|R-101|4x|0.030|28.2|30.3|[link](https://github.com/dbolya/yolact)|
|YOLACT550++|R-50|4x|0.029|34.1|-|[link](https://github.com/dbolya/yolact)|
|YOLACT550++|R-101|4x|0.036|34.6|-|[link](https://github.com/dbolya/yolact)|
|CenterMask (maskrcnn-benchmark)|V-39|4x|0.027|36.3|40.7|[link](https://github.com/youngwanLEE/CenterMask#coco-val2017-results)|
|**CenterMask2-Lite**|V-39|4x|0.028|**36.7**|**40.9**|model \| metrics|

* Note that the inference time is measured on a Titan Xp GPU for fair comparison with YOLACT.
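
To relate the inference times in the table to the "> 30 fps" real-time claim, the per-image latency can be inverted into frames per second. The snippet below is only a sketch: it assumes the inference time column is in seconds per image at batch size 1, and the helper name `fps` is illustrative, not part of the repository.

```python
def fps(seconds_per_image: float) -> float:
    """Convert a per-image latency in seconds to frames per second."""
    if seconds_per_image <= 0:
        raise ValueError("latency must be positive")
    return 1.0 / seconds_per_image


# Latencies copied from the CenterMask-Lite table (Titan Xp, batch size 1).
# Assumption: the values are seconds per image.
lite_latency = {
    "CenterMask2-Lite V-19": 0.023,
    "CenterMask2-Lite V-39": 0.028,
}

for name, latency in lite_latency.items():
    print(f"{name}: {fps(latency):.1f} fps")
```

Under that assumption, both CenterMask2-Lite entries come out above 30 fps.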

### Lightweight VoVNet backbone

|Method|Backbone|Params.|lr sched|inference time (s)|mask AP|box AP|download|
|:--------:|:--------:|:--:|:--:|:--:|:----:|:----:|:--------:|
|CenterMask2-Lite|MobileNetV2|3.5M|4x|0.021|27.2|29.8|model \| metrics|
||
|CenterMask2-Lite|V-19|11.2M|4x|0.023|32.8|35.9|model \| metrics|
|CenterMask2-Lite|V-19-**Slim**|3.1M|4x|0.021|29.8|32.5|model \| metrics|
|CenterMask2-Lite|V-19-**Slim**-**DW**|1.8M|4x|0.020|27.1|29.5|model \| metrics|

* _**DW** and **Slim** denote depthwise separable convolution and a thinner model with half the channel size, respectively._

* __Params.__ means the number of parameters of the backbone.

### Deformable VoVNet Backbone

|Method|Backbone|lr sched|inference time (s)|mask AP|box AP|download|
|:--------:|:--------:|:--:|:--:|:--:|:----:|:----:|
|CenterMask2|V2-39|3x|0.050|39.7|44.2|model \| metrics|
|CenterMask2|V2-39-DCN|3x|0.061|40.3|45.1|model \| metrics|
||
|CenterMask2|V2-57|3x|0.058|40.5|45.1|model \| metrics|
|CenterMask2|V2-57-DCN|3x|0.071|40.9|45.5|model \| metrics|
||
|CenterMask2|V2-99|3x|0.077|41.4|46.0|model \| metrics|
|CenterMask2|V2-99-DCN|3x|0.110|42.0|46.9|model \| metrics|

* _DCN denotes deformable convolutional networks v2. Note that we apply deformable convolutions from stage 3 to 5 in backbones._
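
The cost of adding DCN can be read off the table as extra latency against AP gain. The sketch below simply redoes that arithmetic on the table values; the helper `dcn_tradeoff` is a hypothetical name, and the latencies are assumed to be seconds per image.

```python
# (latency in s, mask AP, box AP) copied from the Deformable VoVNet table.
results = {
    "V2-39": {"base": (0.050, 39.7, 44.2), "dcn": (0.061, 40.3, 45.1)},
    "V2-57": {"base": (0.058, 40.5, 45.1), "dcn": (0.071, 40.9, 45.5)},
    "V2-99": {"base": (0.077, 41.4, 46.0), "dcn": (0.110, 42.0, 46.9)},
}


def dcn_tradeoff(base, dcn):
    """Return (extra latency in ms, mask AP gain, box AP gain) for the DCN variant."""
    extra_ms = (dcn[0] - base[0]) * 1000
    # Round to one decimal to hide floating-point noise in the differences.
    return (
        round(extra_ms, 1),
        round(dcn[1] - base[1], 1),
        round(dcn[2] - base[2], 1),
    )


for backbone, r in results.items():
    extra_ms, mask_gain, box_gain = dcn_tradeoff(r["base"], r["dcn"])
    print(f"{backbone}: +{extra_ms} ms, +{mask_gain} mask AP, +{box_gain} box AP")
```

On these numbers the mask AP gain stays between 0.4 and 0.6 for every backbone, while the added latency grows with backbone size.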

### Panoptic-CenterMask

|Method|Backbone|lr sched|inference time (s)|box AP|mask AP|PQ|download|
|:--------:|:--------:|:--:|:--:|:--:|:----:|:----:|:--------:|
|Panoptic-FPN|R-50|3x|0.063|40.0|36.5|41.5|model \| metrics|
|Panoptic-CenterMask|R-50|3x|0.063|41.4|37.3|42.0|model \| metrics|
|Panoptic-FPN|V-39|3x|0.063|42.8|38.5|43.4|model \| metrics|
|Panoptic-CenterMask|V-39|3x|0.066|43.4|39.0|43.7|model \| metrics|
||
|Panoptic-FPN|R-101|3x|0.078|42.4|38.5|43.0|model \| metrics|
|Panoptic-CenterMask|R-101|3x|0.076|43.5|39.0|43.6|model \| metrics|
|Panoptic-FPN|V-57|3x|0.070|43.4|39.2|44.3|model \| metrics|
|Panoptic-CenterMask|V-57|3x|0.071|43.9|39.6|44.5|model \| metrics|
||
|Panoptic-CenterMask|V-99|3x|0.091|45.1|40.6|45.4|model \| metrics|

## Installation

All you need to use centermask2 is [detectron2](https://github.com/facebookresearch/detectron2). It's easy!

Just install [detectron2](https://github.com/facebookresearch/detectron2) following [INSTALL.md](https://github.com/facebookresearch/detectron2/blob/master/INSTALL.md).

Prepare the COCO dataset following [these instructions](https://github.com/facebookresearch/detectron2/tree/master/datasets).

## Training

#### ImageNet Pretrained Models

We provide backbone weights pretrained on the ImageNet-1k dataset for detectron2.

* [MobileNet-V2](https://dl.dropbox.com/s/yduxbc13s3ip6qn/mobilenet_v2_detectron2.pth)

* [VoVNetV2-19-Slim-DW](https://dl.dropbox.com/s/f3s7ospitqoals1/vovnet19_ese_slim_dw_detectron2.pth)

* [VoVNetV2-19-Slim](https://dl.dropbox.com/s/8h5ybmi4ftbcom0/vovnet19_ese_slim_detectron2.pth)

* [VoVNetV2-19](https://dl.dropbox.com/s/rptgw6stppbiw1u/vovnet19_ese_detectron2.pth)

* [VoVNetV2-39](https://dl.dropbox.com/s/q98pypf96rhtd8y/vovnet39_ese_detectron2.pth)

* [VoVNetV2-57](https://dl.dropbox.com/s/8xl0cb3jj51f45a/vovnet57_ese_detectron2.pth)

* [VoVNetV2-99](https://dl.dropbox.com/s/1mlv31coewx8trd/vovnet99_ese_detectron2.pth)

To train a model, run

```bash
cd centermask2
python train_net.py --config-file "configs/"
```

For example, to launch CenterMask training with the VoVNetV2-39 backbone on 8 GPUs, run:

```bash
cd centermask2
python train_net.py --config-file "configs/centermask/centermask_V_39_eSE_FPN_ms_3x.yaml" --num-gpus 8
```

## Evaluation

Model evaluation works the same way, with these additional arguments:

* `--num-gpus 1` to run inference with batch size 1

* `--eval-only` to evaluate without training

* `MODEL.WEIGHTS path/to/the/model.pth` to select the checkpoint to evaluate

```bash
cd centermask2
# Download the pretrained CenterMask2 V-39 checkpoint.
wget https://dl.dropbox.com/s/tczecsdxt10uai5/centermask2-V-39-eSE-FPN-ms-3x.pth
# Evaluate it on a single GPU.
python train_net.py --config-file "configs/centermask/centermask_V_39_eSE_FPN_ms_3x.yaml" --num-gpus 1 --eval-only MODEL.WEIGHTS centermask2-V-39-eSE-FPN-ms-3x.pth
```
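
When training and evaluation runs are scripted, the same command lines can be assembled in Python. This is a minimal sketch that uses only the flags shown in this README (`--config-file`, `--num-gpus`, `--eval-only`, and the trailing `MODEL.WEIGHTS` override). The helper `build_command` is hypothetical and not part of centermask2.

```python
import shlex


def build_command(config_file, num_gpus=1, eval_only=False, weights=None):
    """Assemble the argument list for train_net.py (hypothetical helper)."""
    cmd = [
        "python", "train_net.py",
        "--config-file", config_file,
        "--num-gpus", str(num_gpus),
    ]
    if eval_only:
        cmd.append("--eval-only")
    if weights is not None:
        # Config overrides go last, as KEY VALUE pairs.
        cmd += ["MODEL.WEIGHTS", weights]
    return cmd


config = "configs/centermask/centermask_V_39_eSE_FPN_ms_3x.yaml"

# Training on 8 GPUs, as in the Training section.
train_cmd = build_command(config, num_gpus=8)

# Single-GPU evaluation of the downloaded checkpoint.
eval_cmd = build_command(
    config,
    num_gpus=1,
    eval_only=True,
    weights="centermask2-V-39-eSE-FPN-ms-3x.pth",
)

print(shlex.join(train_cmd))
print(shlex.join(eval_cmd))
```

Either list can then be passed to `subprocess.run(cmd, check=True, cwd="centermask2")` to launch the run from the repository directory.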

## TODO

- [x] Adding Lightweight models

- [ ] Applying CenterMask for PointRend or Panoptic-FPN.

## Citing CenterMask

If you use VoVNet or CenterMask, please use the following BibTeX entries.

```BibTeX
@inproceedings{lee2019energy,
  title = {An Energy and GPU-Computation Efficient Backbone Network for Real-Time Object Detection},
  author = {Lee, Youngwan and Hwang, Joong-won and Lee, Sangrok and Bae, Yuseok and Park, Jongyoul},
  booktitle = {Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops},
  year = {2019}
}

@inproceedings{lee2020centermask,
  title = {CenterMask: Real-Time Anchor-Free Instance Segmentation},
  author = {Lee, Youngwan and Park, Jongyoul},
  booktitle = {CVPR},
  year = {2020}
}
```

## Special Thanks to

[mask scoring for detectron2](https://github.com/lsrock1/maskscoring_rcnn.detectron2) by [Sangrok Lee](https://github.com/lsrock1)

[FCOS_for_detectron2](https://github.com/aim-uofa/adet) by the AdelaiDet team.