https://gafniguy.github.io/4D-Facial-Avatars/

Dynamic Neural Radiance Fields for Monocular 4D Facial Avatar Reconstruction

- Host: GitHub

- URL: https://gafniguy.github.io/4D-Facial-Avatars/

- Owner: gafniguy

- Created: 2020-11-26T14:09:10.000Z

- Default Branch: main

- Last Pushed: 2024-04-29T13:53:26.000Z

- Last Synced: 2024-08-01T04:02:39.017Z

- Language: Python

- Size: 52.9 MB

- Stars: 671

- Watchers: 29

- Forks: 67

- Open Issues: 21

Metadata Files:

- Readme: README.md

Awesome Lists containing this project

- awesome-NeRF - Dynamic Neural Radiance Fields for Monocular 4D Facial Avatar Reconstruction (Papers)

README

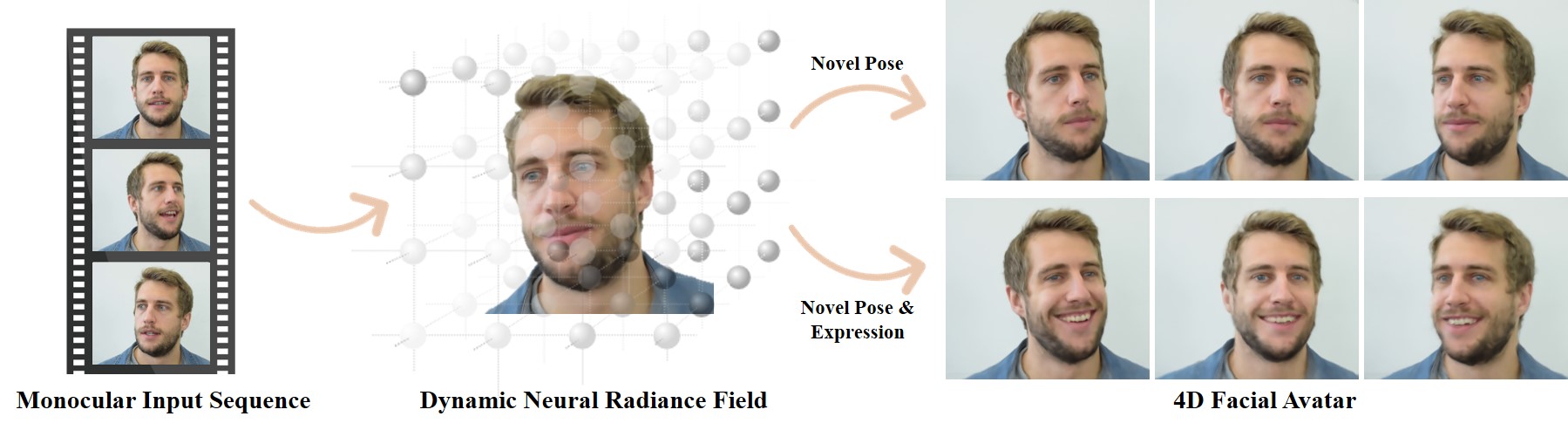

## NeRFace: Dynamic Neural Radiance Fields for Monocular 4D Facial Avatar Reconstruction [CVPR 2021 Oral Presentation]

*Guy Gafni<sup>1</sup>, Justus Thies<sup>1</sup>, Michael Zollhöfer<sup>2</sup>, Matthias Nießner<sup>1</sup>*

<sup>1</sup>Technical University of Munich, <sup>2</sup>Facebook Reality Labs

Project Page & Video: https://gafniguy.github.io/4D-Facial-Avatars/

**If you find our work useful, please include the following citation:**

```
@InProceedings{Gafni_2021_CVPR,
    author    = {Gafni, Guy and Thies, Justus and Zollh{\"o}fer, Michael and Nie{\ss}ner, Matthias},
    title     = {Dynamic Neural Radiance Fields for Monocular 4D Facial Avatar Reconstruction},
    booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
    month     = {June},
    year      = {2021},
    pages     = {8649-8658}
}
```

**Dataset and License**

The dataset is available for download [here](https://kaldir.vc.in.tum.de/nerface/nerface_dataset.zip) or from [here](https://syncandshare.lrz.de/getlink/fiFbKE8dEDWYENSr75L9WG/nerface_dataset.zip). Please do not use it for commercial purposes, and respect the license included in the zip file. (The material in this repository is licensed under an Attribution-NonCommercial-ShareAlike 4.0 International license.)

If you make use of this dataset or code, please cite our paper.

The MIT License applies to the code.

**Code Structure**

The NeRF code is heavily based on the nerf-pytorch repository by Krishna Murthy. Thank you!

Installation and usage:

The project originally used PyTorch 1.7.1, but it should also run with PyTorch 1.9.0 (CUDA 11).

If you get any errors related to `torchsearchsorted`, skip installing this module and comment out its imports. Its functionality is implemented natively in PyTorch as `torch.searchsorted`.

The following two lines are interchangeable:

```
# inds = torchsearchsorted.searchsorted(cdf, u, side="right")  # requires compiling torchsearchsorted
inds = torch.searchsorted(cdf.detach(), u, right=True)  # native to PyTorch
```
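For context, a lookup like this is typically the core of NeRF's hierarchical (inverse transform) sampling, where extra points along each ray are drawn from the piecewise-constant distribution given by the coarse network's weights. The function below is a minimal sketch of that step in the style of common PyTorch NeRF ports; the name `sample_pdf` and its tensor layout are assumptions, not necessarily how this repository structures its code. It needs a PyTorch version that provides `torch.searchsorted`, which includes the 1.7.1 and 1.9.0 versions mentioned above.

```python
import torch


def sample_pdf(bins: torch.Tensor, weights: torch.Tensor, num_samples: int) -> torch.Tensor:
    """Draw samples from the piecewise-constant PDF given by `weights` over `bins`.

    bins:    (..., N + 1) bin edges along each ray
    weights: (..., N) non-negative weight per bin
    returns: (..., num_samples) sample positions
    """
    # Normalize into a PDF and accumulate a CDF that starts at 0.
    weights = weights + 1e-5  # keeps all-zero rays from dividing by zero
    pdf = weights / weights.sum(dim=-1, keepdim=True)
    cdf = torch.cumsum(pdf, dim=-1)
    cdf = torch.cat([torch.zeros_like(cdf[..., :1]), cdf], dim=-1)  # (..., N + 1)

    # Uniform samples in [0, 1), one set per ray.
    u = torch.rand(*cdf.shape[:-1], num_samples, device=cdf.device)

    # Native replacement for torchsearchsorted.searchsorted(cdf, u, side="right"):
    # inds[i] is the first position with cdf > u, so cdf[inds - 1] <= u.
    inds = torch.searchsorted(cdf.detach(), u, right=True)
    below = torch.clamp(inds - 1, min=0)
    above = torch.clamp(inds, max=cdf.shape[-1] - 1)

    # CDF values and bin edges that bracket each sample.
    cdf_lo = torch.gather(cdf, -1, below)
    cdf_hi = torch.gather(cdf, -1, above)
    bins_lo = torch.gather(bins, -1, below)
    bins_hi = torch.gather(bins, -1, above)

    # Linear interpolation inside the bracketing bin; guard near-empty bins.
    denom = cdf_hi - cdf_lo
    denom = torch.where(denom < 1e-5, torch.ones_like(denom), denom)
    t = (u - cdf_lo) / denom
    return bins_lo + t * (bins_hi - bins_lo)
```

With weights concentrated in one bin, almost all samples land inside that bin's edges, which is the point of the fine sampling pass.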

The main training and testing scripts are `train_transformed_rays.py` and `eval_transformed_rays.py`, respectively. Both live in the main working folder, `nerface_code/nerf-pytorch/`.

The training script expects a path to a config file, e.g.:

`python train_transformed_rays.py --config ./path_to_data/person_1/person_1_config.yml`

The eval script additionally takes a path to a model checkpoint and a folder in which to save the rendered images:

`python eval_transformed_rays.py --config ./path_to_data/person_1/person_1_config.yml --checkpoint /path/to/checkpoint/checkpoint400000.ckpt --savedir ./renders/person_1_rendered_frames`

The config file must point to the dataset through its `dataset.basedir` entry. Download the dataset .zip linked above and extract it into the `nerf-pytorch` directory.

If you have your own video sequence with per-frame tracking, see the main function of `real_to_nerf.py` for how I create the JSON files used for training. This does not include the tracking code itself, which unfortunately I cannot publish.
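To give a rough idea of what such a conversion involves, the sketch below serializes per-frame tracking results (image path, 4x4 camera pose, expression coefficients) into a JSON string. The layout is HYPOTHETICAL: the keys mirror the original NeRF `transforms_*.json` files (`camera_angle_x`, `frames`, `file_path`, `transform_matrix`) plus an assumed per-frame `expression` field, and `make_transforms_json` is an illustrative name. Check `real_to_nerf.py` for the format this code actually expects.

```python
import json
import math
from typing import Sequence


def make_transforms_json(
    image_paths: Sequence[str],
    poses: Sequence[Sequence[Sequence[float]]],
    expressions: Sequence[Sequence[float]],
    focal: float,
    width: int,
) -> str:
    """Serialize per-frame tracking into a NeRF-style JSON string (hypothetical keys)."""
    if not (len(image_paths) == len(poses) == len(expressions)):
        raise ValueError("need exactly one pose and one expression vector per frame")
    frames = []
    for path, pose, expr in zip(image_paths, poses, expressions):
        if len(pose) != 4 or any(len(row) != 4 for row in pose):
            raise ValueError(f"{path}: pose must be a 4x4 camera-to-world matrix")
        frames.append({
            "file_path": path,
            "transform_matrix": [[float(v) for v in row] for row in pose],
            "expression": [float(v) for v in expr],  # assumed field name
        })
    # Horizontal field of view of a pinhole camera whose focal length is in pixels.
    camera_angle_x = 2.0 * math.atan(0.5 * width / focal)
    return json.dumps({"camera_angle_x": camera_angle_x, "frames": frames}, indent=2)
```

Validating the pose shape up front catches a common tracking-export mistake (3x4 matrices) before it turns into a confusing error during training.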

Don't hesitate to contact [guy.gafni at tum.de] with any further questions, or open an issue here.

Code for the webpage is borrowed from the ScanRefer project.