https://github.com/0xdea/oneiromancer

Reverse engineering assistant that uses a locally running LLM to aid with pseudo-code analysis.

aidapal ollama pseudo-code reverse-engineering vuln-dev

- Host: GitHub

- URL: https://github.com/0xdea/oneiromancer

- Owner: 0xdea

- License: mit

- Created: 2025-02-19T09:30:51.000Z (12 months ago)

- Default Branch: master

- Last Pushed: 2025-06-27T14:44:04.000Z (8 months ago)

- Last Synced: 2025-07-27T23:23:45.199Z (7 months ago)

- Topics: aidapal, ollama, pseudo-code, reverse-engineering, vuln-dev

- Language: Rust

- Homepage: https://ollama.com

- Size: 1.92 MB

- Stars: 80

- Watchers: 2

- Forks: 10

- Open Issues: 0

Metadata Files:

- Readme: README.md

- Changelog: CHANGELOG.md

- License: LICENSE

Awesome Lists containing this project

- fucking-awesome-rust - 0xdea/oneiromancer - Reverse engineering assistant that uses a locally running LLM to aid with source code analysis (Applications / Security tools)

- awesome-rust - 0xdea/oneiromancer - Reverse engineering assistant that uses a locally running LLM to aid with source code analysis (Applications / Security tools)

- awesome-rust-with-stars - 0xdea/oneiromancer - 01-30 | (Applications / Security tools)

README

# oneiromancer

> "A large fraction of the flaws in software development are due to programmers not fully understanding all the possible states their code may execute in." -- John Carmack

> "Can it run Doom?" --

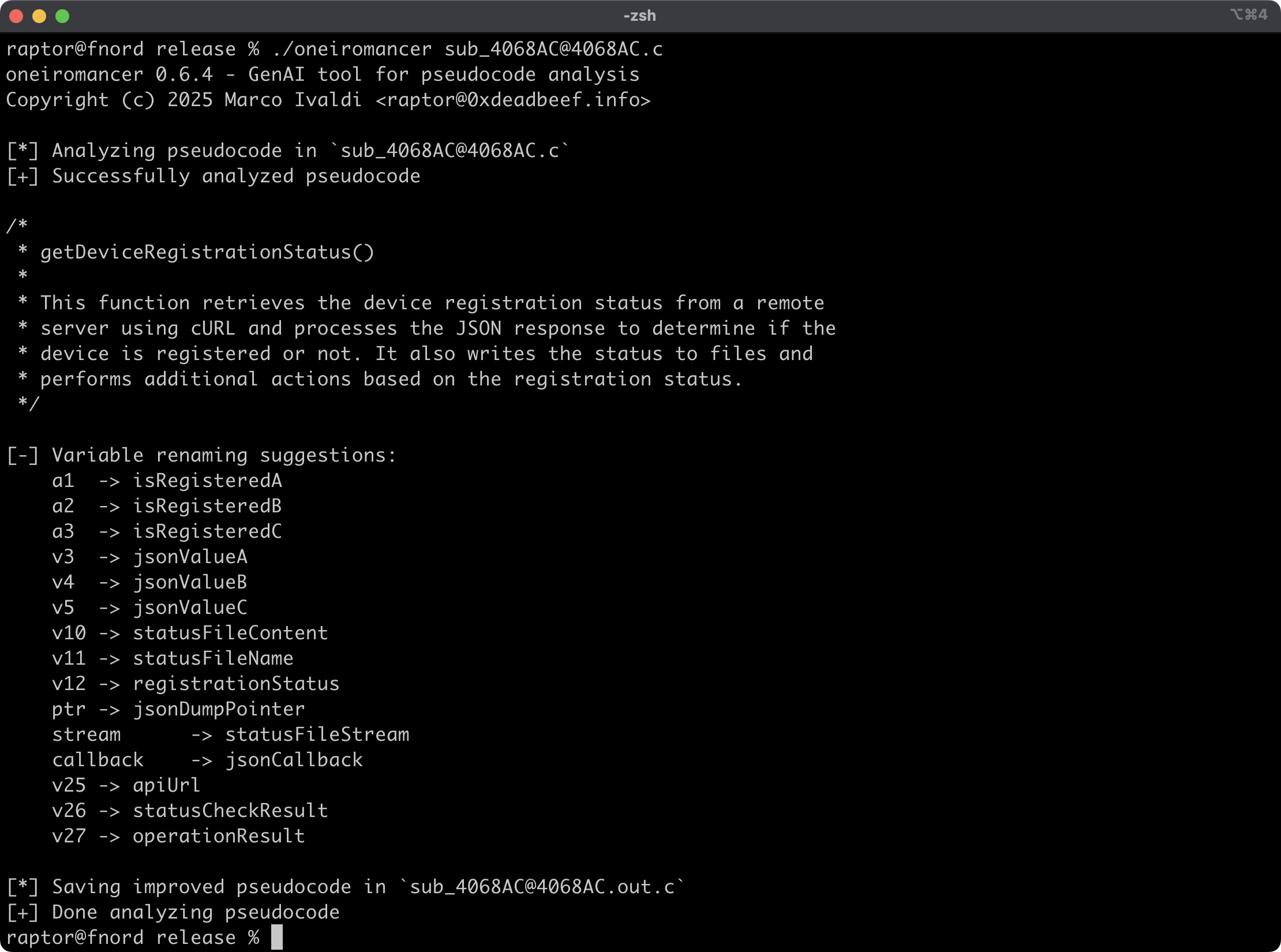

Oneiromancer is a reverse engineering assistant that uses a locally running LLM that has been fine-tuned for Hex-Rays pseudocode to aid with code analysis. It can analyze a function or a smaller code snippet, returning a high-level description of what the code does, a recommended name for the function, and variable renaming suggestions, based on the results of the analysis.

## Features

* Cross-platform support for the fine-tuned LLM [aidapal](https://huggingface.co/AverageBusinessUser/aidapal) based on `mistral-7b-instruct`.

* Easy integration with the pseudocode extractor [haruspex](https://github.com/0xdea/haruspex) and popular IDEs.

* Code description, recommended function name, and variable renaming suggestions are printed on the terminal.

* Improved pseudocode of each analyzed function is saved in a separate file for easy inspection.

* External crates can invoke `analyze_code` or `analyze_file` to analyze pseudocode and then process analysis results.
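To give a rough idea of what processing analysis results might involve, here is a minimal sketch that turns a description, a suggested function name, and variable renaming suggestions into "improved" pseudocode. The `Analysis` struct and the `improve_pseudocode` and `flush` functions are hypothetical stand-ins, not oneiromancer's public API, whose actual types and signatures may differ. Renames are applied to whole identifiers only, so renaming `a1` leaves `a10` untouched.

```rust
use std::collections::HashMap;

/// Hypothetical container for the three kinds of output described above.
/// This is NOT oneiromancer's actual result type.
struct Analysis {
    description: String,
    function_name: String,
    /// Maps original variable names to suggested names.
    renames: HashMap<String, String>,
}

/// Prepends a comment with the suggested name and description, then renames
/// variables in a single pass, matching whole identifiers only.
fn improve_pseudocode(code: &str, analysis: &Analysis) -> String {
    let mut out = format!(
        "/*\n * {}\n *\n * {}\n */\n\n",
        analysis.function_name, analysis.description
    );
    let mut ident = String::new();
    for ch in code.chars() {
        if ch.is_ascii_alphanumeric() || ch == '_' {
            ident.push(ch); // still inside an identifier (or number literal)
        } else {
            flush(&mut out, &mut ident, &analysis.renames);
            out.push(ch);
        }
    }
    flush(&mut out, &mut ident, &analysis.renames);
    out
}

/// Emits the pending token, substituting it if a rename was suggested for it.
fn flush(out: &mut String, ident: &mut String, renames: &HashMap<String, String>) {
    match renames.get(ident.as_str()) {
        Some(new_name) => out.push_str(new_name),
        None => out.push_str(ident),
    }
    ident.clear();
}

fn main() {
    let code = "int sub_401000(char *a1, int a10)\n{\n  return strlen(a1) + a10;\n}\n";
    let analysis = Analysis {
        description: "Returns the length of a string plus an offset.".to_string(),
        function_name: "strlen_plus_offset".to_string(),
        // Only `a1` is renamed; `a10` must stay as-is.
        renames: HashMap::from([("a1".to_string(), "str".to_string())]),
    };
    print!("{}", improve_pseudocode(code, &analysis));
}
```

Matching whole tokens in a single pass, rather than chaining string replacements, also avoids accidental double renames when one suggested name happens to equal another original name.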

## Blog post

## See also

## Installing

The easiest way to get the latest release is via [crates.io](https://crates.io/crates/oneiromancer):

```sh
cargo install oneiromancer
```

To install as a library, run the following command in your project directory:

```sh
cargo add oneiromancer
```

## Compiling

Alternatively, you can build from [source](https://github.com/0xdea/oneiromancer):

```sh
git clone https://github.com/0xdea/oneiromancer
cd oneiromancer
cargo build --release
```

## Configuration

1. Download and install [Ollama](https://ollama.com/).

2. Download the fine-tuned weights and the Ollama modelfile from [Hugging Face](https://huggingface.co/):

```sh
wget https://huggingface.co/AverageBusinessUser/aidapal/resolve/main/aidapal-8k.Q4_K_M.gguf
wget https://huggingface.co/AverageBusinessUser/aidapal/resolve/main/aidapal.modelfile
```

3. Configure Ollama by running the following commands within the directory in which you downloaded the files:

```sh
ollama create aidapal -f aidapal.modelfile
ollama list
```
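Before running oneiromancer, it may help to confirm that the model was registered. The sketch below runs `ollama list` and looks for `aidapal` in the first column, assuming the usual tabular output (a header row followed by one `name:tag` entry per line). The `has_model` helper is hypothetical.

```rust
use std::process::Command;

/// Returns true if `model` (with any tag, e.g. `aidapal:latest`) appears in
/// the first column of `ollama list` output. Assumes the first line is a header.
fn has_model(list_output: &str, model: &str) -> bool {
    list_output
        .lines()
        .skip(1)
        .filter_map(|line| line.split_whitespace().next())
        .any(|name| name.split(':').next() == Some(model))
}

fn main() {
    match Command::new("ollama").arg("list").output() {
        Ok(out) if out.status.success() => {
            let stdout = String::from_utf8_lossy(&out.stdout);
            if has_model(&stdout, "aidapal") {
                println!("aidapal model is available");
            } else {
                println!("aidapal model not found; run `ollama create aidapal -f aidapal.modelfile`");
            }
        }
        Ok(out) => eprintln!("`ollama list` failed with status {}", out.status),
        Err(err) => eprintln!("could not run ollama: {err}"),
    }
}
```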

## Usage

1. Run oneiromancer as follows:

```sh
export OLLAMA_BASEURL=custom_baseurl # if not set, the default will be used
export OLLAMA_MODEL=custom_model # if not set, the default will be used
oneiromancer .c
```
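The environment-variable fallback described above can be sketched as follows. The default values here (`http://127.0.0.1:11434`, Ollama's standard local address, and `aidapal`, the model name created during configuration) are assumptions rather than values taken from oneiromancer's source, as is the use of Ollama's `/api/generate` endpoint. The `setting` and `generate_endpoint` helpers are hypothetical.

```rust
use std::env;

// Assumed defaults: Ollama's standard local address and the model name
// created with `ollama create aidapal`. Not taken from oneiromancer's source.
const DEFAULT_BASEURL: &str = "http://127.0.0.1:11434";
const DEFAULT_MODEL: &str = "aidapal";

/// Returns the value of `var` as provided by `lookup`, or `default` if the
/// variable is unset or empty. `lookup` is injected so the logic is testable.
fn setting(lookup: impl Fn(&str) -> Option<String>, var: &str, default: &str) -> String {
    lookup(var)
        .filter(|value| !value.is_empty())
        .unwrap_or_else(|| default.to_string())
}

/// Builds the URL of Ollama's `/api/generate` endpoint from a base URL,
/// tolerating a trailing slash.
fn generate_endpoint(baseurl: &str) -> String {
    format!("{}/api/generate", baseurl.trim_end_matches('/'))
}

fn main() {
    let from_env = |var: &str| env::var(var).ok();
    let baseurl = setting(&from_env, "OLLAMA_BASEURL", DEFAULT_BASEURL);
    let model = setting(&from_env, "OLLAMA_MODEL", DEFAULT_MODEL);
    println!("querying model {model} at {}", generate_endpoint(&baseurl));
}
```

Injecting the lookup function instead of calling `std::env::var` directly keeps the fallback logic testable without mutating the process environment.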

2. Find the extracted pseudocode of each decompiled function in `.out.c`:

```sh
vim .out.c
code .out.c
```
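The output file name is derived from the input file name. A minimal sketch, assuming the naming scheme is simply "replace the input's extension with `.out.c`" (inferred from the suffix above; `output_path` and `save_improved` are hypothetical helpers):

```rust
use std::fs;
use std::io;
use std::path::{Path, PathBuf};

/// Derives the output path for an input file, e.g. `target.c` -> `target.out.c`.
/// Assumed naming scheme, inferred from the documented `.out.c` suffix.
fn output_path(input: &Path) -> PathBuf {
    input.with_extension("out.c")
}

/// Writes the improved pseudocode next to the input file and returns its path.
fn save_improved(input: &Path, improved: &str) -> io::Result<PathBuf> {
    let path = output_path(input);
    fs::write(&path, improved)?;
    Ok(path)
}

fn main() {
    // Only derives the path; call `save_improved` to actually write the file.
    println!("{}", output_path(Path::new("target.c")).display());
}
```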

*Note: for best results, submit only one function at a time to the LLM for analysis.*

## Tested on

* Apple macOS Sequoia 15.2 with Ollama 0.5.11

* Ubuntu Linux 24.04.2 LTS with Ollama 0.5.11

* Microsoft Windows 11 23H2 with Ollama 0.5.11

## Changelog

* [CHANGELOG.md](CHANGELOG.md)

## Credits

* Chris Bellows (@AverageBusinessUser) at Atredis Partners for his fine-tuned LLM `aidapal` <3

## TODO

* Improve output file handling with versioning and/or an output directory.

* Implement other features of the IDAPython `aidapal` IDA Pro plugin (e.g., context).

* Integrate with [haruspex](https://github.com/0xdea/haruspex) and [idalib](https://github.com/binarly-io/idalib).

* Use custom types in the public API and implement a provider abstraction.

* Implement a "minority report" protocol (i.e., make three queries and select the best responses).

* Investigate other use cases for the `aidapal` LLM and implement a modular architecture to plug in custom LLMs.
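Two of the TODO items, a provider abstraction and a "minority report" protocol, can be illustrated together. The sketch below is purely hypothetical: the `Provider` trait, the `ScriptedProvider` stub, and `minority_report` are not part of oneiromancer, and majority voting over three answers is only one possible way to "select the best responses".

```rust
use std::cell::RefCell;
use std::collections::HashMap;

/// Hypothetical provider abstraction; a real implementation might wrap an
/// HTTP call to a local Ollama instance.
trait Provider {
    /// Returns a suggested function name for the given pseudocode.
    fn suggest_name(&self, pseudocode: &str) -> String;
}

/// Runs three queries and keeps the most frequent answer, breaking ties in
/// favor of the answer returned first.
fn minority_report(provider: &dyn Provider, pseudocode: &str) -> String {
    let answers: Vec<String> = (0..3).map(|_| provider.suggest_name(pseudocode)).collect();
    let mut counts: HashMap<&str, usize> = HashMap::new();
    for answer in &answers {
        *counts.entry(answer.as_str()).or_insert(0) += 1;
    }
    // Iterate in query order so that ties resolve to the earliest answer.
    let mut best = answers[0].as_str();
    for answer in &answers {
        if counts[answer.as_str()] > counts[best] {
            best = answer.as_str();
        }
    }
    best.to_string()
}

/// Stub provider that returns scripted answers in order (for demonstration).
struct ScriptedProvider {
    answers: RefCell<Vec<String>>,
}

impl Provider for ScriptedProvider {
    fn suggest_name(&self, _pseudocode: &str) -> String {
        self.answers.borrow_mut().remove(0)
    }
}

fn main() {
    let provider = ScriptedProvider {
        answers: RefCell::new(vec![
            "parse_header".into(),
            "read_config".into(),
            "parse_header".into(),
        ]),
    };
    println!("{}", minority_report(&provider, "int sub_401000(char *a1) { ... }"));
}
```

Keeping the voting logic behind a trait object means the same selection code could, in principle, be reused with any LLM backend that implements `Provider`.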