https://github.com/AIVFI/Video-Frame-Interpolation-Rankings-and-Video-Deblurring-Rankings

Rankings include: ABME AdaFNIO ALANET AMT BiT BVFI CDFI CtxSyn DBVI DeMFI DQBC DRVI EAFI EBME EDC EDENVFI EDSC EMA-VFI FGDCN FILM FLAVR H-VFI IFRNet IQ-VFI JNMR LADDER M2M MA-GCSPA NCM PerVFI PRF ProBoost-Net RIFE RN-VFI SoftSplat SSR ST-MFNet Swin-VFI TDPNet TTVFI UGFI UPR-Net UTI-VFI VFIformer VFIFT VFIMamba VFIT VIDUE VRT


artificial-intelligence computer-vision deblurring deep-learning frame-interpolation interpolation low-level-vision machine-learning motion-blur motion-deblurring ncnn restoration rife tensorrt vapoursynth video-deblurring video-frame-interpolation video-interpolation video-processing video-restoration

Last synced: 3 months ago


- Host: GitHub

- URL: https://github.com/AIVFI/Video-Frame-Interpolation-Rankings-and-Video-Deblurring-Rankings

- Owner: AIVFI

- Created: 2022-09-01T19:39:49.000Z (almost 3 years ago)

- Default Branch: main

- Last Pushed: 2024-07-11T14:54:32.000Z (11 months ago)

- Last Synced: 2024-07-30T18:28:44.566Z (11 months ago)

- Topics: artificial-intelligence, computer-vision, deblurring, deep-learning, frame-interpolation, interpolation, low-level-vision, machine-learning, motion-blur, motion-deblurring, ncnn, restoration, rife, tensorrt, vapoursynth, video-deblurring, video-frame-interpolation, video-interpolation, video-processing, video-restoration

- Homepage:

- Size: 6.98 MB

- Stars: 92

- Watchers: 9

- Forks: 0

- Open Issues: 1


Metadata Files:

- Readme: README.md


README

# Video Frame Interpolation Rankings<br>and Video Deblurring Rankings

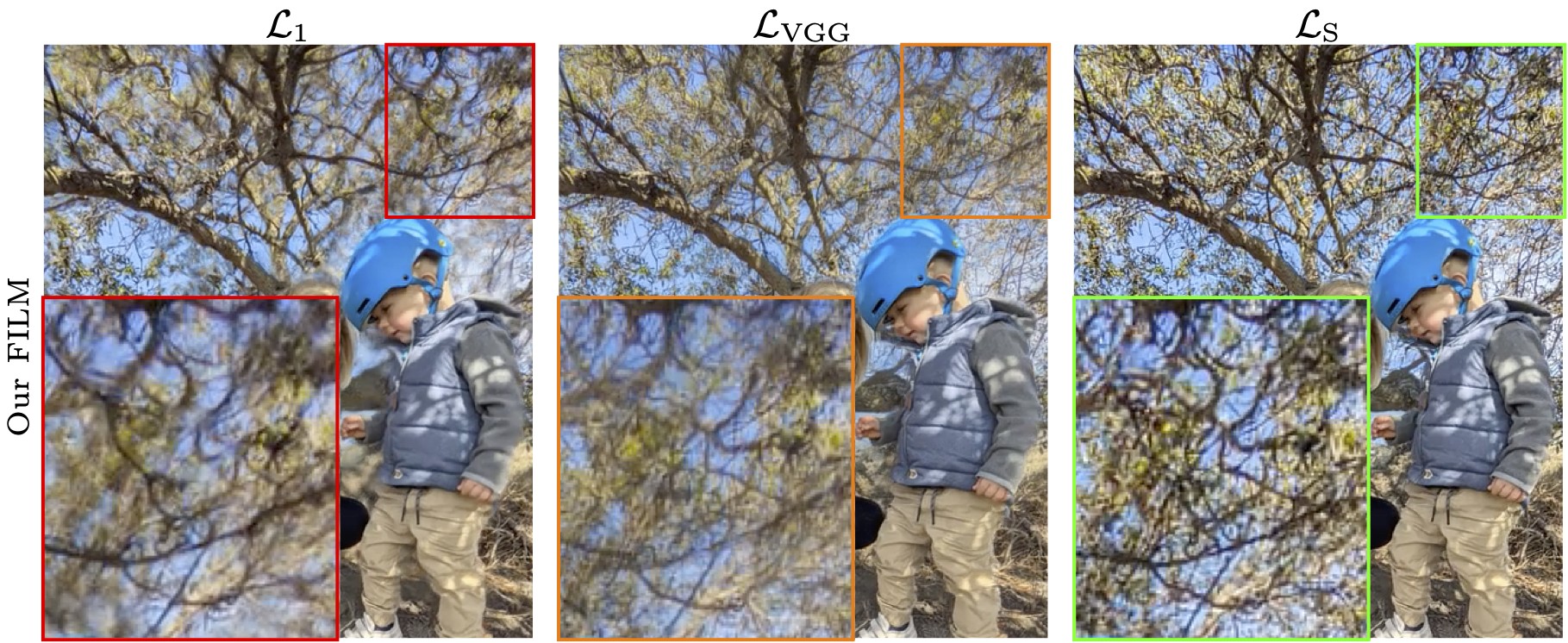

Researchers! Please develop joint video deblurring and frame interpolation models, incorporate [InterpAny-Clearer](https://github.com/zzh-tech/InterpAny-Clearer) in your models, and train at least one of your models with **Style loss**, also called Gram matrix loss (the best perceptual loss function):

Source: FILM - Loss Functions Ablation https://film-net.github.io/

Source: MoSt-DSA - Loss Function Comparison https://arxiv.org/html/2407.07078
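For readers unfamiliar with the term, the following is a minimal sketch of what a Gram matrix (style) loss computes for a single feature layer. It is an illustration under stated assumptions, not the implementation used by FILM or MoSt-DSA: the feature maps are assumed to come from some pretrained network (not shown here), the normalization by `channels * positions` is one common choice among several, and the function names `gram_matrix` and `style_loss` are hypothetical.

```python
def gram_matrix(features):
    """Gram matrix of a feature map given as C channels of N flattened values."""
    channels = len(features)
    positions = len(features[0])
    # Normalization is an assumption; papers use different scaling factors.
    norm = channels * positions
    return [
        [sum(a * b for a, b in zip(features[i], features[j])) / norm
         for j in range(channels)]
        for i in range(channels)
    ]


def style_loss(pred_features, target_features):
    """Mean squared difference between the two Gram matrices (one layer only)."""
    g_pred = gram_matrix(pred_features)
    g_target = gram_matrix(target_features)
    channels = len(g_pred)
    total = sum(
        (g_pred[i][j] - g_target[i][j]) ** 2
        for i in range(channels)
        for j in range(channels)
    )
    return total / (channels * channels)


# Toy 2-channel feature maps with 3 spatial positions each (hypothetical values).
pred = [[1.0, 0.0, 2.0], [0.0, 1.0, 1.0]]
target = [[1.0, 0.0, 2.0], [0.0, 1.0, 1.0]]
print(style_loss(pred, target))  # identical features give 0.0
```

Because the Gram matrix sums over spatial positions, the loss compares channel co-activation statistics rather than pixel-aligned values. In practice such a loss is usually summed over several feature layers and combined with other loss terms.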

## List of Rankings

Each ranking includes only the best model for one method.

The rankings exclude all event-based and spike-guided models.
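As a rough illustration of the "best model per method" rule combined with the score thresholds that appear in the ranking titles (for example `>=36dB`), here is a small sketch. The function name `build_ranking`, the tuple layout, and all example entries are hypothetical and are not taken from the rankings. Tied ranks and the event-based exclusion are not handled.

```python
def build_ranking(results, threshold, higher_is_better=True):
    """Keep the best-scoring model per method, then apply the eligibility threshold.

    results: iterable of (method, model, score) tuples.
    """
    best = {}
    for method, model, score in results:
        current = best.get(method)
        if current is None:
            best[method] = (model, score)
        elif higher_is_better and score > current[1]:
            best[method] = (model, score)
        elif not higher_is_better and score < current[1]:
            best[method] = (model, score)

    if higher_is_better:
        ranked = [entry for entry in best.values() if entry[1] >= threshold]
    else:
        ranked = [entry for entry in best.values() if entry[1] <= threshold]

    # Best score first: descending for PSNR-like metrics, ascending for LPIPS-like ones.
    ranked.sort(key=lambda entry: entry[1], reverse=higher_is_better)
    return ranked


# Hypothetical PSNR-style results: two variants of method "A", one each of "B" and "C".
results = [
    ("A", "A-small", 35.9),
    ("A", "A-large", 36.4),
    ("B", "B-base", 36.1),
    ("C", "C-base", 35.5),
]
print(build_ranking(results, threshold=36.0))
```

For an LPIPS-style ranking, where lower is better, the same function would be called with `higher_is_better=False` and an upper bound as the threshold.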

### Joint Video Deblurring and Frame Interpolation Rankings

1. :crown: **RBI with real motion blur✔️: LPIPS😍** (no data)

*This will be the King of all rankings. We look forward to ambitious researchers.*

1. [**RBI with real motion blur✔️: PSNR😞>=28.5dB**](#rbi-with-real-motion-blur%EF%B8%8F-psnr285db)

1. **Adobe240 (640×352) with synthetic motion blur✖️: LPIPS😍** (no data)

1. [**Adobe240 (640×352) with synthetic motion blur✖️: PSNR😞>=33.3dB**](#adobe240-640352-with-synthetic-motion-blur%EF%B8%8F-psnr333db)

1. **Adobe240 (5:8) with synthetic motion blur✖️: LPIPS😍** (no data)

1. [**Adobe240 (5:8) with synthetic motion blur✖️: PSNR😞>=25dB**](#adobe240-58-with-synthetic-motion-blur%EF%B8%8F-psnr25db)

### Video Deblurring Rankings

- (to do)

### Video Frame Interpolation Rankings

1. [**SNU-FILM-arb Extreme (×16): LPIPS😍<=0.070**](#snu-film-arb-extreme-16-lpips0070)

1. [**SNU-FILM-arb Hard (×8): LPIPS😍<=0.048**](#snu-film-arb-hard-8-lpips0048)

1. [**SNU-FILM-arb Medium (×4): LPIPS😍<=0.026**](#snu-film-arb-medium-4-lpips0026)

1. [**SNU-FILM Extreme (×2): LPIPS😍<=0.0889**](#snu-film-extreme-2-lpips00889)

1. [**SNU-FILM Hard (×2): LPIPS😍<=0.0429**](#snu-film-hard-2-lpips00429)

1. [**SNU-FILM Medium (×2): LPIPS😍<=0.0202**](#snu-film-medium-2-lpips00202)

1. [**X-TEST (×8): LPIPS😍<=0.07**](#x-test-8-lpips007)

1. [**Vimeo-90K triplet: LPIPS😍<=0.018**](#vimeo-90k-triplet-lpips0018)

1. [**Vimeo-90K triplet: LPIPS😍(SqueezeNet)<=0.014**](#vimeo-90k-triplet-lpipssqueezenet0014)

1. [**Vimeo-90K triplet: PSNR😞>=36dB**](#vimeo-90k-triplet-psnr36db)

1. [**Vimeo-90K septuplet: LPIPS😍<=0.032**](#vimeo-90k-septuplet-lpips0032)

1. [**Vimeo-90K septuplet: PSNR😞>=36dB**](#vimeo-90k-septuplet-psnr36db)

### Appendices

- **Appendix 1: Rules for qualifying models for the rankings** (to do)

- [**Appendix 2: Metrics selection for the rankings**](#appendix-2-metrics-selection-for-the-rankings)

- [**Appendix 3: List of all research papers from the above rankings**](#appendix-3-list-of-all-research-papers-from-the-above-rankings)

--------------------

## RBI with real motion blur✔️: PSNR😞>=28.5dB

| RK | Model | PSNR ↑<br>{Input fr.} | Training<br>dataset | Official<br>repository | Practical<br>model | VapourSynth |
|:---:|:---:|:---:|:---:|:---:|:---:|:---:|
| 1 | Pre-BiT++<br>[](https://openaccess.thecvf.com/content/CVPR2023/html/Zhong_Blur_Interpolation_Transformer_for_Real-World_Motion_From_Blur_CVPR_2023_paper.html) | **31.32** {3}<br>[](https://openaccess.thecvf.com/content/CVPR2023/html/Zhong_Blur_Interpolation_Transformer_for_Real-World_Motion_From_Blur_CVPR_2023_paper.html) | *Pretraining*: Adobe240<br>*Training*: RBI | [](https://github.com/zzh-tech/BiT) | [ ](https://github.com/zzh-tech/BiT/issues/5) | - |
| 2 | DeMFI-Net*rb*(5,3)<br>[](https://www.ecva.net/papers/eccv_2022/papers_ECCV/html/4406_ECCV_2022_paper.php) | **29.03** {4}<br>[](https://openaccess.thecvf.com/content/CVPR2023/html/Zhong_Blur_Interpolation_Transformer_for_Real-World_Motion_From_Blur_CVPR_2023_paper.html) | RBI | [](https://github.com/JihyongOh/DeMFI) | - | - |
| 3 | PRF4 -*Large*<br>[](https://openaccess.thecvf.com/content_CVPR_2020/html/Shen_Blurry_Video_Frame_Interpolation_CVPR_2020_paper.html)<br>*ENH:*<br>[](https://ieeexplore.ieee.org/document/9257179) | **28.55** {5}<br>[](https://openaccess.thecvf.com/content/CVPR2023/html/Zhong_Blur_Interpolation_Transformer_for_Real-World_Motion_From_Blur_CVPR_2023_paper.html) | RBI | [](https://github.com/laomao0/BIN) | - | - |

[](#video-frame-interpolation-rankingsand-video-deblurring-rankings)

[](#list-of-rankings)

## Adobe240 (640×352) with synthetic motion blur✖️: PSNR😞>=33.3dB

| RK | Model | PSNR ↑<br>{Input fr.} | Originally<br>announced<br>or Training<br>dataset | Official<br>repository | Practical<br>model | VapourSynth |
|:----:|:----|:----:|:----:|:----:|:----:|:----:|
| 1 | BVFI<br>[](https://arxiv.org/abs/2310.05383) | **35.43** {4}<br>[](https://arxiv.org/abs/2310.05383) | Adobe240 | - | - | - |
| 2 | BiT++<br>[](https://openaccess.thecvf.com/content/CVPR2023/html/Zhong_Blur_Interpolation_Transformer_for_Real-World_Motion_From_Blur_CVPR_2023_paper.html) | **34.97** {3}<br>[](https://openaccess.thecvf.com/content/CVPR2023/html/Zhong_Blur_Interpolation_Transformer_for_Real-World_Motion_From_Blur_CVPR_2023_paper.html) | Adobe240 | [](https://github.com/zzh-tech/BiT) | [ ](https://github.com/zzh-tech/BiT/issues/5) | - |
| 3 | DeMFI-Net*rb*(5,3)<br>[](https://www.ecva.net/papers/eccv_2022/papers_ECCV/html/4406_ECCV_2022_paper.php) | **34.34** {4}<br>[](https://www.ecva.net/papers/eccv_2022/papers_ECCV/html/4406_ECCV_2022_paper.php) | Adobe240 | [](https://github.com/JihyongOh/DeMFI) | - | - |
| 4 | ALANET | **33.34dB** [^37] | August 2020 [^37] | [](https://github.com/agupt013/ALANET) | - | - |
| 5 | PRF4 -*Large* | **33.32dB** [^38] | February 2020 [^35] | [](https://github.com/laomao0/BIN) | - | - |

[](#video-frame-interpolation-rankingsand-video-deblurring-rankings)

[](#list-of-rankings)

## Adobe240 (5:8) with synthetic motion blur✖️: PSNR😞>=25dB

| RK | Model | PSNR ↑ | Originally<br>announced | Official<br>repository | Practical<br>model | VapourSynth |
|:----:|:----|:----:|:----:|:----:|:----:|:----:|
| 1 | VIDUE | **28.74dB** [^39] | March 2023 [^39] | [](https://github.com/shangwei5/VIDUE) | - | - |
| 2 | FLAVR | **27.23dB** [^39] | December 2020 [^9] | [](https://github.com/tarun005/FLAVR) | - | - |
| 3 | UTI-VFI | **26.69dB** [^39] | December 2020 [^40] | [](https://github.com/yjzhang96/UTI-VFI) | - | - |
| 4 | DeMFI | **25.71dB** [^39] | November 2021 [^34] | [](https://github.com/JihyongOh/DeMFI) | - | - |

[](#video-frame-interpolation-rankingsand-video-deblurring-rankings)

[](#list-of-rankings)

## SNU-FILM-arb Extreme (×16): LPIPS😍<=0.070

📝 Note: This ranking has the most up-to-date layout.

| RK | Model<br>*Links:*<br>Venue Repository | LPIPS ↓<br>{Input fr.}<br>[](https://arxiv.org/abs/2407.08680)<br>GIMM-VFI | LPIPS ↓<br>{Input fr.}<br>[](https://arxiv.org/abs/2412.11365)<br>BiM-VFI |
|:---:|:---:|:---:|:---:|
| 1 | **GIMM-VFI-F-P**<br>[](https://proceedings.neurips.cc/paper_files/paper/2024/hash/7495fa446f10e9edef6e47b2d327596e-Abstract-Conference.html) [](https://github.com/GSeanCDAT/GIMM-VFI) | **0.058** {2} | - |
| 2 | **BiM-VFI**<br>[](https://arxiv.org/abs/2412.11365) | - | **0.070** {2} |

[](#video-frame-interpolation-rankingsand-video-deblurring-rankings)

[](#list-of-rankings)

## SNU-FILM-arb Hard (×8): LPIPS😍<=0.048

📝 Note: This ranking has the most up-to-date layout.

| RK | Model<br>*Links:*<br>Venue Repository | LPIPS ↓<br>{Input fr.}<br>[](https://arxiv.org/abs/2407.08680)<br>GIMM-VFI | LPIPS ↓<br>{Input fr.}<br>[](https://arxiv.org/abs/2412.11365)<br>BiM-VFI |
|:---:|:---:|:---:|:---:|
| 1 | **GIMM-VFI-F-P**<br>[](https://proceedings.neurips.cc/paper_files/paper/2024/hash/7495fa446f10e9edef6e47b2d327596e-Abstract-Conference.html) [](https://github.com/GSeanCDAT/GIMM-VFI) | **0.030** {2} | - |
| 2 | **BiM-VFI**<br>[](https://arxiv.org/abs/2412.11365) | - | **0.039** {2} |
| 3 | **IA-Clearer [D,R]u IFRNet**<br>[](https://www.ecva.net/papers/eccv_2024/papers_ECCV/html/4908_ECCV_2024_paper.php) [](https://github.com/zzh-tech/InterpAny-Clearer) | - | **0.048** {2} |

[](#video-frame-interpolation-rankingsand-video-deblurring-rankings)

[](#list-of-rankings)

## SNU-FILM-arb Medium (×4): LPIPS😍<=0.026

📝 Note: This ranking has the most up-to-date layout.

| RK | Model<br>*Links:*<br>Venue Repository | LPIPS ↓<br>{Input fr.}<br>[](https://arxiv.org/abs/2407.08680)<br>GIMM-VFI | LPIPS ↓<br>{Input fr.}<br>[](https://arxiv.org/abs/2412.11365)<br>BiM-VFI |
|:---:|:---:|:---:|:---:|
| 1 | **GIMM-VFI-R-P**<br>[](https://proceedings.neurips.cc/paper_files/paper/2024/hash/7495fa446f10e9edef6e47b2d327596e-Abstract-Conference.html) [](https://github.com/GSeanCDAT/GIMM-VFI) | **0.016** {2} | - |
| 2 | **BiM-VFI**<br>[](https://arxiv.org/abs/2412.11365) | - | **0.023** {2} |
| 3 | **IA-Clearer [D,R]u IFRNet**<br>[](https://www.ecva.net/papers/eccv_2024/papers_ECCV/html/4908_ECCV_2024_paper.php) [](https://github.com/zzh-tech/InterpAny-Clearer) | - | **0.026** {2} |

[](#video-frame-interpolation-rankingsand-video-deblurring-rankings)

[](#list-of-rankings)

## SNU-FILM Extreme (×2): LPIPS😍<=0.0889

📝 Note: This ranking has the most up-to-date layout.

| RK | Model<br>*Links:*<br>Venue Repository | LPIPS ↓<br>{Input fr.}<br>[](https://openaccess.thecvf.com/content/CVPR2023/html/Plack_Frame_Interpolation_Transformer_and_Uncertainty_Guidance_CVPR_2023_paper.html)<br>UGFI | LPIPS ↓<br>{Input fr.}<br>[](https://arxiv.org/abs/2406.17256)<br>MoMo |
|:---:|:---:|:---:|:---:|
| 1 | **UGFI 𝓛*S***<br>[](https://openaccess.thecvf.com/content/CVPR2023/html/Plack_Frame_Interpolation_Transformer_and_Uncertainty_Guidance_CVPR_2023_paper.html) | **0.0864** {2} | - |
| 2 | **MoMo**<br>[](https://arxiv.org/abs/2406.17256) [](https://github.com/JHLew/MoMo) | - | **0.0872** {2} |
| 3 | **FILM-𝓛*S***<br>[](https://www.ecva.net/papers/eccv_2022/papers_ECCV/html/4614_ECCV_2022_paper.php) [](https://github.com/google-research/frame-interpolation) | **0.0899** {2} | **0.0889** {2} |

[](#video-frame-interpolation-rankingsand-video-deblurring-rankings)

[](#list-of-rankings)

## SNU-FILM Hard (×2): LPIPS😍<=0.0429

📝 Note: This ranking has the most up-to-date layout.

| RK | Model<br>*Links:*<br>Venue Repository | LPIPS ↓<br>{Input fr.}<br>[](https://arxiv.org/abs/2406.17256)<br>MoMo | LPIPS ↓<br>{Input fr.}<br>[](https://openaccess.thecvf.com/content/CVPR2023/html/Plack_Frame_Interpolation_Transformer_and_Uncertainty_Guidance_CVPR_2023_paper.html)<br>UGFI |
|:---:|:---:|:---:|:---:|
| 1 | **MoMo**<br>[](https://arxiv.org/abs/2406.17256) [](https://github.com/JHLew/MoMo) | **0.0419** {2} | - |
| 2 | **UGFI 𝓛*S***<br>[](https://openaccess.thecvf.com/content/CVPR2023/html/Plack_Frame_Interpolation_Transformer_and_Uncertainty_Guidance_CVPR_2023_paper.html) | - | **0.0420** {2} |
| 3 | **FILM-𝓛*S***<br>[](https://www.ecva.net/papers/eccv_2022/papers_ECCV/html/4614_ECCV_2022_paper.php) [](https://github.com/google-research/frame-interpolation) | **0.0429** {2} | **0.0434** {2} |

[](#video-frame-interpolation-rankingsand-video-deblurring-rankings)

[](#list-of-rankings)

## SNU-FILM Medium (×2): LPIPS😍<=0.0202

📝 Note: This ranking has the most up-to-date layout.

| RK | Model<br>*Links:*<br>Venue Repository | LPIPS ↓<br>{Input fr.}<br>[](https://arxiv.org/abs/2406.17256)<br>MoMo | - |
|:---:|:---:|:---:|:---:|
| 1 | **MoMo**<br>[](https://arxiv.org/abs/2406.17256) [](https://github.com/JHLew/MoMo) | **0.0202** {2} | - |

[](#video-frame-interpolation-rankingsand-video-deblurring-rankings)

[](#list-of-rankings)

## X-TEST (×8): LPIPS😍<=0.07

📝 Note: This ranking has the most up-to-date layout.

| RK | Model<br>*Links:*<br>Venue Repository | LPIPS ↓<br>{Input fr.}<br>[](https://arxiv.org/abs/2412.11365)<br>BiM-VFI | - |
|:---:|:---:|:---:|:---:|
| 1 | **BiM-VFI**<br>[](https://arxiv.org/abs/2412.11365) | **0.068** {2} | - |

[](#video-frame-interpolation-rankingsand-video-deblurring-rankings)

[](#list-of-rankings)

## Vimeo-90K triplet: LPIPS😍<=0.018

| RK | Model | LPIPS ↓<br>{Input fr.} | Training<br>dataset | Official<br>repository | Practical<br>model | VapourSynth |
|:---:|:---:|:---:|:---:|:---:|:---:|:---:|
| 1 | EAFI-𝓛*ecp*<br>[](https://arxiv.org/abs/2207.12305) | **0.012** {2}<br>[](https://arxiv.org/abs/2207.12305) | Vimeo-90K triplet | - | EAFI-𝓛*ecp* | - |
| 2 | UGFI 𝓛*S*<br>[](https://openaccess.thecvf.com/content/CVPR2023/html/Plack_Frame_Interpolation_Transformer_and_Uncertainty_Guidance_CVPR_2023_paper.html) | **0.0126** {2}<br>[](https://openaccess.thecvf.com/content/CVPR2023/html/Plack_Frame_Interpolation_Transformer_and_Uncertainty_Guidance_CVPR_2023_paper.html) | Vimeo-90K triplet | - | UGFI 𝓛*S* | - |
| 3 | SoftSplat - 𝓛*F*<br>[](https://openaccess.thecvf.com/content_CVPR_2020/html/Niklaus_Softmax_Splatting_for_Video_Frame_Interpolation_CVPR_2020_paper.html) | **0.013** {2}<br>[](https://openaccess.thecvf.com/content_CVPR_2020/html/Niklaus_Softmax_Splatting_for_Video_Frame_Interpolation_CVPR_2020_paper.html) | Vimeo-90K triplet | [](https://github.com/sniklaus/softmax-splatting) | SoftSplat - 𝓛*F* | - |
| 4 | FILM-𝓛*S*<br>[](https://www.ecva.net/papers/eccv_2022/papers_ECCV/html/4614_ECCV_2022_paper.php) | **0.0132** {2}<br>[](https://openaccess.thecvf.com/content/CVPR2023/html/Plack_Frame_Interpolation_Transformer_and_Uncertainty_Guidance_CVPR_2023_paper.html) | Vimeo-90K triplet | [](https://github.com/google-research/frame-interpolation) | [FILM-𝓛*S*](https://github.com/google-research/frame-interpolation#pre-trained-models) | - |
| 5 | MoMo<br>[](https://arxiv.org/abs/2406.17256) | **0.0136** {2}<br>[](https://arxiv.org/abs/2406.17256) | Vimeo-90K triplet | [](https://github.com/JHLew/MoMo) | [MoMo](https://github.com/JHLew/MoMo#download-the-pretrained-model) | - |
| 6 | EDSC_s-𝓛*F*<br>[](https://ieeexplore.ieee.org/document/9501506) | **0.016** {2}<br>[](https://arxiv.org/abs/2006.08070) | Vimeo-90K triplet | [](https://github.com/Xianhang/EDSC-pytorch) | [EDSC_s-𝓛*F*](https://github.com/Xianhang/EDSC-pytorch#pre-trained-models) | - |
| 7 | CtxSyn - 𝓛*F*<br>[](https://openaccess.thecvf.com/content_cvpr_2018/html/Niklaus_Context-Aware_Synthesis_for_CVPR_2018_paper.html) | **0.017** {2}<br>[](https://openaccess.thecvf.com/content_CVPR_2020/html/Niklaus_Softmax_Splatting_for_Video_Frame_Interpolation_CVPR_2020_paper.html) | proprietary | - | CtxSyn - 𝓛*F* | - |
| 8 | PerVFI<br>[](https://openaccess.thecvf.com/content/CVPR2024/html/Wu_Perception-Oriented_Video_Frame_Interpolation_via_Asymmetric_Blending_CVPR_2024_paper.html) | **0.018** {2}<br>[](https://openaccess.thecvf.com/content/CVPR2024/html/Wu_Perception-Oriented_Video_Frame_Interpolation_via_Asymmetric_Blending_CVPR_2024_paper.html) | Vimeo-90K triplet | [](https://github.com/mulns/PerVFI) | [PerVFI](https://github.com/mulns/PerVFI#-download-checkpoints) | - |

[](#video-frame-interpolation-rankingsand-video-deblurring-rankings)

[](#list-of-rankings)

## Vimeo-90K triplet: LPIPS😍(SqueezeNet)<=0.014

| RK | Model | LPIPS ↓ | Originally<br>announced | Official<br>repository | Practical<br>model | VapourSynth |
|:----:|:----|:----:|:----:|:----:|:----:|:----:|
| 1 | CDFI w/ adaP/U | **0.008** [^23] | March 2021 [^24] | [](https://github.com/tding1/CDFI) | - | - |
| 2 | EDSC_s-𝓛*F* | **0.010** [^24] | June 2020 [^25] | [](https://github.com/Xianhang/EDSC-pytorch) | [EDSC_s-𝓛*F*](https://github.com/Xianhang/EDSC-pytorch#pre-trained-models) | - |
| 3 | DRVI | **0.013** [^26] | August 2021 [^26] | - | - | - |

[](#video-frame-interpolation-rankingsand-video-deblurring-rankings)

[](#list-of-rankings)

## Vimeo-90K triplet: PSNR😞>=36dB

| RK | Model | PSNR ↑<br>{Input fr.} | Originally<br>announced<br>or Training<br>dataset | Official<br>repository | Practical<br>model | VapourSynth |
|:----:|:----|:----:|:----:|:----:|:----:|:----:|
| 1 | MA-GCSPA-triplets<br>[](https://openaccess.thecvf.com/content/CVPR2023/html/Zhou_Exploring_Motion_Ambiguity_and_Alignment_for_High-Quality_Video_Frame_Interpolation_CVPR_2023_paper.html) | **36.85** {2}<br>[](https://openaccess.thecvf.com/content/CVPR2023/html/Zhou_Exploring_Motion_Ambiguity_and_Alignment_for_High-Quality_Video_Frame_Interpolation_CVPR_2023_paper.html) | Vimeo-90K triplet | [](https://github.com/redrock303/CVPR23-MA-GCSPA) | - | - |
| 2 | VFIformer + HRFFM<br>[](https://openaccess.thecvf.com/content/CVPR2022/html/Lu_Video_Frame_Interpolation_With_Transformer_CVPR_2022_paper.html)<br>*ENH:*<br>[](https://arxiv.org/abs/2312.15868) | **36.69** {2}<br>[](https://arxiv.org/abs/2312.15868) | Vimeo-90K triplet | [](https://github.com/dvlab-research/VFIformer)<br>*ENH:*<br>- | - | - |
| 3 | LADDER-L<br>[](https://arxiv.org/abs/2404.11108) | **36.65** {2}<br>[](https://arxiv.org/abs/2404.11108) | Vimeo-90K triplet | - | - | - |
| 4-5 | EMA-VFI | **36.64dB** [^22] | March 2023 [^22] | [](https://github.com/MCG-NJU/EMA-VFI) | - | - |
| 4-5 | VFIMamba<br>[](https://arxiv.org/abs/2407.02315) | **36.64** {2}<br>[](https://arxiv.org/abs/2407.02315) | Vimeo-90K triplet & X-TRAIN | [](https://github.com/MCG-NJU/VFIMamba) | - | - |
| 6 | IQ-VFI<br>[](https://openaccess.thecvf.com/content/CVPR2024/html/Hu_IQ-VFI_Implicit_Quadratic_Motion_Estimation_for_Video_Frame_Interpolation_CVPR_2024_paper.html) | **36.60** {2}<br>[](https://openaccess.thecvf.com/content/CVPR2024/html/Hu_IQ-VFI_Implicit_Quadratic_Motion_Estimation_for_Video_Frame_Interpolation_CVPR_2024_paper.html) | Vimeo-90K triplet | - | - | - |
| 7 | DQBC-Aug | **36.57dB** [^36] | April 2023 [^36] | [](https://github.com/kinoud/DQBC) | - | - |
| 8 | TTVFI | **36.54dB** [^4] | July 2022 [^4] | [](https://github.com/ChengxuLiu/TTVFI) | - | - |
| 9 | AMT-G | **36.53dB** [^31] | April 2023 [^31] | [](https://github.com/MCG-NKU/AMT) | - | - |
| 10 | AdaFNIO | **36.50dB** [^19] | November 2022 [^19] | [](https://github.com/IDEAS-Lab-Purdue/AdaFNIO) | - | - |
| 11 | FGDCN-L | **36.46dB** [^21] | November 2022 [^21] | [](https://github.com/lpcccc-cv/FGDCN) | - | - |
| 12 | VFIFT<br>[](https://dl.acm.org/doi/10.1145/3581783.3612440) | **36.43** {2}<br>[](https://arxiv.org/abs/2307.16144) | Vimeo-90K triplet | - | - | - |
| 13 | UPR-Net LARGE | **36.42dB** [^18] | November 2022 [^18] | [](https://github.com/srcn-ivl/UPR-Net) | - | - |
| 14 | EAFI-𝓛*ecc* | **36.38dB** [^8] | July 2022 [^8] | - | EAFI-𝓛*ecp* | - |
| 15 | H-VFI-Large | **36.37dB** [^20] | November 2022 [^20] | - | - | - |
| 16 | UGFI 𝓛1<br>[](https://openaccess.thecvf.com/content/CVPR2023/html/Plack_Frame_Interpolation_Transformer_and_Uncertainty_Guidance_CVPR_2023_paper.html) | **36.34** {2}<br>[](https://openaccess.thecvf.com/content/CVPR2023/html/Plack_Frame_Interpolation_Transformer_and_Uncertainty_Guidance_CVPR_2023_paper.html) | Vimeo-90K triplet | - | UGFI 𝓛*S* | - |
| 17 | VFIT-B<br>[](https://openaccess.thecvf.com/content/CVPR2022/html/Shi_Video_Frame_Interpolation_Transformer_CVPR_2022_paper.html) | **36.33** {2}<br>[](https://arxiv.org/abs/2307.16144) | ? | [](https://github.com/zhshi0816/Video-Frame-Interpolation-Transformer) | - | - |
| 18 | SoftSplat - 𝓛*Lap* with ensemble | **36.28dB** [^28] | March 2020 [^15] | [](https://github.com/sniklaus/softmax-splatting) | SoftSplat - 𝓛*F* | - |
| 19 | ProBoost-Net (448x256)<br>[](https://ieeexplore.ieee.org/document/10003662) | **36.23** {2}<br>[](https://ieeexplore.ieee.org/document/10003662) | ? | - | - | - |
| 20 | NCM-Large | **36.22dB** [^32] | July 2022 [^32] | - | - | - |
| 21-22 | IFRNet large | **36.20dB** [^10] | May 2022 [^10] | [](https://github.com/ltkong218/IFRNet) | - | - |
| 21-22 | RAFT-M2M++<br>[](https://openaccess.thecvf.com/content/CVPR2022/html/Hu_Many-to-Many_Splatting_for_Efficient_Video_Frame_Interpolation_CVPR_2022_paper.html)<br>*ENH:*<br>[](https://ieeexplore.ieee.org/document/10294102) | **36.20** {2}<br>[](https://arxiv.org/abs/2310.18946) | Vimeo-90K triplet | [](https://github.com/feinanshan/M2M_VFI) | - | - |
| 23-24 | EBME-H* | **36.19dB** [^11] | June 2022 [^11] | [](https://github.com/srcn-ivl/EBME) | - | - |
| 23-24 | RIFE-Large<br>[](https://www.ecva.net/papers/eccv_2022/papers_ECCV/html/95_ECCV_2022_paper.php) | **36.19** {2}<br>[](https://www.ecva.net/papers/eccv_2022/papers_ECCV/html/95_ECCV_2022_paper.php) | Vimeo-90K triplet | [](https://github.com/megvii-research/ECCV2022-RIFE) | [Practical-RIFE 4.25](https://github.com/hzwer/Practical-RIFE#trained-model) | [](https://github.com/AmusementClub/vs-mlrt)<br>[](https://github.com/AmusementClub/vs-mlrt)<br>[](https://github.com/HolyWu/vs-rife)<br>[](https://github.com/HolyWu/vs-rife)<br>[](https://github.com/styler00dollar/VapourSynth-RIFE-ncnn-Vulkan)<br>[](https://github.com/styler00dollar/VapourSynth-RIFE-ncnn-Vulkan) |
| 25 | ABME | **36.18dB** [^13] | August 2021 [^13] | [](https://github.com/JunHeum/ABME) | - | - |
| 26 | HiFI<br>[](https://arxiv.org/abs/2410.11838) | **36.12** {2}<br>[](https://arxiv.org/abs/2410.11838) | *Pretraining*: Raw videos<br>*Training*: Vimeo-90K triplet & X-TRAIN | - | - | - |
| 27 | TDPNet*nv* w/o MRTM<br>[](https://ieeexplore.ieee.org/document/10182248) | **36.069** {2}<br>[](https://ieeexplore.ieee.org/document/10182248) | Vimeo-90K triplet | - | TDPNet | - |
| 28 | FILM-𝓛1<br>[](https://www.ecva.net/papers/eccv_2022/papers_ECCV/html/4614_ECCV_2022_paper.php) | **36.06** {2}<br>[](https://www.ecva.net/papers/eccv_2022/papers_ECCV/html/4614_ECCV_2022_paper.php) | Vimeo-90K triplet | [](https://github.com/google-research/frame-interpolation) | [FILM-𝓛*S*](https://github.com/google-research/frame-interpolation#pre-trained-models) | - |

[](#video-frame-interpolation-rankingsand-video-deblurring-rankings)

[](#list-of-rankings)

## Vimeo-90K septuplet: LPIPS😍<=0.032

| RK | Model | LPIPS ↓ | Originally<br>announced | Official<br>repository | Practical<br>model | VapourSynth |
|:----:|:----|:----:|:----:|:----:|:----:|:----:|
| 1 | RIFE | **0.0233** [^27] | November 2020 [^12] | [](https://github.com/megvii-research/ECCV2022-RIFE) | [Practical-RIFE 4.25](https://github.com/hzwer/Practical-RIFE#trained-model) | [](https://github.com/AmusementClub/vs-mlrt)<br>[](https://github.com/AmusementClub/vs-mlrt)<br>[](https://github.com/HolyWu/vs-rife)<br>[](https://github.com/HolyWu/vs-rife)<br>[](https://github.com/styler00dollar/VapourSynth-RIFE-ncnn-Vulkan)<br>[](https://github.com/styler00dollar/VapourSynth-RIFE-ncnn-Vulkan) |
| 2 | IFRNet | **0.0274** [^27] | May 2022 [^10] | [](https://github.com/ltkong218/IFRNet) | - | - |
| 3 | VFIT-B | **0.0304** [^27] | November 2021 [^2] | [](https://github.com/zhshi0816/Video-Frame-Interpolation-Transformer) | - | - |
| 4 | ABME | **0.0309** [^27] | August 2021 [^13] | [](https://github.com/JunHeum/ABME) | - | - |

[](#video-frame-interpolation-rankingsand-video-deblurring-rankings)

[](#list-of-rankings)

## Vimeo-90K septuplet: PSNR😞>=36dB

| RK | Model | PSNR ↑<br>{Input fr.} | Originally<br>announced<br>or Training<br>dataset | Official<br>repository | Practical<br>model | VapourSynth |
|:----:|:----|:----:|:----:|:----:|:----:|:----:|
| 1 | Swin-VFI<br>[](https://arxiv.org/abs/2406.11371) | **38.04** {6}<br>[](https://arxiv.org/abs/2406.11371) | Vimeo-90K septuplet | - | - | - |
| 2 | JNMR | **37.19dB** [^1] | June 2022 [^1] | [](https://github.com/ruhig6/JNMR) | - | - |
| 3 | VFIT-B<br>[](https://openaccess.thecvf.com/content/CVPR2022/html/Shi_Video_Frame_Interpolation_Transformer_CVPR_2022_paper.html) | **36.96** {4}<br>[](https://openaccess.thecvf.com/content/CVPR2022/html/Shi_Video_Frame_Interpolation_Transformer_CVPR_2022_paper.html) | Vimeo-90K septuplet | [](https://github.com/zhshi0816/Video-Frame-Interpolation-Transformer) | - | - |
| 4 | VRT<br>[](https://arxiv.org/abs/2201.12288) | **36.53** {4}<br>[](https://arxiv.org/abs/2201.12288) | Vimeo-90K septuplet | [](https://github.com/JingyunLiang/VRT) | - | - |
| 5 | ST-MFNet | **36.507dB** [^42] | November 2021 [^7] | [](https://github.com/danielism97/ST-MFNet) | - | - |
| 6 | EDENVFI PVT(15,15) | **36.387dB** [^42] | July 2023 [^42] | - | - | - |
| 7 | IFRNet<br>[](https://openaccess.thecvf.com/content/CVPR2022/html/Kong_IFRNet_Intermediate_Feature_Refine_Network_for_Efficient_Frame_Interpolation_CVPR_2022_paper.html) | **36.37** {2}<br>[](https://openaccess.thecvf.com/content/CVPR2023/html/Yu_Range-Nullspace_Video_Frame_Interpolation_With_Focalized_Motion_Estimation_CVPR_2023_paper.html) | Vimeo-90K septuplet | [](https://github.com/ltkong218/IFRNet) | - | - |
| 8 | RN-VFI<br>[](https://openaccess.thecvf.com/content/CVPR2023/html/Yu_Range-Nullspace_Video_Frame_Interpolation_With_Focalized_Motion_Estimation_CVPR_2023_paper.html) | **36.33** {4}<br>[](https://openaccess.thecvf.com/content/CVPR2023/html/Yu_Range-Nullspace_Video_Frame_Interpolation_With_Focalized_Motion_Estimation_CVPR_2023_paper.html) | Vimeo-90K septuplet | - | - | - |
| 9 | FLAVR<br>[](https://openaccess.thecvf.com/content/WACV2023/html/Kalluri_FLAVR_Flow-Agnostic_Video_Representations_for_Fast_Frame_Interpolation_WACV_2023_paper.html) | **36.3** {4}<br>[](https://openaccess.thecvf.com/content/WACV2023/html/Kalluri_FLAVR_Flow-Agnostic_Video_Representations_for_Fast_Frame_Interpolation_WACV_2023_paper.html) | Vimeo-90K septuplet | [](https://github.com/tarun005/FLAVR) | - | - |
| 10 | DBVI | **36.17dB** [^17] | October 2022 [^17] | [](https://github.com/Oceanlib/DBVI) | - | - |
| 11 | EDC | **36.14dB** [^1] | February 2022 [^14] | [](https://github.com/danielism97/EDC) | - | - |

[](#video-frame-interpolation-rankingsand-video-deblurring-rankings)

[](#list-of-rankings)

## Appendix 2: Metrics selection for the rankings

Currently, the most commonly used metrics in the existing works on video frame interpolation and video deblurring are: PSNR, SSIM and LPIPS. Exactly in that order.

The main purpose of creating my rankings is to look for the best perceptually-oriented model for practical applications - hence the primary metric in my rankings will be the most common perceptual image quality metric in scientific papers: LPIPS.

At the time of writing, in October 2023, I have found only two other perceptual image quality metrics used for VFI: DISTS, in one paper: [](https://ieeexplore.ieee.org/document/10182248), and a bespoke VFI metric, FloLPIPS, also in one paper: [[arXiv]](https://arxiv.org/abs/2303.09508). Unfortunately, neither paper evaluates the models that perform best on the LPIPS metric. If a researcher evaluates the top LPIPS-performing models using alternative, better perceptual metrics in the future, I will of course be happy to add rankings based on those metrics.

I would like to use only one metric: LPIPS. Unfortunately, many of the best VFI and video deblurring methods are still evaluated using only PSNR, or PSNR and SSIM. For this reason, I additionally present rankings based on PSNR. They show which models could, after perceptually-oriented training, be the best for practical applications, and they provide a source of knowledge for building even better practical models in the future.
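For reference, PSNR is derived from the mean squared error between a reference frame and an interpolated or deblurred frame: PSNR = 10 · log10(MAX² / MSE). The sketch below shows that formula on flattened pixel lists. It assumes 8-bit values (`max_value=255`); the function name `psnr` is hypothetical, and individual papers differ in details such as color space and how scores are averaged over frames, so this is not the evaluation code behind any score in the rankings.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences."""
    if not reference or len(reference) != len(estimate):
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)


# Hypothetical 4-pixel frames that differ by one intensity level everywhere.
print(round(psnr([0, 0, 0, 0], [1, 1, 1, 1]), 2))  # 48.13
```

Because PSNR is a purely pixel-wise measure, two outputs with the same PSNR can look very different perceptually, which is why the rankings treat it as secondary to LPIPS.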

I have decided to completely abandon rankings based on the SSIM metric. Below are the main reasons for this decision, ranked from the most important to the least important.

- The main reason is the following quote, which I found in a paper by researchers at Adobe Research: [^28]. In the quote they refer to a paper by researchers at NVIDIA: [[arXiv]](https://arxiv.org/abs/2006.13846).

> We limit the evaluation herein to the PSNR metric since SSIM [57] is subject to unexpected and unintuitive results [39].

- The second reason is that more and more papers report PSNR scores without SSIM: [^42] and [](https://ieeexplore.ieee.org/document/10182248). A model from such a paper would appear only in the PSNR-based ranking and not in the SSIM-based ranking, which may give the misleading impression that its SSIM score is too poor to meet the ranking eligibility threshold, when the paper simply reports no SSIM score.

- The third reason is that the SSIM scores of individual models are often very close to each other or identical. This is the case in the SNU-FILM Easy test, as shown in Table 3: [[CVPR 2023]](https://openaccess.thecvf.com/content/CVPR2023/html/Yu_Range-Nullspace_Video_Frame_Interpolation_With_Focalized_Motion_Estimation_CVPR_2023_paper.html), where as many as 6 models achieve the same score of 0.991 and as many as 5 models achieve the same score of 0.990. In the same test, PSNR makes it easier to determine the order of the ranking, with the same number of significant digits.

- The fourth reason is that PSNR-based rankings are only ancillary when a model does not have an LPIPS score. For this reason, SSIM rankings do not add value to my repository and only reduce its readability.

- The fifth reason is that I want to encourage researchers who want to use only two metrics in their paper to use LPIPS and PSNR instead of PSNR and SSIM.

- The sixth reason is that the time saved by dropping the SSIM-based rankings will allow me to add new rankings based on other test data, which will be more useful and valuable.

[](#video-frame-interpolation-rankingsand-video-deblurring-rankings)

[](#list-of-rankings)

## Appendix 3: List of all research papers from the above rankings

| Method | Abbr. | Paper | Venue<br>(Alt link) | Official<br>repository |
|:---:|:---:|:---:|:---:|:---:|
| BiM-VFI | - | BiM-VFI: Bidirectional Motion Field-Guided Frame Interpolation for Video with Non-uniform Motions | [](https://arxiv.org/abs/2412.11365) | - |
| FILM | - | FILM: Frame Interpolation for Large Motion | [](https://www.ecva.net/papers/eccv_2022/papers_ECCV/html/4614_ECCV_2022_paper.php) | [](https://github.com/google-research/frame-interpolation) |
| GIMM-VFI | - | Generalizable Implicit Motion Modeling for Video Frame Interpolation | [](https://proceedings.neurips.cc/paper_files/paper/2024/hash/7495fa446f10e9edef6e47b2d327596e-Abstract-Conference.html)<br>[](https://arxiv.org/abs/2407.08680) | [](https://github.com/GSeanCDAT/GIMM-VFI) |
| InterpAny-Clearer | IA-Clearer | Clearer Frames, Anytime: Resolving Velocity Ambiguity in Video Frame Interpolation | [](https://www.ecva.net/papers/eccv_2024/papers_ECCV/html/4908_ECCV_2024_paper.php) | [](https://github.com/zzh-tech/InterpAny-Clearer) |
| MoMo | - | Disentangled Motion Modeling for Video Frame Interpolation | [](https://arxiv.org/abs/2406.17256) | [](https://github.com/JHLew/MoMo) |
| UGFI | - | Frame Interpolation Transformer and Uncertainty Guidance | [](https://openaccess.thecvf.com/content/CVPR2023/html/Plack_Frame_Interpolation_Transformer_and_Uncertainty_Guidance_CVPR_2023_paper.html) | - |

📝 **Note:** Temporarily, the following list contains full descriptions of those methods that have been removed from the footnotes or not included in the footnotes at all due to the new layout of the tables.

| Method | Paper | Venue |
|:---:|:---:|:---:|
| ABME | | |
| AdaFNIO | | |
| ALANET | | |
| AMT | | |
| BIN | Blurry Video Frame Interpolation | [CVPR 2020](https://openaccess.thecvf.com/content_CVPR_2020/html/Shen_Blurry_Video_Frame_Interpolation_CVPR_2020_paper.html) |
| BiT | Blur Interpolation Transformer for Real-World Motion from Blur | [CVPR 2023](https://openaccess.thecvf.com/content/CVPR2023/html/Zhong_Blur_Interpolation_Transformer_for_Real-World_Motion_From_Blur_CVPR_2023_paper.html) |
| BVFI | Three-Stage Cascade Framework for Blurry Video Frame Interpolation | [arXiv](https://arxiv.org/abs/2310.05383) |
| CDFI | | |
| CtxSyn | Context-aware Synthesis for Video Frame Interpolation | [CVPR 2018](https://openaccess.thecvf.com/content_cvpr_2018/html/Niklaus_Context-Aware_Synthesis_for_CVPR_2018_paper.html) |
| DBVI | | |
| DeMFI | DeMFI: Deep Joint Deblurring and Multi-Frame Interpolation with Flow-Guided Attentive Correlation and Recursive Boosting | [ECCV 2022](https://www.ecva.net/papers/eccv_2022/papers_ECCV/html/4406_ECCV_2022_paper.php) |
| DQBC | | |
| DRVI | | |
| EAFI | Error-Aware Spatial Ensembles for Video Frame Interpolation | [arXiv](https://arxiv.org/abs/2207.12305) |
| EBME | | |
| EDC | Enhancing Deformable Convolution based Video Frame Interpolation with Coarse-to-fine 3D CNN | [ICIP 2022](https://ieeexplore.ieee.org/document/9897929) |
| EDENVFI | | |
| EDSC | Multiple Video Frame Interpolation via Enhanced Deformable Separable Convolution | [TPAMI 2021](https://ieeexplore.ieee.org/document/9501506) |
| EMA-VFI | | |
| FGDCN | | |
| FLAVR | FLAVR: Flow-Agnostic Video Representations for Fast Frame Interpolation | [WACV 2023](https://openaccess.thecvf.com/content/WACV2023/html/Kalluri_FLAVR_Flow-Agnostic_Video_Representations_for_Fast_Frame_Interpolation_WACV_2023_paper.html) |
| HiFI | High-Resolution Frame Interpolation with Patch-based Cascaded Diffusion | [arXiv](https://arxiv.org/abs/2410.11838) |
| HRFFM | Video Frame Interpolation with Region-Distinguishable Priors from SAM | [arXiv](https://arxiv.org/abs/2312.15868) |
| H-VFI | | |
| IFRNet | IFRNet: Intermediate Feature Refine Network for Efficient Frame Interpolation | [CVPR 2022](https://openaccess.thecvf.com/content/CVPR2022/html/Kong_IFRNet_Intermediate_Feature_Refine_Network_for_Efficient_Frame_Interpolation_CVPR_2022_paper.html) |
| IQ-VFI | IQ-VFI: Implicit Quadratic Motion Estimation for Video Frame Interpolation | [CVPR 2024](https://openaccess.thecvf.com/content/CVPR2024/html/Hu_IQ-VFI_Implicit_Quadratic_Motion_Estimation_for_Video_Frame_Interpolation_CVPR_2024_paper.html) |
| JNMR | | |
| LADDER | LADDER: An Efficient Framework for Video Frame Interpolation | [arXiv](https://arxiv.org/abs/2404.11108) |
| M2M | Many-to-many Splatting for Efficient Video Frame Interpolation | [CVPR 2022](https://openaccess.thecvf.com/content/CVPR2022/html/Hu_Many-to-Many_Splatting_for_Efficient_Video_Frame_Interpolation_CVPR_2022_paper.html) |
| MA-GCSPA | Exploring Motion Ambiguity and Alignment for High-Quality Video Frame Interpolation | [CVPR 2023](https://openaccess.thecvf.com/content/CVPR2023/html/Zhou_Exploring_Motion_Ambiguity_and_Alignment_for_High-Quality_Video_Frame_Interpolation_CVPR_2023_paper.html) |
| NCM | | |
| PerVFI | Perception-Oriented Video Frame Interpolation via Asymmetric Blending | [CVPR 2024](https://openaccess.thecvf.com/content/CVPR2024/html/Wu_Perception-Oriented_Video_Frame_Interpolation_via_Asymmetric_Blending_CVPR_2024_paper.html) |
| PRF | Video Frame Interpolation and Enhancement via Pyramid Recurrent Framework | [TIP 2020](https://ieeexplore.ieee.org/document/9257179) |
| ProBoost-Net | Progressive Motion Boosting for Video Frame Interpolation | [IEEE Xplore](https://ieeexplore.ieee.org/document/10003662) |
| RIFE | Real-Time Intermediate Flow Estimation for Video Frame Interpolation | [ECCV 2022](https://www.ecva.net/papers/eccv_2022/papers_ECCV/html/95_ECCV_2022_paper.php) |
| RN-VFI | Range-nullspace Video Frame Interpolation with Focalized Motion Estimation | [CVPR 2023](https://openaccess.thecvf.com/content/CVPR2023/html/Yu_Range-Nullspace_Video_Frame_Interpolation_With_Focalized_Motion_Estimation_CVPR_2023_paper.html) |
| SoftSplat | Softmax Splatting for Video Frame Interpolation | [CVPR 2020](https://openaccess.thecvf.com/content_CVPR_2020/html/Niklaus_Softmax_Splatting_for_Video_Frame_Interpolation_CVPR_2020_paper.html) |
| SSR | Video Frame Interpolation with Many-to-many Splatting and Spatial Selective Refinement | [IEEE Xplore](https://ieeexplore.ieee.org/document/10294102) |
| ST-MFNet | | |
| Swin-VFI | Video Frame Interpolation for Polarization via Swin-Transformer | [arXiv](https://arxiv.org/abs/2406.11371) |
| TDPNet | Textural Detail Preservation Network for Video Frame Interpolation | [IEEE Xplore](https://ieeexplore.ieee.org/document/10182248) |
| TTVFI | | |
| UPR-Net | | |
| UTI-VFI | | |
| VFIformer | Video Frame Interpolation with Transformer | [CVPR 2022](https://openaccess.thecvf.com/content/CVPR2022/html/Lu_Video_Frame_Interpolation_With_Transformer_CVPR_2022_paper.html) |
| VFIFT | Video Frame Interpolation with Flow Transformer | [ACM DL](https://dl.acm.org/doi/10.1145/3581783.3612440) |
| VFIMamba | VFIMamba: Video Frame Interpolation with State Space Models | [arXiv](https://arxiv.org/abs/2407.02315) |
| VFIT | Video Frame Interpolation Transformer | [CVPR 2022](https://openaccess.thecvf.com/content/CVPR2022/html/Shi_Video_Frame_Interpolation_Transformer_CVPR_2022_paper.html) |
| VIDUE | | |
| VRT | VRT: A Video Restoration Transformer | [arXiv](https://arxiv.org/abs/2201.12288) |


[^1]: JNMR: Joint Non-linear Motion Regression for Video Frame Interpolation [[TIP 2023]](https://ieeexplore.ieee.org/document/10255610) [[arXiv]](https://arxiv.org/abs/2206.04231)

[^2]: Video Frame Interpolation Transformer [[CVPR 2022]](https://openaccess.thecvf.com/content/CVPR2022/html/Shi_Video_Frame_Interpolation_Transformer_CVPR_2022_paper.html) [[arXiv]](https://arxiv.org/abs/2111.13817)

[^3]: Exploring Motion Ambiguity and Alignment for High-Quality Video Frame Interpolation [[CVPR 2023]](https://openaccess.thecvf.com/content/CVPR2023/html/Zhou_Exploring_Motion_Ambiguity_and_Alignment_for_High-Quality_Video_Frame_Interpolation_CVPR_2023_paper.html) [[arXiv]](https://arxiv.org/abs/2203.10291)

[^4]: TTVFI: Learning Trajectory-Aware Transformer for Video Frame Interpolation [[TIP 2023]](https://ieeexplore.ieee.org/document/10215337) [[arXiv]](https://arxiv.org/abs/2207.09048)

[^7]: ST-MFNet: A Spatio-Temporal Multi-Flow Network for Frame Interpolation [[CVPR 2022]](https://openaccess.thecvf.com/content/CVPR2022/html/Danier_ST-MFNet_A_Spatio-Temporal_Multi-Flow_Network_for_Frame_Interpolation_CVPR_2022_paper.html) [[arXiv]](https://arxiv.org/abs/2111.15483)

[^8]: Error-Aware Spatial Ensembles for Video Frame Interpolation [[arXiv]](https://arxiv.org/abs/2207.12305)

[^9]: FLAVR: Flow-Agnostic Video Representations for Fast Frame Interpolation [[WACV 2023]](https://openaccess.thecvf.com/content/WACV2023/html/Kalluri_FLAVR_Flow-Agnostic_Video_Representations_for_Fast_Frame_Interpolation_WACV_2023_paper.html) [[arXiv]](https://arxiv.org/abs/2012.08512)

[^10]: IFRNet: Intermediate Feature Refine Network for Efficient Frame Interpolation [[CVPR 2022]](https://openaccess.thecvf.com/content/CVPR2022/html/Kong_IFRNet_Intermediate_Feature_Refine_Network_for_Efficient_Frame_Interpolation_CVPR_2022_paper.html) [[arXiv]](https://arxiv.org/abs/2205.14620)

[^11]: Enhanced Bi-directional Motion Estimation for Video Frame Interpolation [[WACV 2023]](https://openaccess.thecvf.com/content/WACV2023/html/Jin_Enhanced_Bi-Directional_Motion_Estimation_for_Video_Frame_Interpolation_WACV_2023_paper.html) [[arXiv]](https://arxiv.org/abs/2206.08572)

[^12]: Real-Time Intermediate Flow Estimation for Video Frame Interpolation [[ECCV 2022]](https://www.ecva.net/papers/eccv_2022/papers_ECCV/html/95_ECCV_2022_paper.php) [[arXiv]](https://arxiv.org/abs/2011.06294)

[^13]: Asymmetric Bilateral Motion Estimation for Video Frame Interpolation [[ICCV 2021]](https://openaccess.thecvf.com/content/ICCV2021/html/Park_Asymmetric_Bilateral_Motion_Estimation_for_Video_Frame_Interpolation_ICCV_2021_paper.html) [[arXiv]](https://arxiv.org/abs/2108.06815)

[^14]: Enhancing Deformable Convolution based Video Frame Interpolation with Coarse-to-fine 3D CNN [[ICIP 2022]](https://ieeexplore.ieee.org/document/9897929) [[arXiv]](https://arxiv.org/abs/2202.07731)

[^15]: Softmax Splatting for Video Frame Interpolation [[CVPR 2020]](https://openaccess.thecvf.com/content_CVPR_2020/html/Niklaus_Softmax_Splatting_for_Video_Frame_Interpolation_CVPR_2020_paper.html) [[arXiv]](https://arxiv.org/abs/2003.05534)

[^17]: Deep Bayesian Video Frame Interpolation [[ECCV 2022]](https://www.ecva.net/papers/eccv_2022/papers_ECCV/html/1287_ECCV_2022_paper.php)

[^18]: A Unified Pyramid Recurrent Network for Video Frame Interpolation [[CVPR 2023]](https://openaccess.thecvf.com/content/CVPR2023/html/Jin_A_Unified_Pyramid_Recurrent_Network_for_Video_Frame_Interpolation_CVPR_2023_paper.html) [[arXiv]](https://arxiv.org/abs/2211.03456)

[^19]: AdaFNIO: Adaptive Fourier Neural Interpolation Operator for video frame interpolation [[arXiv]](https://arxiv.org/abs/2211.10791)

[^20]: H-VFI: Hierarchical Frame Interpolation for Videos with Large Motions [[arXiv]](https://arxiv.org/abs/2211.11309)

[^21]: Flow Guidance Deformable Compensation Network for Video Frame Interpolation [[TMM 2023]](https://ieeexplore.ieee.org/document/10164197) [[arXiv]](https://arxiv.org/abs/2211.12117)

[^22]: Extracting Motion and Appearance via Inter-Frame Attention for Efficient Video Frame Interpolation [[CVPR 2023]](https://openaccess.thecvf.com/content/CVPR2023/html/Zhang_Extracting_Motion_and_Appearance_via_Inter-Frame_Attention_for_Efficient_Video_CVPR_2023_paper.html) [[arXiv]](https://arxiv.org/abs/2303.00440)

[^23]: AdaPool: Exponential Adaptive Pooling for Information-Retaining Downsampling [[TIP 2022]](https://ieeexplore.ieee.org/document/9982650) [[arXiv]](https://arxiv.org/abs/2111.00772)

[^24]: CDFI: Compression-Driven Network Design for Frame Interpolation [[CVPR 2021]](https://openaccess.thecvf.com/content/CVPR2021/html/Ding_CDFI_Compression-Driven_Network_Design_for_Frame_Interpolation_CVPR_2021_paper.html) [[arXiv]](https://arxiv.org/abs/2103.10559)

[^25]: Multiple Video Frame Interpolation via Enhanced Deformable Separable Convolution [[TPAMI 2021]](https://ieeexplore.ieee.org/document/9501506) [[arXiv]](https://arxiv.org/abs/2006.08070)

[^26]: DRVI: Dual Refinement for Video Interpolation [[Access 2021]](https://ieeexplore.ieee.org/document/9513293)

[^27]: Exploring Discontinuity for Video Frame Interpolation [[CVPR 2023]](https://openaccess.thecvf.com/content/CVPR2023/html/Lee_Exploring_Discontinuity_for_Video_Frame_Interpolation_CVPR_2023_paper.html) [[arXiv]](https://arxiv.org/abs/2202.07291)

[^28]: Revisiting Adaptive Convolutions for Video Frame Interpolation [[WACV 2021]](https://openaccess.thecvf.com/content/WACV2021/html/Niklaus_Revisiting_Adaptive_Convolutions_for_Video_Frame_Interpolation_WACV_2021_paper.html) [[arXiv]](https://arxiv.org/abs/2011.01280)

[^29]: Locally Adaptive Structure and Texture Similarity for Image Quality Assessment [[MM 2021]](https://dl.acm.org/doi/10.1145/3474085.3475419) [[arXiv]](https://arxiv.org/abs/2110.08521)

[^30]: The Unreasonable Effectiveness of Deep Features as a Perceptual Metric [[CVPR 2018]](https://openaccess.thecvf.com/content_cvpr_2018/html/Zhang_The_Unreasonable_Effectiveness_CVPR_2018_paper.html) [[arXiv]](https://arxiv.org/abs/1801.03924)

[^31]: AMT: All-Pairs Multi-Field Transforms for Efficient Frame Interpolation [[CVPR 2023]](https://openaccess.thecvf.com/content/CVPR2023/html/Li_AMT_All-Pairs_Multi-Field_Transforms_for_Efficient_Frame_Interpolation_CVPR_2023_paper.html) [[arXiv]](https://arxiv.org/abs/2304.09790)

[^32]: Neighbor Correspondence Matching for Flow-based Video Frame Synthesis [[MM 2022]](https://dl.acm.org/doi/10.1145/3503161.3548163) [[arXiv]](https://arxiv.org/abs/2207.06763)

[^33]: Blur Interpolation Transformer for Real-World Motion from Blur [[CVPR 2023]](https://openaccess.thecvf.com/content/CVPR2023/html/Zhong_Blur_Interpolation_Transformer_for_Real-World_Motion_From_Blur_CVPR_2023_paper.html) [[arXiv]](https://arxiv.org/abs/2211.11423)

[^34]: DeMFI: Deep Joint Deblurring and Multi-Frame Interpolation with Flow-Guided Attentive Correlation and Recursive Boosting [[ECCV 2022]](https://www.ecva.net/papers/eccv_2022/papers_ECCV/html/4406_ECCV_2022_paper.php) [[arXiv]](https://arxiv.org/abs/2111.09985)

[^35]: Blurry Video Frame Interpolation [[CVPR 2020]](https://openaccess.thecvf.com/content_CVPR_2020/html/Shen_Blurry_Video_Frame_Interpolation_CVPR_2020_paper.html) [[arXiv]](https://arxiv.org/abs/2002.12259)

[^36]: Video Frame Interpolation with Densely Queried Bilateral Correlation [[IJCAI 2023]](https://www.ijcai.org/proceedings/2023/198) [[arXiv]](https://arxiv.org/abs/2304.13596)

[^37]: ALANET: Adaptive Latent Attention Network for Joint Video Deblurring and Interpolation [[MM 2020]](https://dl.acm.org/doi/10.1145/3394171.3413686) [[arXiv]](https://arxiv.org/abs/2009.01005)

[^38]: Video Frame Interpolation and Enhancement via Pyramid Recurrent Framework [[TIP 2020]](https://ieeexplore.ieee.org/document/9257179)

[^39]: Joint Video Multi-Frame Interpolation and Deblurring under Unknown Exposure Time [[CVPR 2023]](https://openaccess.thecvf.com/content/CVPR2023/html/Shang_Joint_Video_Multi-Frame_Interpolation_and_Deblurring_Under_Unknown_Exposure_Time_CVPR_2023_paper.html) [[arXiv]](https://arxiv.org/abs/2303.15043)

[^40]: Video Frame Interpolation without Temporal Priors [[NeurIPS 2020]](https://proceedings.neurips.cc/paper_files/paper/2020/hash/9a11883317fde3aef2e2432a58c86779-Abstract.html) [[arXiv]](https://arxiv.org/abs/2112.01161)

[^42]: Efficient Convolution and Transformer-Based Network for Video Frame Interpolation [[ICIP 2023]](https://ieeexplore.ieee.org/document/10222296) [[arXiv]](https://arxiv.org/abs/2307.06443)