https://github.com/MC-E/DragonDiffusion

ICLR 2024 (Spotlight)

- Host: GitHub

- URL: https://github.com/MC-E/DragonDiffusion

- Owner: MC-E

- License: apache-2.0

- Created: 2023-06-22T08:15:41.000Z (over 2 years ago)

- Default Branch: master

- Last Pushed: 2024-03-02T01:26:51.000Z (almost 2 years ago)

- Last Synced: 2024-10-30T23:35:53.310Z (about 1 year ago)

- Language: Python

- Homepage:

- Size: 10.5 MB

- Stars: 721

- Watchers: 41

- Forks: 22

- Open Issues: 21

Metadata Files:

- Readme: README.md

- License: LICENSE

# [DragonDiffusion](https://arxiv.org/abs/2307.02421) + [DiffEditor](https://arxiv.org/abs/2402.02583)

[Chong Mou](https://scholar.google.com/citations?user=SYQoDk0AAAAJ&hl=zh-CN),

[Xintao Wang](https://xinntao.github.io/),

Jiechong Song,

[Ying Shan](https://scholar.google.com/citations?user=4oXBp9UAAAAJ),

[Jian Zhang](https://jianzhang.tech/)

[Project Page](https://mc-e.github.io/project/DragonDiffusion/)

[DragonDiffusion paper (arXiv)](https://arxiv.org/abs/2307.02421)

[DiffEditor paper (arXiv)](https://arxiv.org/abs/2402.02583)

---

https://user-images.githubusercontent.com/54032224/302051504-dac634f3-85ef-4ff1-80a2-bd2805e067ea.mp4

## 🚩 **New Features/Updates**

- [2024/02/26] **DiffEditor** is accepted by CVPR 2024.

- [2024/02/05] Releasing the paper of **DiffEditor**.

- [2024/02/04] Releasing the code of **DragonDiffusion** and **DiffEditor**.

- [2024/01/15] **DragonDiffusion** is accepted by ICLR 2024 (**Spotlight**).

- [2023/07/06] Paper of **DragonDiffusion** is available [here](https://arxiv.org/abs/2307.02421).

---

# Introduction

**DragonDiffusion** is a tuning-free method for fine-grained image editing. Its core idea comes from [score-based diffusion](https://arxiv.org/abs/2011.13456). It supports a range of editing tasks, including object moving, object resizing, object appearance replacement, content dragging, and object pasting. **DiffEditor** further improves the editing accuracy and flexibility of DragonDiffusion.
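As background for the reference to score-based diffusion: in that framework, a condition can be imposed at sampling time by adding a guidance gradient to the unconditional score, which is why no fine-tuning of the model is needed. The first identity below is the standard Bayes decomposition of the conditional score. Reading the condition $y$ as an editing target and writing the guidance term through an energy function $\mathcal{E}$ is an illustrative assumption here, not a statement of the exact formulation used in this repository.

```latex
\nabla_{z_t} \log q(z_t \mid y)
  = \nabla_{z_t} \log q(z_t) + \nabla_{z_t} \log q(y \mid z_t)

% Assumption: the likelihood term is modelled by an energy function,
% \log q(y \mid z_t) = -\mathcal{E}(z_t; y) + \text{const}, which gives
\nabla_{z_t} \log q(z_t \mid y)
  = \nabla_{z_t} \log q(z_t) - \nabla_{z_t} \mathcal{E}(z_t; y)
```

Here $z_t$ is the noisy latent at step $t$. A lower energy means the latent agrees better with the target, so each sampling step is nudged toward the edit.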

# 🔥🔥🔥 Main Features

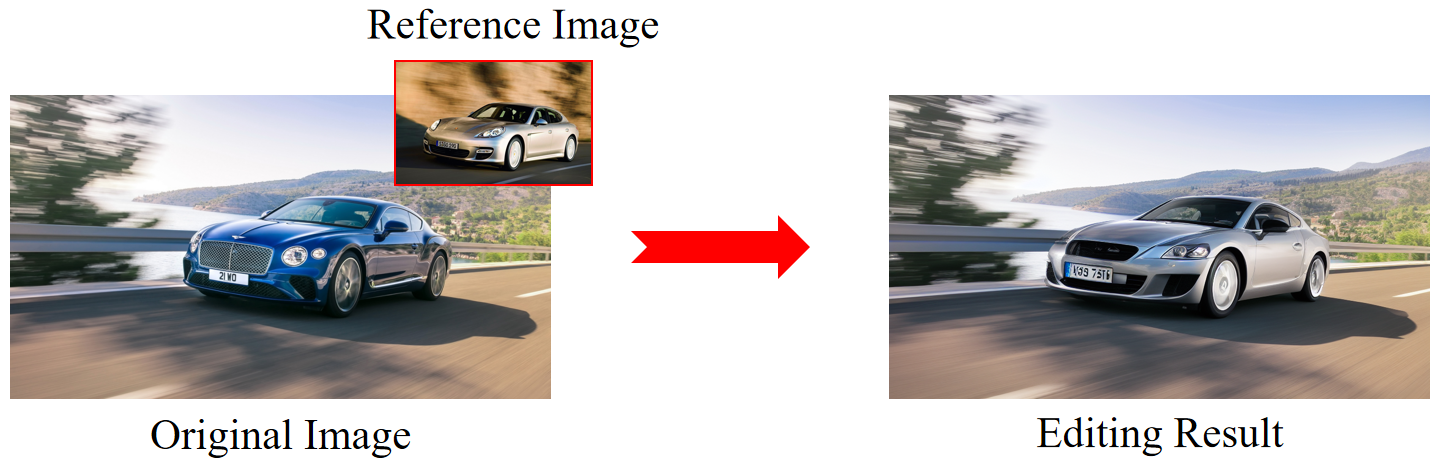

### **Appearance Modulation**

Appearance Modulation can change the appearance of an object in an image. The final appearance can be specified by a reference image.

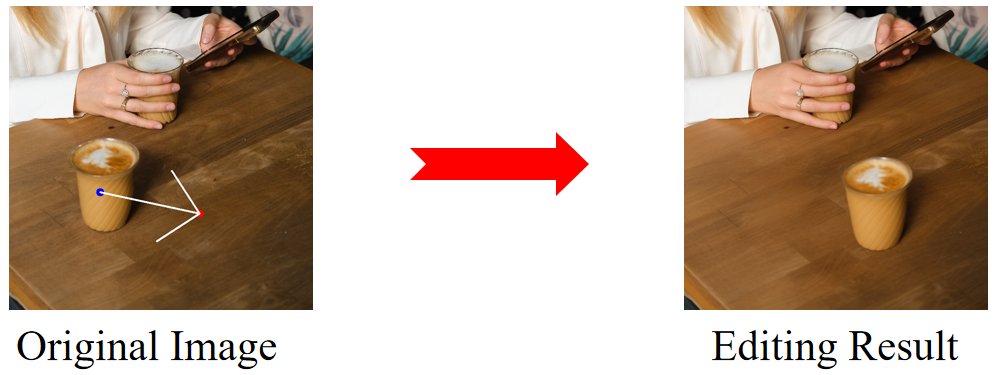

### **Object Moving & Resizing**

Object Moving & Resizing can move an object in the image to a specified location and change its size.

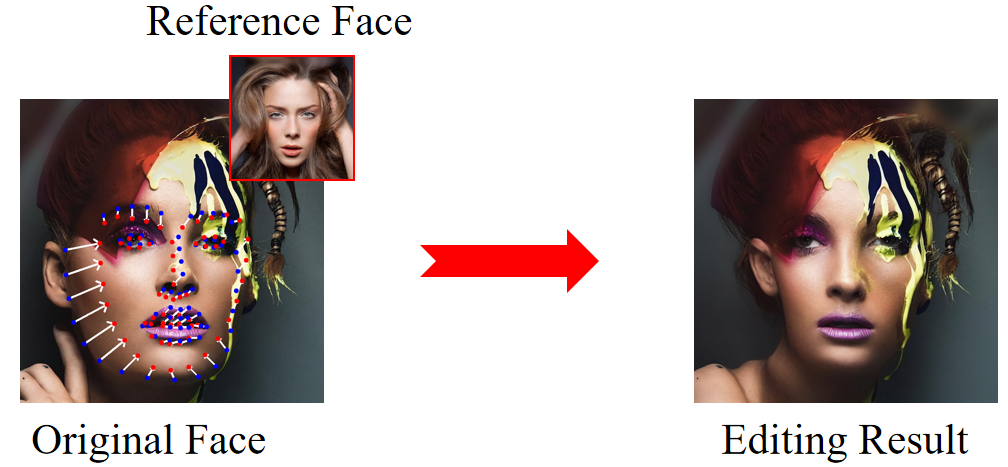

### **Face Modulation**

Face Modulation can transform the outline of one face into the outline of another reference face.

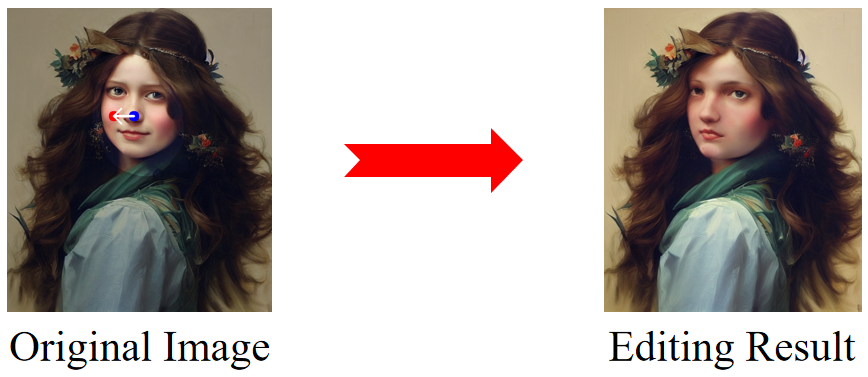

### **Content Dragging**

Content Dragging can perform image editing through point-to-point dragging.

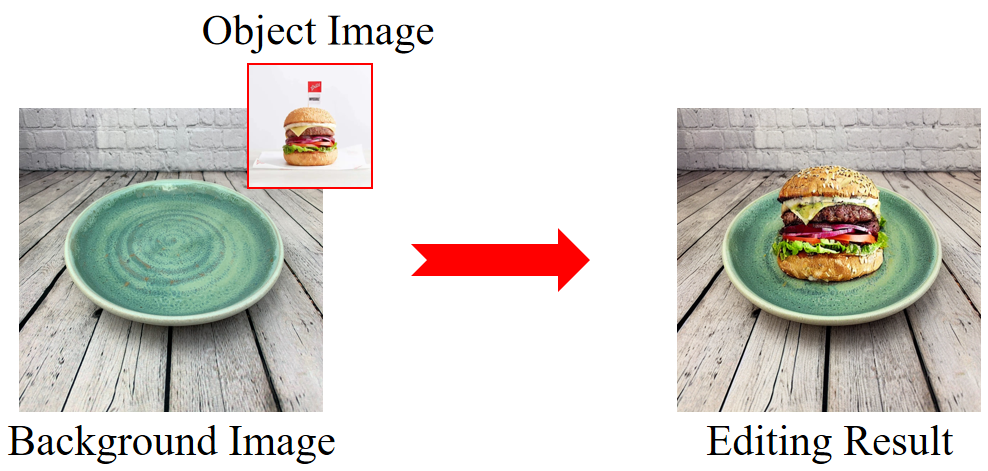

### **Object Pasting**

Object Pasting can paste a given object onto a background image.

# 🔧 Dependencies and Installation

- Python >= 3.8 (we recommend using [Anaconda](https://www.anaconda.com/download/#linux) or [Miniconda](https://docs.conda.io/en/latest/miniconda.html))

- [PyTorch >= 2.0.1](https://pytorch.org/)

```bash
pip install -r requirements.txt
pip install dlib==19.14.0
```
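Before launching the demo, it can help to confirm that the environment meets the minimums listed above. The following is a minimal sketch and not part of the repository: the helper names (`parse_version`, `meets_minimum`, `check_environment`) are hypothetical, and the script checks only the Python and PyTorch versions, not the packages in `requirements.txt`.

```python
import re
import sys
from importlib import metadata

# Minimums taken from the dependency list above.
MIN_PYTHON = (3, 8)
MIN_TORCH = (2, 0, 1)


def parse_version(text):
    """Turn a version string such as '2.0.1+cu118' into (2, 0, 1)."""
    parts = []
    for piece in text.split("+")[0].split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break  # stop at the first non-numeric component
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(found, minimum):
    """Compare two version tuples after padding the shorter one with zeros."""
    width = max(len(found), len(minimum))
    found = tuple(found) + (0,) * (width - len(found))
    minimum = tuple(minimum) + (0,) * (width - len(minimum))
    return found >= minimum


def check_environment():
    """Return a dict mapping each requirement to True or False."""
    report = {"python": meets_minimum(sys.version_info[:3], MIN_PYTHON)}
    try:
        torch_version = metadata.version("torch")
    except metadata.PackageNotFoundError:
        report["torch"] = False  # PyTorch is not installed
    else:
        report["torch"] = meets_minimum(parse_version(torch_version), MIN_TORCH)
    return report


if __name__ == "__main__":
    for name, ok in check_environment().items():
        status = "ok" if ok else "missing or below the documented minimum"
        print(f"{name}: {status}")
```

The installed PyTorch version is read from package metadata rather than by importing `torch`, so the check stays fast and still works when PyTorch is absent.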

# ⏬ Download Models

All models will be automatically downloaded. You can also choose to download manually from this [url](https://huggingface.co/Adapter/DragonDiffusion).
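For the manual route, one option is the `huggingface_hub` client, sketched below. This is an assumption-laden example, not the repository's own download logic: `repo_id_from_url` and `download_models` are hypothetical helpers, and the `models` target directory is a placeholder, since this README does not state where the code expects the weights.

```python
from urllib.parse import urlparse

# Model page linked in the section above.
MODEL_URL = "https://huggingface.co/Adapter/DragonDiffusion"


def repo_id_from_url(url):
    """Extract the 'owner/name' repo id from a Hugging Face model URL."""
    parts = [p for p in urlparse(url).path.split("/") if p]
    if len(parts) < 2:
        raise ValueError(f"not a model repository URL: {url}")
    return "/".join(parts[:2])


def download_models(target_dir="models"):
    """Download every file in the model repo into target_dir.

    target_dir is a placeholder; point it at the directory the
    application actually reads its checkpoints from.
    """
    # Imported lazily so repo_id_from_url works without huggingface_hub.
    from huggingface_hub import snapshot_download

    return snapshot_download(
        repo_id=repo_id_from_url(MODEL_URL),
        local_dir=target_dir,
    )


if __name__ == "__main__":
    print(download_models())
```

`snapshot_download` fetches the whole repository, which is convenient when it is unclear which individual files the demo needs.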

# 💻 How to Test

Inference requires at least `16GB` of GPU memory for editing a `768x768` image.
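A quick way to see whether a GPU is in the right range is to query its total memory through PyTorch. The sketch below is illustrative only: `has_enough_memory` and `gpu_total_bytes` are hypothetical helpers, "16GB" is read as decimal gigabytes, and the 10% tolerance is an assumption to account for cards marketed as 16 GB that report slightly less usable memory. Actual peak usage depends on the image size and the editing task.

```python
REQUIRED_GB = 16   # figure quoted above for a 768x768 image
TOLERANCE = 0.10   # assumed slack for memory the driver reserves


def has_enough_memory(total_bytes, required_gb=REQUIRED_GB, tolerance=TOLERANCE):
    """Return True if total_bytes is within tolerance of the required size."""
    return total_bytes >= required_gb * 1e9 * (1 - tolerance)


def gpu_total_bytes(index=0):
    """Return the total memory of a CUDA device in bytes, or None if unavailable."""
    try:
        import torch
    except ImportError:
        return None  # PyTorch is not installed
    if not torch.cuda.is_available():
        return None  # no usable CUDA device
    return torch.cuda.get_device_properties(index).total_memory


if __name__ == "__main__":
    total = gpu_total_bytes()
    if total is None:
        print("No CUDA device detected.")
    elif has_enough_memory(total):
        print(f"GPU memory looks sufficient: {total / 1e9:.1f} GB")
    else:
        print(f"GPU memory may be too small: {total / 1e9:.1f} GB")
```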

We provide a Gradio demo as a quick start:

```bash
python app.py
```

# Related Works

[1] Drag Your GAN: Interactive Point-based Manipulation on the Generative Image Manifold

[2] DragDiffusion: Harnessing Diffusion Models for Interactive Point-based Image Editing

[3] Emergent Correspondence from Image Diffusion

[4] Diffusion Self-Guidance for Controllable Image Generation

[5] IP-Adapter: Text Compatible Image Prompt Adapter for Text-to-Image Diffusion Models

# 🤗 Acknowledgements

We appreciate the foundational work done by [score-based diffusion](https://arxiv.org/abs/2011.13456) and [DragGAN](https://arxiv.org/abs/2305.10973).

# BibTeX

@article{mou2023dragondiffusion,
  title={Dragondiffusion: Enabling drag-style manipulation on diffusion models},
  author={Mou, Chong and Wang, Xintao and Song, Jiechong and Shan, Ying and Zhang, Jian},
  journal={arXiv preprint arXiv:2307.02421},
  year={2023}
}

@article{mou2023diffeditor,
  title={DiffEditor: Boosting Accuracy and Flexibility on Diffusion-based Image Editing},
  author={Mou, Chong and Wang, Xintao and Song, Jiechong and Shan, Ying and Zhang, Jian},
  journal={arXiv preprint arXiv:2402.02583},
  year={2024}
}