https://github.com/Sxela/ArcaneGAN

ArcaneGAN


- Host: GitHub

- URL: https://github.com/Sxela/ArcaneGAN

- Owner: Sxela

- License: mit

- Created: 2021-12-05T22:38:46.000Z (over 3 years ago)

- Default Branch: main

- Last Pushed: 2024-01-08T05:29:28.000Z (over 1 year ago)

- Last Synced: 2024-08-02T20:49:19.079Z (9 months ago)

- Size: 25.4 KB

- Stars: 652

- Watchers: 31

- Forks: 80

- Open Issues: 12


Metadata Files:

- Readme: README.md

- Funding: .github/FUNDING.yml

- License: LICENSE



# ArcaneGAN by [Alex Spirin](https://twitter.com/devdef)

[![][github-release-shield]][github-release-link]
[![][github-release-date-shield]][github-release-link]
[![][github-downloads-shield]][github-downloads-link]

[github-release-shield]: https://img.shields.io/github/v/release/Sxela/ArcaneGAN?style=flat&sort=semver
[github-release-link]: https://github.com/Sxela/ArcaneGAN/releases
[github-release-date-shield]: https://img.shields.io/github/release-date/Sxela/ArcaneGAN?style=flat
[github-downloads-shield]: https://img.shields.io/github/downloads/Sxela/ArcaneGAN/total?style=flat
[github-downloads-link]: https://github.com/Sxela/ArcaneGAN/releases

Photos: [Open in Colab](https://colab.research.google.com/drive/1r1hhciakk5wHaUn1eJk7TP58fV9mjy_W)

Videos: [Open in Colab](https://colab.research.google.com/drive/1ohKCiOwZrhM3pza4L93AHAfMkIkJ5YQF)

If you like what I'm doing, you can:

- follow me on [Twitter](https://twitter.com/devdef)

- check out my collections on [OpenSea](https://opensea.io/collection/ai-scrapers)

- tip me on [Patreon](https://www.patreon.com/sxela)

Thank you for being awesome!

**Changelog**

* 2022-04-04 Added a link to the image2image model training [notes](https://github.com/aarcosg/fastai-course-v3-notes/blob/master/refactored_by_topics/CNN_L7_gan_feature-loss.md)

* 2021-12-27 Added [colab for videos](https://colab.research.google.com/drive/1ohKCiOwZrhM3pza4L93AHAfMkIkJ5YQF)

* 2021-12-25 ArcaneGAN v0.4 is [live](https://github.com/Sxela/ArcaneGAN/releases/tag/v0.4)

* 2021-12-14 Added a [video demo](https://huggingface.co/spaces/sxela/ArcaneGAN-video) on Hugging Face

* 2021-12-12 ArcaneGAN v0.3 is [live](https://github.com/Sxela/ArcaneGAN/releases/tag/v0.3)

* 2021-12-09 Thanks to [ak92501](https://twitter.com/ak92501), we now have a [Hugging Face demo](https://huggingface.co/spaces/akhaliq/ArcaneGAN)

## ArcaneGAN v0.4

The main differences are:

- lighter styling (closer to original input)

- sharper result

- happier faces

- reduced childish eyes effect

- reduced stubble on feminine faces

- increased temporal stability on videos

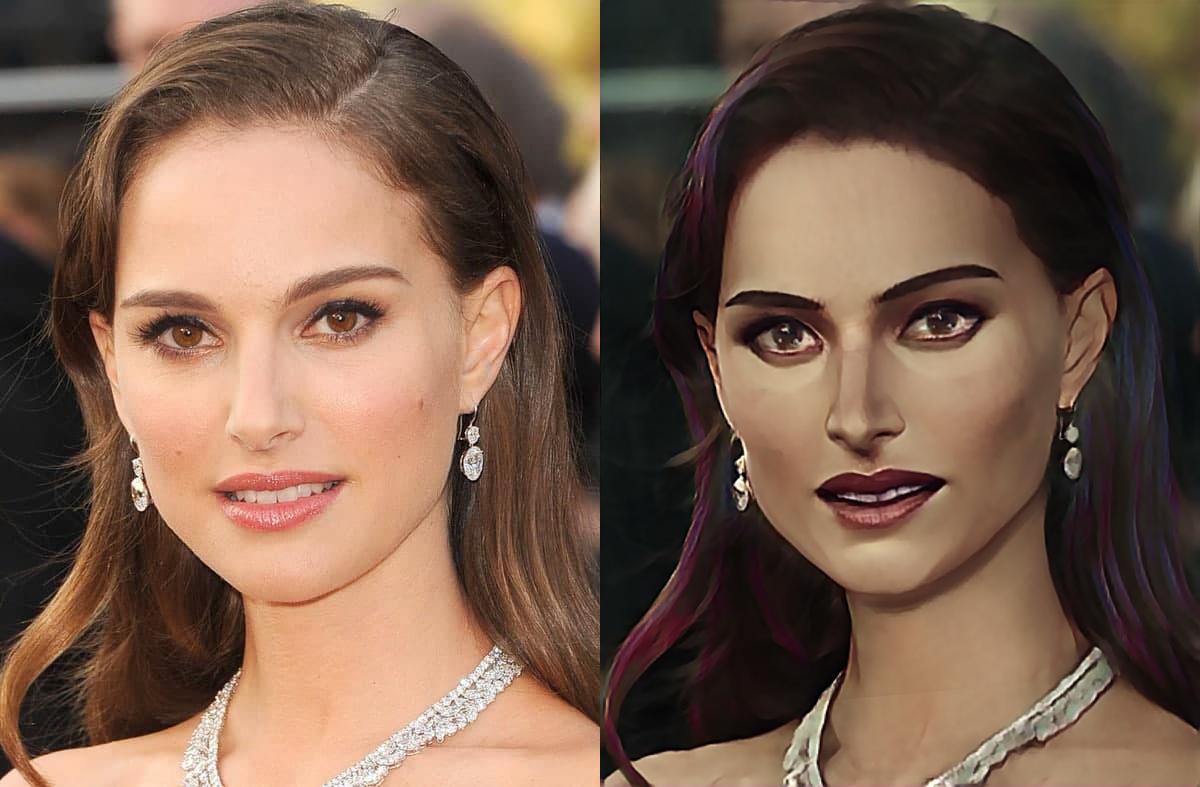

- reduced mouth\teeth artifacts### Image samples

v0.3 vs v0.4

### Video samples

https://user-images.githubusercontent.com/11751592/146966428-f4e27929-19dd-423f-a772-8aee709d2116.mp4

https://user-images.githubusercontent.com/11751592/146966462-6511998e-77f5-4fd2-8ad9-5709bf0cd172.mp4

## ArcaneGAN v0.3

Videos processed by the huggingface video inference colab.

https://user-images.githubusercontent.com/11751592/145702737-c02b8b00-ad30-4358-98bf-97c8ad7fefdf.mp4

https://user-images.githubusercontent.com/11751592/145702740-afd3377d-d117-467d-96ca-045e25d85ac6.mp4

### Image samples

Faces were enhanced via [GPEN](https://github.com/yangxy/GPEN) before applying the ArcaneGAN v0.3 filter.

## ArcaneGAN v0.2

The release is [here](https://github.com/Sxela/ArcaneGAN/releases/tag/v0.2)

## Implementation Details

The model is a PyTorch `*.jit` (TorchScript) file containing a fastai v1-flavored U-Net, trained on a paired dataset generated with a blended StyleGAN2.
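The sentence above packs two steps together: blending two StyleGAN2 generators, then rendering the same latent vectors through the original and the blended generator to obtain aligned (photo, stylized) pairs for U-Net training. Below is a minimal sketch of both steps under stated assumptions: blending is shown as resolution-based layer swapping (one common technique; the linked blending repo may do something different), weights are plain dicts keyed with NVlabs-style names such as `synthesis.b64.conv1.weight`, and `blend_weights`, `make_pairs`, and `swap_res` are hypothetical names, not part of this project.

```python
import random
import re
from typing import Any, Callable, Dict, Iterator, List, Sequence, Tuple

# Assumed parameter naming, as in NVlabs' StyleGAN2 code inside the stylegan3 repo:
# synthesis blocks are called "synthesis.b4", "synthesis.b8", ..., "synthesis.b1024".
_BLOCK_RE = re.compile(r"^synthesis\.b(\d+)\.")

Latent = List[float]


def blend_weights(
    low_res_src: Dict[str, Any], high_res_src: Dict[str, Any], swap_res: int
) -> Dict[str, Any]:
    """Hypothetical layer-swap blend: synthesis blocks at resolution <= swap_res come
    from low_res_src, finer blocks from high_res_src. Non-synthesis weights (e.g. the
    mapping network) stay with low_res_src; that choice is an assumption of this sketch."""
    if low_res_src.keys() != high_res_src.keys():
        raise ValueError("both generators must share one architecture")
    blended = {}
    for key, value in low_res_src.items():
        match = _BLOCK_RE.match(key)
        if match and int(match.group(1)) > swap_res:
            blended[key] = high_res_src[key]
        else:
            blended[key] = value
    return blended


def make_pairs(
    base_g: Callable[[Latent], Any],
    blended_g: Callable[[Latent], Any],
    seeds: Sequence[int],
    z_dim: int = 512,
) -> Iterator[Tuple[Any, Any]]:
    """Feed the same seeded latent z to both generators, yielding (input, target) pairs."""
    for seed in seeds:
        rng = random.Random(seed)
        z = [rng.gauss(0.0, 1.0) for _ in range(z_dim)]
        yield base_g(z), blended_g(z)
```

Sharing `z` is what makes the dataset "paired": both images tend to keep the same pose and composition, so the U-Net can learn a pixel-aligned image2image mapping without hand-made pairs. Which generator supplies the coarse layers is a design choice; swapping the two arguments of `blend_weights` gives the opposite mix.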

- Model architecture: [fastai v1 u-net](https://fastai1.fast.ai/vision.models.unet.html)

- StyleGAN2 implementation used: [stylegan3 repo](https://github.com/NVlabs/stylegan3)

- StyleGAN blending example: [stylegan3 blending](https://github.com/Sxela/stylegan3_blending)

- Paired image2image training: [fastai v1 superres notebook](https://github.com/aarcosg/fastai-course-v3-notes/blob/master/refactored_by_topics/CNN_L7_gan_feature-loss.md)
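The linked fastai v1 notes train the U-Net with a "feature loss": a pixel L1 term plus, for a few layers of a frozen VGG16-BN, an L1 term on the activations and an L1 term on their Gram matrices (the lesson uses layer weights 5, 15, 2 and scales the Gram term by `w**2 * 5e3`). Assuming ArcaneGAN's training follows that notebook (the README only links it), the loss can be sketched in plain Python as below. Real training computes it on tensors; here the VGG feature maps are passed in precomputed, the batch dimension is dropped, and the function names are illustrative.

```python
from typing import List, Sequence

# One feature map per layer, stored as [channels][height * width] (batch dim dropped).
FeatureMap = List[List[float]]


def l1(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute error (the lesson uses F.l1_loss, which averages by default)."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def flatten(m: FeatureMap) -> List[float]:
    return [v for row in m for v in row]


def gram_matrix(feat: FeatureMap) -> FeatureMap:
    """Channel-by-channel inner products, divided by c*h*w as in the fastai lesson."""
    c, hw = len(feat), len(feat[0])
    return [
        [sum(feat[i][k] * feat[j][k] for k in range(hw)) / (c * hw) for j in range(c)]
        for i in range(c)
    ]


def feature_loss(
    pred_pixels: Sequence[float],
    target_pixels: Sequence[float],
    pred_feats: Sequence[FeatureMap],
    target_feats: Sequence[FeatureMap],
    weights: Sequence[float] = (5.0, 15.0, 2.0),
    gram_scale: float = 5e3,
) -> float:
    """Pixel L1 + weighted feature L1 + weighted (w**2 * gram_scale) Gram-matrix L1."""
    loss = l1(pred_pixels, target_pixels)
    for fp, ft, w in zip(pred_feats, target_feats, weights):
        loss += w * l1(flatten(fp), flatten(ft))
        loss += w ** 2 * gram_scale * l1(flatten(gram_matrix(fp)), flatten(gram_matrix(ft)))
    return loss
```

The Gram term compares channel correlations rather than exact activations, which rewards matching texture and style even where the prediction is not pixel-identical to the target.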