https://github.com/WenjieDu/SAITS

The official PyTorch implementation of the paper "SAITS: Self-Attention-based Imputation for Time Series". A fast and state-of-the-art (SOTA) deep-learning neural network model for efficient time-series imputation (impute multivariate incomplete time series containing NaN missing data/values with machine learning). https://arxiv.org/abs/2202.08516

- Host: GitHub

- URL: https://github.com/WenjieDu/SAITS

- Owner: WenjieDu

- License: mit

- Created: 2021-12-07T14:57:37.000Z (almost 4 years ago)

- Default Branch: main

- Last Pushed: 2024-04-25T10:10:15.000Z (over 1 year ago)

- Last Synced: 2024-05-21T11:48:32.908Z (over 1 year ago)

- Topics: attention, attention-mechanism, deep-learning, imputation, imputation-model, impute, incomplete-data, incomplete-time-series, interpolation, irregular-sampling, machine-learning, missing-values, partially-observed, partially-observed-data, partially-observed-time-series, pytorch, self-attention, time-series, time-series-imputation, transformer

- Language: Python

- Homepage: https://doi.org/10.1016/j.eswa.2023.119619

- Size: 587 KB

- Stars: 271

- Watchers: 5

- Forks: 47

- Open Issues: 1

Metadata Files:

- Readme: README.md

- License: LICENSE

- Citation: CITATION.cff

Awesome Lists containing this project

- awesome-time-series - [code

README

> [!TIP]

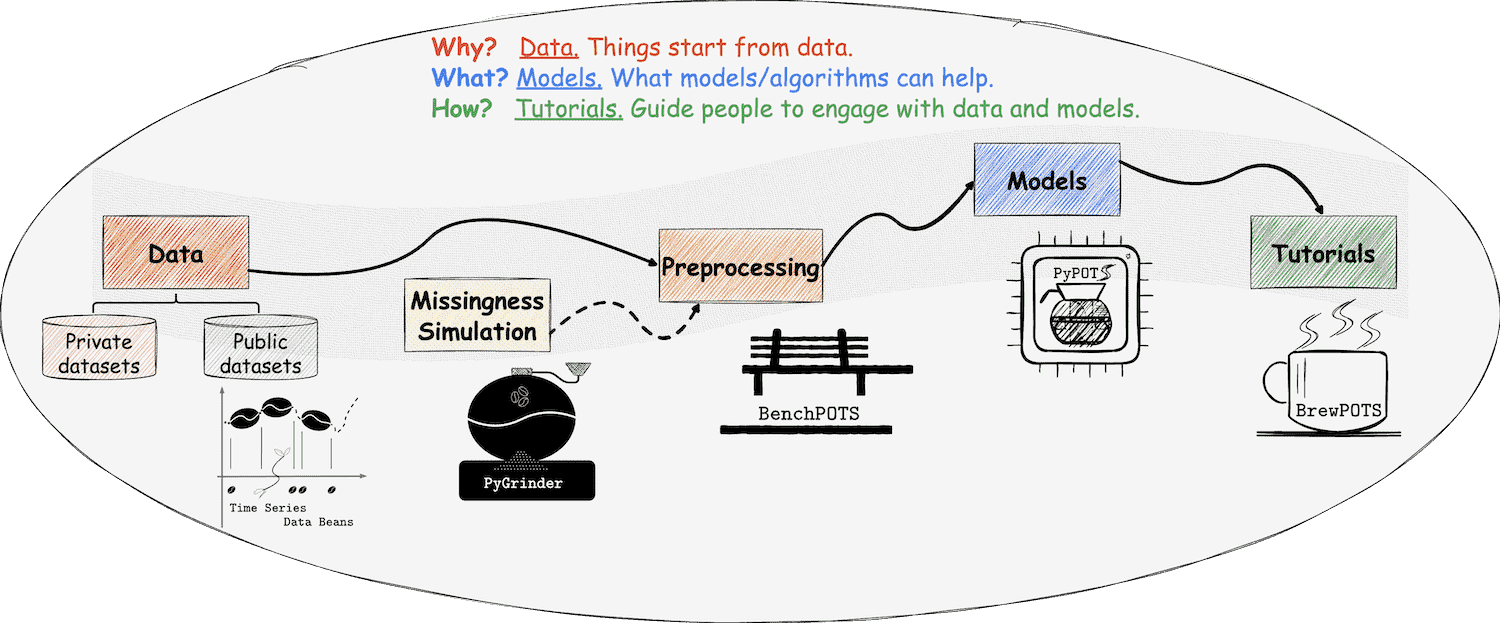

> **[Updates in Jun 2024]** 😎 The first comprehensive time-series imputation benchmark paper,
> [TSI-Bench: Benchmarking Time Series Imputation](https://arxiv.org/abs/2406.12747), is now publicly available.
> The code is open source in the repo [Awesome_Imputation](https://github.com/WenjieDu/Awesome_Imputation).
> With nearly 35,000 experiments, we provide a comprehensive benchmarking study on 28 imputation methods, 3 missing patterns (points, sequences, blocks),
> various missing rates, and 8 real-world datasets.

>

> **[Updates in May 2024]** 🔥 We applied SAITS embedding and training strategies to **iTransformer, FiLM, FreTS, Crossformer, PatchTST, DLinear, ETSformer, FEDformer,
> Informer, Autoformer, Non-stationary Transformer, Pyraformer, Reformer, SCINet, RevIN, Koopa, MICN, TiDE, and StemGNN** in [PyPOTS](https://github.com/WenjieDu/PyPOTS)
> to make them applicable to the time-series imputation task.

>

> **[Updates in Feb 2024]** 🎉 Our survey paper [Deep Learning for Multivariate Time Series Imputation: A Survey](https://arxiv.org/abs/2402.04059) has been released on arXiv.
> We comprehensively review the literature of the state-of-the-art deep-learning imputation methods for time series,
> provide a taxonomy for them, and discuss the challenges and future directions in this field.

**‼️Kind reminder: This document answers many common questions, so please read it before you run the code.**

This is the official code repository for the paper [SAITS: Self-Attention-based Imputation for Time Series](https://doi.org/10.1016/j.eswa.2023.119619)

(preprint on arXiv is [here](https://arxiv.org/abs/2202.08516)), which has been accepted by the journal

*[Expert Systems with Applications (ESWA)](https://www.sciencedirect.com/journal/expert-systems-with-applications)*

[2022 IF 8.665, CiteScore 12.2, JCR-Q1, CAS-Q1, CCF-C]. You may not have heard of ESWA,

but it was ranked 1st in Google Scholar among the top publications in Artificial Intelligence in 2016

([info source](https://www.sciencedirect.com/journal/expert-systems-with-applications/about/news#expert-systems-with-applications-is-currently-ranked-no1-in)), and it is still the top-ranked AI journal according to Google Scholar metrics

([here is the current ranking list](https://scholar.google.com/citations?view_op=top_venues&hl=en&vq=eng_artificialintelligence) FYI).

SAITS is the first work applying pure self-attention without any recursive design in the algorithm for general time series imputation.

You can treat it as a validated framework for time series imputation. For example, we've integrated 2️⃣0️⃣ forecasting models into PyPOTS by adapting the SAITS framework.

More generally, you can use it for sequence imputation. Besides, the code here is open source under the MIT license.

Therefore, you're welcome to modify the SAITS code for your own research purpose and domain applications.

Of course, it probably needs a bit of modification in the model structure or loss functions for specific scenarios or data input.

And this is [an incomplete list](https://scholar.google.com/scholar?hl=en&as_sdt=0%2C5&as_ylo=2022&q=%E2%80%9CSAITS%E2%80%9D+%22time+series%22) of scientific research referencing SAITS in their papers.

🤗 Please [cite SAITS](https://github.com/WenjieDu/SAITS#-citing-saits) in your publications if it helps with your work.

Please star🌟 this repo to help others notice SAITS if you think it is useful.

It really means a lot to our open-source research. Thank you!

BTW, you may also like [PyPOTS](https://github.com/WenjieDu/PyPOTS) for easily modeling your partially-observed time-series datasets.

> [!IMPORTANT]

>

> **📣 Attention please:**

>

> SAITS is now available in [PyPOTS](https://github.com/WenjieDu/PyPOTS), a Python toolbox for data mining on POTS (Partially-Observed Time Series).

> An example of training SAITS for imputing dataset PhysioNet-2012 is shown below. With [PyPOTS](https://github.com/WenjieDu/PyPOTS), easy peasy! 😉

``` python
import numpy as np
from sklearn.preprocessing import StandardScaler
from pygrinder import mcar, calc_missing_rate
from benchpots.datasets import preprocess_physionet2012

data = preprocess_physionet2012(subset='set-a', rate=0.1)  # Our ecosystem libs will automatically download and extract it
train_X, val_X, test_X = data["train_X"], data["val_X"], data["test_X"]
print(train_X.shape)  # (n_samples, n_steps, n_features)
print(val_X.shape)  # samples (n_samples) in train set and val set are different, but they have the same sequence len (n_steps) and feature dim (n_features)
print(f"We have {calc_missing_rate(train_X):.1%} values missing in train_X")

train_set = {"X": train_X}  # in training set, simply put the incomplete time series into it
val_set = {
    "X": val_X,
    "X_ori": data["val_X_ori"],  # in validation set, we need ground truth for evaluation and picking the best model checkpoint
}
test_set = {"X": test_X}  # in test set, only give the testing incomplete time series for model to impute
test_X_ori = data["test_X_ori"]  # test_X_ori bears ground truth for evaluation
indicating_mask = np.isnan(test_X) ^ np.isnan(test_X_ori)  # mask indicates the values that are missing in X but not in X_ori, i.e. where the gt values are

from pypots.imputation import SAITS  # import the model you want to use
from pypots.nn.functional import calc_mae

saits = SAITS(n_steps=train_X.shape[1], n_features=train_X.shape[2], n_layers=2, d_model=256, n_heads=4, d_k=64, d_v=64, d_ffn=128, dropout=0.1, epochs=5)
saits.fit(train_set, val_set)  # train the model on the dataset
imputation = saits.impute(test_set)  # impute the originally-missing values and artificially-missing values
mae = calc_mae(imputation, np.nan_to_num(test_X_ori), indicating_mask)  # calculate mean absolute error on the ground truth (artificially-missing values)
saits.save("save_it_here/saits_physionet2012.pypots")  # save the model for future use
saits.load("save_it_here/saits_physionet2012.pypots")  # reload the serialized model file for following imputation or training
```
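The evaluation step in the example above (the XOR-based `indicating_mask` and the masked MAE) can be tried without downloading any dataset. The following is a minimal NumPy-only sketch, not PyPOTS code: `mcar_mask` and `masked_mae` are hypothetical helpers written for illustration, standing in for the roles that `pygrinder.mcar` and `calc_mae` play above, and the toy array and the zero-fill "imputation" are made up.

```python
import numpy as np


def mcar_mask(X, rate, rng):
    """Hypothetical helper: hide `rate` of the observed values completely at random."""
    X = X.copy()
    observed = np.flatnonzero(~np.isnan(X))      # flat indices of observed entries
    n_hide = int(round(rate * observed.size))
    hidden = rng.choice(observed, size=n_hide, replace=False)
    X.flat[hidden] = np.nan
    return X


def masked_mae(prediction, target, mask):
    """Hypothetical helper: mean absolute error over positions where mask is True."""
    mask = mask.astype(bool)
    return np.abs(prediction - target)[mask].sum() / max(mask.sum(), 1)


rng = np.random.default_rng(0)
X_ori = rng.normal(size=(4, 6, 3))               # (n_samples, n_steps, n_features)
X_ori[0, 0, 0] = np.nan                          # an originally-missing value (no ground truth)
X = mcar_mask(X_ori, rate=0.2, rng=rng)          # artificially hide 20% of the observed values

# True only where a value is missing in X but present in X_ori,
# i.e. exactly the positions that have ground truth to evaluate against.
indicating_mask = np.isnan(X) ^ np.isnan(X_ori)

# Stand-in "imputation": fill every missing value with zero.
imputation = np.nan_to_num(X)
mae = masked_mae(imputation, np.nan_to_num(X_ori), indicating_mask)
print(f"{indicating_mask.sum()} held-out values, MAE = {mae:.3f}")
```

Because the originally-missing entry is NaN in both arrays, the XOR excludes it, so the error is measured only on values whose ground truth is known.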

☕️ Welcome to the universe of PyPOTS. Enjoy it and have fun!

## ❖ Motivation and Performance

⦿ **`Motivation`**: SAITS is developed primarily to help overcome the drawbacks (slow speed, memory constraints, and compounding error)

of RNN-based imputation models and to obtain the state-of-the-art (SOTA) imputation accuracy on partially-observed time series.

⦿ **`Performance`**: SAITS outperforms [BRITS](https://papers.nips.cc/paper/2018/hash/734e6bfcd358e25ac1db0a4241b95651-Abstract.html)

by **12% ∼ 38%** in MAE (mean absolute error) and achieves **2.0 ∼ 2.6** times faster training speed.

Furthermore, SAITS outperforms Transformer (trained by our joint-optimization approach) by **2% ∼ 19%** in MAE with a

more efficient model structure (to obtain comparable performance, SAITS needs only **15% ∼ 30%** of Transformer's parameters).

Compared to another SOTA self-attention imputation model [NRTSI](https://github.com/lupalab/NRTSI), SAITS achieves

**7% ∼ 39%** smaller mean squared error (above 20% in nine out of sixteen cases), while needing far

fewer parameters and less imputation time in practice.

Please refer to our [full paper](https://arxiv.org/pdf/2202.08516.pdf) for more details about SAITS' performance.

## ❖ Brief Graphical Illustration of Our Methodology

Here we only show the two main components of our method: the joint-optimization training approach and SAITS structure.

For the detailed description and explanation, please read our full paper `Paper_SAITS.pdf` in this repo

or [on arXiv](https://arxiv.org/pdf/2202.08516.pdf).

Fig. 1: Training approach
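As a rough illustration of the joint-optimization idea, the paper pairs a reconstruction task on observed values (ORT) with an imputation task on artificially masked values (MIT). The NumPy sketch below shows how such a combined loss could be computed. It is not the repo's training code: the function names, the equal weights `w_ort` and `w_mit`, the `hide_rate`, and the toy `mean_fill` "model" are all assumptions made for the example, so please consult the paper for the exact formulation.

```python
import numpy as np


def masked_mae(pred, target, mask):
    """Mean absolute error over the positions where the boolean mask is True."""
    return float(np.abs(pred - target)[mask].mean()) if mask.any() else 0.0


def joint_loss(model, X, hide_rate, rng, w_ort=1.0, w_mit=1.0):
    """Illustrative joint objective: ORT on visible values plus MIT on hidden ones."""
    observed = ~np.isnan(X)
    # Artificially hide a random share of the observed values for the MIT.
    hidden = observed & (rng.random(X.shape) < hide_rate)
    visible = observed & ~hidden
    target = np.nan_to_num(X)                    # NaNs -> 0; they are never scored
    X_in = np.where(visible, target, 0.0)        # the model only sees visible values
    recon = model(X_in, visible)
    ort = masked_mae(recon, target, visible)     # reconstruct what was shown
    mit = masked_mae(recon, target, hidden)      # impute what was hidden
    return w_ort * ort + w_mit * mit, ort, mit


def mean_fill(X_in, visible):
    """Toy stand-in for a network: fill unseen entries with per-feature means."""
    means = X_in.sum(axis=(0, 1)) / np.maximum(visible.sum(axis=(0, 1)), 1)
    return np.where(visible, X_in, means)


rng = np.random.default_rng(0)
X = rng.normal(loc=1.0, size=(8, 10, 3))         # (n_samples, n_steps, n_features)
X[rng.random(X.shape) < 0.1] = np.nan            # originally-missing values
total, ort, mit = joint_loss(mean_fill, X, hide_rate=0.2, rng=rng)
print(f"ORT = {ort:.3f}, MIT = {mit:.3f}, total = {total:.3f}")
```

The toy model copies visible values through unchanged, so its ORT term is zero and only the MIT term is informative. A trained network would receive gradients from both terms.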

Fig. 2: SAITS structure
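The SAITS structure is built on diagonally-masked self-attention, as described in the paper. The snippet below is only a single-head NumPy illustration of that masking idea, not the PyTorch implementation in `modeling/SA_models.py`. The weight matrices and sizes are arbitrary assumptions, and the full model has further components that are omitted here.

```python
import numpy as np


def diagonally_masked_self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention where no step attends to itself."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v              # (n_steps, d_k) each
    scores = Q @ K.T / np.sqrt(K.shape[-1])          # (n_steps, n_steps)
    np.fill_diagonal(scores, -np.inf)                # diagonal mask: block self-attention
    scores -= scores.max(axis=-1, keepdims=True)     # subtract row max for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)   # softmax over the other time steps
    return weights @ V, weights


rng = np.random.default_rng(0)
n_steps, n_features, d_k = 5, 3, 4                   # arbitrary toy sizes
X = rng.normal(size=(n_steps, n_features))
W_q, W_k, W_v = (rng.normal(size=(n_features, d_k)) for _ in range(3))
output, weights = diagonally_masked_self_attention(X, W_q, W_k, W_v)
print(weights.round(2))                              # zeros on the diagonal
```

All time steps are processed in one matrix product with no recurrence, and the zeroed diagonal forces each step's representation to be estimated from the other steps.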

## ❖ Citing SAITS

If you find SAITS helpful to your work, please cite our paper as below,

⭐️star this repository, and recommend it to others who you think may need it. 🤗 Thank you!

```bibtex

@article{du2023saits,

title = {{SAITS: Self-Attention-based Imputation for Time Series}},

journal = {Expert Systems with Applications},

volume = {219},

pages = {119619},

year = {2023},

issn = {0957-4174},

doi = {10.1016/j.eswa.2023.119619},

url = {https://arxiv.org/abs/2202.08516},

author = {Wenjie Du and David Cote and Yan Liu},

}

```

or

> Wenjie Du, David Cote, and Yan Liu.

> SAITS: Self-Attention-based Imputation for Time Series.

> Expert Systems with Applications, 219:119619, 2023.

### 😎 Our latest survey and benchmarking research on time-series imputation may also be useful to your work:

```bibtex

@article{du2024tsibench,

title={TSI-Bench: Benchmarking Time Series Imputation},

author={Wenjie Du and Jun Wang and Linglong Qian and Yiyuan Yang and Fanxing Liu and Zepu Wang and Zina Ibrahim and Haoxin Liu and Zhiyuan Zhao and Yingjie Zhou and Wenjia Wang and Kaize Ding and Yuxuan Liang and B. Aditya Prakash and Qingsong Wen},

journal={arXiv preprint arXiv:2406.12747},

year={2024}

}

```

```bibtex

@article{wang2024deep,

title={Deep Learning for Multivariate Time Series Imputation: A Survey},

author={Jun Wang and Wenjie Du and Wei Cao and Keli Zhang and Wenjia Wang and Yuxuan Liang and Qingsong Wen},

journal={arXiv preprint arXiv:2402.04059},

year={2024}

}

```

### 🔥 In case you use PyPOTS in your research, please also cite the following paper:

``` bibtex

@article{du2023pypots,

title={{PyPOTS: a Python toolbox for data mining on Partially-Observed Time Series}},

author={Wenjie Du},

journal={arXiv preprint arXiv:2305.18811},

year={2023},

}

```

or

> Wenjie Du.

> PyPOTS: a Python toolbox for data mining on Partially-Observed Time Series.

> arXiv, abs/2305.18811, 2023.

## ❖ Repository Structure

The implementation of SAITS is in dir [`modeling`](https://github.com/WenjieDu/SAITS/blob/main/modeling/SA_models.py).

We give configurations of our models in dir [`configs`](https://github.com/WenjieDu/SAITS/tree/main/configs), and provide

the dataset links and preprocessing scripts in dir [`dataset_generating_scripts`](https://github.com/WenjieDu/SAITS/tree/main/dataset_generating_scripts).

Dir [`NNI_tuning`](https://github.com/WenjieDu/SAITS/tree/main/NNI_tuning) contains the hyper-parameter searching configurations.

## ❖ Development Environment

All dependencies of our development environment are listed in file [`conda_env_dependencies.yml`](https://github.com/WenjieDu/SAITS/blob/main/conda_env_dependencies.yml).

You can quickly create a usable Python environment with the conda command `conda env create -f conda_env_dependencies.yml`.

## ❖ Datasets

To download and generate the datasets, please check out the scripts in

dir [`dataset_generating_scripts`](https://github.com/WenjieDu/SAITS/tree/main/dataset_generating_scripts).

## ❖ Quick Run

Generate the dataset you need first. To do so, please check out the generating scripts in

dir [`dataset_generating_scripts`](https://github.com/WenjieDu/SAITS/tree/main/dataset_generating_scripts).

After data generation, train and test your model, for example,

```shell

# create a dir to save logs and results

mkdir NIPS_results

# train a model

nohup python run_models.py \

--config_path configs/PhysioNet2012_SAITS_best.ini \

> NIPS_results/PhysioNet2012_SAITS_best.out &

# during training, you can run the command below to read the training log

less NIPS_results/PhysioNet2012_SAITS_best.out

# after training, pick the best model and modify the path of the model for testing in the config file, then run the below command to test the model

python run_models.py \

--config_path configs/PhysioNet2012_SAITS_best.ini \

--test_mode

```

❗️Note that dataset paths and saving dirs may differ on your machine, so please check them in the configuration files.
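One way to check those paths before launching a run is to scan a config file for path-like options that point nowhere. The following is a minimal sketch using only the standard library. `find_missing_paths` is a hypothetical helper, and the section and option names in the demo file are invented. It assumes the `.ini` files can be read by `configparser`, and it checks values exactly as written, without interpolation.

```python
import configparser
import os
import tempfile


def find_missing_paths(config_file, keywords=("path", "dir")):
    """Return (section, option, value) for path-like options whose value does not exist."""
    parser = configparser.ConfigParser(interpolation=None)
    if not parser.read(config_file):
        raise FileNotFoundError(config_file)
    missing = []
    for section in parser.sections():
        for option, value in parser.items(section):
            looks_like_path = any(k in option.lower() for k in keywords)
            if looks_like_path and value and not os.path.exists(value):
                missing.append((section, option, value))
    return missing


# Demo on a throwaway config; the section and option names are made up.
with tempfile.TemporaryDirectory() as tmp:
    cfg_path = os.path.join(tmp, "demo.ini")
    with open(cfg_path, "w") as f:
        f.write("[dataset]\ndataset_path = /no/such/dataset\n")
        f.write(f"[file_path]\nresult_saving_base_dir = {tmp}\n")
    demo_missing = find_missing_paths(cfg_path)

for section, option, value in demo_missing:
    print(f"[{section}] {option} -> {value} does not exist")
```

To check a real run, pass the config you intend to use, for example `find_missing_paths("configs/PhysioNet2012_SAITS_best.ini")`. Saving dirs that the code creates on the fly may be reported as missing, so treat the output as a hint rather than an error.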

## ❖ Acknowledgments

Thanks to Ciena, Mitacs, and NSERC (Natural Sciences and Engineering Research Council of Canada) for funding support.

Thanks to all our reviewers for helping improve the quality of this paper.

Thanks to Ciena for providing computing resources.

And thank you all for your attention to this work.

### ✨Stars/forks/issues/PRs are all welcome!

👏 View the [stargazers](https://github.com/WenjieDu/SAITS/stargazers) and [forkers](https://github.com/WenjieDu/SAITS/network/members) of this repo.

## ❖ Last but Not Least

If you have any additional questions or are interested in collaborating,

please take a look at [my GitHub profile](https://github.com/WenjieDu) and feel free to contact me 😃.