https://github.com/abacaj/mpt-30b-inference

Run inference on MPT-30B using CPU

- Host: GitHub

- URL: https://github.com/abacaj/mpt-30b-inference

- Owner: abacaj

- License: mit

- Created: 2023-06-26T05:30:01.000Z (over 2 years ago)

- Default Branch: main

- Last Pushed: 2023-06-30T19:18:55.000Z (over 2 years ago)

- Last Synced: 2025-03-29T01:15:14.593Z (6 months ago)

- Topics: ctransformers, ggml, mpt-30b

- Language: Python

- Homepage:

- Size: 3.93 MB

- Stars: 575

- Watchers: 13

- Forks: 94

- Open Issues: 6

Metadata Files:

- Readme: README.md

- License: LICENSE

README

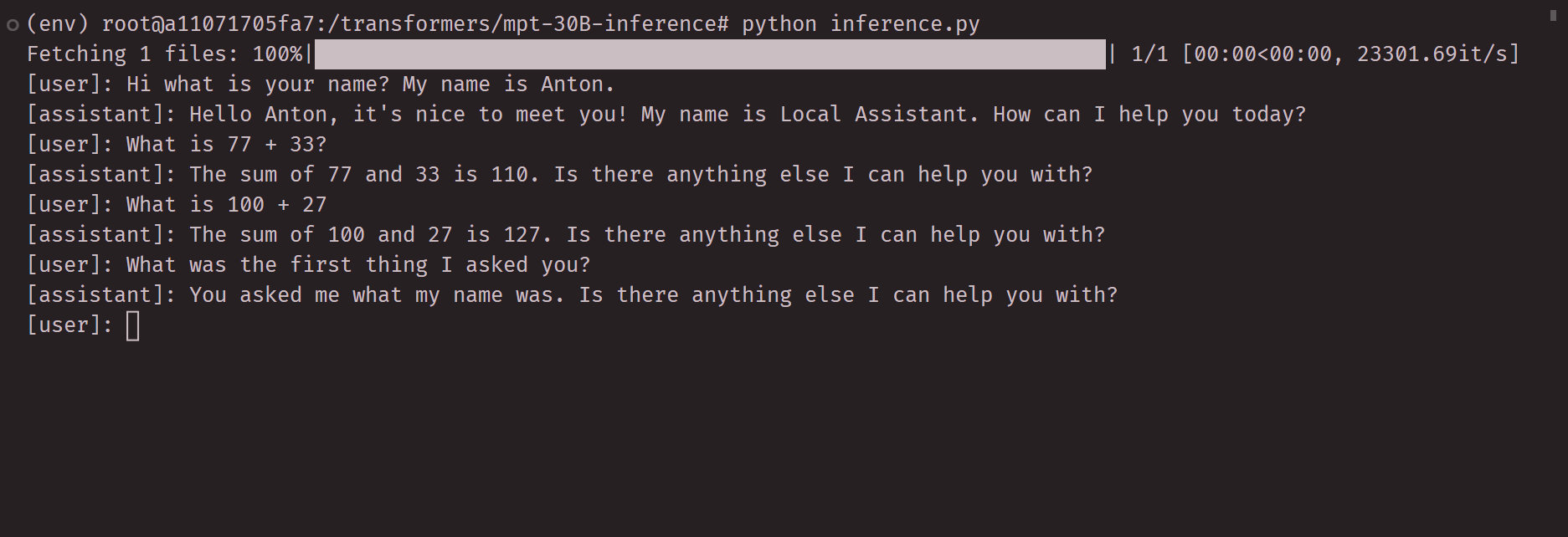

# MPT 30B inference code using CPU

Run inference on the latest MPT-30B model using your CPU. This inference code uses a [ggml](https://github.com/ggerganov/ggml) quantized model. To run the model, we'll use [ctransformers](https://github.com/marella/ctransformers), a library that provides Python bindings to ggml.

The latest commit adds turn-style chat with history.

Video of initial demo:

[Inference Demo](https://github.com/abacaj/mpt-30B-inference/assets/7272343/486fc9b1-8216-43cc-93c3-781677235502)
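
Turn-style chat with history comes down to re-sending every earlier turn as part of the next prompt. Below is a minimal sketch of that idea, not the repository's actual code. It assumes ChatML-style markers (`<|im_start|>` and `<|im_end|>`), which chat-tuned MPT variants commonly use; the `Turn` class and `build_prompt` function are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import List

# Assumption: ChatML-style turn markers. Check the model card of the
# weights you download before relying on this format.
IM_START = "<|im_start|>"
IM_END = "<|im_end|>"


@dataclass
class Turn:
    role: str     # "system", "user", or "assistant"
    content: str  # text of the turn


def build_prompt(history: List[Turn]) -> str:
    """Render all past turns, then open an assistant turn for the model to complete."""
    parts = [f"{IM_START}{turn.role}\n{turn.content}{IM_END}\n" for turn in history]
    parts.append(f"{IM_START}assistant\n")
    return "".join(parts)


history = [
    Turn("system", "You are a helpful assistant."),
    Turn("user", "What is ggml?"),
]
prompt = build_prompt(history)
print(prompt)
```

After each reply, append the model's output as an `assistant` turn and the next user message as a `user` turn, then rebuild the prompt. The history grows with every exchange, so a long conversation will eventually need older turns trimmed to fit the context window.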

## Requirements

I recommend using Docker for this model; it will make everything easier for you. Minimum spec: a system with 32GB of RAM. Python 3.10 is recommended.
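
If you are not using Docker, a quick preflight check can catch an unsuitable machine before the large download. The sketch below is an illustration only and is not part of this repository. It assumes a POSIX system where `os.sysconf` reports physical memory, and the 10% slack is an arbitrary choice to allow for machines sold as "32GB" that report slightly less.

```python
import os
import sys
from typing import Optional

MIN_RAM_GB = 32  # minimum stated in the requirements


def total_ram_gb() -> Optional[float]:
    """Return physical RAM in GiB, or None where os.sysconf cannot report it."""
    try:
        page_size = os.sysconf("SC_PAGE_SIZE")
        page_count = os.sysconf("SC_PHYS_PAGES")
    except (AttributeError, ValueError, OSError):
        return None  # e.g. Windows, where os.sysconf is unavailable
    return page_size * page_count / 1024**3


def ram_is_sufficient(ram_gb: float, minimum_gb: float = MIN_RAM_GB,
                      slack: float = 0.1) -> bool:
    """True if RAM meets the minimum, allowing a small slack (assumed 10%)."""
    return ram_gb >= minimum_gb * (1 - slack)


def python_is_recommended(version=sys.version_info) -> bool:
    """True if the interpreter is the recommended Python 3.10."""
    return (version[0], version[1]) == (3, 10)


ram = total_ram_gb()
if ram is None:
    print("Could not determine RAM on this platform.")
else:
    print(f"RAM: {ram:.1f} GiB, sufficient: {ram_is_sufficient(ram)}")
print(f"Python 3.10: {python_is_recommended()}")
```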

## Tested working on

I'll post some numbers for these two CPUs later.

- AMD Epyc 7003 series CPU

- AMD Ryzen 9 5950X CPU

## Setup

First create a venv.

```sh
python -m venv env && source env/bin/activate
```

Next install dependencies.

```sh
pip install -r requirements.txt
```

Next download the quantized model weights (about 19GB).

```sh
python download_model.py
```
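
Because the weights are about 19GB, it can be worth checking free disk space before starting the download. The following is a small sketch using only the standard library; the `has_room_for_model` helper and the 1GB headroom are assumptions for illustration, not something `download_model.py` is known to do.

```python
import shutil

MODEL_SIZE_GB = 19  # approximate size of the quantized weights


def has_room_for_model(path: str = ".", needed_gb: float = MODEL_SIZE_GB,
                       headroom_gb: float = 1.0) -> bool:
    """True if the filesystem holding `path` has room for the model plus headroom."""
    free_gb = shutil.disk_usage(path).free / 1e9
    return free_gb >= needed_gb + headroom_gb


if has_room_for_model():
    print("Enough free space for the model weights.")
else:
    print("Not enough free space; free up disk before downloading.")
```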

Ready to rock, run inference.

```sh
python inference.py
```

Next, modify the prompt and generation parameters in the inference script.
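
One way to keep generation parameters easy to change is to group them in a single config object. The sketch below is hypothetical and does not reproduce `inference.py`. The field names mirror keyword arguments that, to my understanding, ctransformers models accept when called (`max_new_tokens`, `temperature`, `top_k`, `top_p`, `repetition_penalty`, `stop`); verify them against the ctransformers documentation. The default values are illustrative, and `fake_llm` is a stand-in so the example runs without the model.

```python
from dataclasses import asdict, dataclass
from typing import Callable, List, Optional


@dataclass
class GenerationConfig:
    # Illustrative defaults, not the repository's settings.
    max_new_tokens: int = 256
    temperature: float = 0.2
    top_k: int = 40
    top_p: float = 0.9
    repetition_penalty: float = 1.1
    stop: Optional[List[str]] = None


def generate(llm: Callable[..., str], prompt: str, config: GenerationConfig) -> str:
    """Call the model with the prompt and every config field as a keyword argument."""
    return llm(prompt, **asdict(config))


def fake_llm(prompt: str, **kwargs) -> str:
    """Stand-in for a loaded model; echoes the prompt and one parameter."""
    return f"[{kwargs['max_new_tokens']} tokens max] echo: {prompt}"


config = GenerationConfig(max_new_tokens=8, stop=["<|im_end|>"])
result = generate(fake_llm, "hi", config)
print(result)
```

With a real model, pass the loaded ctransformers model object in place of `fake_llm`. Lower `temperature` values make output more deterministic, and `stop` sequences end generation at a turn boundary.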