https://github.com/acai66/yolov5_rotation

rotated bbox detection. inspired by https://github.com/hukaixuan19970627/YOLOv5_DOTA_OBB, thanks hukaixuan19970627.

object-detection rotation rotation-detector yolov5

Last synced: 4 months ago

- Host: GitHub

- URL: https://github.com/acai66/yolov5_rotation

- Owner: acai66

- License: gpl-3.0

- Created: 2021-08-10T10:14:29.000Z (over 4 years ago)

- Default Branch: master

- Last Pushed: 2023-03-13T04:59:12.000Z (almost 3 years ago)

- Last Synced: 2025-08-13T07:02:04.359Z (4 months ago)

- Topics: object-detection, rotation, rotation-detector, yolov5

- Language: HTML

- Homepage:

- Size: 9.61 MB

- Stars: 90

- Watchers: 3

- Forks: 16

- Open Issues: 19

Metadata Files:

- Readme: README.md

- Contributing: CONTRIBUTING.md

- Funding: .github/FUNDING.yml

- License: LICENSE

Awesome Lists containing this project

- awesome-yolo-object-detection - acai66/yolov5_rotation

README

YOLOv5 🚀 is a family of object detection architectures and models pretrained on the COCO dataset, and represents Ultralytics open-source research into future vision AI methods, incorporating lessons learned and best practices evolved over thousands of hours of research and development.

## Documentation

See the [YOLOv5 Docs](https://docs.ultralytics.com) for full documentation on training, testing and deployment.

## Quick Start Examples

Install

[**Python>=3.6.0**](https://www.python.org/) is required with all [requirements.txt](https://github.com/ultralytics/yolov5/blob/master/requirements.txt) installed including [**PyTorch>=1.7**](https://pytorch.org/get-started/locally/):

```bash
$ git clone https://github.com/ultralytics/yolov5
$ cd yolov5
$ pip install -r requirements.txt
```

Inference

Inference with YOLOv5 and [PyTorch Hub](https://github.com/ultralytics/yolov5/issues/36). Models automatically download from the [latest YOLOv5 release](https://github.com/ultralytics/yolov5/releases).

```python
import torch

# Model
model = torch.hub.load('ultralytics/yolov5', 'yolov5s')  # or yolov5m, yolov5l, yolov5x, custom

# Images
img = 'https://ultralytics.com/images/zidane.jpg'  # or file, Path, PIL, OpenCV, numpy, list

# Inference
results = model(img)

# Results
results.print()  # or .show(), .save(), .crop(), .pandas(), etc.
```
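The `.pandas()` accessor listed above exposes detections as tabular data. In upstream YOLOv5, `results.pandas().xyxy[0]` carries the columns `xmin`, `ymin`, `xmax`, `ymax`, `confidence`, `class`, and `name`. The sketch below assumes that layout (this rotated-box fork may differ) and uses hand-written rows rather than real model output, so it runs without downloading a model. The helpers `filter_detections` and `box_area` are hypothetical names introduced only for illustration.

```python
# Hypothetical rows in the upstream `results.pandas().xyxy[0]` column layout.
# The numbers are made up for illustration; they are not real model output.
detections = [
    {"xmin": 10.0, "ymin": 20.0, "xmax": 110.0, "ymax": 220.0,
     "confidence": 0.90, "class": 0, "name": "person"},
    {"xmin": 50.0, "ymin": 60.0, "xmax": 90.0, "ymax": 100.0,
     "confidence": 0.30, "class": 27, "name": "tie"},
    {"xmin": 200.0, "ymin": 40.0, "xmax": 300.0, "ymax": 240.0,
     "confidence": 0.75, "class": 0, "name": "person"},
]


def filter_detections(rows, min_conf=0.5, names=None):
    """Keep rows at or above min_conf, optionally restricted to class names."""
    kept = [r for r in rows if r["confidence"] >= min_conf]
    if names is not None:
        kept = [r for r in kept if r["name"] in names]
    return kept


def box_area(row):
    """Area in pixels of an axis-aligned box stored as xmin/ymin/xmax/ymax."""
    width = max(0.0, row["xmax"] - row["xmin"])
    height = max(0.0, row["ymax"] - row["ymin"])
    return width * height


people = filter_detections(detections, min_conf=0.5, names={"person"})
for row in people:
    print(row["name"], row["confidence"], box_area(row))
```

With a real model, the same filtering could be applied to `results.pandas().xyxy[0].to_dict("records")`, again assuming the upstream column names.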

Inference with detect.py

`detect.py` runs inference on a variety of sources, downloading models automatically from the [latest YOLOv5 release](https://github.com/ultralytics/yolov5/releases) and saving results to `runs/detect`.

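```bash
# Pass one of the following to --source:
$ python detect.py --source 0  # webcam
$ python detect.py --source img.jpg  # image
$ python detect.py --source vid.mp4  # video
$ python detect.py --source path/  # directory
$ python detect.py --source path/*.jpg  # glob
$ python detect.py --source 'https://youtu.be/Zgi9g1ksQHc'  # YouTube
$ python detect.py --source 'rtsp://example.com/media.mp4'  # RTSP, RTMP, HTTP stream
```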
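To make the source types above concrete, here is a minimal sketch of how a `--source` string could be sorted into those categories. It is not the logic used by `detect.py`; the function name `classify_source` and the extension lists are assumptions chosen for illustration.

```python
from pathlib import Path
from urllib.parse import urlparse

# Assumed extension and scheme lists; the real script may accept more.
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}
VIDEO_EXTS = {".mp4", ".avi", ".mov", ".mkv"}
STREAM_SCHEMES = {"rtsp", "rtmp", "http", "https"}


def classify_source(source: str) -> str:
    """Return a coarse label for a --source value (hypothetical helper)."""
    if source.isdigit():
        return "webcam"  # numeric device index such as "0"
    if urlparse(source).scheme.lower() in STREAM_SCHEMES:
        return "stream"  # YouTube links and RTSP/RTMP/HTTP streams
    if "*" in source:
        return "glob"  # wildcard pattern expanded later
    suffix = Path(source).suffix.lower()
    if suffix in IMAGE_EXTS:
        return "image"
    if suffix in VIDEO_EXTS:
        return "video"
    return "directory"  # fallback: treat as a folder of media files


for src in ["0", "img.jpg", "vid.mp4", "path/", "path/*.jpg",
            "https://youtu.be/Zgi9g1ksQHc", "rtsp://example.com/media.mp4"]:
    print(f"{src!r:35} -> {classify_source(src)}")
```

The URL check comes before the extension check so that a stream such as `rtsp://example.com/media.mp4` is not mistaken for a local video file.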

Training

Run the commands below to reproduce results on the [COCO](https://github.com/ultralytics/yolov5/blob/master/data/scripts/get_coco.sh) dataset (the dataset auto-downloads on first use). Training times for YOLOv5s/m/l/x are 2/4/6/8 days on a single V100 (multi-GPU training is faster). Use the largest `--batch-size` your GPU allows (batch sizes shown are for 16 GB devices).

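```bash
# One command per model; batch sizes are for 16 GB devices
$ python train.py --data coco.yaml --cfg yolov5s.yaml --weights '' --batch-size 64
$ python train.py --data coco.yaml --cfg yolov5m.yaml --weights '' --batch-size 40
$ python train.py --data coco.yaml --cfg yolov5l.yaml --weights '' --batch-size 24
$ python train.py --data coco.yaml --cfg yolov5x.yaml --weights '' --batch-size 16
```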
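When scripting several training runs, the model-to-batch-size pairing above can be kept in one place. The sketch below only builds the command strings; the helper name `train_command` and the `BATCH_SIZES` mapping are illustrative, with values copied from the 16 GB figures above.

```python
import shlex

# Batch sizes quoted above for 16 GB devices.
BATCH_SIZES = {"yolov5s": 64, "yolov5m": 40, "yolov5l": 24, "yolov5x": 16}


def train_command(model: str, data: str = "coco.yaml") -> str:
    """Build the train.py command line for one model (hypothetical helper)."""
    args = [
        "python", "train.py",
        "--data", data,
        "--cfg", f"{model}.yaml",
        "--weights", "",  # empty string: train from scratch
        "--batch-size", str(BATCH_SIZES[model]),
    ]
    # shlex.quote renders the empty --weights value as '' for the shell.
    return " ".join(shlex.quote(a) for a in args)


for name in BATCH_SIZES:
    print(train_command(name))
```

On a GPU with less memory, lower the values in `BATCH_SIZES` rather than editing each command by hand.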

Tutorials

* [Train Custom Data](https://github.com/ultralytics/yolov5/wiki/Train-Custom-Data) 🚀 RECOMMENDED

* [Tips for Best Training Results](https://github.com/ultralytics/yolov5/wiki/Tips-for-Best-Training-Results) ☘️ RECOMMENDED

* [Weights & Biases Logging](https://github.com/ultralytics/yolov5/issues/1289) 🌟 NEW

* [Roboflow for Datasets, Labeling, and Active Learning](https://github.com/ultralytics/yolov5/issues/4975) 🌟 NEW

* [Multi-GPU Training](https://github.com/ultralytics/yolov5/issues/475)

* [PyTorch Hub](https://github.com/ultralytics/yolov5/issues/36) ⭐ NEW

* [TorchScript, ONNX, CoreML Export](https://github.com/ultralytics/yolov5/issues/251) 🚀

* [Test-Time Augmentation (TTA)](https://github.com/ultralytics/yolov5/issues/303)

* [Model Ensembling](https://github.com/ultralytics/yolov5/issues/318)

* [Model Pruning/Sparsity](https://github.com/ultralytics/yolov5/issues/304)

* [Hyperparameter Evolution](https://github.com/ultralytics/yolov5/issues/607)

* [Transfer Learning with Frozen Layers](https://github.com/ultralytics/yolov5/issues/1314) ⭐ NEW

* [TensorRT Deployment](https://github.com/wang-xinyu/tensorrtx)

## Environments

Get started in seconds with our verified environments.

## Integrations

|Weights and Biases|Roboflow ⭐ NEW|
|:-:|:-:|
|Automatically track and visualize all your YOLOv5 training runs in the cloud with [Weights & Biases](https://wandb.ai/site?utm_campaign=repo_yolo_readme)|Label and export your custom datasets directly to YOLOv5 for training with [Roboflow](https://roboflow.com/?ref=ultralytics) |

## Why YOLOv5

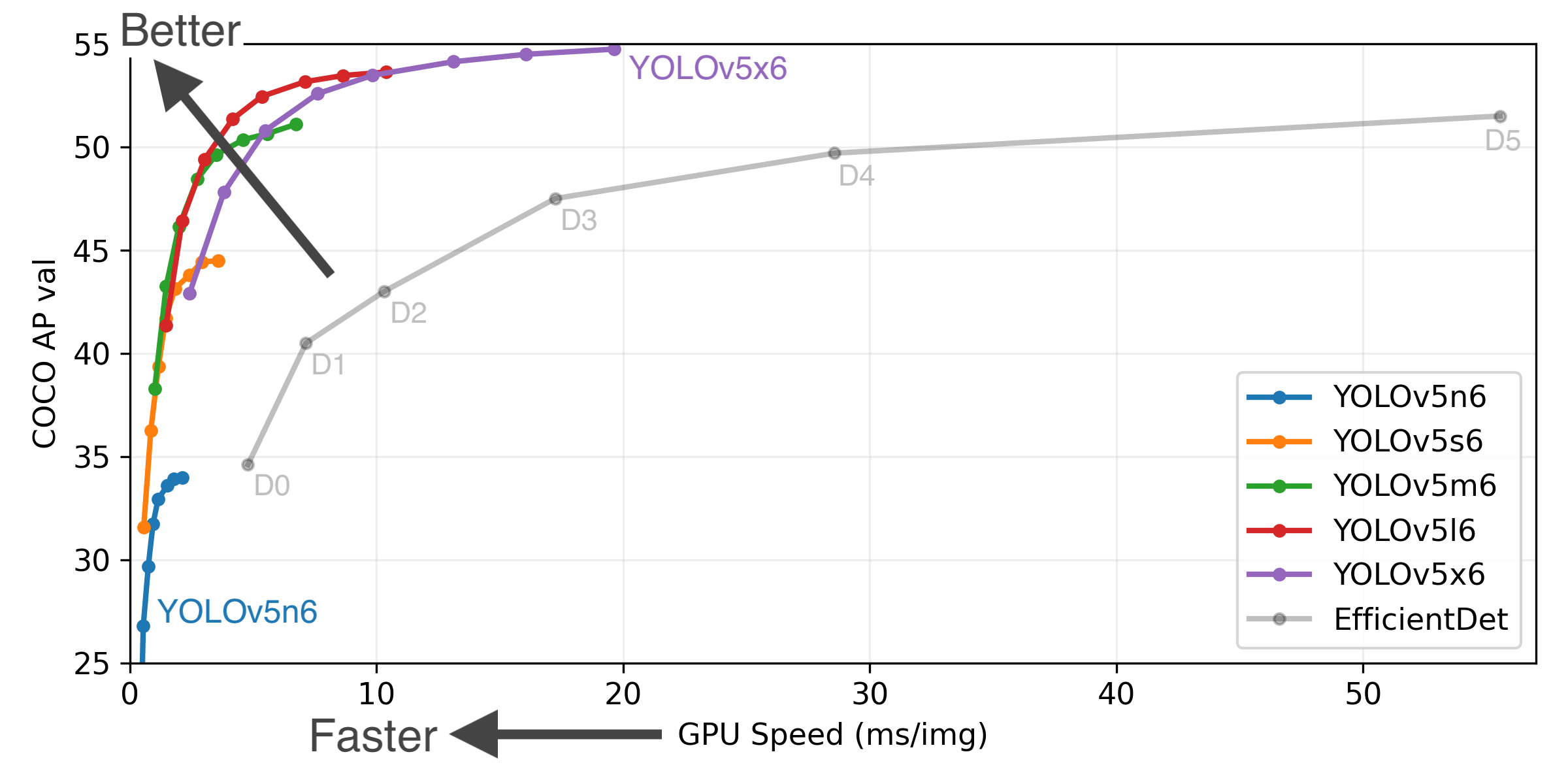

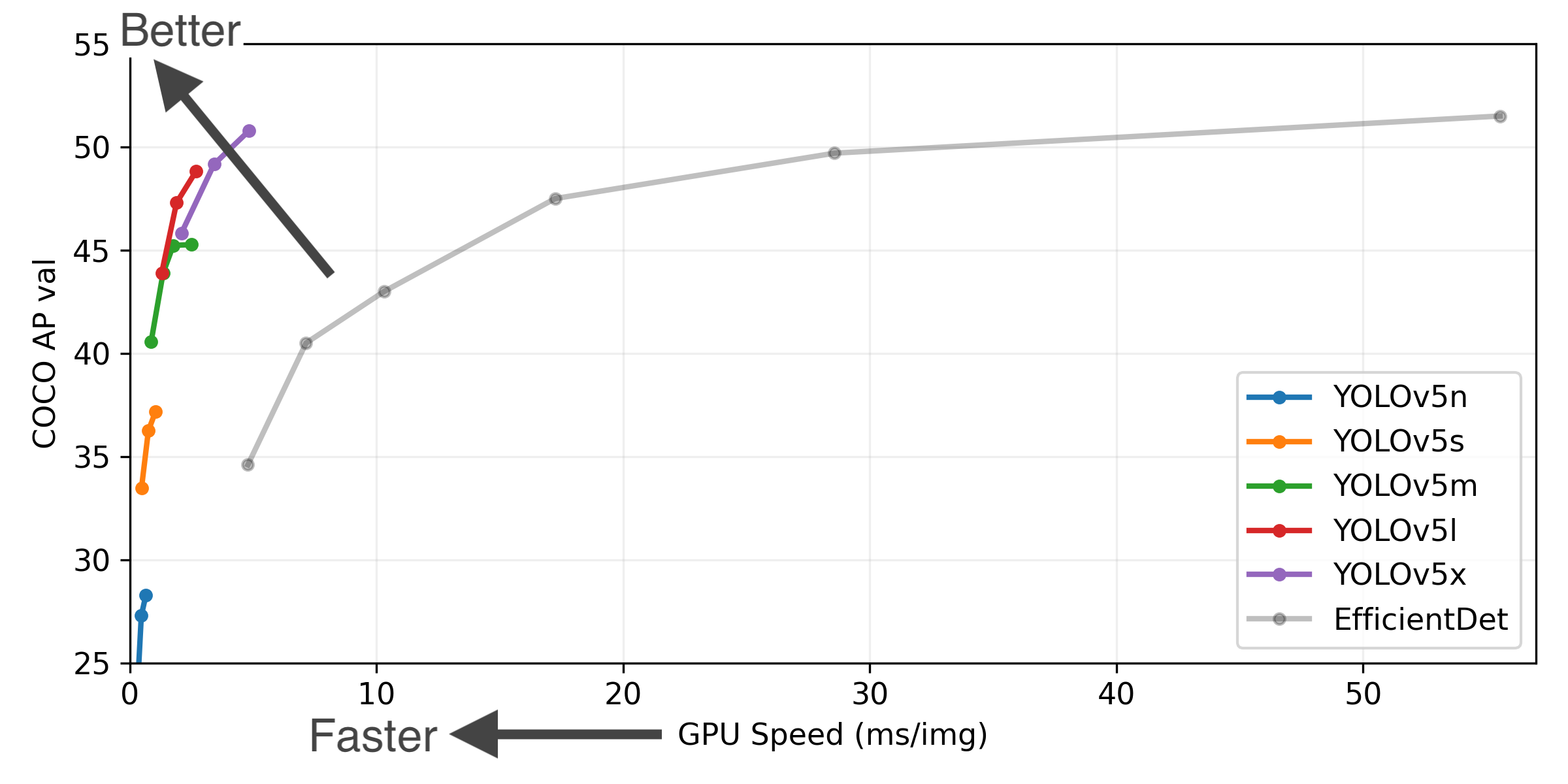

YOLOv5-P5 640 Figure

Figure Notes

* **COCO AP val** denotes mAP@0.5:0.95 metric measured on the 5000-image [COCO val2017](http://cocodataset.org) dataset over various inference sizes from 256 to 1536.

* **GPU Speed** measures average inference time per image on the [COCO val2017](http://cocodataset.org) dataset using an [AWS p3.2xlarge](https://aws.amazon.com/ec2/instance-types/p3/) V100 instance at batch-size 32.

* **EfficientDet** data from [google/automl](https://github.com/google/automl) at batch size 8.

* **Reproduce** by `python val.py --task study --data coco.yaml --iou 0.7 --weights yolov5n6.pt yolov5s6.pt yolov5m6.pt yolov5l6.pt yolov5x6.pt`
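The mAP@0.5:0.95 metric in the notes above follows the COCO convention of averaging AP over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05. The sketch below shows only that averaging step; the per-threshold AP values are invented for illustration, and the function names are hypothetical rather than taken from `val.py`.

```python
def iou_thresholds(start=0.5, stop=0.95, step=0.05):
    """IoU thresholds 0.50, 0.55, ..., 0.95 used by the COCO-style metric."""
    count = int(round((stop - start) / step)) + 1
    return [round(start + i * step, 2) for i in range(count)]


def mean_ap(ap_per_threshold):
    """Average AP across IoU thresholds (the '0.5:0.95' part of the metric)."""
    return sum(ap_per_threshold.values()) / len(ap_per_threshold)


thresholds = iou_thresholds()

# Made-up AP values that fall as the IoU requirement tightens.
example_ap = {t: 1.0 - t for t in thresholds}

print(thresholds)
print(f"mAP@0.5      = {example_ap[0.5]:.3f}")
print(f"mAP@0.5:0.95 = {mean_ap(example_ap):.3f}")
```

Because stricter thresholds usually lower AP, mAP@0.5:0.95 is typically well below mAP@0.5, which matches the two mAP columns in the checkpoint table.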

### Pretrained Checkpoints

[assets]: https://github.com/ultralytics/yolov5/releases

[TTA]: https://github.com/ultralytics/yolov5/issues/303

|Model |size (pixels) |mAPval 0.5:0.95 |mAPval 0.5 |Speed CPU b1 (ms) |Speed V100 b1 (ms) |Speed V100 b32 (ms) |params (M) |FLOPs @640 (B)
|--- |--- |--- |--- |--- |--- |--- |--- |---
|[YOLOv5n][assets] |640 |28.4 |46.0 |**45** |**6.3**|**0.6**|**1.9**|**4.5**
|[YOLOv5s][assets] |640 |37.2 |56.0 |98 |6.4 |0.9 |7.2 |16.5
|[YOLOv5m][assets] |640 |45.2 |63.9 |224 |8.2 |1.7 |21.2 |49.0
|[YOLOv5l][assets] |640 |48.8 |67.2 |430 |10.1 |2.7 |46.5 |109.1
|[YOLOv5x][assets] |640 |50.7 |68.9 |766 |12.1 |4.8 |86.7 |205.7
| | | | | | | | |
|[YOLOv5n6][assets] |1280 |34.0 |50.7 |153 |8.1 |2.1 |3.2 |4.6
|[YOLOv5s6][assets] |1280 |44.5 |63.0 |385 |8.2 |3.6 |16.8 |12.6
|[YOLOv5m6][assets] |1280 |51.0 |69.0 |887 |11.1 |6.8 |35.7 |50.0
|[YOLOv5l6][assets] |1280 |53.6 |71.6 |1784 |15.8 |10.5 |76.8 |111.4
|[YOLOv5x6][assets] |1280 |54.7 |**72.4** |3136 |26.2 |19.4 |140.7 |209.8
|[YOLOv5x6][assets] + [TTA][TTA] |1536 |**55.4** |72.3 |- |- |- |- |-

Table Notes

* All checkpoints are trained to 300 epochs with default settings and hyperparameters.

* **mAPval** values are for single-model single-scale on the [COCO val2017](http://cocodataset.org) dataset. Reproduce by `python val.py --data coco.yaml --img 640 --conf 0.001 --iou 0.65`

* **Speed** averaged over COCO val images using an [AWS p3.2xlarge](https://aws.amazon.com/ec2/instance-types/p3/) instance. NMS times (~1 ms/img) not included. Reproduce by `python val.py --data coco.yaml --img 640 --conf 0.25 --iou 0.45`

* **TTA** [Test Time Augmentation](https://github.com/ultralytics/yolov5/issues/303) includes reflection and scale augmentations. Reproduce by `python val.py --data coco.yaml --img 1536 --iou 0.7 --augment`
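The `--conf` and `--iou` flags in the commands above set the confidence cutoff and the IoU threshold used during non-maximum suppression (NMS). The following is a minimal pure-Python sketch of greedy NMS on axis-aligned boxes, written to show how the two thresholds interact. It is not the implementation in `val.py`, the sample boxes are invented, and rotated boxes would need a polygon-based IoU that is not shown here.

```python
def iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(dets, conf_thres=0.25, iou_thres=0.45):
    """Greedy NMS over (x1, y1, x2, y2, score) tuples, best score first."""
    candidates = sorted(
        (d for d in dets if d[4] >= conf_thres),  # confidence cutoff
        key=lambda d: d[4],
        reverse=True,
    )
    kept = []
    for det in candidates:
        # Keep a box only if it does not overlap a kept box too strongly.
        if all(iou(det[:4], k[:4]) <= iou_thres for k in kept):
            kept.append(det)
    return kept


# Invented detections: two overlapping boxes, one separate, one low-score.
dets = [
    (0, 0, 100, 100, 0.90),
    (10, 10, 110, 110, 0.80),
    (200, 200, 300, 300, 0.70),
    (0, 0, 50, 50, 0.10),
]

speed_style = nms(dets, conf_thres=0.25, iou_thres=0.45)
map_style = nms(dets, conf_thres=0.001, iou_thres=0.65)
print(len(speed_style), len(map_style))
```

A very low `--conf` such as 0.001 keeps many more candidate boxes, which suits mAP evaluation because the metric integrates over all confidence levels, while 0.25 is closer to what one would use for everyday inference.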

## Contribute

We love your input! We want to make contributing to YOLOv5 as easy and transparent as possible. Please see our [Contributing Guide](CONTRIBUTING.md) to get started, and fill out the [YOLOv5 Survey](https://ultralytics.com/survey?utm_source=github&utm_medium=social&utm_campaign=Survey) to send us feedback on your experiences. Thank you to all our contributors!

## Contact

For YOLOv5 bugs and feature requests please visit [GitHub Issues](https://github.com/ultralytics/yolov5/issues). For business inquiries or professional support requests please visit [https://ultralytics.com/contact](https://ultralytics.com/contact).