https://github.com/afa-farkhod/elasticsearch-elk-installation

Elastic Stack Installation: Elasticsearch, Kibana and Logstash.

- Host: GitHub

- URL: https://github.com/afa-farkhod/elasticsearch-elk-installation

- Owner: afa-farkhod

- License: gpl-3.0

- Created: 2023-07-03T01:29:51.000Z (almost 2 years ago)

- Default Branch: main

- Last Pushed: 2023-11-22T14:57:13.000Z (over 1 year ago)

- Last Synced: 2025-02-02T01:13:36.863Z (4 months ago)

- Topics: elasticsearch, kibana, logstash

- Homepage: https://www.elastic.co/guide/en/elastic-stack/current/installing-elastic-stack.html

- Size: 5.56 MB

- Stars: 2

- Watchers: 1

- Forks: 0

- Open Issues: 0

Metadata Files:

- Readme: README.md

- License: LICENSE

README

# [Elastic-Stack-Installation](https://www.elastic.co/guide/en/elastic-stack/current/installing-elastic-stack.html)

Elastic Stack Installation: Elasticsearch, Kibana and Logstash.

### [Elastic stack installation guide:](https://www.elastic.co/guide/en/elastic-stack/current/installing-elastic-stack.html)

- When installing the Elastic Stack, you must use the same version across the entire stack. For example, if you are using `Elasticsearch 8.8.2`, you must also install `Beats 8.8.2`, `APM Server 8.8.2`, `Elasticsearch Hadoop 8.8.2`, `Kibana 8.8.2`, and `Logstash 8.8.2`.

- [Installation Order](https://www.elastic.co/guide/en/elastic-stack/current/installing-elastic-stack.html#install-order-elastic-stack)

- Install the Elastic Stack products you want to use in the following order:

- Elasticsearch

- Kibana

- Logstash

- Beats

- APM

- Elasticsearch Hadoop
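The version rule and the installation order above can be expressed as a small check. This is only an illustrative sketch in Python: the helper names `mismatched_versions` and `in_install_order` are made up for this example and are not part of any Elastic tool.

```python
# Installation order as listed above ("APM" is written as "APM Server" here).
INSTALL_ORDER = [
    "Elasticsearch",
    "Kibana",
    "Logstash",
    "Beats",
    "APM Server",
    "Elasticsearch Hadoop",
]


def mismatched_versions(versions: dict[str, str]) -> dict[str, str]:
    """Return the components whose version differs from Elasticsearch's."""
    reference = versions["Elasticsearch"]
    return {name: v for name, v in versions.items() if v != reference}


def in_install_order(components: list[str]) -> list[str]:
    """Sort component names by the order above (unknown names raise ValueError)."""
    return sorted(components, key=INSTALL_ORDER.index)


planned = {"Elasticsearch": "8.8.2", "Kibana": "8.8.2", "Logstash": "8.7.1"}
print(mismatched_versions(planned))     # components that break the version rule
print(in_install_order(list(planned)))  # order in which to install them
```

An empty dictionary from `mismatched_versions` means every planned component matches the Elasticsearch version.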

- [Beginner's Crash Course to Elastic Stack](https://youtu.be/gS_nHTWZEJ8) - From the official Elastic Community. The Beginner's Crash Course is a series of workshops for developers with little to no experience with Elasticsearch and Kibana, or for those who could use a refresher. By the end of this workshop, you will be able to:

- understand how the products of Elastic Stack work together to search, analyze, and visualize data in real time

- understand the basic architecture of Elasticsearch

- run CRUD operations with Elasticsearch and Kibana

- [Download Elasticsearch](https://www.elastic.co/downloads/elasticsearch)

- You can choose your OS type: Windows, macOS, or Linux.

- You can also install it through a package manager: yum, dnf, zypper, or apt-get.

- You can also run it in a `Docker` container.

- After downloading `Elasticsearch`, run the following command from the installation directory to start the Elasticsearch server:

```
bin\elasticsearch.bat
```

- Optionally, before starting the server, disable security in `c:\elastic-stack\elasticsearch-8.7.1\config\elasticsearch.yml` by setting the security option (typically `xpack.security.enabled`) to `false`. This is not required, but it can help if you have trouble retrieving the password for the `elastic` user.

- Then go to the `c:\elastic-stack\elasticsearch-8.7.1\bin` directory and run `elasticsearch.bat`. Open `localhost:9200` in the browser; you should see a JSON response describing the running node and its cluster.
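The same check can be done from a script instead of the browser. The following is a minimal sketch in Python, assuming the server listens on `http://localhost:9200` with security disabled as described above. The function names are hypothetical, and the summary reads only the `name`, `cluster_name`, and `version.number` fields of the root response.

```python
import json
import urllib.request


def fetch_root_info(base_url: str = "http://localhost:9200") -> dict:
    """GET the root endpoint of Elasticsearch and decode its JSON body."""
    with urllib.request.urlopen(base_url, timeout=5) as response:
        return json.loads(response.read().decode("utf-8"))


def summarize_root_info(info: dict) -> str:
    """Build a one-line summary from the root response."""
    version = info.get("version", {}).get("number", "unknown")
    return f"node={info.get('name')} cluster={info.get('cluster_name')} version={version}"


# Example (needs a running server, so it is left commented out):
# print(summarize_root_info(fetch_root_info()))
```

If security is left enabled, the plain `http` request is expected to fail, because the server then requires HTTPS and credentials.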

- [Download Kibana](https://www.elastic.co/kr/downloads/kibana)

- You can choose your OS type: Windows, macOS, or Linux.

- You can also install it through a package manager: yum, dnf, zypper, or apt-get.

- You can also run it in a `Docker` container.

- Go to the `c:\elastic-stack\kibana-8.7.1\bin` directory and run `kibana.bat`. No changes to the configuration files are needed. Open `localhost:5601` in the browser to confirm that the Kibana interface is running.

- [Download Logstash](https://www.elastic.co/kr/downloads/logstash)

- You can choose your OS type: Windows, macOS, or Linux.

- You can also install it through a package manager: yum or apt-get.

- You can also run it in a `Docker` container.

- Logstash is used as the pipeline that ingests data into Elasticsearch. As an example, we take a sample dataset, `flowers.csv`, from the dataset website `kaggle.com`.

- Next, we create a `logstash.conf` file that points to the dataset location so the data can be ingested into Elasticsearch. First, note which columns the `flowers.csv` file contains, because they must be listed in the configuration.

- The following is the `logstash.conf` file with the dataset path and column names:

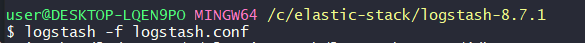
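Because the configuration above is shown only as an image, here is a rough sketch of what such a file might contain, rendered from Python so the path and columns are easy to swap. The dataset path, the column names, and the index name `flowers` are assumptions for illustration and are not taken from the original file. `sincedb_path => "NUL"` assumes Windows (use `/dev/null` on Linux).

```python
def render_logstash_conf(csv_path: str, columns: list[str], index: str,
                         hosts: str = "http://localhost:9200") -> str:
    """Render a minimal CSV-to-Elasticsearch Logstash pipeline as text."""
    quoted_columns = ", ".join(f'"{name}"' for name in columns)
    lines = [
        "input {",
        "  file {",
        f'    path => "{csv_path}"',            # absolute path, forward slashes
        '    start_position => "beginning"',    # read the file from the top
        '    sincedb_path => "NUL"',            # do not remember the read position
        "  }",
        "}",
        "filter {",
        "  csv {",
        '    separator => ","',
        f"    columns => [{quoted_columns}]",
        "    skip_header => true",              # drop the row equal to the column names
        "  }",
        "}",
        "output {",
        "  elasticsearch {",
        f'    hosts => ["{hosts}"]',
        f'    index => "{index}"',
        "  }",
        "  stdout {}",                          # also print events to the console
        "}",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical path and column names; replace with those of your dataset.
conf_text = render_logstash_conf(
    "C:/elastic-stack/data/flowers.csv",
    ["sepal_length", "sepal_width", "petal_length", "petal_width", "species"],
    "flowers",
)
print(conf_text)
# To save it: pathlib.Path("logstash.conf").write_text(conf_text)
```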

- To ingest the data, change to the folder that contains the `logstash.conf` file, then run the following command: `logstash -f logstash.conf`

- Then, in the Kibana interface, go to Dev Tools to check the ingested data.
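Besides Dev Tools, the ingestion can be verified over HTTP with the `_count` API, which reports how many documents an index holds. This is a small sketch in Python, assuming an index named `flowers` (a placeholder, use whatever index your `logstash.conf` writes to) and an unsecured server on `http://localhost:9200`. The helper names are hypothetical.

```python
import json
import urllib.request


def count_url(index: str, base_url: str = "http://localhost:9200") -> str:
    """Build the URL of the _count endpoint for one index."""
    return f"{base_url.rstrip('/')}/{index}/_count"


def fetch_count(index: str, base_url: str = "http://localhost:9200") -> int:
    """Ask Elasticsearch how many documents the index contains."""
    with urllib.request.urlopen(count_url(index, base_url), timeout=5) as response:
        return json.loads(response.read().decode("utf-8"))["count"]


# Example (needs a running server and an existing index, so it is commented out):
# print(fetch_count("flowers"))
```

The number returned should match the number of data rows in the CSV file if every row was ingested.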

-----------------

### [Elasticsearch on Linux](https://www.digitalocean.com/community/tutorials/how-to-install-and-configure-elasticsearch-on-ubuntu-22-04)

- We can also run Elasticsearch, Kibana, and Logstash together from a single `docker-compose.yml` file on a `Linux` operating system.

- The `Elasticsearch` container is created from the `elasticsearch:7.16.2` image pulled from Docker Hub.

- The `Kibana` container is created from the `kibana:7.16.2` image pulled from Docker Hub.

- The `Logstash` container is created from the `logstash:7.16.2` image pulled from Docker Hub.

- We then bring them all together in the `docker-compose.yml` file, which runs Elasticsearch inside a Docker container. To start the containers, run the command `docker-compose up`.

- We can confirm that the servers are running inside their Docker containers by listing the running containers (for example, with `docker ps`).

- We can check Elasticsearch on port `9200`; note that the response reports `cluster_name: "docker-cluster"`.
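After the containers are started, Elasticsearch usually needs some time before port `9200` answers. A minimal polling sketch in Python is shown below. The function names, the timeout, and the interval are arbitrary choices for illustration, and it assumes the port is published on `localhost` without security.

```python
import time
import urllib.request
from typing import Callable


def http_ok(url: str) -> bool:
    """Return True if the URL answers with HTTP 200, False on any network error."""
    try:
        with urllib.request.urlopen(url, timeout=5) as response:
            return response.status == 200
    except OSError:  # covers refused connections, resets, and HTTP errors
        return False


def wait_until_ready(probe: Callable[[], bool], timeout_s: float = 120.0,
                     interval_s: float = 2.0,
                     sleep: Callable[[float], None] = time.sleep) -> bool:
    """Call probe() repeatedly until it returns True or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while True:
        if probe():
            return True
        if time.monotonic() >= deadline:
            return False
        sleep(interval_s)


# Example (needs the containers to be running, so it is left commented out):
# print(wait_until_ready(lambda: http_ok("http://localhost:9200")))
```

Passing the probe as a function keeps the waiting loop independent of the service, so the same loop could poll Kibana on port `5601`.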

-------------------------

- Don't forget to set the environment variables at the beginning!

## Additional Information

- [Search Template](https://www.elastic.co/guide/en/elasticsearch/reference/current/search-template.html) - If you use Elasticsearch for a custom application, search templates let you change your searches without modifying your app’s code.

- [Query DSL](https://www.elastic.co/guide/en/elasticsearch/reference/current/query-dsl.html) - Elasticsearch provides a full Query DSL (Domain Specific Language) based on JSON to define queries.

- [Match Query](https://www.elastic.co/guide/en/elasticsearch/reference/current/query-dsl-match-query.html) - Returns documents that match a provided text, number, date or boolean value. The provided text is analyzed before matching.

- [CURL](https://www.elastic.co/guide/en/cloud/current/ec-working-with-elasticsearch.html) - Manage data from the command line. The `curl` command-line tool is commonly used to interact with Elasticsearch using `HTTP` requests.
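To tie the Query DSL, match query, and HTTP access together, here is a short sketch in Python that builds a `match` query and the corresponding `_search` request, much like a `curl -X POST` call would. The index name `flowers` and the field `species` are placeholders, and the helper names are hypothetical.

```python
import json
import urllib.request


def build_match_query(field: str, text: str, size: int = 10) -> dict:
    """Build a Query DSL body with a single match query."""
    return {"size": size, "query": {"match": {field: text}}}


def build_search_request(index: str, body: dict,
                         base_url: str = "http://localhost:9200") -> urllib.request.Request:
    """Prepare a POST request to the _search endpoint of one index."""
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/{index}/_search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


body = build_match_query("species", "setosa", size=3)
request = build_search_request("flowers", body)
print(request.get_method(), request.full_url)
print(json.dumps(body, indent=2))

# To send it (needs a running server, so it is left commented out):
# with urllib.request.urlopen(request, timeout=5) as response:
#     print(json.loads(response.read())["hits"]["total"])
```

The printed JSON body can also be pasted into Kibana Dev Tools after a `GET flowers/_search` line.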