https://github.com/airscholar/redditdataengineering

This project provides a comprehensive data pipeline solution to extract, transform, and load (ETL) Reddit data into a Redshift data warehouse. The pipeline leverages a combination of tools and services including Apache Airflow, Celery, PostgreSQL, Amazon S3, AWS Glue, Amazon Athena, and Amazon Redshift.

- Host: GitHub

- URL: https://github.com/airscholar/redditdataengineering

- Owner: airscholar

- Created: 2023-10-23T12:05:13.000Z (over 2 years ago)

- Default Branch: main

- Last Pushed: 2023-10-23T12:13:02.000Z (over 2 years ago)

- Last Synced: 2025-04-10T00:36:47.974Z (10 months ago)

- Topics: apache-airflow, aws, celery, data-pipeline, end-to-end-data-engineering, reddit

- Language: Python

- Homepage: https://www.youtube.com/watch?v=LSlt6iVI_9Y

- Size: 118 KB

- Stars: 128

- Watchers: 4

- Forks: 65

- Open Issues: 0

Metadata Files:

- Readme: README.md

README

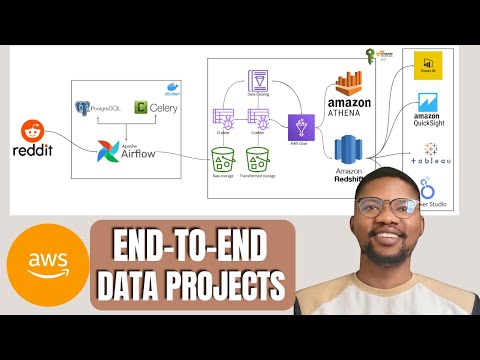

# Data Pipeline with Reddit, Airflow, Celery, Postgres, S3, AWS Glue, Athena, and Redshift

This project provides a comprehensive data pipeline solution to extract, transform, and load (ETL) Reddit data into a Redshift data warehouse. The pipeline leverages a combination of tools and services including Apache Airflow, Celery, PostgreSQL, Amazon S3, AWS Glue, Amazon Athena, and Amazon Redshift.

## Table of Contents

- [Overview](#overview)

- [Architecture](#architecture)

- [Prerequisites](#prerequisites)

- [System Setup](#system-setup)

- [Video](#video)

## Overview

The pipeline is designed to:

1. Extract data from Reddit using its API.

2. Store the raw data in an S3 bucket from Airflow.

3. Transform the data using AWS Glue and Amazon Athena.

4. Load the transformed data into Amazon Redshift for analytics and querying.
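
The first two steps above can be sketched in plain Python. This is a minimal illustration rather than the repository's code: the function names, the field list, the light cleanup before upload, and the `raw/` key layout are all hypothetical, and the S3 upload plus the Glue, Athena, and Redshift stages are left as comments because they need AWS credentials.

```python
import csv
import io
from datetime import datetime, timezone

# Hypothetical subset of post fields to keep; the repository's actual
# field list may differ.
POST_FIELDS = ("id", "title", "score", "num_comments", "created_utc")


def normalize_posts(raw_posts):
    """Keep only POST_FIELDS and convert the epoch timestamp to ISO 8601."""
    rows = []
    for post in raw_posts:
        row = {field: post.get(field) for field in POST_FIELDS}
        row["created_utc"] = datetime.fromtimestamp(
            post["created_utc"], tz=timezone.utc
        ).isoformat()
        rows.append(row)
    return rows


def to_csv(rows):
    """Serialize rows to CSV text, ready to upload as a raw file."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=POST_FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def raw_key(subreddit, run_date):
    """Build a hypothetical S3 object key for one extraction run."""
    return f"raw/{subreddit}_{run_date:%Y%m%d}.csv"


# Stand-in for step 1; a real run would fetch these from the Reddit API.
sample = [{"id": "abc", "title": "Hello", "score": 10,
           "num_comments": 2, "created_utc": 0, "extra": "dropped"}]
csv_text = to_csv(normalize_posts(sample))
print(csv_text)
# Steps 2-4 (upload to S3, Glue/Athena transforms, Redshift load) would follow.
```

Keeping serialization and key naming as pure functions makes them easy to test without touching AWS.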

## Architecture

1. **Reddit API**: Source of the data.

2. **Apache Airflow & Celery**: Orchestrate the ETL process and manage task distribution.

3. **PostgreSQL**: Temporary storage and metadata management.

4. **Amazon S3**: Raw data storage.

5. **AWS Glue**: Data cataloging and ETL jobs.

6. **Amazon Athena**: SQL-based data transformation.

7. **Amazon Redshift**: Data warehousing and analytics.
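
One way to picture how these components hand data to each other is as a small dependency graph. The sketch below uses the standard-library `graphlib` instead of Airflow itself, and the stage names and edges are assumptions read off the list order, not taken from the repository's DAG. PostgreSQL and Celery are left out because they support Airflow rather than sit in the data path.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Each stage maps to the set of stages it depends on. The edges are an
# assumption drawn from the list order, not from the repository's DAG.
PIPELINE = {
    "reddit_api": set(),
    "airflow_extract": {"reddit_api"},
    "s3_raw": {"airflow_extract"},
    "glue_etl": {"s3_raw"},
    "athena_sql": {"glue_etl"},
    "redshift_load": {"athena_sql"},
}

# static_order() yields each stage only after all of its dependencies.
run_order = list(TopologicalSorter(PIPELINE).static_order())
print(" -> ".join(run_order))
```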

## Prerequisites

- AWS Account with appropriate permissions for S3, Glue, Athena, and Redshift.

- Reddit API credentials.

- A working Docker installation (with Docker Compose).

- Python 3.9 or higher
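
The local prerequisites can be checked with a short script before setup. This is a hedged sketch: `missing_prerequisites` is a hypothetical helper, it only looks for a `docker` executable on `PATH` and the interpreter version, and it does not verify AWS permissions or Reddit API credentials.

```python
import shutil
import sys

MIN_PYTHON = (3, 9)


def missing_prerequisites(version=sys.version_info, which=shutil.which):
    """Return a list of human-readable problems; an empty list means ready."""
    problems = []
    if tuple(version[:2]) < MIN_PYTHON:
        problems.append("Python 3.9 or higher is required")
    if which("docker") is None:
        problems.append("docker was not found on PATH")
    return problems


for problem in missing_prerequisites():
    print(problem)
```

The `version` and `which` parameters exist only so the check can be exercised without changing the machine it runs on.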

## System Setup

1. Clone the repository.

```bash

git clone https://github.com/airscholar/RedditDataEngineering.git

```

2. Create a virtual environment.

```bash

python3 -m venv venv

```

3. Activate the virtual environment.

```bash

source venv/bin/activate

```

4. Install the dependencies.

```bash

pip install -r requirements.txt

```

5. Rename the example configuration file, then add your credentials to it.

```bash

mv config/config.conf.example config/config.conf

```

6. Start the containers.

```bash

docker-compose up -d

```

7. Launch the Airflow web UI.

```bash

# `open` is macOS-specific; on Linux use `xdg-open`, or visit the URL in a browser.
open http://localhost:8080

```
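
Steps 5 and 7 can also be checked from Python. The sketch below makes two assumptions. First, the section and key names in `EXAMPLE_CONF` are placeholders, so consult `config/config.conf.example` for the real ones. Second, it assumes the Airflow webserver's `/health` endpoint returns JSON with `metadatabase` and `scheduler` entries, as it does in Airflow 2.x.

```python
import configparser
import json
import urllib.request

# Hypothetical example contents; check config/config.conf.example for the
# real section and key names.
EXAMPLE_CONF = """
[api_keys]
reddit_client_id = your-client-id
reddit_secret_key = your-secret

[aws]
aws_bucket_name = your-bucket
"""


def load_settings(text):
    """Parse INI-style text and fail early on blank values."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    for section in parser.sections():
        for key, value in parser.items(section):
            if not value.strip():
                raise ValueError(f"[{section}] {key} is empty")
    return parser


def airflow_is_healthy(payload):
    """True when the metadata database and scheduler both report healthy."""
    return all(
        payload.get(part, {}).get("status") == "healthy"
        for part in ("metadatabase", "scheduler")
    )


def fetch_health(base_url="http://localhost:8080"):
    """Fetch the webserver's /health JSON (requires the containers to be up)."""
    with urllib.request.urlopen(f"{base_url}/health", timeout=5) as response:
        return json.load(response)


settings = load_settings(EXAMPLE_CONF)
print(settings["aws"]["aws_bucket_name"])
```

Once the containers are running, `airflow_is_healthy(fetch_health())` should return `True` when the scheduler and metadata database are up.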

## Video

[Video walkthrough on YouTube](https://www.youtube.com/watch?v=LSlt6iVI_9Y)