https://github.com/ansonhex/llama3-nas

This is a script to locally build Llama3 on NAS

- Host: GitHub

- URL: https://github.com/ansonhex/llama3-nas

- Owner: ansonhex

- Created: 2024-04-26T23:32:51.000Z (about 1 year ago)

- Default Branch: master

- Last Pushed: 2024-04-27T00:09:19.000Z (about 1 year ago)

- Last Synced: 2025-04-11T20:04:14.756Z (3 months ago)

- Topics: llama3, nas

- Homepage:

- Size: 1000 Bytes

- Stars: 7

- Watchers: 1

- Forks: 1

- Open Issues: 0

Metadata Files:

- Readme: README.md

README

# Running Llama3 on a NAS

These are simple instructions for running Llama3 locally on your NAS using [Open WebUI](https://github.com/open-webui/open-webui) and [Ollama](https://github.com/ollama/ollama).

## Hardware

- **[UGREEN NASync DXP480T Plus](https://www.kickstarter.com/projects/urgreen/ugreen-nasync-next-level-storage-limitless-possibilities?ref=9q75gj)**
  - CPU: Intel® Core™ i5-1235U Processor (12M Cache, up to 4.40 GHz, 10 Cores 12 Threads)
  - RAM: 8 GB

## Setup Instructions

### 1. Create Necessary Directories

Open a terminal and run the following commands to create directories for your AI models and the web interface (replace `/home/ansonhe` with your own home directory):

```sh
$ mkdir -p /home/ansonhe/AI/ollama
$ mkdir -p /home/ansonhe/AI/Llama3/open-webui
```
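The commands above hard-code the author's home directory. A minimal sketch of the same step with the base path held in a variable follows; `AI_BASE` is a hypothetical name, and its default of `$HOME/AI` is an assumption that mirrors the layout above.

```sh
#!/bin/sh
# Hypothetical variable: base directory for all AI data (defaults to ~/AI).
AI_BASE="${AI_BASE:-$HOME/AI}"

mkdir -p "$AI_BASE/ollama"             # Ollama model storage
mkdir -p "$AI_BASE/Llama3/open-webui"  # Open WebUI data

# Show the directories that now exist.
ls -d "$AI_BASE/ollama" "$AI_BASE/Llama3/open-webui"
```

Run it as `AI_BASE=/some/other/path sh script.sh` to place the data elsewhere, for example on a larger storage volume.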

### 2. Deploy Using Docker

Copy the provided `docker-compose.yml` file to your directory, then start the services using Docker:

```sh
$ sudo docker-compose up -d
```
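The repository's `docker-compose.yml` is not reproduced in this README, so its exact contents are unknown here. As a rough, hypothetical stand-in, the script below writes a compose file for the two services. It assumes the official `ollama/ollama` and `ghcr.io/open-webui/open-webui:main` images, host networking (which would put Open WebUI on port 8080 and Ollama on port 11434 of the NAS, matching the ports used in the following steps), and the directory layout from step 1 under a hypothetical `AI_BASE` variable. Compare it with the real file before relying on it, and write it before running `docker-compose up -d`.

```sh
#!/bin/sh
# HYPOTHETICAL docker-compose.yml, not the repository's actual file.
# Assumes host networking and the directory layout from step 1.
AI_BASE="${AI_BASE:-$HOME/AI}"

# Unquoted EOF so that ${AI_BASE} is expanded into the volume paths.
cat > docker-compose.yml <<EOF
services:
  ollama:
    image: ollama/ollama
    container_name: ollama
    network_mode: host   # Ollama API on NAS port 11434
    volumes:
      - ${AI_BASE}/ollama:/root/.ollama
    restart: unless-stopped

  open-webui:
    image: ghcr.io/open-webui/open-webui:main
    container_name: open-webui
    network_mode: host   # web UI on NAS port 8080
    volumes:
      - ${AI_BASE}/Llama3/open-webui:/app/backend/data
    depends_on:
      - ollama
    restart: unless-stopped
EOF
```

Host networking is chosen here only because it keeps the port numbers identical to the ones this guide uses; a bridge network with explicit `ports:` mappings would also work but changes how the containers reach each other.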

### 3. Access the Web Interface

Open a web browser and go to `http://YOUR_NAS_IP:8080`.
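Open WebUI can take a little while to start after the containers come up. A small polling helper, sketched here as a hypothetical `wait_for_http` function that needs `curl`, waits until the page answers before you open it in the browser.

```sh
#!/bin/sh
# Hypothetical helper: poll an HTTP URL until it responds or the attempts run out.
# Requires curl. Usage: wait_for_http URL [ATTEMPTS]
wait_for_http() {
  url="$1"
  attempts="${2:-30}"
  i=1
  while [ "$i" -le "$attempts" ]; do
    # -s: quiet, -f: treat HTTP errors as failure, --max-time: cap each try
    if curl -sf -o /dev/null --max-time 2 "$url"; then
      echo "up: $url"
      return 0
    fi
    # Pause between attempts, but not after the last one.
    if [ "$i" -lt "$attempts" ]; then
      sleep 2
    fi
    i=$((i + 1))
  done
  echo "no response from $url after $attempts attempts" >&2
  return 1
}

# Example (replace YOUR_NAS_IP):
# wait_for_http "http://YOUR_NAS_IP:8080" 60
```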

### 4. Configure Ollama Models

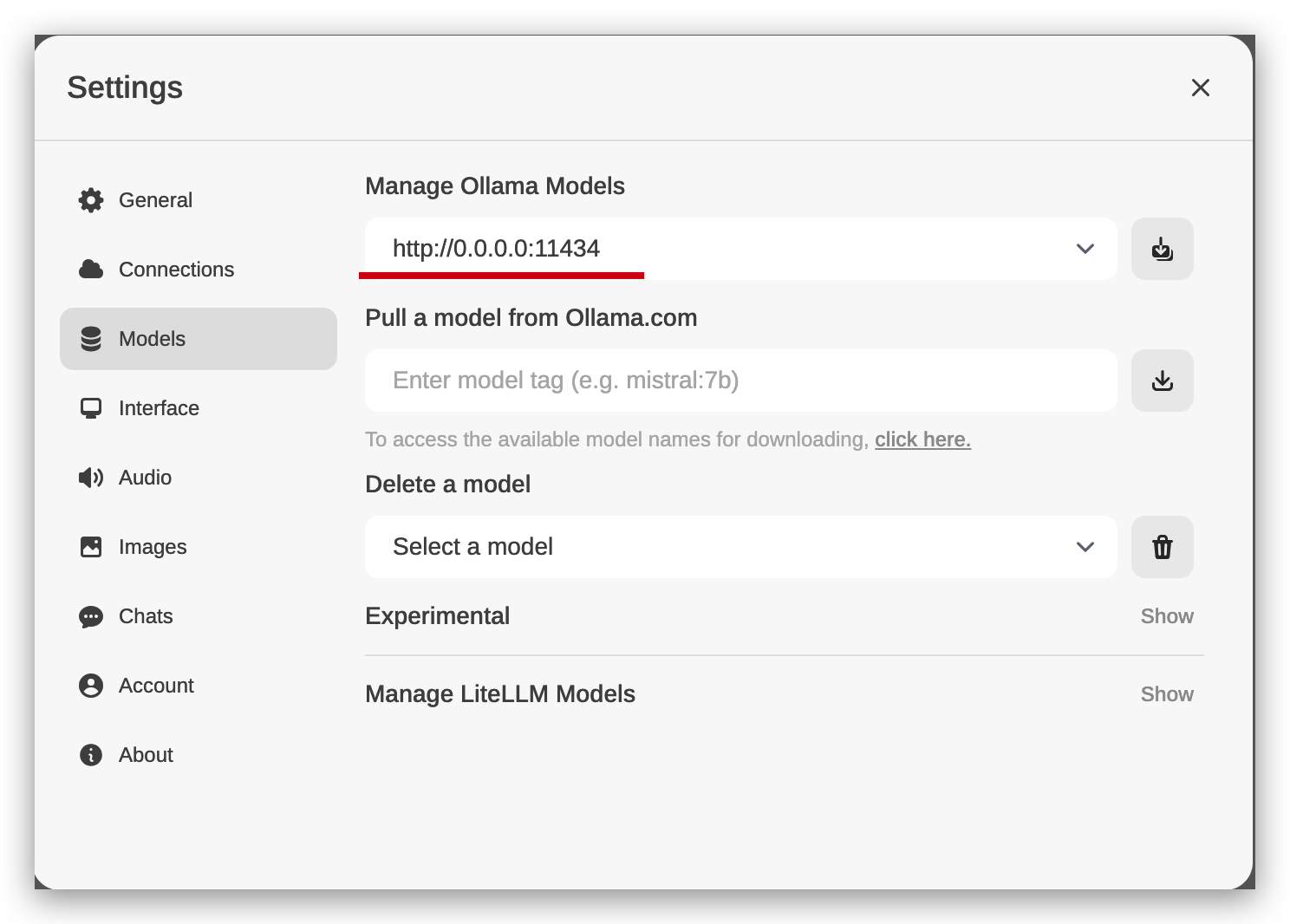

In the web interface, change the Ollama Models address to `0.0.0.0:11434`.
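Before changing the address in the UI, it can help to confirm that Ollama answers on port 11434. Ollama's HTTP API has a `GET /api/tags` endpoint that lists locally available models; the hypothetical `ollama_models` helper below prints just the model names. `OLLAMA_URL` and its `localhost` default are assumptions (run it on the NAS, or point it at the NAS IP), and the parsing is deliberately crude to avoid needing `jq`. The `0.0.0.0:11434` address presumably works because both containers share the host's network; with separate container networks it would not reach Ollama.

```sh
#!/bin/sh
# Hypothetical variable: where the Ollama API is reachable.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Print one model name per line from Ollama's /api/tags response.
ollama_models() {
  # Crude JSON scraping with grep/cut instead of a real JSON parser.
  curl -s --max-time 5 "$OLLAMA_URL/api/tags" \
    | grep -o '"name":"[^"]*"' \
    | cut -d'"' -f4
}

# Example: ollama_models
```

An empty result with no error means Ollama is reachable but has no models yet; a curl failure means the address or port is wrong.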

### 5. Download the Llama3 Model

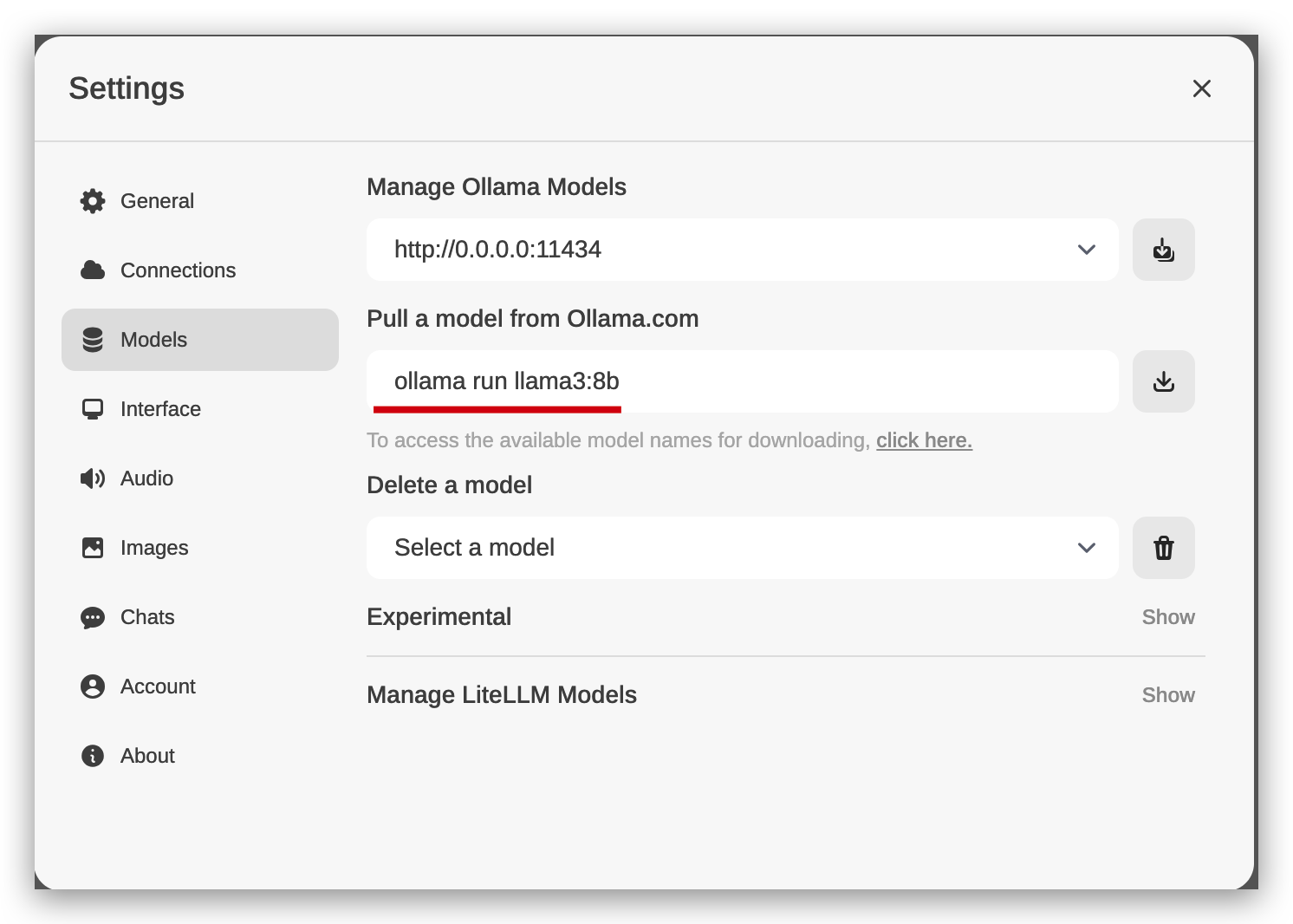

Download the Llama3 model with **`ollama run llama3:8b`**. Only the 8B version is used here because of the NAS's limited performance.
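`ollama run` also opens an interactive chat once the download finishes. If you prefer to fetch the model from the NAS shell, `ollama pull` downloads it without starting a session. The sketch below runs it inside the Ollama container; the container name `ollama` is an assumption (check yours with `sudo docker ps`), and `pull_model` is a hypothetical helper name.

```sh
#!/bin/sh
# Assumed container name and model tag; override via environment if yours differ.
OLLAMA_CONTAINER="${OLLAMA_CONTAINER:-ollama}"
MODEL="${MODEL:-llama3:8b}"

# Download the model inside the Ollama container without opening a chat.
pull_model() {
  sudo docker exec "$OLLAMA_CONTAINER" ollama pull "$MODEL"
}

# Example: pull_model
```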

### 6. Set Llama3 as the Default Model

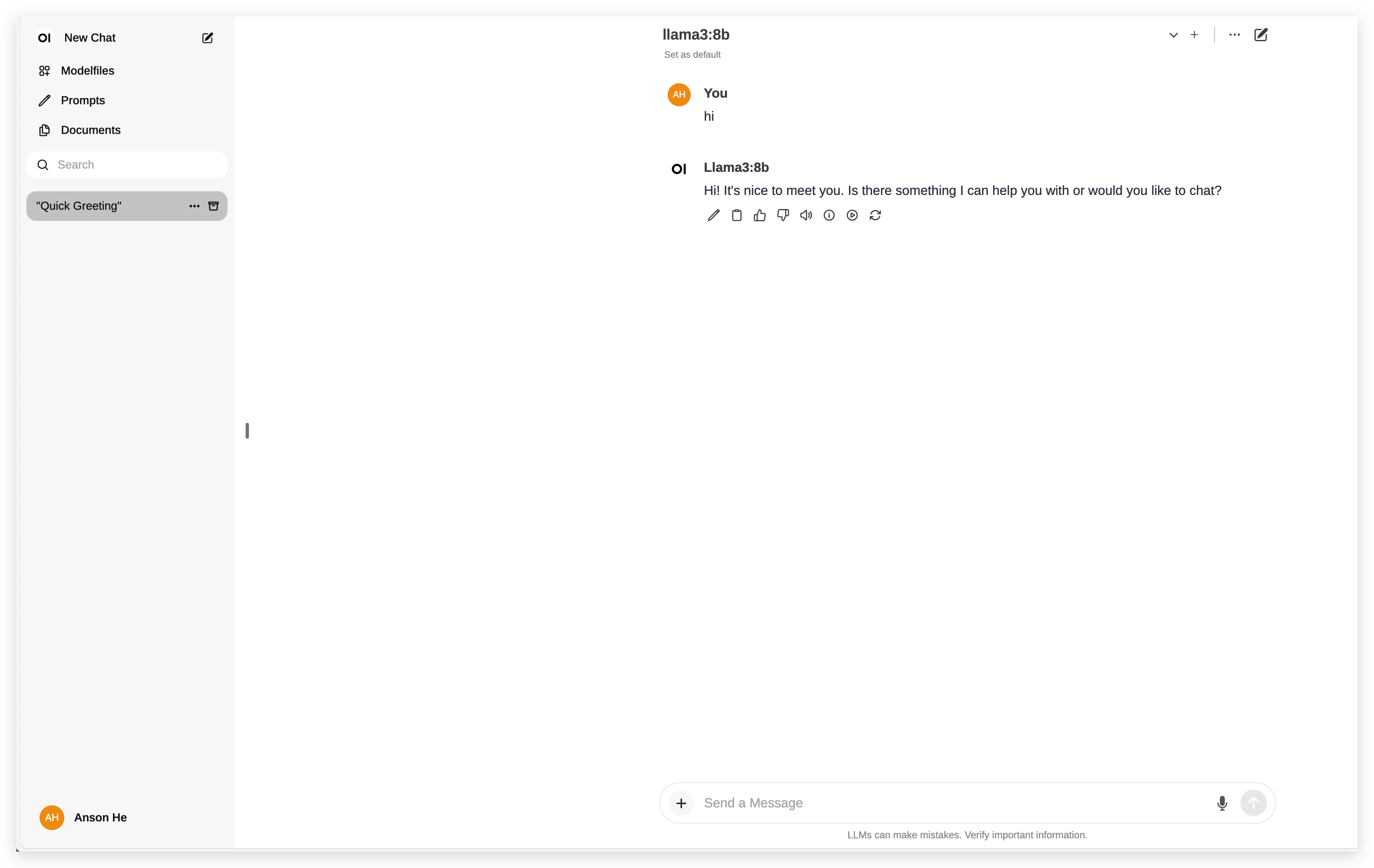

After the model has been downloaded, you can select Llama3 as your default model and host it locally.
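Since the model is served by Ollama, other machines on your network can also query it without the web UI through Ollama's `POST /api/generate` endpoint. A minimal sketch, assuming Ollama is reachable at the hypothetical `OLLAMA_URL` default and that prompts contain no double quotes or backslashes (the payload is built with `printf` and is not JSON-escaped); `generate_payload` and `ask_llama3` are illustrative names.

```sh
#!/bin/sh
# Hypothetical defaults; point OLLAMA_URL at http://YOUR_NAS_IP:11434 from another machine.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"
MODEL="${MODEL:-llama3:8b}"

# Build the request body. "stream": false asks for one JSON object instead of a stream.
# NOTE: no JSON escaping is done, so keep quotes and backslashes out of the prompt.
generate_payload() {
  printf '{"model":"%s","prompt":"%s","stream":false}' "$1" "$2"
}

# Send a single prompt and print Ollama's raw JSON reply.
ask_llama3() {
  curl -s "$OLLAMA_URL/api/generate" \
    -H 'Content-Type: application/json' \
    -d "$(generate_payload "$MODEL" "$1")"
}

# Example: ask_llama3 "Why is the sky blue?"
```

The generated text is in the `response` field of the returned JSON.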

## Performance Considerations

Keep in mind that the NAS's CPU may still be a bottleneck when running large language models (LLMs): it is a low-power laptop-class processor, so responses can be slow even with the 8B model.
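A rough way to gauge whether a model will fit is to compare the NAS's available memory with the model's size. The sketch below reads `MemAvailable` from `/proc/meminfo`; the `MODEL_GB=5` threshold is an assumption based on the roughly 4.7 GB download of Ollama's default `llama3:8b` build, and real usage also grows with context length. `mem_available_gb` and `check_headroom` are hypothetical names.

```sh
#!/bin/sh
# Assumed memory footprint of the model, in GiB (rough, not measured).
MODEL_GB="${MODEL_GB:-5}"

# Print MemAvailable from a meminfo-style file in whole GiB (rounded down).
mem_available_gb() {
  awk '/^MemAvailable:/ { print int($2 / 1048576) }' "${1:-/proc/meminfo}"
}

# Compare available memory with MODEL_GB and report the logical CPU count.
check_headroom() {
  avail="$(mem_available_gb "${1:-/proc/meminfo}")"
  avail="${avail:-0}"  # treat a missing MemAvailable line as 0
  if [ "$avail" -ge "$MODEL_GB" ]; then
    echo "ok: ${avail} GiB available"
  else
    echo "warning: only ${avail} GiB available for a ~${MODEL_GB} GiB model"
  fi
  echo "logical CPUs: $(nproc)"
}

# Example: check_headroom
```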