https://github.com/armandpl/wandb-jetracer

Training a racecar with W&B!

- Host: GitHub

- URL: https://github.com/armandpl/wandb-jetracer

- Owner: Armandpl

- Created: 2021-04-23T08:49:16.000Z (over 4 years ago)

- Default Branch: master

- Last Pushed: 2023-05-12T16:34:16.000Z (over 2 years ago)

- Last Synced: 2025-04-02T07:35:15.193Z (7 months ago)

- Topics: jetracer, jetson-nano, machine-learning, nvidia, object-detection, self-driving-car, weights-and-biases

- Language: Python

- Homepage:

- Size: 526 KB

- Stars: 10

- Watchers: 1

- Forks: 0

- Open Issues: 27

Metadata Files:

- Readme: README.md

README

# 🏁🏎️💨 = W&B ➕ NVIDIA Jetracer

A picture of the car along with its POV

This project builds on top of the [NVIDIA Jetracer](https://github.com/NVIDIA-AI-IOT/jetracer) project to instrument it with [Weights&Biases](https://wandb.ai/site), making it easier to train, evaluate and refine models.

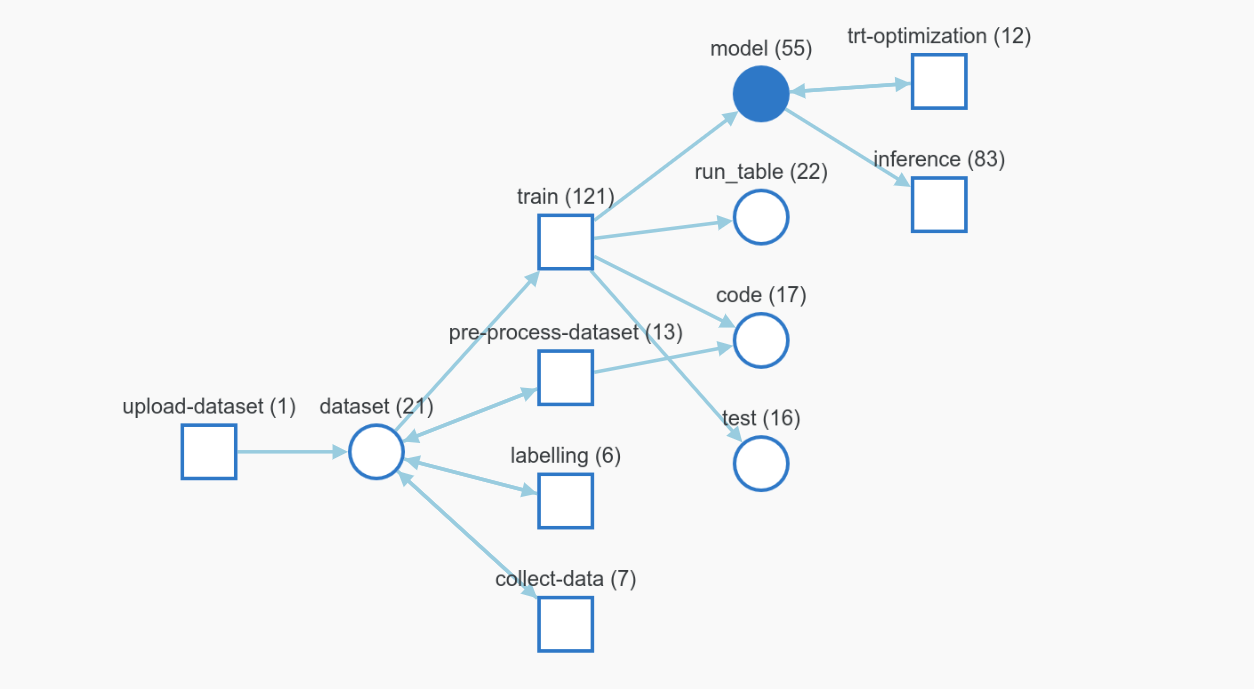

It features a full pipeline to effortlessly `collect_data`, `label` it, `train`/`optimize` models and finally `drive` the car while monitoring how the model is doing.

Weights&Biases Artifacts' graph showing the whole pipeline.
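As a rough illustration of how one stage can hand data to the next through W&B Artifacts, here is a minimal sketch. It is not the code from this repo: the project name `racecar`, the artifact name `racetrack-images`, the job types and the helper functions are all assumptions made for the example. Only `wandb.init`, `wandb.Artifact`, `add_file`, `log_artifact`, `use_artifact` and `download` are actual W&B calls.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def list_images(image_dir):
    """Return the image files in image_dir, sorted by path."""
    return sorted(
        p for p in Path(image_dir).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


def upload_images(image_dir, project="racecar", name="racetrack-images"):
    """Log every image in image_dir as one dataset artifact.

    The project and artifact names are placeholders, not the repo's own.
    """
    import wandb  # imported lazily so list_images works without wandb installed

    run = wandb.init(project=project, job_type="collect_data")
    artifact = wandb.Artifact(name, type="dataset")
    for path in list_images(image_dir):
        artifact.add_file(str(path))
    run.log_artifact(artifact)
    run.finish()


def download_images(project="racecar", name="racetrack-images"):
    """Fetch the latest version of the dataset artifact and return its local path."""
    import wandb

    run = wandb.init(project=project, job_type="label")
    local_dir = run.use_artifact(f"{name}:latest").download()
    run.finish()
    return local_dir
```

Because each stage declares which artifact it consumes and which it produces, W&B can draw the kind of lineage graph shown above.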

## Pipeline overview

The repo is meant to be used by running (and modifying!) the scripts under `src/wandb_jetracer/scripts`. These scripts let you train a model to detect the center of the racetrack, which is then fed to a control policy that drives the car.

1. `collect_data.py` will take pictures using the car's camera and upload them to Weights&Biases. It should be run while manually driving the car around.

2. `label.py` is a labelling utility. It will download the images from the previous step to a computer so you can annotate them with the relevant labels. The labels will then be added to the dataset stored on Weights&Biases servers.

3. [`wandb_jetracer_training.ipynb`](https://colab.research.google.com/github/Armandpl/wandb-jetracer/blob/master/src/wandb_jetracer_training.ipynb) is a Colab notebook used to download the same dataset, train a model and upload its weights to WandB.

4. `trt_optim` is meant to be run on the car. It will convert the latest trained model to [TensorRT](https://developer.nvidia.com/tensorrt) for inference.

5. `drive.py` will take the optimized model and use it to drive the car. It will also log sensor data (IMU, camera), system metrics ([jetson stats](https://github.com/rbonghi/jetson_stats), inference time) and the control signal to WandB. This helps with monitoring the model's performance in production.
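To make the last step more concrete, here is a minimal sketch of one control iteration: a predicted track center is turned into a steering command, and the inference time is measured so it can be logged. This is not the repo's actual control policy. The proportional rule, the `[-1, 1]` coordinate and sign conventions, and the names `steering_from_center`, `drive_step` and `predict_center` are assumptions made for illustration.

```python
import time


def steering_from_center(center_x, gain=1.0):
    """Map a predicted track-center x in [-1, 1] to a steering command in [-1, 1].

    0.0 means the center is straight ahead; the sign convention is an assumption.
    """
    return max(-1.0, min(1.0, gain * center_x))


def drive_step(predict_center, frame, gain=1.0):
    """Run one control iteration and return the values worth logging."""
    start = time.perf_counter()
    center_x = predict_center(frame)  # hypothetical model call
    inference_time = time.perf_counter() - start
    steering = steering_from_center(center_x, gain)
    return {
        "center_x": center_x,
        "steering": steering,
        "inference_time_s": inference_time,
    }


# Stand-in for the optimized model: it always sees the center slightly off-axis.
metrics = drive_step(lambda frame: 0.25, frame=None, gain=2.0)
print(metrics["steering"])  # 0.5
```

A dictionary like `metrics` could be passed to `wandb.log` on every iteration, which is one way to get the kind of monitoring described in step 5.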

## Building the car

Check out [NVIDIA Jetracer](https://github.com/NVIDIA-AI-IOT/jetracer).

## Setup and dependencies

These scripts are run on three different types of machines: the actual [embedded jetson nano computer](https://developer.nvidia.com/embedded/jetson-nano-developer-kit) on the car, a machine used for labelling and a Colab instance used for training.

They all rely on different dependencies:

- Training dependencies are installed in the Colab notebook so you don't need to worry about those.

- Labelling dependencies can be installed in a conda env using `conda env create -f labelling_env.yml`

- Dependencies for the car are slightly trickier to get right. You'll find instructions [here](https://github.com/Armandpl/wandb-jetracer/blob/master/JETSON_SETUP.md). Feel free to open an issue if you run into trouble!

## Disclaimer about default throttle values

Even though default throttle values are set in the scripts under `/src/scripts`, I recommend testing them while the car is on a stand and its wheels are not touching the ground. Depending on how your ESC was calibrated, a throttle value of 0.0002 might mean going full reverse, and your car might fly off into a wall.
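While experimenting, it can also help to bound whatever throttle a script requests. The sketch below is a hypothetical helper, not something from this repo. It assumes throttle commands are floats where 0 is neutral, and its limits are placeholders rather than calibrated values. It only caps the commanded value, so it does not replace testing on a stand: a miscalibrated ESC can still interpret a small value as a large one.

```python
def limit_throttle(requested, max_forward=0.1, max_reverse=0.1):
    """Clamp a throttle command to a small band around zero.

    The limits are placeholders, not calibrated values: tune them for your ESC.
    """
    return max(-max_reverse, min(max_forward, requested))


# Requests inside the band pass through; larger ones are capped.
for requested in (0.05, 0.8, -1.0):
    print(requested, "->", limit_throttle(requested))
```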

## Testing

After installing the labelling dependencies, run `pytest`.

## Footnote

Feel free to open GitHub issues if you have any questions!

## Resources

- [Donkey car](https://www.donkeycar.com)

- [WandB dashboard for this project](https://wandb.ai/wandb/racecar)

- [NVIDIA Jetracer](https://github.com/NVIDIA-AI-IOT/jetracer)