https://github.com/automix-llm/automix

Mixing Language Models with Self-Verification and Meta-Verification

https://github.com/automix-llm/automix

few-shot-learning large-language-models model-selection prompting question-answering

Last synced: 8 months ago

JSON representation

Mixing Language Models with Self-Verification and Meta-Verification

- Host: GitHub

- URL: https://github.com/automix-llm/automix

- Owner: automix-llm

- License: apache-2.0

- Created: 2023-10-09T20:53:55.000Z (about 2 years ago)

- Default Branch: main

- Last Pushed: 2024-12-12T22:03:03.000Z (about 1 year ago)

- Last Synced: 2024-12-12T23:18:01.768Z (about 1 year ago)

- Topics: few-shot-learning, large-language-models, model-selection, prompting, question-answering

- Language: Jupyter Notebook

- Homepage:

- Size: 2.26 MB

- Stars: 97

- Watchers: 3

- Forks: 8

- Open Issues: 0

-

Metadata Files:

- Readme: README.md

- License: LICENSE

Awesome Lists containing this project

README

## What is AutoMix?

The idea behind AutoMix is simple:

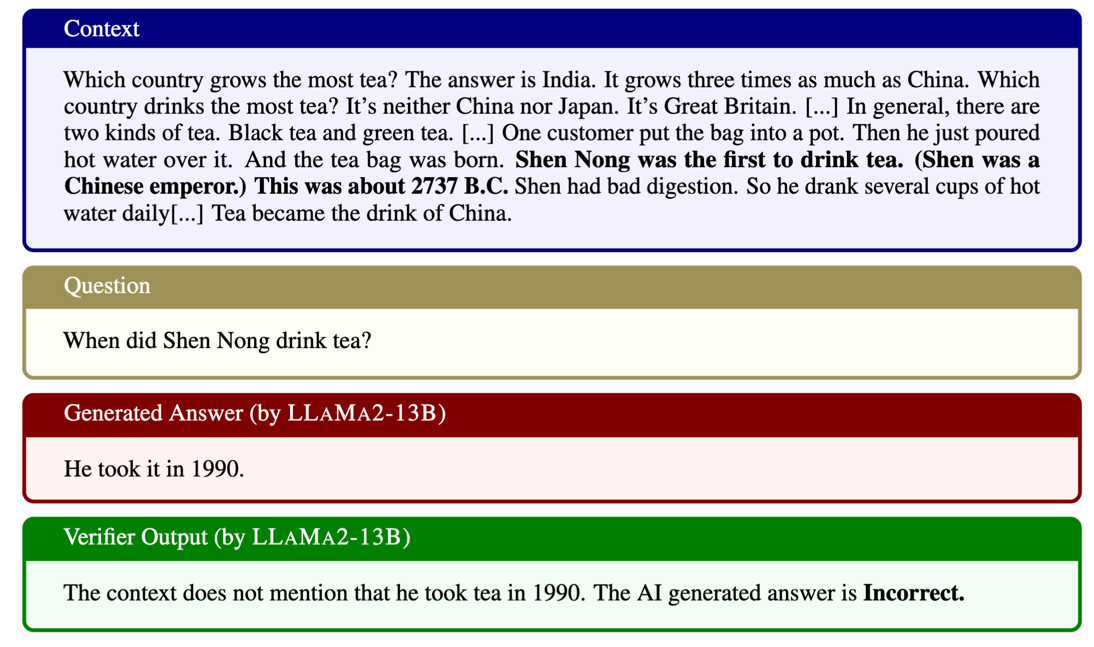

1. Send a query to small language model (SLM), gets a noisy label on its correctness using **few-shot self-verification** done with the same model (SLM).

2. Use a meta-verifier to _double check_ verifier's output, and route the query to a larger language model (LLM) if needed.

## Self-Verification and Meta-verification

At the center of automix is the idea of context-grounded self-verification:

- However, such verification can often be noisy, so we introduce an additional layer of meta-verification using [POMDPs](https://www.pomdp.org/) or thresholding.

## Notebooks

### Running inference

- [**Step1 Run inference to solve tasks**](https://github.com/automix-llm/automix/blob/main/colabs/Step1_SolveQueries.ipynb) - Task prompts, code to run inference from different language models.

[](https://colab.research.google.com/github/automix-llm/automix/blob/main/colabs/Step1_SolveQueries.ipynb)

### Few-shot self-verification

- [**Step2 Self Verify**](https://github.com/automix-llm/automix/blob/main/colabs/Step2_SelfVerify.ipynb) - Verification prompts, code to run verification on the outputs produced in step 1.

[](https://colab.research.google.com/github/automix-llm/automix/blob/main/colabs/Step2_SelfVerify.ipynb)

### Meta-verification

- [**Step3 Meta Verify**](https://github.com/automix-llm/automix/blob/main/colabs/Step3_MetaVerify.ipynb) - Run meta-verification using different AutoMix methods on outputs produced from Step 2.

[](https://colab.research.google.com/github/automix-llm/automix/blob/main/colabs/Step3_MetaVerify.ipynb)

- You can run `pip install automix-llm' to use the meta-verifier system wide.

### Replicating the results

- To replicate the results in the paper, please run `python scripts paper_results.py`

## Data and Outputs

- We experiment with 5 datasets: CNLI, CoQA, NarrativeQA, QASPER, and Quality.

- Note: The dataset are sourced from [scrolls](https://www.scrolls-benchmark.com/). Please cite scrolls and the appropriate sources if you use these datasets. We are making them available in a sinlge jsonl file for ease of use and reproducibility. For details on how CoQa was prepared, please see [**Preparing COQA**](https://github.com/automix-llm/automix/blob/main/colabs/Preparing_COQA.ipynb).

- **Inputs:** All input data for the AutoMix project is provided in `automix_inputs.jsonl`. You can access and download it directly from [Google Drive](https://drive.google.com/file/d/1dhyt7UuYumk9Gae9eJ_mpTVrLeSTuRht/view?usp=sharing).

- **Outputs from LLAMA2:** The outputs generated using the LLAMA2 model are stored in `automix_llama2_outputs.jsonl`, available alongside the input file in the linked Google Drive.

```

id: A unique identifier for each question and answer pair.

pid: An additional identifier potentially mapping to specific instances or model variants.

base_ctx: The context.

question: Input question or query.

output: Ground truth.

dataset: .

llama13b_pred_ans: The answer generated by the llama13b model.

llama70b_pred_ans: The answer generated by the llama70b model.

llama13b_ver: Verification outputs of the llama13b model’s answers.

```

### Stats

```txt

--------------------------------

| Dataset | Split | Count |

|--------------|-------|-------|

| cnli | train | 7191 |

| | val | 1037 |

| coqa | train | 3941 |

| | val | 3908 |

| narrative_qa | train | 9946 |

| | val | 5826 |

| qasper | train | 2556 |

| | val | 1715 |

| quality | train | 2515 |

| | val | 2085 |

--------------------------------

Name: split, dtype: int64

```

## Citation

```

@misc{madaan2023automix,

title={AutoMix: Automatically Mixing Language Models},

author={Aman Madaan and Pranjal Aggarwal and Ankit Anand and Srividya Pranavi Potharaju and Swaroop Mishra and Pei Zhou and Aditya Gupta and Dheeraj Rajagopal and Karthik Kappaganthu and Yiming Yang and Shyam Upadhyay and Mausam and Manaal Faruqui},

year={2023},

eprint={2310.12963},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```