
https://github.com/bighuang624/rl-czsl

[ICMR 2023] Reference-Limited Compositional Zero-Shot Learning


- Host: GitHub

- URL: https://github.com/bighuang624/rl-czsl

- Owner: bighuang624

- Created: 2022-08-13T12:11:59.000Z

- Default Branch: master

- Last Pushed: 2023-12-12T12:06:10.000Z

- Last Synced: 2023-12-12T13:27:40.125Z

- Language: Python

- Homepage: https://kyonhuang.top/publication/reference-limited-CZSL

- Size: 196 KB

- Stars: 0

- Watchers: 1

- Forks: 0

- Open Issues: 0


Metadata Files:

- Readme: README.md


# Reference-Limited Compositional Zero-Shot Learning (RL-CZSL)

* **Title**: **[Reference-Limited Compositional Zero-Shot Learning](https://arxiv.org/pdf/2208.10046)**

* **Authors**: [Siteng Huang](https://kyonhuang.top/), [Qiyao Wei](https://qiyaowei.github.io/index.html), [Donglin Wang](https://milab.westlake.edu.cn/)

* **Institutes**: Zhejiang University, University of Cambridge, Westlake University

* **Conference**: Proceedings of the 2023 ACM International Conference on Multimedia Retrieval (ICMR 2023)

* **More details**: [[arXiv]](https://arxiv.org/pdf/2208.10046) | [[homepage]](https://kyonhuang.top/publication/reference-limited-CZSL)

**News**: We are in the process of cleaning up the source code and datasets. Since I am currently away from the university on weekdays, we expect to finish these tasks within a few weeks. For any questions, please contact huangsiteng[AT]westlake.edu.cn.

## Datasets

[Google Drive](https://drive.google.com/drive/folders/1zaNu4ay1ZiRstdFqk1S9hdwdi58YO1iF?usp=sharing)

## Running

In progress

## Overview

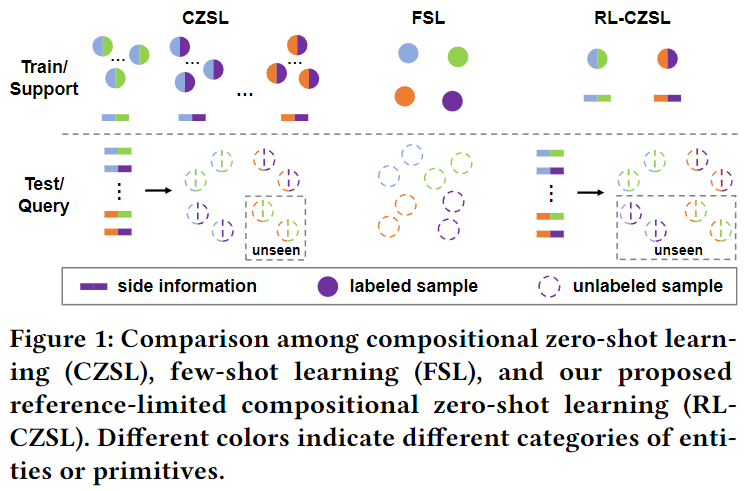

* We introduce a new problem named **reference-limited compositional zero-shot learning (RL-CZSL)**: given only a few samples of a limited set of compositions, the model must generalize to recognize unseen compositions. This setting offers a more realistic and challenging environment for evaluating compositional learners.
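To make the setting concrete, the following minimal Python sketch models compositions as (attribute, object) pairs, keeps only `k_shot` reference images for each of a few seen compositions, and lists the remaining compositions a model would have to recognize without any references. The toy vocabulary, the `(image_id, attr, obj)` tuple format, and the helpers `sample_reference_set` and `unseen_compositions` are hypothetical illustrations; they are not part of this repository or of MetaCGL, and the real benchmarks may define the unseen label space differently.

```python
import itertools
import random
from collections import defaultdict

# Hypothetical toy primitives for illustration; not taken from the RL-CZSL datasets.
ATTRS = ["red", "wet", "old"]
OBJS = ["car", "dog", "shoe"]


def sample_reference_set(samples, seen_pairs, k_shot, seed=0):
    """Keep at most `k_shot` image ids per seen (attribute, object) composition.

    `samples` is assumed to be a list of (image_id, attr, obj) tuples.
    """
    rng = random.Random(seed)
    by_pair = defaultdict(list)
    for image_id, attr, obj in samples:
        if (attr, obj) in seen_pairs:
            by_pair[(attr, obj)].append(image_id)
    # Subsample each seen composition down to the few available references.
    return {pair: rng.sample(ids, min(k_shot, len(ids))) for pair, ids in by_pair.items()}


def unseen_compositions(seen_pairs, attrs=ATTRS, objs=OBJS):
    """Every attribute-object pair in the toy vocabulary that is absent from the seen set."""
    return sorted(set(itertools.product(attrs, objs)) - set(seen_pairs))


# Toy usage: three seen compositions with five images each, reduced to 2-shot references.
seen = {("red", "car"), ("wet", "dog"), ("old", "shoe")}
data = [(f"img{i}", a, o) for i, (a, o) in enumerate(sorted(seen) * 5)]
reference = sample_reference_set(data, seen, k_shot=2)
targets = unseen_compositions(seen)
print(len(reference), [len(v) for v in reference.values()])  # 3 [2, 2, 2]
print(len(targets))  # 6
```

Here the unseen label space is simply every remaining pair in the vocabulary; the point is only that each target recombines primitives the model has seen, with very few references per seen composition.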

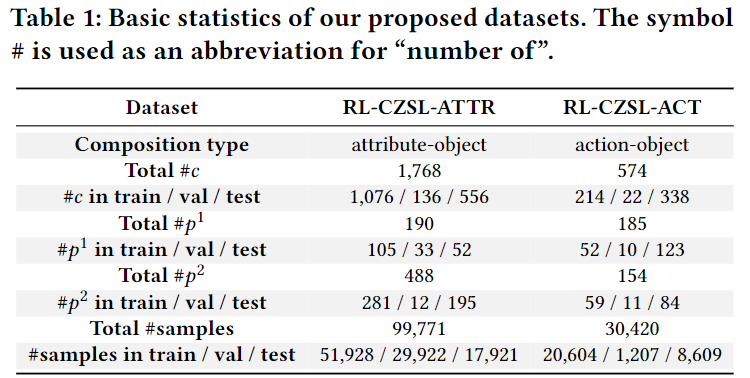

* We establish **two benchmark datasets with diverse compositional labels and well-designed data splits**, providing the required platform for systematically assessing progress on the task.
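One property a compositional data split is usually expected to satisfy can be checked mechanically. The sketch below assumes a common CZSL convention (test compositions are disjoint from training compositions, yet every attribute and object used at test time appears somewhere in training); it is not necessarily the exact rule set behind the RL-CZSL benchmarks, and the function `check_compositional_split` is a hypothetical helper, not code from this repository.

```python
def check_compositional_split(train_pairs, test_pairs):
    """Return a list of problems with a proposed split; an empty list means it passes.

    Assumed criteria (a common CZSL convention, not necessarily the exact rules
    behind the RL-CZSL benchmarks):
      1. test compositions never appear among training compositions;
      2. every attribute and object used at test time appears in training.
    """
    train_pairs, test_pairs = set(train_pairs), set(test_pairs)
    problems = []

    # Criterion 1: no composition may be shared between the two splits.
    overlap = sorted(train_pairs & test_pairs)
    if overlap:
        problems.append(f"compositions in both splits: {overlap}")

    # Criterion 2: test primitives must be covered by training compositions.
    train_attrs = {attr for attr, _ in train_pairs}
    train_objs = {obj for _, obj in train_pairs}
    missing_attrs = sorted({attr for attr, _ in test_pairs} - train_attrs)
    missing_objs = sorted({obj for _, obj in test_pairs} - train_objs)
    if missing_attrs:
        problems.append(f"attributes never seen in training: {missing_attrs}")
    if missing_objs:
        problems.append(f"objects never seen in training: {missing_objs}")
    return problems


# Toy usage with hypothetical labels.
train = [("red", "car"), ("wet", "dog"), ("old", "shoe")]
good_test = [("red", "dog"), ("wet", "shoe")]
bad_test = [("red", "car"), ("blue", "dog")]
print(check_compositional_split(train, good_test))  # []
print(check_compositional_split(train, bad_test))
```

The second call reports both the shared composition `("red", "car")` and the attribute `blue`, which no training composition contains.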

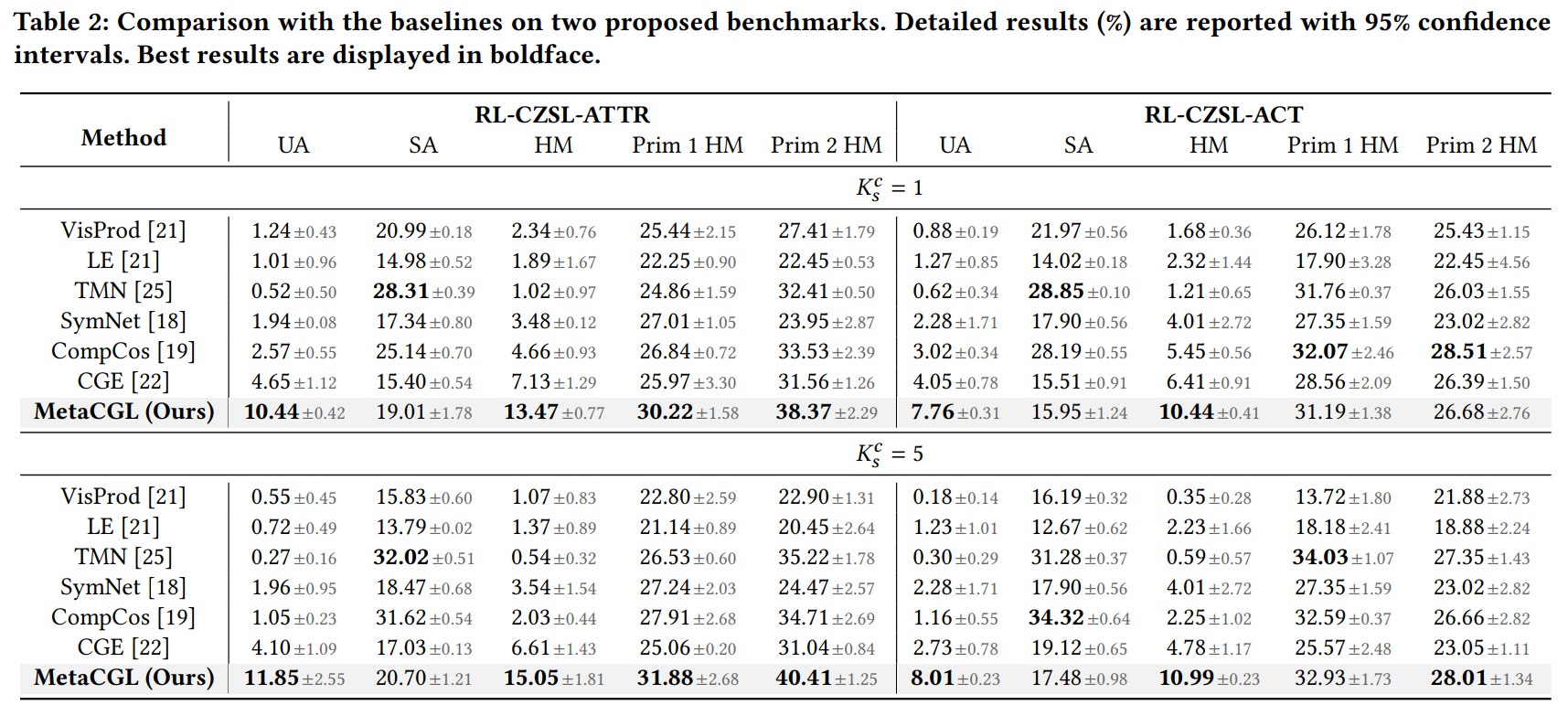

* We propose a novel method, **Meta Compositional Graph Learner (MetaCGL)**, for the challenging RL-CZSL problem. Experimental results show that MetaCGL consistently outperforms popular baselines on recognizing unseen compositions.

## Citation

If you find this work useful in your research, please cite our paper:

```bibtex
@inproceedings{Huang2023RLCZSL,
  title     = {Reference-Limited Compositional Zero-Shot Learning},
  author    = {Siteng Huang and Qiyao Wei and Donglin Wang},
  booktitle = {Proceedings of the 2023 ACM International Conference on Multimedia Retrieval},
  month     = {June},
  year      = {2023}
}
```

## Acknowledgement

Our code references the following projects:

* [czsl](https://github.com/ExplainableML/czsl)