https://github.com/bryandlee/animegan2-pytorch

PyTorch implementation of AnimeGANv2

- Host: GitHub

- URL: https://github.com/bryandlee/animegan2-pytorch

- Owner: bryandlee

- License: mit

- Created: 2021-02-16T11:34:21.000Z (about 4 years ago)

- Default Branch: main

- Last Pushed: 2023-01-06T10:26:08.000Z (over 2 years ago)

- Last Synced: 2025-04-06T09:04:31.687Z (29 days ago)

- Topics: gan, image2image, style-transfer

- Language: Jupyter Notebook

- Homepage:

- Size: 37.6 MB

- Stars: 4,427

- Watchers: 58

- Forks: 649

- Open Issues: 37

Metadata Files:

- Readme: README.md

- License: LICENSE

Awesome Lists containing this project

- awesome - bryandlee/animegan2-pytorch - PyTorch implementation of AnimeGANv2 (Jupyter Notebook)

- StarryDivineSky - bryandlee/animegan2-pytorch

README

## PyTorch Implementation of [AnimeGANv2](https://github.com/TachibanaYoshino/AnimeGANv2)

**Updates**

* `2021-10-17` Add weights for [FacePortraitV2](#additional-model-weights). [Open in Colab](https://colab.research.google.com/github/bryandlee/animegan2-pytorch/blob/main/colab_demo.ipynb)

* `2021-11-07` Thanks to [ak92501](https://twitter.com/ak92501), a [web demo](https://huggingface.co/spaces/akhaliq/AnimeGANv2) is integrated into [Huggingface Spaces](https://huggingface.co/spaces) with [Gradio](https://github.com/gradio-app/gradio).

* `2021-11-07` Thanks to [xhlulu](https://github.com/xhlulu), the `torch.hub` model is now available. See [Torch Hub Usage](#torch-hub-usage).

## Basic Usage

**Inference**

```
python test.py --input_dir [image_folder_path] --device [cpu/cuda]
```
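`test.py` runs the generator over the images in `--input_dir`. As a rough sketch of that first step, the snippet below collects the image files from a folder. The extension set and the `list_images` name are assumptions for illustration, not taken from `test.py`.

```python
import os
from pathlib import Path

# Assumed set of image extensions; the real test.py may accept a different set.
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp", ".tiff"}


def list_images(input_dir):
    """Return sorted paths of image files directly inside input_dir (not recursive)."""
    paths = []
    for name in sorted(os.listdir(input_dir)):
        path = Path(input_dir) / name
        # Match extensions case-insensitively and skip sub-directories.
        if path.is_file() and path.suffix.lower() in IMAGE_EXTS:
            paths.append(path)
    return paths
```

Sorting the listing keeps the processing order deterministic across platforms.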

## Torch Hub Usage

You can load the model via `torch.hub`:

```python
import torch

model = torch.hub.load("bryandlee/animegan2-pytorch", "generator").eval()

with torch.no_grad():  # inference only, no autograd bookkeeping
    out = model(img_tensor)  # img_tensor and out are BCHW tensors
```
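`img_tensor` above is a batch of RGB images in BCHW layout. The sketch below shows one way to prepare it from an `H×W×3` uint8 array with NumPy and to turn the output back into an image. It assumes the generator works on pixel values scaled to [-1, 1]; the helper names `to_model_input` and `from_model_output` are hypothetical.

```python
import numpy as np


def to_model_input(img):
    """HWC uint8 RGB array -> (1, 3, H, W) float32 array scaled to [-1, 1] (assumed range)."""
    x = img.astype(np.float32) / 255.0  # [0, 255] -> [0, 1]
    x = x * 2.0 - 1.0                   # [0, 1] -> [-1, 1]
    return np.ascontiguousarray(x.transpose(2, 0, 1)[None])  # HWC -> BCHW, batch of 1


def from_model_output(out):
    """(1, 3, H, W) array in roughly [-1, 1] -> HWC uint8 RGB array."""
    x = np.clip(out[0], -1.0, 1.0) * 0.5 + 0.5  # [-1, 1] -> [0, 1]
    x = x.transpose(1, 2, 0)                     # CHW -> HWC
    return np.round(x * 255.0).astype(np.uint8)
```

Wrap the result of `to_model_input` with `torch.from_numpy(...)` before calling `model`, and pass the detached CPU output through `.numpy()` before `from_model_output`.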

Currently, the following `pretrained` shorthands are available:

```python
model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="celeba_distill")
model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="face_paint_512_v1")
model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="face_paint_512_v2")
model = torch.hub.load("bryandlee/animegan2-pytorch:main", "generator", pretrained="paprika")
```
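If the shorthand comes from user input, a small check before calling `torch.hub.load` gives a clearer error for typos. This is a sketch: `hub_load_args` is a hypothetical helper, and the accepted names are only the four listed above.

```python
# The four `pretrained` shorthands listed in this README.
PRETRAINED = ("celeba_distill", "face_paint_512_v1", "face_paint_512_v2", "paprika")


def hub_load_args(pretrained="paprika", ref="main"):
    """Build (args, kwargs) for torch.hub.load, rejecting unknown shorthands early."""
    if pretrained not in PRETRAINED:
        raise ValueError(f"unknown pretrained={pretrained!r}; expected one of {PRETRAINED}")
    repo = f"bryandlee/animegan2-pytorch:{ref}"  # "owner/repo:branch" form used above
    return (repo, "generator"), {"pretrained": pretrained}
```

Usage: `args, kwargs = hub_load_args("face_paint_512_v2")`, then `model = torch.hub.load(*args, **kwargs).eval()`.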

You can also load the `face2paint` util function:

```python
import torch
from PIL import Image

face2paint = torch.hub.load("bryandlee/animegan2-pytorch:main", "face2paint", size=512)

img = Image.open(...).convert("RGB")
out = face2paint(model, img)  # `model` is a generator loaded with torch.hub as shown above
```

More details about `torch.hub` are in [the PyTorch docs](https://pytorch.org/docs/stable/hub.html).
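The `size=512` argument suggests `face2paint` brings the input to a fixed square before running the model. Assuming it center-crops to a square first (check `hubconf.py` for the actual behavior), the crop box can be computed as below; `center_square_box` is a hypothetical name.

```python
def center_square_box(width, height):
    """Return the (left, upper, right, lower) box of the largest centered square."""
    side = min(width, height)
    left = (width - side) // 2
    upper = (height - side) // 2
    # Right/lower are exclusive bounds, matching PIL's Image.crop box convention.
    return (left, upper, left + side, upper + side)


print(center_square_box(640, 480))  # (80, 0, 560, 480)
```

With Pillow, the crop and resize would then be `img.crop(center_square_box(*img.size)).resize((512, 512), Image.LANCZOS)`.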

## Weight Conversion from the Original Repo (TensorFlow)

1. Install the [original repo's dependencies](https://github.com/TachibanaYoshino/AnimeGANv2#requirements): Python 3.6, TensorFlow 1.15.0 (GPU)

2. Install torch >= 1.7.1

3. Clone the original repo and run the conversion script:

```
git clone https://github.com/TachibanaYoshino/AnimeGANv2
python convert_weights.py
```
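Whatever `convert_weights.py` does in detail, moving convolution kernels between the two frameworks requires a layout change: TensorFlow stores conv kernels as `(kh, kw, in, out)` and PyTorch as `(out, in, kh, kw)`. The NumPy sketch below shows that transpose, plus the depthwise case for networks that contain depthwise convolutions. The function names are hypothetical and this is not the script's own code.

```python
import numpy as np


def tf_conv_to_torch(kernel):
    """Regular conv: TF (kh, kw, in, out) -> PyTorch (out, in, kh, kw)."""
    return np.ascontiguousarray(np.transpose(kernel, (3, 2, 0, 1)))


def tf_depthwise_to_torch(kernel):
    """Depthwise conv: TF (kh, kw, in, mult) -> PyTorch (in * mult, 1, kh, kw),
    for use with torch.nn.Conv2d(..., groups=in)."""
    kh, kw, cin, mult = kernel.shape
    # C-order reshape places (i, m) at output channel i * mult + m, matching
    # PyTorch's grouping of `mult` consecutive output channels per input channel.
    flat = kernel.reshape(kh, kw, cin * mult)
    return np.ascontiguousarray(np.transpose(flat, (2, 0, 1))[:, None, :, :])
```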

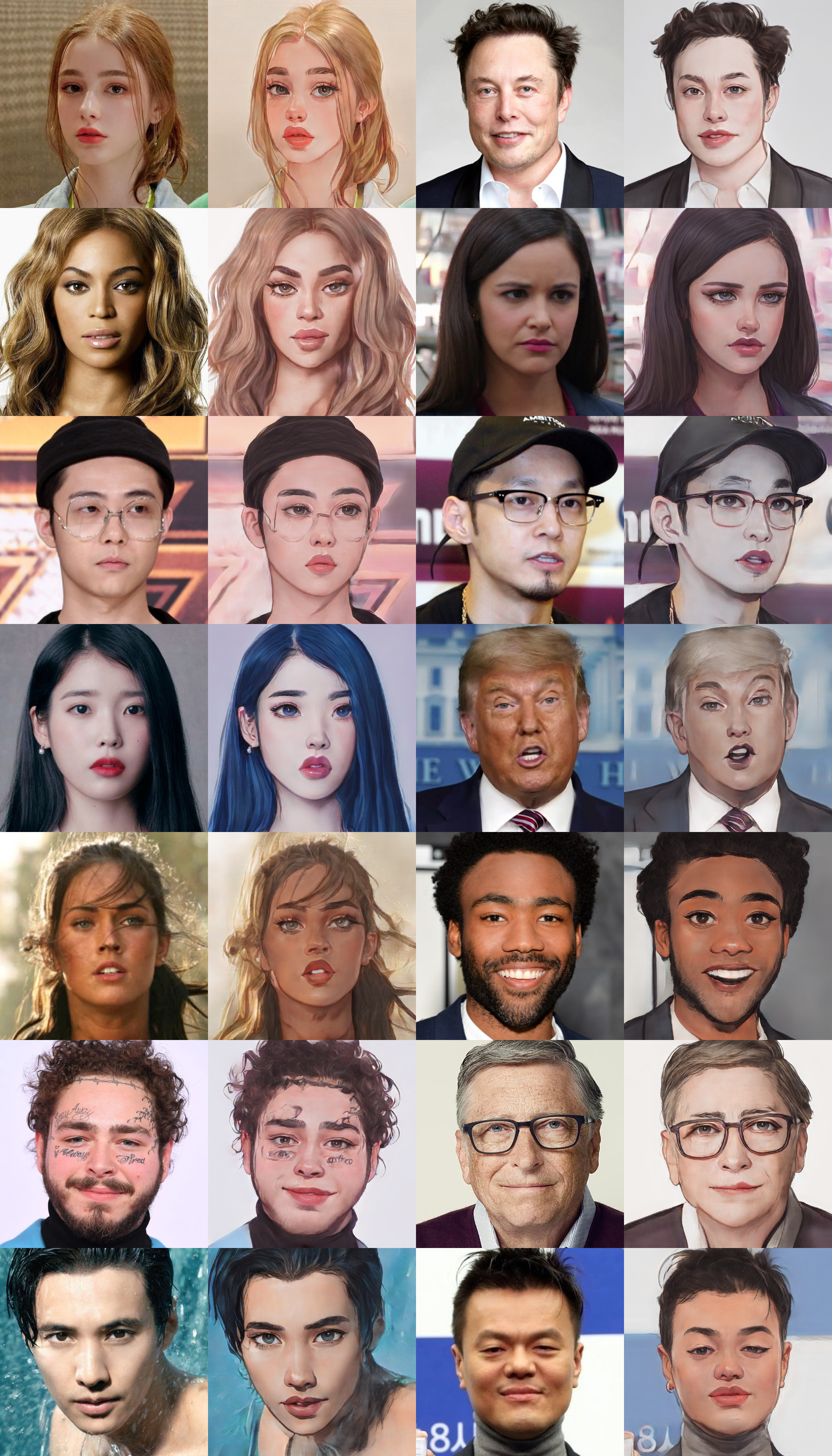

Results from the converted `Paprika` style model (from left to right: input image, original TensorFlow result, PyTorch result)

**Note:** Results from the converted weights differ slightly from the original due to the [bilinear upsample issue](https://github.com/pytorch/pytorch/issues/10604).

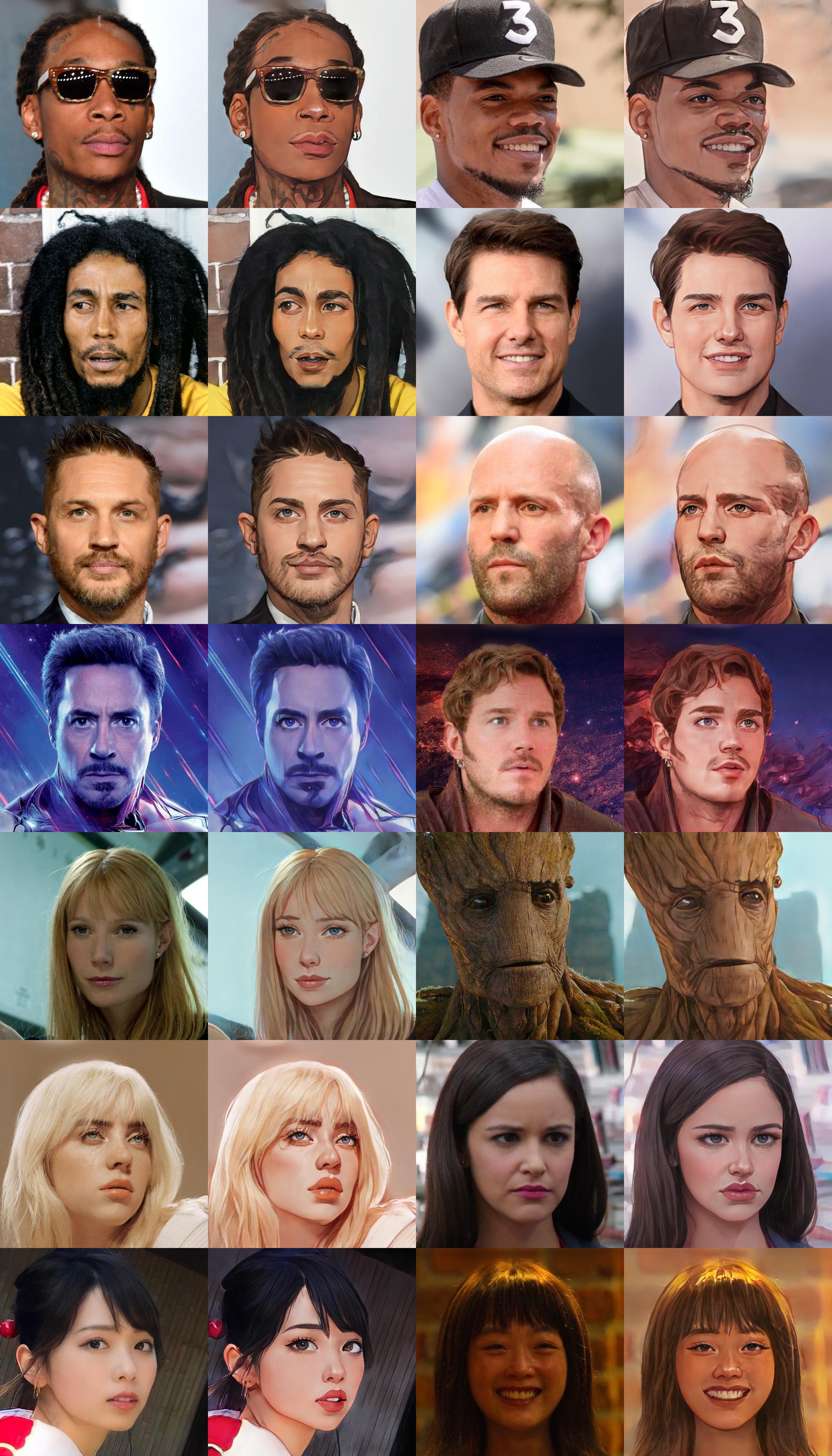
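The bilinear upsample issue concerns how each output pixel is mapped back to a source coordinate. The sketch below compares two conventions along one axis: TF 1.x `tf.image.resize_bilinear` with its defaults (no half-pixel centers) and PyTorch `F.interpolate(..., mode="bilinear", align_corners=False)` (half-pixel centers, negative coordinates clamped to 0). That these two settings are the ones involved in this conversion is an assumption.

```python
def tf1_legacy_src(dst, in_size, out_size):
    """Source coordinate for TF 1.x resize_bilinear defaults (no half-pixel centers)."""
    return dst * in_size / out_size


def torch_half_pixel_src(dst, in_size, out_size):
    """Source coordinate for PyTorch bilinear interpolate with align_corners=False."""
    src = (dst + 0.5) * in_size / out_size - 0.5
    # PyTorch clamps negative source coordinates to 0 for (bi)linear modes.
    return max(src, 0.0)


in_size, out_size = 2, 4  # 2x upsample along one axis
print([tf1_legacy_src(d, in_size, out_size) for d in range(out_size)])        # [0.0, 0.5, 1.0, 1.5]
print([torch_half_pixel_src(d, in_size, out_size) for d in range(out_size)])  # [0.0, 0.25, 0.75, 1.25]
```

Because the sampled positions differ, interpolated values differ slightly too, which is consistent with the small output differences between the TensorFlow and PyTorch results.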

## Additional Model Weights

**Webtoon Face** [[ckpt]](https://drive.google.com/file/d/10T6F3-_RFOCJn6lMb-6mRmcISuYWJXGc)

Trained on 256x256 face images. Distilled from the [webtoon face model](https://github.com/bryandlee/naver-webtoon-faces/blob/master/README.md#face2webtoon) with L2 + VGG + GAN loss, using CelebA-HQ images.
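The three loss terms are named but their weights and exact forms are not. A NumPy sketch of how such a distillation objective is commonly combined, assuming mean-squared error for the L2 and VGG-feature terms and a non-saturating adversarial term on discriminator logits. `distill_loss` and its default weights are placeholders, and extracting the VGG features is left out.

```python
import numpy as np


def distill_loss(student_out, teacher_out, student_feats, teacher_feats,
                 d_logits_fake, w_l2=1.0, w_vgg=1.0, w_gan=1.0):
    """Weighted sum of L2, VGG-feature and GAN losses (weights are placeholders)."""
    # Pixel term: MSE between student and teacher outputs on the same input.
    l2 = np.mean((student_out - teacher_out) ** 2)
    # Perceptual term: MSE between VGG feature maps, summed over the chosen layers.
    vgg = sum(np.mean((s - t) ** 2) for s, t in zip(student_feats, teacher_feats))
    # Non-saturating generator loss softplus(-D(G(x))), computed stably via logaddexp.
    gan = np.mean(np.logaddexp(0.0, -d_logits_fake))
    return w_l2 * l2 + w_vgg * vgg + w_gan * gan
```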

**Face Portrait v1** [[ckpt]](https://drive.google.com/file/d/1WK5Mdt6mwlcsqCZMHkCUSDJxN1UyFi0-)

Trained on 512x512 face images.

[Open in Colab](https://colab.research.google.com/drive/1jCqcKekdtKzW7cxiw_bjbbfLsPh-dEds?usp=sharing)

[📺](https://youtu.be/CbMfI-HNCzw?t=317)

**Face Portrait v2** [[ckpt]](https://drive.google.com/uc?id=18H3iK09_d54qEDoWIc82SyWB2xun4gjU)

Trained on 512x512 face images. Compared to v1, it applies less beautification (`🔻beautify`) and is more robust (`🔺robustness`).

[Open in Colab](https://colab.research.google.com/drive/1jCqcKekdtKzW7cxiw_bjbbfLsPh-dEds?usp=sharing)

🦑 🎮 🔥