
https://github.com/bshall/tacotron

A PyTorch implementation of Location-Relative Attention Mechanisms For Robust Long-Form Speech Synthesis


- Host: GitHub

- URL: https://github.com/bshall/tacotron

- Owner: bshall

- License: mit

- Created: 2020-11-02T13:48:16.000Z (over 4 years ago)

- Default Branch: main

- Last Pushed: 2020-12-02T15:30:22.000Z (about 4 years ago)

- Last Synced: 2025-01-31T19:11:11.382Z (21 days ago)

- Topics: attention-mechanism, pytorch, speech-synthesis, tacotron, text-to-speech, tts

- Language: Python

- Homepage: https://bshall.github.io/Tacotron/

- Size: 1.01 MB

- Stars: 113

- Watchers: 2

- Forks: 24

- Open Issues: 6


Metadata Files:

- Readme: README.md

- License: LICENSE


README

# Tacotron with Location Relative Attention

A PyTorch implementation of [Location-Relative Attention Mechanisms For Robust Long-Form Speech Synthesis](https://arxiv.org/abs/1910.10288). Audio samples can be found [here](https://bshall.github.io/Tacotron/), and a Colab demo is available [here](https://colab.research.google.com/github/bshall/Tacotron/blob/main/tacotron-demo.ipynb).

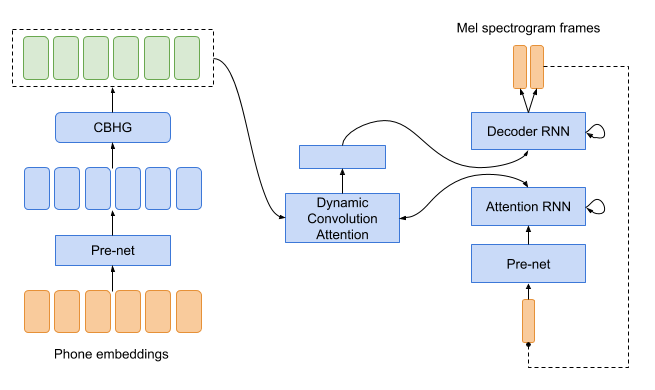

Fig 1: Tacotron (with Dynamic Convolution Attention).

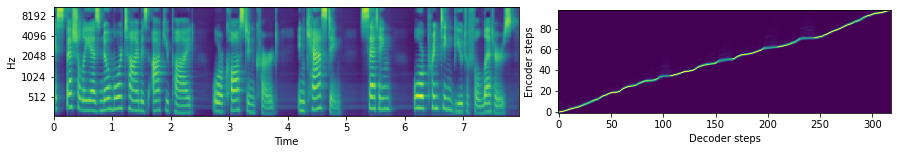

Fig 2: Example Mel-spectrogram and attention plot.
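Fig 1 names Dynamic Convolution Attention (DCA) as the attention mechanism. As described in the paper, DCA is purely location-based. Its attention energies come from static convolution filters and query-dependent ("dynamic") filters applied to the previous alignment. A fixed, causal prior filter is also applied to that alignment, and its log output is added to the energies so that attention tends to stay put or move forward. The sketch below illustrates only that prior term, in plain Python. It uses beta-binomial taps of length 11 with alpha = 0.1 and beta = 0.9. These are the values recalled from the paper, so treat them as assumptions and check the paper or this repo's model code before relying on them. The function names are hypothetical.

```python
import math


def beta_binomial_pmf(n, alpha, beta):
    """Probabilities P(k), k = 0..n, of a beta-binomial(n, alpha, beta) distribution."""
    def log_beta(a, b):
        return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)

    pmf = []
    for k in range(n + 1):
        log_choose = math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
        log_p = log_choose + log_beta(k + alpha, n - k + beta) - log_beta(alpha, beta)
        pmf.append(math.exp(log_p))
    return pmf


def prior_energies(prev_alignment, taps, floor=1e-6):
    """Log of a causal filter applied to the previous alignment.

    Tap k moves attention mass from encoder step j - k to step j, so mass can
    only stay where it is (k = 0) or move forward (k > 0), never backward.
    """
    energies = []
    for j in range(len(prev_alignment)):
        total = sum(
            taps[k] * prev_alignment[j - k]
            for k in range(len(taps))
            if j - k >= 0
        )
        # Floor before the log so positions the prior rules out stay finite.
        energies.append(math.log(max(total, floor)))
    return energies


if __name__ == "__main__":
    taps = beta_binomial_pmf(n=10, alpha=0.1, beta=0.9)  # 11 taps (assumed values)
    prev = [0.0] * 20
    prev[2] = 1.0  # all attention currently on encoder step 2
    prior = prior_energies(prev, taps)
    print([round(p, 2) for p in prior])
```

With these parameters most of the filter's mass sits on tap 0, with a small tail toward later taps. The prior is therefore highest at the current position, lower a few steps ahead, and floored everywhere behind it or beyond the filter's reach.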

## Quick Start

Ensure you have Python 3.6 or greater and PyTorch 1.7 or greater installed. Then install this package (along with the [univoc vocoder](https://github.com/bshall/UniversalVocoding)):

```
pip install tacotron univoc
```
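You can check the version requirements above before installing. The helper below is hypothetical and not part of either package. It compares version strings as integer tuples, so that, for example, `1.10` counts as newer than `1.7`, which a plain string comparison gets wrong.

```python
import re
import sys


def parse_version(version):
    """Turn strings like '1.7.1+cu110' or '1.8.0a0' into comparable integer tuples.

    Pre-release suffixes such as 'a0' are ignored, which is good enough for a
    quick minimum-version check.
    """
    release = version.split("+")[0]  # drop local build tags such as '+cu110'
    parts = []
    for piece in release.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    # Pad so that '1.7' and '1.7.0' compare as equal.
    parts += [0] * (3 - len(parts))
    return tuple(parts)


def meets_minimum(version, minimum):
    return parse_version(version) >= parse_version(minimum)


if __name__ == "__main__":
    python_version = ".".join(str(v) for v in sys.version_info[:3])
    print("Python", python_version, "ok" if meets_minimum(python_version, "3.6") else "too old")
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed")
    else:
        status = "ok" if meets_minimum(str(torch.__version__), "1.7") else "too old"
        print("PyTorch", torch.__version__, status)
```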

## Example Usage

```python
import torch
import soundfile as sf
from univoc import Vocoder
from tacotron import load_cmudict, text_to_id, Tacotron

# download pretrained weights for the vocoder (and optionally move to GPU)
vocoder = Vocoder.from_pretrained(
    "https://github.com/bshall/UniversalVocoding/releases/download/v0.2/univoc-ljspeech-7mtpaq.pt"
).cuda()

# download pretrained weights for tacotron (and optionally move to GPU)
tacotron = Tacotron.from_pretrained(
    "https://github.com/bshall/Tacotron/releases/download/v0.1/tacotron-ljspeech-yspjx3.pt"
).cuda()

# load cmudict and add pronunciation of PyTorch
cmudict = load_cmudict()
cmudict["PYTORCH"] = "P AY1 T AO2 R CH"

text = "A PyTorch implementation of Location-Relative Attention Mechanisms For Robust Long-Form Speech Synthesis."

# convert text to phone ids (a batch of one, on the same device as the models)
x = torch.LongTensor(text_to_id(text, cmudict)).unsqueeze(0).cuda()

# synthesize audio
with torch.no_grad():
    mel, _ = tacotron.generate(x)
    wav, sr = vocoder.generate(mel.transpose(1, 2))

# save output
sf.write("location_relative_attention.wav", wav, sr)
```
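The `cmudict["PYTORCH"]` line supplies a pronunciation for a word that CMUdict presumably lacks, because the text is converted to phones by dictionary lookup. This README does not document how the package's own `text_to_id` works. The following is therefore only a hypothetical, simplified illustration of the general idea. The symbol table `SYMBOLS`, the `_unk` fallback and the `words_to_ids` function are invented here and will not match the package's real vocabulary or ids.

```python
import re

# Hypothetical symbol table: padding, an unknown-word marker, a word boundary,
# two punctuation marks and just the ARPAbet phones (with stress digits) needed
# for "PyTorch". This is NOT the tacotron package's vocabulary.
SYMBOLS = ["_pad", "_unk", " ", ".", ",", "AY1", "AO2", "CH", "P", "R", "T"]
SYMBOL_TO_ID = {s: i for i, s in enumerate(SYMBOLS)}


def words_to_ids(text, pronunciations):
    """Look each word up in a CMUdict-style mapping and emit one id per phone."""
    ids = []
    for token in re.findall(r"[A-Za-z']+|[.,]", text):
        if token in (".", ","):
            ids.append(SYMBOL_TO_ID[token])
            continue
        if ids:
            ids.append(SYMBOL_TO_ID[" "])  # word boundary between tokens
        phones = pronunciations.get(token.upper())
        if phones is None:
            # Word missing from the dictionary: no pronunciation to emit.
            ids.append(SYMBOL_TO_ID["_unk"])
        else:
            # Raises KeyError for phones outside this toy symbol table.
            ids.extend(SYMBOL_TO_ID[p] for p in phones.split())
    return ids


if __name__ == "__main__":
    pron = {"PYTORCH": "P AY1 T AO2 R CH"}
    print(words_to_ids("PyTorch.", pron))
```

Without the `PYTORCH` entry, the word would collapse to a single unknown symbol in this toy version. That is why adding a pronunciation for out-of-vocabulary words is worthwhile.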

## Train from Scratch

1. Clone the repo:

```
git clone https://github.com/bshall/Tacotron
cd ./Tacotron
```

2. Install requirements:

```
pipenv install
```

3. Download and extract the [LJ-Speech dataset](https://keithito.com/LJ-Speech-Dataset/):

```
wget https://data.keithito.com/data/speech/LJSpeech-1.1.tar.bz2
tar -xvjf LJSpeech-1.1.tar.bz2
```

4. Download the train split [here](https://github.com/bshall/Tacotron/releases/tag/v0.1) and extract it into the root directory of the repo.

5. Extract Mel spectrograms and preprocess audio:

```
pipenv run python preprocess.py path/to/LJSpeech-1.1 datasets/LJSpeech-1.1
```

```
usage: preprocess.py [-h] in_dir out_dir

Preprocess an audio dataset.

positional arguments:
  in_dir      Path to the dataset directory
  out_dir     Path to the output directory

optional arguments:
  -h, --help  show this help message and exit
```

6. Train the model:

```
pipenv run python train.py ljspeech path/to/LJSpeech-1.1/metadata.csv datasets/LJSpeech-1.1
```

```
usage: train.py [-h] [--resume RESUME] checkpoint_dir text_path dataset_dir

Train Tacotron with dynamic convolution attention.

positional arguments:
  checkpoint_dir   Path to the directory where model checkpoints will be saved
  text_path        Path to the dataset transcripts
  dataset_dir      Path to the preprocessed data directory

optional arguments:
  -h, --help       show this help message and exit
  --resume RESUME  Path to the checkpoint to resume from
```
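The `text_path` argument of `train.py` points at LJSpeech's `metadata.csv`. That file holds one clip per line, with three `|`-separated fields: clip ID, raw transcript, and normalized transcript (numbers, abbreviations and the like expanded into words). The reader below is a minimal sketch that assumes only that layout. It is not taken from `train.py`, and `read_ljspeech_metadata` is a hypothetical name.

```python
import csv
import io


def read_ljspeech_metadata(lines):
    """Yield (clip_id, text) pairs from pipe-separated LJSpeech metadata lines.

    Uses the normalized transcript (third field) and falls back to the raw
    transcript (second field) if the normalized one is missing or empty.
    """
    # QUOTE_NONE keeps literal quote characters inside transcripts intact.
    reader = csv.reader(lines, delimiter="|", quoting=csv.QUOTE_NONE)
    for row in reader:
        if not row:
            continue  # skip blank lines
        clip_id, raw_text = row[0], row[1]
        normalized = row[2] if len(row) > 2 and row[2] else raw_text
        yield clip_id, normalized


if __name__ == "__main__":
    # An invented sample line in the LJSpeech layout (not real dataset content).
    # For the real file, pass an open handle instead:
    #   open("path/to/LJSpeech-1.1/metadata.csv", encoding="utf-8", newline="")
    sample = io.StringIO('LJ000-0000|Dr. Smith said "hi" 2 times.|Doctor Smith said "hi" two times.\n')
    for clip_id, text in read_ljspeech_metadata(sample):
        print(clip_id, text)
```

Turning quoting off matters here. With the csv module's default quoting, a transcript that happens to start with a quote character could be misparsed.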

## Pretrained Models

Pretrained weights for the LJSpeech model are available [here](https://github.com/bshall/Tacotron/releases/tag/v0.1).

## Notable Differences from the Paper

1. Trained using a batch size of 64 on a single GPU (using automatic mixed precision).

2. Used a gradient clipping threshold of 0.05 as it seems to stabilize the alignment with the smaller batch size.

3. Used a different learning rate schedule (again to compensate for the smaller batch size).

4. Used 80-bin (instead of 128-bin) log-Mel spectrograms.
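Item 2 refers to gradient clipping. Assume it means clipping by the global L2 norm of all gradients, which is the behaviour of PyTorch's `torch.nn.utils.clip_grad_norm_`. The arithmetic is then: compute one norm over every gradient and, if it exceeds the threshold, scale all gradients down by threshold / norm. Below is a plain-Python sketch with a hypothetical `clip_by_global_norm` helper.

```python
import math


def clip_by_global_norm(grads, max_norm, eps=1e-6):
    """Rescale gradients so that their combined L2 norm is at most max_norm.

    grads is a list of per-parameter gradient vectors (lists of floats).
    Returns the (possibly rescaled) gradients and the norm before clipping.
    """
    total_norm = math.sqrt(sum(g * g for grad in grads for g in grad))
    scale = max_norm / (total_norm + eps)
    if scale < 1.0:
        # Every gradient is multiplied by the same factor, so the update
        # direction is unchanged; only its length shrinks.
        grads = [[g * scale for g in grad] for grad in grads]
    return grads, total_norm


if __name__ == "__main__":
    clipped, norm = clip_by_global_norm([[3.0, 0.0], [0.0, 4.0]], max_norm=0.05)
    print(norm, clipped)
```

Item 1 uses automatic mixed precision. In that setting, PyTorch's documented recipe is to call `scaler.unscale_(optimizer)` before `clip_grad_norm_`, so that the 0.05 threshold is compared against the true gradients rather than the loss-scaled ones. This README does not say whether `train.py` follows exactly that order.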

## Acknowledgements

- https://github.com/keithito/tacotron

- https://github.com/PetrochukM/PyTorch-NLP

- https://github.com/fatchord/WaveRNN