https://github.com/bytedance/ui-tars-desktop

A GUI Agent application based on UI-TARS (a Vision-Language Model) that lets you control your computer using natural language.


- Host: GitHub

- URL: https://github.com/bytedance/ui-tars-desktop

- Owner: bytedance

- License: apache-2.0

- Created: 2025-01-19T09:04:43.000Z (9 months ago)

- Default Branch: main

- Last Pushed: 2025-05-08T10:21:00.000Z (5 months ago)

- Last Synced: 2025-05-09T02:45:01.719Z (5 months ago)

- Topics: agent, browser-use, computer-use, electron, gui-agents, mcp, mcp-server, vision, vite, vlm

- Language: TypeScript

- Homepage: https://agent-tars.com

- Size: 43.8 MB

- Stars: 13,258

- Watchers: 123

- Forks: 1,072

- Open Issues: 145


Metadata Files:

- Readme: README.md

- Contributing: CONTRIBUTING.md

- License: LICENSE

- Code of conduct: CODE_OF_CONDUCT.md

- Security: SECURITY.md


README

## Introduction

English | [简体中文](./README.zh-CN.md)


TARS\* is a Multimodal AI Agent stack, currently shipping two projects: [Agent TARS](#agent-tars) and [UI-TARS-desktop](#ui-tars-desktop):

| Agent TARS | UI-TARS-desktop |
| :---: | :---: |
| Agent TARS is a general multimodal AI Agent stack that brings the power of GUI Agent and Vision into your terminal, computer, browser, and product. It primarily ships with a CLI and Web UI. It aims to provide a workflow that is closer to human-like task completion through cutting-edge multimodal LLMs and seamless integration with various real-world MCP tools. | UI-TARS Desktop is a desktop application that provides a native GUI Agent based on the UI-TARS model. It primarily ships local and remote computer operators as well as browser operators. |

## Table of Contents

- [News](#news)
- [Agent TARS](#agent-tars)
  - [Showcase](#showcase)
  - [Core Features](#core-features)
  - [Quick Start](#quick-start)
  - [Documentation](#documentation)
- [UI-TARS Desktop](#ui-tars-desktop)
  - [Showcase](#showcase-1)
  - [Features](#features)
  - [Quick Start](#quick-start-1)
- [Contributing](#contributing)
- [License](#license)
- [Citation](#citation)

## News

- **\[2025-06-25\]** We released Agent TARS Beta and the Agent TARS CLI. See [Introducing Agent TARS Beta](https://agent-tars.com/blog/2025-06-25-introducing-agent-tars-beta.html): a multimodal AI agent that aims to explore a way of working that is closer to human-like task completion through rich multimodal capabilities (such as GUI Agent and Vision) and seamless integration with various real-world tools.

- **\[2025-06-12\]** - 🎁 We are thrilled to announce the release of UI-TARS Desktop v0.2.0! This update introduces two powerful new features, **Remote Computer Operator** and **Remote Browser Operator**, both completely free. No configuration is required: simply click to remotely control any computer or browser, and experience a new level of convenience and intelligence.

- **\[2025-04-17\]** - 🎉 We're thrilled to announce the release of the new UI-TARS Desktop application v0.1.0, featuring a redesigned Agent UI. The application enhances the computer-use experience, introduces new browser operation features, and supports [the advanced UI-TARS-1.5 model](https://seed-tars.com/1.5) for improved performance and precise control.

- **\[2025-02-20\]** - 📦 Introduced the [UI TARS SDK](./docs/sdk.md), a powerful cross-platform toolkit for building GUI automation agents.

- **\[2025-01-23\]** - 🚀 We updated the **[Cloud Deployment](./docs/deployment.md#cloud-deployment)** section in the Chinese version, [GUI Model Deployment Tutorial](https://bytedance.sg.larkoffice.com/docx/TCcudYwyIox5vyxiSDLlgIsTgWf#U94rdCxzBoJMLex38NPlHL21gNb), with new information about the ModelScope platform. You can now use the ModelScope platform for deployment.

## Agent TARS

Agent TARS is a general multimodal AI Agent stack that brings the power of GUI Agent and Vision into your terminal, computer, browser, and product.

It primarily ships with a CLI and Web UI.

It aims to provide a workflow that is closer to human-like task completion through cutting-edge multimodal LLMs and seamless integration with various real-world MCP tools.

### Showcase

```

Please help me book the earliest flight from San Jose to New York on September 1st and the last return flight on September 6th on Priceline

```

https://github.com/user-attachments/assets/772b0eef-aef7-4ab9-8cb0-9611820539d8

| Booking Hotel | Generate Chart with extra MCP Servers |
| :---: | :---: |
| Instruction: I am in Los Angeles from September 1st to September 6th, with a budget of $5,000. Please help me book a Ritz-Carlton hotel closest to the airport on booking.com and compile a transportation guide for me | Instruction: Draw me a chart of Hangzhou's weather for one month |

For more use cases, please check out [#842](https://github.com/bytedance/UI-TARS-desktop/issues/842).

### Core Features

- 🖱️ **One-Click Out-of-the-box CLI** - Supports both **headful** [Web UI](https://agent-tars.com/guide/basic/web-ui.html) and **headless** [server](https://agent-tars.com/guide/advanced/server.html) [execution](https://agent-tars.com/guide/basic/cli.html).

- 🌐 **Hybrid Browser Agent** - Control browsers using [GUI Agent](https://agent-tars.com/guide/basic/browser.html#visual-grounding), [DOM](https://agent-tars.com/guide/basic/browser.html#dom), or a hybrid strategy.

- 🔄 **Event Stream** - A protocol-driven Event Stream drives [Context Engineering](https://agent-tars.com/beta#context-engineering) and the [Agent UI](https://agent-tars.com/blog/2025-06-25-introducing-agent-tars-beta.html#easy-to-build-applications).

- 🧰 **MCP Integration** - The kernel is built on MCP and also supports mounting [MCP Servers](https://agent-tars.com/guide/basic/mcp.html) to connect to real-world tools.
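
To make the Event Stream idea more concrete, here is a minimal sketch of an append-only, typed event log that both a UI renderer and a context builder can consume. Every name in it (`AgentEvent`, `EventStream`, `buildContext`, the `weather` tool) is a hypothetical illustration and not an Agent TARS API; the actual protocol is described in the linked documentation.

```typescript
// Hypothetical event shapes; these do not mirror the real Agent TARS protocol.
type AgentEvent =
  | { type: "user_message"; content: string }
  | { type: "tool_call"; tool: string; input: string }
  | { type: "tool_result"; tool: string; output: string }
  | { type: "assistant_message"; content: string };

type Listener = (event: AgentEvent) => void;

class EventStream {
  private readonly events: AgentEvent[] = [];
  private readonly listeners: Listener[] = [];

  // Register a consumer (for example a UI renderer) for future events.
  subscribe(listener: Listener): void {
    this.listeners.push(listener);
  }

  // Append an event to the log and notify every subscriber in order.
  append(event: AgentEvent): void {
    this.events.push(event);
    for (const listener of this.listeners) listener(event);
  }

  // Derive a model context from the log by keeping the most recent events.
  buildContext(maxEvents: number): AgentEvent[] {
    return maxEvents > 0 ? this.events.slice(-maxEvents) : [];
  }
}

const stream = new EventStream();
const rendered: string[] = [];
stream.subscribe((event) => rendered.push(event.type));

stream.append({ type: "user_message", content: "Draw me a chart of Hangzhou's weather for one month" });
stream.append({ type: "tool_call", tool: "weather", input: "Hangzhou" });
stream.append({ type: "tool_result", tool: "weather", output: "(tool output)" });

console.log(rendered.join(", "));
console.log(stream.buildContext(2).map((event) => event.type).join(", "));
```

The point of the sketch is that the UI and the context builder read from the same log, so neither needs to know how the other works.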

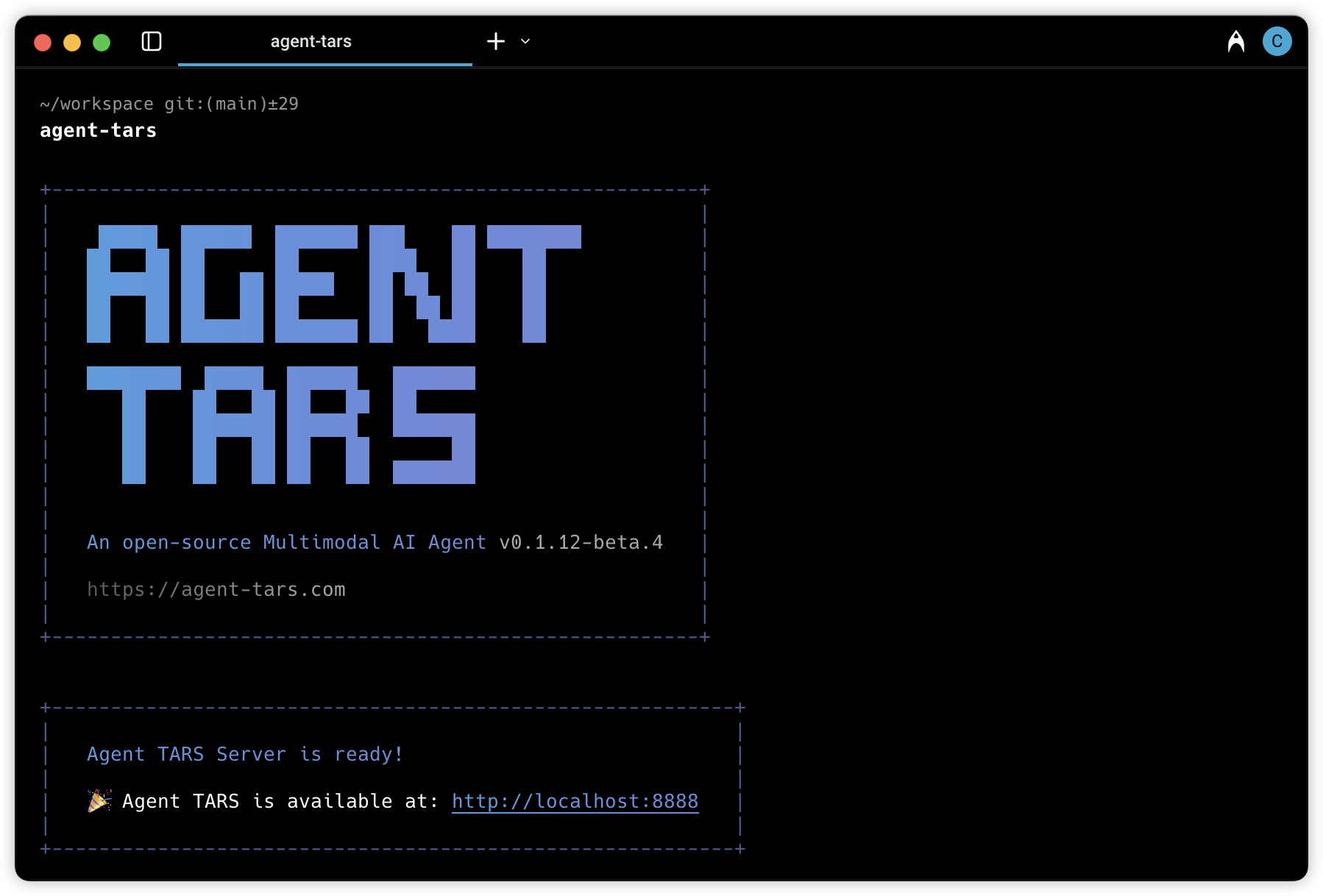

### Quick Start

```bash
# Launch with `npx`.
npx @agent-tars/cli@latest

# Install globally (requires Node.js >= 22).
npm install @agent-tars/cli@latest -g

# Run with your preferred model provider.
agent-tars --provider volcengine --model doubao-1-5-thinking-vision-pro-250428 --apiKey your-api-key
agent-tars --provider anthropic --model claude-3-7-sonnet-latest --apiKey your-api-key
```

Visit the comprehensive [Quick Start](https://agent-tars.com/guide/get-started/quick-start.html) guide for detailed setup instructions.
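
If you launch the CLI from a script, it can help to assemble the arguments in one place. The sketch below uses only the `--provider`, `--model`, and `--apiKey` flags shown in the commands above; the `AgentTarsOptions` type and the `buildAgentTarsArgs` helper are hypothetical and are not part of `@agent-tars/cli`.

```typescript
// Hypothetical helper types; not exported by @agent-tars/cli.
interface AgentTarsOptions {
  provider: string;
  model: string;
  apiKey: string;
}

// Build the argument list for the `agent-tars` binary using only the
// flags that appear in the Quick Start commands.
function buildAgentTarsArgs(options: AgentTarsOptions): string[] {
  if (!options.apiKey) {
    throw new Error("apiKey must not be empty");
  }
  return [
    "--provider", options.provider,
    "--model", options.model,
    "--apiKey", options.apiKey,
  ];
}

const cliArgs = buildAgentTarsArgs({
  provider: "anthropic",
  model: "claude-3-7-sonnet-latest",
  apiKey: "your-api-key",
});

// prints "agent-tars --provider anthropic --model claude-3-7-sonnet-latest --apiKey your-api-key"
console.log(["agent-tars", ...cliArgs].join(" "));
```

The resulting array can be handed to a process launcher such as Node's `child_process.spawn("agent-tars", cliArgs)`, which avoids shell-quoting problems with the API key.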

### Documentation

> 🌟 **Explore Agent TARS Universe** 🌟

| Category | Resource Link | Description |
| --- | --- | --- |
| 🏠 Central Hub | | Your gateway to the Agent TARS ecosystem |
| 📚 Quick Start | | Zero to hero in 5 minutes |
| 🚀 What's New | | Discover cutting-edge features & vision |
| 🛠️ Developer Zone | | Master every command & feature |
| 🎯 Showcase | | View use cases built by the official team and the community |
| 🔧 Reference | | Complete technical reference |

## UI-TARS Desktop

UI-TARS Desktop is a native GUI agent for your local computer, driven by [UI-TARS](https://github.com/bytedance/UI-TARS) and Seed-1.5-VL/1.6 series models.

📑 Paper | 🤗 Hugging Face Models | 🫨 Discord | 🤖 ModelScope

🖥️ Desktop Application | 👓 Midscene (use in browser)

### Showcase

| Instruction | Local Operator | Remote Operator |
| :---: | :---: | :---: |
| Please help me open the autosave feature of VS Code and delay AutoSave operations for 500 milliseconds in the VS Code setting. | | |
| Could you help me check the latest open issue of the UI-TARS-Desktop project on GitHub? | | |

### Features

- 🤖 Natural language control powered by Vision-Language Model

- 🖥️ Screenshot and visual recognition support

- 🎯 Precise mouse and keyboard control

- 💻 Cross-platform support (Windows/macOS/Browser)

- 🔄 Real-time feedback and status display

- 🔐 Private and secure - fully local processing
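
The features above suggest a perceive-decide-act loop: take a screenshot, ask a vision-language model for the next action, then execute it with the mouse or keyboard. The sketch below illustrates that loop under stated assumptions. The `Action`, `Operator`, and `Model` types and the `runGuiAgent` function are hypothetical and do not mirror the UI-TARS SDK; the model and operator are stubs so the example runs without a real desktop.

```typescript
// Hypothetical types for illustration; not the UI-TARS SDK API.
type Action =
  | { kind: "click"; x: number; y: number }
  | { kind: "type"; text: string }
  | { kind: "finished" };

interface Screenshot { width: number; height: number; base64: string }

interface Operator {
  screenshot(): Promise<Screenshot>;
  execute(action: Action): Promise<void>;
}

// Stands in for a vision-language model call that proposes the next action.
type Model = (instruction: string, shot: Screenshot, history: Action[]) => Promise<Action>;

async function runGuiAgent(
  instruction: string,
  operator: Operator,
  model: Model,
  maxSteps = 10,
): Promise<Action[]> {
  const history: Action[] = [];
  for (let step = 0; step < maxSteps; step++) {
    const shot = await operator.screenshot();               // perceive
    const action = await model(instruction, shot, history); // decide
    history.push(action);
    if (action.kind === "finished") break;
    await operator.execute(action);                         // act
  }
  return history;
}

// Stub operator and scripted model so the loop can run anywhere.
const executed: Action[] = [];
const fakeOperator: Operator = {
  screenshot: async () => ({ width: 1920, height: 1080, base64: "" }),
  execute: async (action) => { executed.push(action); },
};
const done: Action = { kind: "finished" };
const scripted: Action[] = [
  { kind: "click", x: 100, y: 200 },
  { kind: "type", text: "autosave" },
  done,
];
const fakeModel: Model = async (_instruction, _shot, history) =>
  scripted[history.length] ?? done;

runGuiAgent("Open the autosave feature of VS Code", fakeOperator, fakeModel)
  .then((history) => console.log(history.map((a) => a.kind).join(" -> ")));
```

The `maxSteps` bound is a design choice in this sketch: it keeps a model that never emits a finishing action from looping forever.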

### Quick Start

See [Quick Start](./docs/quick-start.md)

## Contributing

See [CONTRIBUTING.md](./CONTRIBUTING.md).

## License

This project is licensed under the Apache License 2.0.

## Citation

If you find our paper and code useful in your research, please consider giving us a star :star: and a citation :pencil:

```BibTeX
@article{qin2025ui,
  title={UI-TARS: Pioneering Automated GUI Interaction with Native Agents},
  author={Qin, Yujia and Ye, Yining and Fang, Junjie and Wang, Haoming and Liang, Shihao and Tian, Shizuo and Zhang, Junda and Li, Jiahao and Li, Yunxin and Huang, Shijue and others},
  journal={arXiv preprint arXiv:2501.12326},
  year={2025}
}
```