https://github.com/chriscummins/clgen

Deep learning program generator


- Host: GitHub

- URL: https://github.com/chriscummins/clgen

- Owner: ChrisCummins

- License: gpl-3.0

- Created: 2016-09-10T18:29:20.000Z (over 9 years ago)

- Default Branch: master

- Last Pushed: 2023-12-04T22:16:58.000Z (about 2 years ago)

- Last Synced: 2025-03-30T18:22:22.577Z (11 months ago)

- Topics: benchmarking, big-data, deep-learning, gpu, lstm, machine-learning, neural-network, opencl, synthetic-programs

- Language: Python

- Homepage: https://chriscummins.cc/pub/2017-cgo.pdf

- Size: 8.75 MB

- Stars: 106

- Watchers: 7

- Forks: 30

- Open Issues: 17


Metadata Files:

- Readme: README.md

- Contributing: CONTRIBUTING.md

- License: LICENSE


README


Maintainer: fivosts. See BenchPress for a more recent version. BenchPress also supports CLgen models.

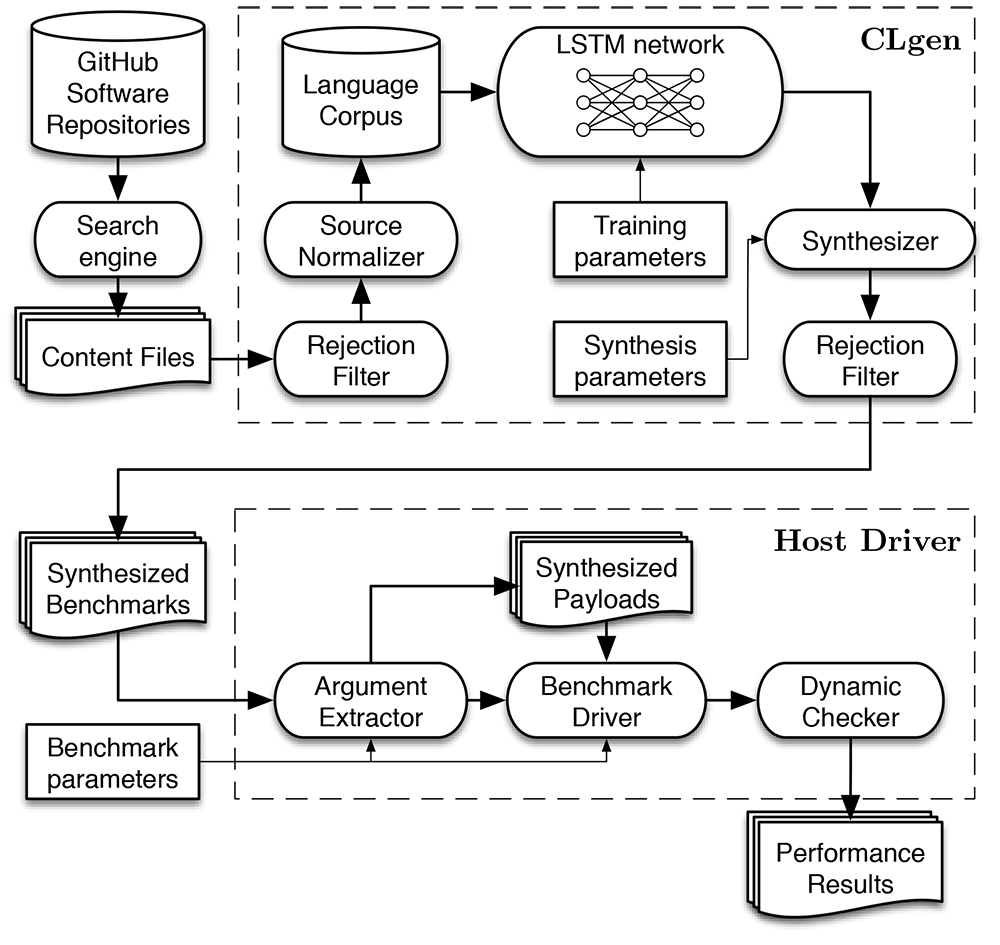

**CLgen** is an open source application for generating runnable programs using deep learning. CLgen *learns* to program using neural networks that model the semantics and usage of program fragments drawn from large volumes of code, generating many-core OpenCL programs that are representative of, but *distinct* from, the programs it learns from.
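CLgen's models are LSTM networks, and training them needs a real corpus and real compute. As a much smaller illustration of the same learn-then-sample loop, the sketch below swaps in a character-level bigram model: it counts which character follows which in a toy corpus, then generates new text one character at a time from those counts. `CORPUS`, `train_bigram`, and `sample` are hypothetical names for this example, not part of CLgen.

```python
import random
from collections import Counter, defaultdict

# Hypothetical toy corpus; a real CLgen corpus holds many OpenCL kernels.
CORPUS = [
    "kernel void A(global float* a) { a[0] = 1.0f; }",
    "kernel void B(global int* b) { b[1] = 2; }",
]


def train_bigram(corpus):
    """Count, for every character, how often each other character follows it."""
    counts = defaultdict(Counter)
    for text in corpus:
        for prev, nxt in zip(text, text[1:]):
            counts[prev][nxt] += 1
    return counts


def sample(counts, seed, max_len, rng):
    """Extend `seed` one character at a time using the learned follow-on counts."""
    out = list(seed)
    while len(out) < max_len:
        options = counts.get(out[-1])
        if not options:  # this character was never followed by anything: stop
            break
        chars = list(options)
        weights = [options[c] for c in chars]
        out.append(rng.choices(chars, weights=weights)[0])
    return "".join(out)


model = train_bigram(CORPUS)
print(sample(model, "kernel", 60, random.Random(0)))
```

Because each generated character depends only on the one before it, the output can splice fragments of the two kernels together rather than reproducing either. An LSTM conditions on far longer context, which is what lets a model like CLgen's keep generated programs syntactically coherent.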

## Getting Started

First check out [INSTALL.md](/INSTALL.md) for instructions on setting up the build environment.

Then build CLgen using:

```sh
$ bazel build -c opt //deeplearning/clgen
```

Use our tiny example dataset to train and sample your first CLgen model:

```sh
$ bazel-bin/deeplearning/clgen/clgen \
    --config $PWD/deeplearning/clgen/tests/data/tiny/config.pbtxt
```

### What next?

CLgen is a tool for generating source code. How you use it will depend entirely on what you want to do with the generated code. As a first port of call, I'd recommend checking out how CLgen is configured. CLgen is configured through a handful of [protocol buffers](https://developers.google.com/protocol-buffers/) defined in [//deeplearning/clgen/proto](/deeplearning/clgen/proto).

The [clgen.Instance](/deeplearning/clgen/proto/clgen.proto) message type combines a [clgen.Model](/deeplearning/clgen/proto/model.proto), which defines how models are trained, and a [clgen.Sampler](/deeplearning/clgen/proto/sampler.proto), which defines how new programs are generated. You will probably want to assemble a large corpus of source code to train a new model on; I have [tools](/datasets/github/scrape_repos) which may help with that. You may also want a means to execute arbitrary generated code; as it happens, I have [tools](/gpu/cldrive) for that too. :-) Thought of a new use case? I'd love to hear about it!
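The `clgen.Sampler` message controls how new programs are drawn from a trained model. The sketch below illustrates two ideas such a sampler can use: temperature-scaled sampling over the model's next-character scores, and a stop rule that ends a sample once its curly braces balance, which is one simple way to tell that an OpenCL kernel body is complete. Both are illustrative assumptions rather than CLgen's implementation, and `apply_temperature`, `is_complete`, `sample_until_complete`, and `toy_logits` are hypothetical names.

```python
import math
import random


def apply_temperature(logits, temperature):
    """Softmax of logits / temperature; a lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def is_complete(text):
    """Hypothetical stop rule: a '{' has appeared and the '{' minus '}' count is back to zero."""
    depth = 0
    seen_open = False
    for ch in text:
        if ch == "{":
            depth += 1
            seen_open = True
        elif ch == "}":
            depth -= 1
    return seen_open and depth == 0


def sample_until_complete(next_logits, vocab, start_text, temperature, max_len, rng):
    """Append sampled characters until is_complete() holds or max_len is reached."""
    text = start_text
    while len(text) < max_len and not is_complete(text):
        probs = apply_temperature(next_logits(text), temperature)
        text += rng.choices(vocab, weights=probs)[0]
    return text


# Stand-in for a trained model: strongly favours the next character of TARGET.
TARGET = "kernel void A() { }"
VOCAB = sorted(set(TARGET))


def toy_logits(text):
    want = TARGET[len(text)] if len(text) < len(TARGET) else "}"
    return [10.0 if c == want else 0.0 for c in VOCAB]


print(sample_until_complete(toy_logits, VOCAB, "kernel", 0.5, 100, random.Random(1)))
```

Lowering `temperature` lets the most likely character dominate; raising it flattens the distribution and yields more varied programs, which are typically also more often broken. The `max_len` cap guarantees the loop ends even if the braces never balance.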

## Resources

- Presentation slides:

- Publication ["Synthesizing Benchmarks for Predictive Modeling"](https://github.com/ChrisCummins/paper-synthesizing-benchmarks) (CGO'17).

- [Jupyter notebook](https://github.com/ChrisCummins/paper-synthesizing-benchmarks/blob/master/code/Paper.ipynb) containing the experimental evaluation of an early version of CLgen.

- My documentation sucks. Don't be afraid to get stuck in and start [reading the code!](deeplearning/clgen/clgen.py)

## License

Copyright 2016-2020 Chris Cummins.

Released under the terms of the GPLv3 license. See [LICENSE](/deeplearning/clgen/LICENSE) for details.