https://github.com/curt-park/mnist-fastapi-celery-triton

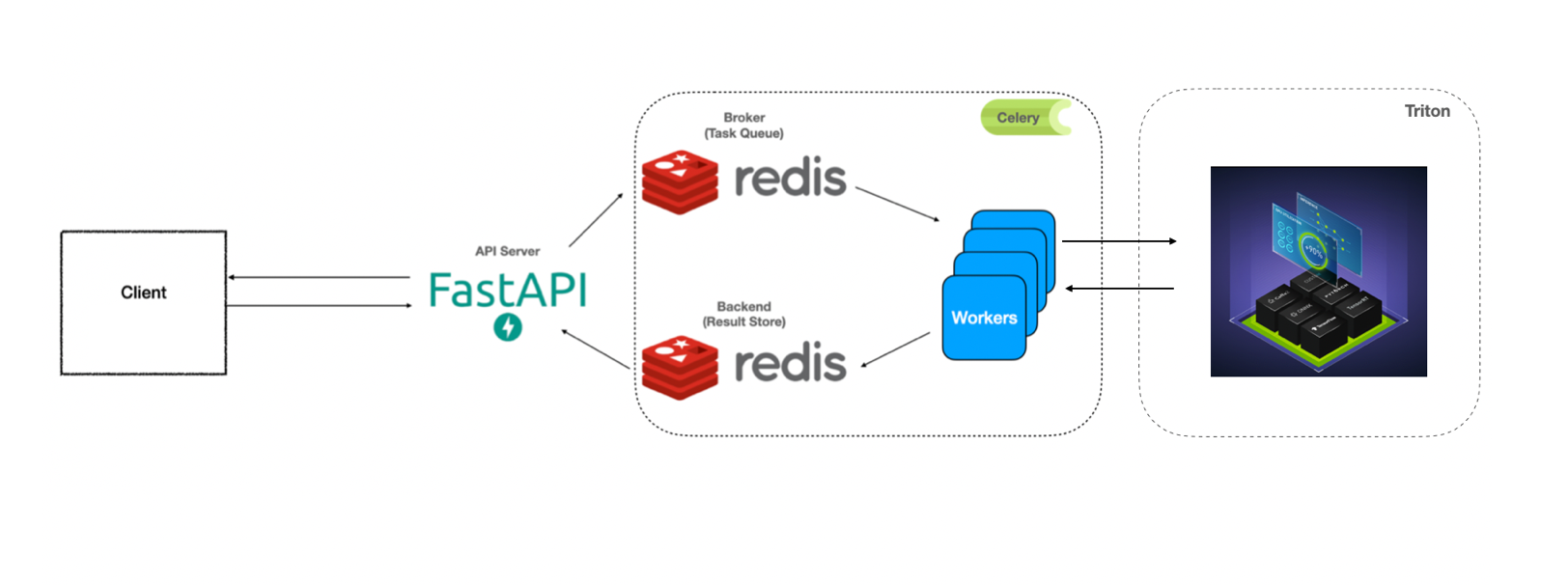

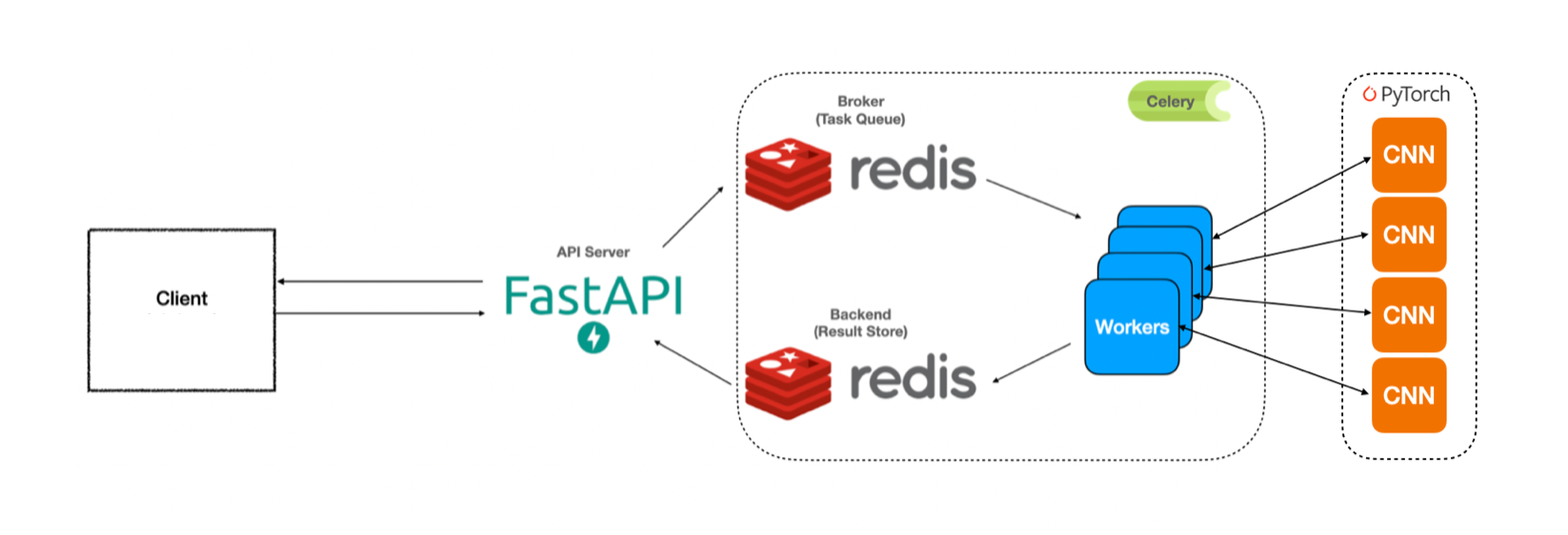

Simple example of FastAPI + Celery + Triton for benchmarking

- Host: GitHub

- URL: https://github.com/curt-park/mnist-fastapi-celery-triton

- Owner: Curt-Park

- License: MIT

- Created: 2022-03-01T05:38:55.000Z (over 3 years ago)

- Default Branch: main

- Last Pushed: 2022-08-11T02:04:47.000Z (about 3 years ago)

- Last Synced: 2025-04-13T20:15:50.286Z (6 months ago)

- Language: Python

- Size: 92.8 KB

- Stars: 63

- Watchers: 2

- Forks: 7

- Open Issues: 0

Metadata Files:

- Readme: README.md

- License: LICENSE

Follow-up work:

- https://github.com/Curt-Park/mnist-fastapi-aio-triton

Previous work:

- https://github.com/Curt-Park/producer-consumer-fastapi-celery

- https://github.com/Curt-Park/triton-inference-server-practice (00-quick-start)

# Benchmark FastAPI + Celery with / without Triton

## with Triton Server

## without Triton Server

## Benchmark Results

See [Benchmark Results](/benchmark)

## Preparation

#### 1. Setup packages

Install [Anaconda](https://docs.anaconda.com/anaconda/install/index.html) and execute the following commands:

```bash
$ make env        # create a conda environment (needed only once)
$ source init.sh  # activate the env
$ make setup      # set up packages (needed only once)
```

#### 2. Train a CNN model (Recommended on GPU)

```bash
$ source create_model.sh
```

#### 3. Check the created model repository

```bash
$ tree model_repository
model_repository
└── mnist_cnn
    ├── 1
    │   └── model.pt
    └── config.pbtxt

2 directories, 2 files
```
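If `tree` is not installed, the same layout can be checked from Python. The following is a minimal sketch using only the standard library; the function name `check_model_repository` is hypothetical and not part of this repository, and it only checks for the files shown in the tree above.

```python
from pathlib import Path


def check_model_repository(root: str, model_name: str = "mnist_cnn") -> list[str]:
    """Return a list of problems found; an empty list means the layout matches."""
    model_dir = Path(root) / model_name
    problems = []

    # The model configuration sits next to the version directories.
    if not (model_dir / "config.pbtxt").is_file():
        problems.append(f"missing {model_dir / 'config.pbtxt'}")

    # Version directories have numeric names; the tree above shows version "1".
    versions = sorted(p for p in model_dir.glob("*") if p.is_dir() and p.name.isdigit())
    if not versions:
        problems.append(f"no numeric version directory under {model_dir}")

    # Each version directory should hold the exported model file.
    for version in versions:
        if not (version / "model.pt").is_file():
            problems.append(f"missing {version / 'model.pt'}")
    return problems


if __name__ == "__main__":
    for problem in check_model_repository("model_repository"):
        print(problem)
```

An empty result means the directory matches the expected layout; it says nothing about whether `config.pbtxt` or `model.pt` are valid.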

## How to play

#### Server (Option 1 - On your local machine)

Install [Redis](https://redis.io/topics/quickstart) and [Docker](https://docs.docker.com/engine/install/), then run the following commands:

```bash
$ make triton     # run triton server
$ make broker     # run redis broker
$ make worker     # run celery worker
$ make api        # run fastapi server
$ make dashboard  # run dashboard that monitors celery
```
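After starting the services, it can help to confirm that each one accepts TCP connections. Below is a minimal sketch using only the standard library. The ports are taken from the URLs used elsewhere in this README (6379 for Redis, 9000 for Triton, 5555 for the Flower dashboard); the FastAPI port is not stated here, so it is left out. The helper names are hypothetical.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host not resolvable.
        return False


# Ports assumed from the URLs shown in this README.
SERVICES = {"redis broker": 6379, "triton": 9000, "flower dashboard": 5555}


def report(host: str = "localhost") -> dict[str, bool]:
    """Map each service name to whether its port accepts connections."""
    return {name: is_listening(host, port) for name, port in SERVICES.items()}


if __name__ == "__main__":
    for name, up in report().items():
        print(f"{name}: {'up' if up else 'down'}")
```

A successful connection only shows that something is listening on the port, not that the service behind it is healthy.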

#### Server (Option 2 - Docker Compose, available on GPU devices)

Install [Docker](https://docs.docker.com/engine/install/) and [Docker Compose](https://docs.docker.com/compose/install/), then run the following command:

```bash
$ docker-compose up
```

#### [Optional] Additional Triton Servers

You can start up additional Triton servers on other devices.

```bash
$ make triton
```

#### [Optional] Additional Workers

You can start up additional workers on other devices.

```bash
$ export BROKER_URL=redis://redis-broker-ip:6379    # default is localhost
$ export BACKEND_URL=redis://redis-backend-ip:6379  # default is localhost
$ export TRITON_SERVER_URL=triton-server-ip:9000    # default is localhost
$ make worker
```

* NOTE: The worker must run on the same machine as Triton because of the shared memory settings.
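To illustrate how a worker process might pick up these three variables, here is a minimal sketch using only the standard library. The `WorkerSettings` class and `load_worker_settings` function are hypothetical and not taken from this repository. The README only says the defaults point at localhost, so the default ports below are assumed to match the example values above.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkerSettings:
    broker_url: str
    backend_url: str
    triton_server_url: str


def load_worker_settings(env=None) -> WorkerSettings:
    """Read the connection settings, falling back to localhost defaults."""
    env = os.environ if env is None else env
    return WorkerSettings(
        # Default ports are assumptions; only "localhost" is stated in the README.
        broker_url=env.get("BROKER_URL", "redis://localhost:6379"),
        backend_url=env.get("BACKEND_URL", "redis://localhost:6379"),
        triton_server_url=env.get("TRITON_SERVER_URL", "localhost:9000"),
    )


if __name__ == "__main__":
    print(load_worker_settings())
```

Passing a plain dictionary as `env` makes the function easy to exercise without touching the real environment.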

#### Dashboard for Celery (Flower)

http://0.0.0.0:5555/

## Load Test (w/ Locust)

#### Execute Locust

```bash
$ make load         # for load test without Triton
# or
$ make load-triton  # for load test with Triton
```

#### Open http://0.0.0.0:8089

Enter the URL of the API server.
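For a rough idea of what a load test measures, the sketch below sends requests from several threads and summarizes the latencies. It is a standard-library stand-in written for illustration, not Locust and not this repository's load-test script. The `send` callable is a placeholder for whatever request you issue against the API server, since the endpoint is not described here.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def run_load(send: Callable[[], None], n_requests: int, concurrency: int) -> list[float]:
    """Call `send` n_requests times from `concurrency` threads; return latencies in seconds."""

    def timed(_: int) -> float:
        start = time.perf_counter()
        send()
        return time.perf_counter() - start

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        return list(pool.map(timed, range(n_requests)))


def summarize(latencies: list[float]) -> dict[str, float]:
    """Return mean, median, and nearest-rank 95th percentile of the latencies."""
    ordered = sorted(latencies)
    # Nearest-rank index: ceil(0.95 * n) - 1, computed with integer arithmetic.
    p95_index = (95 * len(ordered) + 99) // 100 - 1
    return {
        "mean": statistics.mean(ordered),
        "p50": statistics.median(ordered),
        "p95": ordered[p95_index],
    }


if __name__ == "__main__":
    # Placeholder workload: replace with a real HTTP request to the API server.
    print(summarize(run_load(lambda: time.sleep(0.01), n_requests=50, concurrency=5)))
```

Locust adds user modelling, ramp-up, and a web UI on top of this basic idea, so use it for the actual benchmark.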

## Issue Handling

#### Redis Error 8 connecting localhost:6379. nodename nor servname provided, or not known.

Set the open-file limit in the current shell: `$ ulimit -n 1024`
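The same limit can be inspected or raised from inside a Python process with the standard `resource` module (Unix only). This is a minimal sketch; the function name `ensure_nofile_limit` is hypothetical. Unlike `ulimit -n 1024`, it only raises the soft limit and never lowers it.

```python
import resource


def ensure_nofile_limit(wanted: int = 1024) -> int:
    """Raise the soft open-file limit to at least `wanted` if possible; return the soft limit."""
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)

    # Nothing to do if the limit is already unlimited or high enough.
    if soft == resource.RLIM_INFINITY or soft >= wanted:
        return soft

    # The soft limit cannot exceed the hard limit for an unprivileged process.
    target = wanted if hard == resource.RLIM_INFINITY else min(wanted, hard)
    resource.setrlimit(resource.RLIMIT_NOFILE, (target, hard))
    return resource.getrlimit(resource.RLIMIT_NOFILE)[0]


if __name__ == "__main__":
    print("soft open-file limit:", ensure_nofile_limit(1024))
```

The change applies only to the calling process and its children, just as `ulimit` applies only to the current shell.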

#### Docker's `network_mode=bridge` degrades the network performance.

We recommend using a Linux server if you want to run `docker-compose up`.

## For Developers

```bash
$ make setup-dev  # setup for developers
$ make format     # format scripts
$ make lint       # lint scripts
$ make utest      # run unit tests
$ make cov        # open unit test coverage information
```