Ecosyste.ms: Awesome

An open API service indexing awesome lists of open source software.

https://github.com/davidberenstein1957/crosslingual-coreference

A multi-lingual approach to AllenNLP CoReference Resolution along with a wrapper for spaCy.

https://github.com/davidberenstein1957/crosslingual-coreference

coreference coreference-resolution hacktoberfest natural-language-processing nlp python spacy

Last synced: 25 days ago

JSON representation

A multi-lingual approach to AllenNLP CoReference Resolution along with a wrapper for spaCy.

- Host: GitHub

- URL: https://github.com/davidberenstein1957/crosslingual-coreference

- Owner: davidberenstein1957

- License: mit

- Created: 2022-03-25T14:31:51.000Z (almost 3 years ago)

- Default Branch: main

- Last Pushed: 2024-04-16T13:20:41.000Z (10 months ago)

- Last Synced: 2024-12-22T20:11:06.615Z (about 1 month ago)

- Topics: coreference, coreference-resolution, hacktoberfest, natural-language-processing, nlp, python, spacy

- Language: Python

- Homepage:

- Size: 548 KB

- Stars: 104

- Watchers: 4

- Forks: 18

- Open Issues: 10

-

Metadata Files:

- Readme: README.md

- License: LICENSE

- Citation: CITATION.cff

Awesome Lists containing this project

README

# Crosslingual Coreference

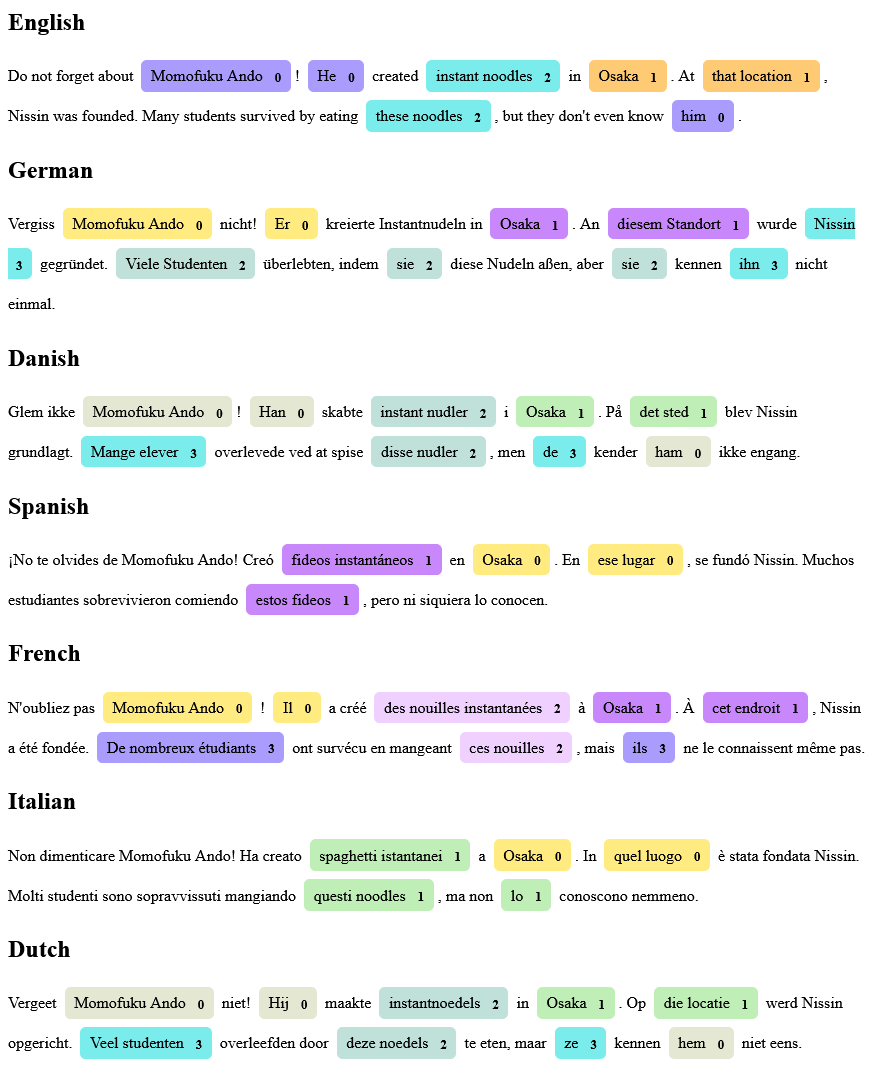

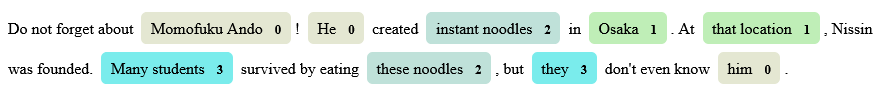

Coreference is amazing but the data required for training a model is very scarce. In our case, the available training for non-English languages also proved to be poorly annotated. Crosslingual Coreference, therefore, uses the assumption a trained model with English data and cross-lingual embeddings should work for languages with similar sentence structures.

[](https://github.com/pandora-intelligence/crosslingual-coreference/releases)

[](https://pypi.org/project/crosslingual-coreference/)

[](https://pypi.org/project/crosslingual-coreference/)

[](https://github.com/ambv/black)

# Install

```

pip install crosslingual-coreference

```

# Quickstart

```python

from crosslingual_coreference import Predictor

text = (

"Do not forget about Momofuku Ando! He created instant noodles in Osaka. At"

" that location, Nissin was founded. Many students survived by eating these"

" noodles, but they don't even know him."

)

# choose minilm for speed/memory and info_xlm for accuracy

predictor = Predictor(

language="en_core_web_sm", device=-1, model_name="minilm"

)

print(predictor.predict(text)["resolved_text"])

print(predictor.pipe([text])[0]["resolved_text"])

# Note you can also get 'cluster_heads' and 'clusters'

# Output

#

# Do not forget about Momofuku Ando!

# Momofuku Ando created instant noodles in Osaka.

# At Osaka, Nissin was founded.

# Many students survived by eating instant noodles,

# but Many students don't even know Momofuku Ando.

```

## Models

As of now, there are two models available "spanbert", "info_xlm", "xlm_roberta", "minilm", which scored 83, 77, 74 and 74 on OntoNotes Release 5.0 English data, respectively.

- The "minilm" model is the best quality speed trade-off for both mult-lingual and english texts.

- The "info_xlm" model produces the best quality for multi-lingual texts.

- The AllenNLP "spanbert" model produces the best quality for english texts.

## Chunking/batching to resolve memory OOM errors

```python

from crosslingual_coreference import Predictor

predictor = Predictor(

language="en_core_web_sm",

device=0,

model_name="minilm",

chunk_size=2500,

chunk_overlap=2,

)

```

## Use spaCy pipeline

```python

import spacy

text = (

"Do not forget about Momofuku Ando! He created instant noodles in Osaka. At"

" that location, Nissin was founded. Many students survived by eating these"

" noodles, but they don't even know him."

)

nlp = spacy.load("en_core_web_sm")

nlp.add_pipe(

"xx_coref", config={"chunk_size": 2500, "chunk_overlap": 2, "device": 0}

)

doc = nlp(text)

print(doc._.coref_clusters)

# Output

#

# [[[4, 5], [7, 7], [27, 27], [36, 36]],

# [[12, 12], [15, 16]],

# [[9, 10], [27, 28]],

# [[22, 23], [31, 31]]]

print(doc._.resolved_text)

# Output

#

# Do not forget about Momofuku Ando!

# Momofuku Ando created instant noodles in Osaka.

# At Osaka, Nissin was founded.

# Many students survived by eating instant noodles,

# but Many students don't even know Momofuku Ando.

print(doc._.cluster_heads)

# Output

#

# {Momofuku Ando: [5, 6],

# instant noodles: [11, 12],

# Osaka: [14, 14],

# Nissin: [21, 21],

# Many students: [26, 27]}

```

### Visualize spacy pipeline

This only works with spacy >= 3.3.

```python

import spacy

from spacy.tokens import Span

from spacy import displacy

text = (

"Do not forget about Momofuku Ando! He created instant noodles in Osaka. At"

" that location, Nissin was founded. Many students survived by eating these"

" noodles, but they don't even know him."

)

nlp = spacy.load("nl_core_news_sm")

nlp.add_pipe("xx_coref", config={"model_name": "minilm"})

doc = nlp(text)

spans = []

for idx, cluster in enumerate(doc._.coref_clusters):

for span in cluster:

spans.append(

Span(doc, span[0], span[1]+1, str(idx).upper())

)

doc.spans["custom"] = spans

displacy.render(doc, style="span", options={"spans_key": "custom"})

```

## More Examples