https://github.com/deepmancer/byol-pytorch

BYOL (Bootstrap Your Own Latent), implemented from scratch in Pytorch

- Host: GitHub

- URL: https://github.com/deepmancer/byol-pytorch

- Owner: deepmancer

- Created: 2023-08-17T21:28:05.000Z (almost 2 years ago)

- Default Branch: main

- Last Pushed: 2024-08-16T11:23:33.000Z (11 months ago)

- Last Synced: 2024-10-11T20:06:19.592Z (9 months ago)

- Topics: bootstrap, byol, contrastive-learning, from-scratch, pytorch

- Language: Jupyter Notebook

- Homepage:

- Size: 252 KB

- Stars: 4

- Watchers: 1

- Forks: 0

- Open Issues: 0

Metadata Files:

- Readme: README.md

# 📈 BYOL (Bootstrap Your Own Latent) – From Scratch Implementation in PyTorch

Welcome to the **BYOL (Bootstrap Your Own Latent)** repository! This project provides a from-scratch PyTorch implementation of BYOL, a self-supervised learning algorithm that learns image representations without labels or negative pairs. Whether you're a researcher, developer, or enthusiast, the code and notebooks here walk through how BYOL learns useful features from unlabeled data.

If you find this project useful, please star this repository! ⭐

---

## 🚀 Key Highlights

- **From-Scratch Implementation**: Dive deep into the mechanics of BYOL with clean, understandable PyTorch code.

- **Self-Supervised Learning**: Explore a negative-sample-free approach to feature learning.

- **Documentation**: Clear explanations, visualizations, and results to aid your understanding.

- **Interactive Notebooks**: Experiment hands-on with our Jupyter notebooks.

---

## 🧠 What is BYOL?

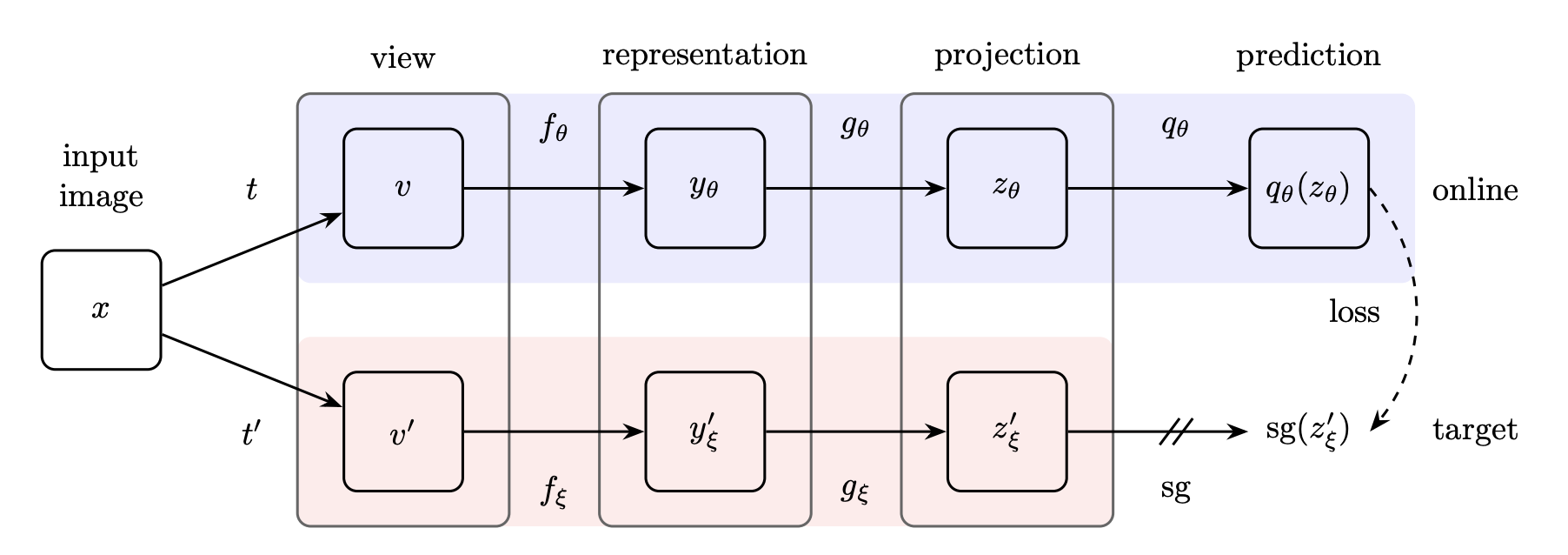

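**Bootstrap Your Own Latent (BYOL)** is a self-supervised learning algorithm that learns only from positive pairs (two different augmentations of the same image), without relying on negative samples. It uses two networks: an *online* network (encoder, projector, and predictor) is trained to predict the *target* network's projection of the other augmented view, while the *target* network (encoder and projector) is not trained by backpropagation but is updated as an exponential moving average of the online network's weights. Because no negatives are needed, BYOL does not require a memory bank or a large pool of negative examples, and it achieves results competitive with contrastive methods across a range of benchmarks.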
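BYOL uses an online network that predicts a target network's projection of a second augmented view. A minimal PyTorch sketch of that forward pass and loss follows. It is illustrative and is not this repository's code. The names `mlp`, `regression_loss`, and `BYOL` are hypothetical. The default sizes (a 4096-unit hidden layer and a 256-d projection) follow the BYOL paper. We assume `encoder` maps a batch of images to `feat_dim`-dimensional features. The loss is the normalized mean squared error `2 - 2 * cos(p, z)`, summed over both view orderings. The target branch runs under `torch.no_grad()` to implement the stop-gradient.

```python
import copy

import torch
import torch.nn as nn
import torch.nn.functional as F

# Hypothetical helper names for illustration; not taken from this repository.


def mlp(in_dim: int, hidden_dim: int = 4096, out_dim: int = 256) -> nn.Sequential:
    """Projector/predictor head: Linear -> BatchNorm -> ReLU -> Linear."""
    return nn.Sequential(
        nn.Linear(in_dim, hidden_dim),
        nn.BatchNorm1d(hidden_dim),
        nn.ReLU(inplace=True),
        nn.Linear(hidden_dim, out_dim),
    )


def regression_loss(p: torch.Tensor, z: torch.Tensor) -> torch.Tensor:
    """Per-sample normalized MSE: ||p/|p| - z/|z|||^2 = 2 - 2 * cos(p, z)."""
    p = F.normalize(p, dim=-1)
    z = F.normalize(z, dim=-1)
    return 2 - 2 * (p * z).sum(dim=-1)


class BYOL(nn.Module):
    def __init__(self, encoder: nn.Module, feat_dim: int,
                 hidden_dim: int = 4096, proj_dim: int = 256):
        super().__init__()
        # Online network: encoder -> projector -> predictor (trained by backprop).
        self.online_encoder = encoder
        self.online_projector = mlp(feat_dim, hidden_dim, proj_dim)
        self.predictor = mlp(proj_dim, hidden_dim, proj_dim)
        # Target network: a copy of encoder + projector (no predictor), never
        # updated by gradients (an EMA step would refresh it after each update).
        self.target_encoder = copy.deepcopy(encoder)
        self.target_projector = copy.deepcopy(self.online_projector)
        for p in list(self.target_encoder.parameters()) + list(self.target_projector.parameters()):
            p.requires_grad = False

    def forward(self, view1: torch.Tensor, view2: torch.Tensor) -> torch.Tensor:
        # Online predictions for both views.
        p1 = self.predictor(self.online_projector(self.online_encoder(view1)))
        p2 = self.predictor(self.online_projector(self.online_encoder(view2)))
        # Target projections are computed without gradients (stop-gradient).
        with torch.no_grad():
            z1 = self.target_projector(self.target_encoder(view1))
            z2 = self.target_projector(self.target_encoder(view2))
        # Symmetrized loss: each view's prediction regresses the other view's target.
        loss = regression_loss(p1, z2) + regression_loss(p2, z1)
        return loss.mean()
```

In a training loop, only the online parameters (those with `requires_grad=True`) would be passed to the optimizer. The target weights would be refreshed by a moving-average step after each update.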

### ✨ Key Features:

- **No Negative Samples**: Reduces complexity while maintaining high performance.

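- **State-of-the-Art Results**: At publication (NeurIPS 2020), BYOL was competitive with or better than contrastive methods on various image classification benchmarks.

- **Minimal Computational Overhead**: No memory bank or large pool of negatives is required, and the target network is updated with a cheap moving average rather than by backpropagation.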

*Figure: BYOL model overview.*
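In BYOL the target network is not trained by gradient descent. Its weights track an exponential moving average (EMA) of the online network's weights. The sketch below shows that update. The helper names `ema_update` and `tau_schedule` are hypothetical. The cosine schedule follows the BYOL paper's description: it raises the decay rate τ from its base value (0.996 in the paper) toward 1 over training. As a simplifying assumption, the sketch averages parameters only and leaves buffers such as BatchNorm running statistics untouched.

```python
import math

import torch
import torch.nn as nn


@torch.no_grad()
def ema_update(online: nn.Module, target: nn.Module, tau: float) -> None:
    """In-place EMA step: target <- tau * target + (1 - tau) * online.

    Assumes both modules share the same architecture, so their parameters
    are yielded in the same order.
    """
    for p_online, p_target in zip(online.parameters(), target.parameters()):
        p_target.mul_(tau).add_(p_online, alpha=1 - tau)


def tau_schedule(step: int, total_steps: int, base_tau: float = 0.996) -> float:
    """Cosine schedule: tau = base_tau at step 0 and increases to 1.0 at the end."""
    return 1 - (1 - base_tau) * (math.cos(math.pi * step / total_steps) + 1) / 2
```

After each optimizer step, one might call `ema_update(online_encoder, target_encoder, tau_schedule(step, total_steps))` and do the same for the projectors. A τ close to 1 makes the target change slowly, which gives the online network a stable regression target.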

---

## 📊 Experimental Results

We evaluated our implementation using the **STL10 Dataset**, showcasing the impact of BYOL pretraining:

| **Training Method**   | **Accuracy** |
|-----------------------|--------------|
| Without Pretraining   | 84.58%       |
| With BYOL Pretraining | **87.61%**   |

In this experiment, BYOL pretraining improved accuracy by 3.03 percentage points (84.58% → 87.61%), even with relatively few training epochs.

---

## 📁 Dataset: STL10

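The **STL10 Dataset** was designed for developing unsupervised and self-supervised feature learning methods, which makes it a natural fit for BYOL.

- **Classes**: 10 categories, including animals and vehicles.

- **Train/Test Split**: 500 labeled training images and 800 test images per class.

- **Unlabeled Split**: 100,000 additional unlabeled images, suited to self-supervised pretraining.

- **Image Size**: 96×96 color images.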

- **Source**: [STL10 Dataset](https://cs.stanford.edu/~acoates/stl10/)

*Figure: STL10 dataset sample.*

---

## 🔧 Getting Started

**Prerequisites**

- **Python 3.6+**

- **PyTorch**

- **Torchvision**

- **Jupyter Notebook** (for interactive experiments)

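Clone the repository (`git clone https://github.com/deepmancer/byol-pytorch.git`) and install the dependencies listed above, for example with `pip install torch torchvision notebook`.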

---

## 📚 Citations

```bibtex
@inproceedings{NEURIPS2020_f3ada80d,
  author = {Grill, Jean-Bastien and Strub, Florian and Altch\'{e}, Florent and Tallec, Corentin and Richemond, Pierre and Buchatskaya, Elena and Doersch, Carl and Avila Pires, Bernardo and Guo, Zhaohan and Gheshlaghi Azar, Mohammad and Piot, Bilal and Kavukcuoglu, Koray and Munos, Remi and Valko, Michal},
  booktitle = {Advances in Neural Information Processing Systems},
  editor = {H. Larochelle and M. Ranzato and R. Hadsell and M.F. Balcan and H. Lin},
  pages = {21271--21284},
  publisher = {Curran Associates, Inc.},
  title = {Bootstrap Your Own Latent - A New Approach to Self-Supervised Learning},
  url = {https://proceedings.neurips.cc/paper_files/paper/2020/file/f3ada80d5c4ee70142b17b8192b2958e-Paper.pdf},
  volume = {33},
  year = {2020}
}
```

---

## 🧾 License

This project is licensed under the **MIT License**. See the [LICENSE](LICENSE) file for details.