https://github.com/dkeras-project/dkeras

Distributed Keras Engine, Make Keras faster with only one line of code.

data-parallelism deep-learning deep-neural-networks distributed distributed-deep-learning distributed-keras-engine distributed-systems keras keras-classification-models keras-models keras-neural-networks keras-tensorflow machine-learning neural-network parallel-computing plaidml python ray tensorflow tensorflow-models

- Host: GitHub

- URL: https://github.com/dkeras-project/dkeras

- Owner: dkeras-project

- License: mit

- Created: 2019-07-09T18:43:41.000Z (over 6 years ago)

- Default Branch: master

- Last Pushed: 2019-10-03T17:50:59.000Z (over 6 years ago)

- Last Synced: 2025-04-07T01:52:23.672Z (11 months ago)

- Language: Python

- Size: 6.48 MB

- Stars: 189

- Watchers: 7

- Forks: 12

- Open Issues: 3

Metadata Files:

- Readme: README.md

- License: LICENSE

# dKeras: Distributed Keras Engine

### ***Make Keras faster with only one line of code.***

dKeras is a distributed Keras engine built on top of
[Ray](https://github.com/ray-project/ray). Wrapping your original Keras model
in dKeras lets you apply distributed deep learning techniques that
automatically improve your system's performance.

With an easy-to-use API and a backend framework that can be deployed from
the laptop to the data center, dKeras reduces what used to be a complex
and time-consuming process to only a few adjustments.

#### Why Use dKeras?

Distributed deep learning can be essential for production systems that
need fast inference without expensive hardware accelerators, and for
researchers who need to train large models made up of distributable parts.
This is a challenge for developers because it requires expertise in both
deep learning and distributed systems. A production team might also
need a machine learning optimization engineer to apply neural network
optimizations such as precision changes, layer fusing, or other techniques.

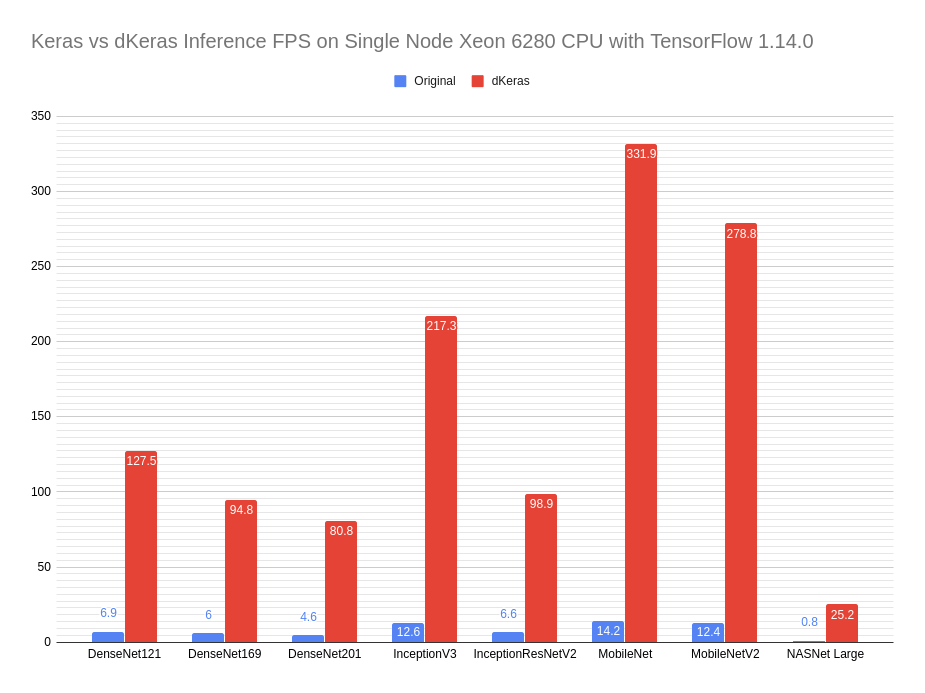

Distributed inference is a simple way to get better inference FPS. The graph

below shows how non-optimized, out-of-box models from default frameworks can

be quickly sped up through data parallelism:

#### Current Capabilities:

- Data Parallelism Inference
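
The idea behind data-parallel inference can be sketched in a few lines. This is a conceptual illustration only, not how dKeras is implemented: it uses a standard-library thread pool as a stand-in for Ray workers, and `split_batch`, `toy_predict`, and `data_parallel_predict` are hypothetical names introduced for this example. Each worker receives one contiguous shard of the input batch, and the per-shard outputs are merged back in input order.

```python
from concurrent.futures import ThreadPoolExecutor


def split_batch(samples, n_workers):
    """Split samples into at most n_workers contiguous, near-equal shards."""
    size, extra = divmod(len(samples), n_workers)
    shards, start = [], 0
    for i in range(n_workers):
        end = start + size + (1 if i < extra else 0)
        if end > start:
            shards.append(samples[start:end])
        start = end
    return shards


def toy_predict(shard):
    """Hypothetical stand-in for model.predict: one output per input."""
    return [2 * x for x in shard]


def data_parallel_predict(predict_fn, samples, n_workers=4):
    """Run predict_fn on each shard concurrently and merge results in order."""
    shards = split_batch(samples, n_workers)
    if not shards:
        return []
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # map() yields results in shard order, so outputs line up with inputs.
        shard_outputs = list(pool.map(predict_fn, shards))
    return [y for out in shard_outputs for y in out]


outputs = data_parallel_predict(toy_predict, list(range(10)), n_workers=4)
```

In a real deployment each worker would hold its own copy of the model, and the shards would be NumPy arrays rather than Python lists.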

#### Future Capabilities:

- Model Parallelism Inference

- Distributed Training

- Easy Multi-model production-ready building

- Data stream input distributed inference

- PlaidML Support

- Autoscaling

- Automatic optimal hardware configuration

- PBS/Torque support

## Installation

The first official release of dKeras will be available soon. For
now, install from source.

```bash
pip install git+https://github.com/dkeras-project/dkeras
```

### Requirements

- Python 3.6 or higher

- ray

- psutil

- Linux (or OSX, dKeras works on laptops too!)

- numpy
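
Before running the examples, it can help to confirm that the environment meets the requirements above. The following is a minimal sketch using only the standard library; `missing_modules` and `python_is_supported` are hypothetical helper names, and the module names are assumed to match the package names in the list.

```python
import importlib.util
import sys

# Module names taken from the requirements list above (assumed importable
# under the same names as the packages).
REQUIRED_MODULES = ("ray", "psutil", "numpy")
MIN_PYTHON = (3, 6)


def missing_modules(names):
    """Return the top-level module names that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def python_is_supported(version_info=sys.version_info, minimum=MIN_PYTHON):
    """Return True when the interpreter version meets the stated minimum."""
    return tuple(version_info[:2]) >= minimum


if __name__ == "__main__":
    print("Python OK:", python_is_supported())
    print("Missing modules:", missing_modules(REQUIRED_MODULES) or "none")
```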

### Coming Soon: [PlaidML](https://github.com/plaidml/plaidml) Support

dKeras will soon work alongside [PlaidML](https://github.com/plaidml/plaidml),
a "portable tensor compiler for enabling deep learning on laptops, embedded devices,
or other devices where the available computing hardware is not well
supported or the available software stack contains unpalatable
license restrictions."

## Distributed Inference

### Example

#### Original

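```python
from tensorflow.keras.applications import ResNet50

# `data` is a NumPy array of images shaped (N, 224, 224, 3)
model = ResNet50()
model.predict(data)
```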

#### dKeras Version

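```python
from tensorflow.keras.applications import ResNet50
from dkeras import dKeras

# Pass the ResNet50 class (not an instance) to the wrapper
model = dKeras(ResNet50)
model.predict(data)
```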

#### Full Example

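```python
from tensorflow.keras.applications import ResNet50
from dkeras import dKeras
import numpy as np
import ray

# Start Ray once for the whole process
ray.init()

# 100 random images with the input shape ResNet50 expects
data = np.random.uniform(-1, 1, (100, 224, 224, 3))

# init_ray=False because ray.init() was already called above
model = dKeras(ResNet50, init_ray=False, wait_for_workers=True, n_workers=4)
preds = model.predict(data)

# Shut the model down when finished, as in the multiple-model example
model.close()
```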
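
To check whether the distributed version actually improves inference FPS on your hardware, you can time both models with the same data. The helper below is a minimal sketch; `measure_fps` is a hypothetical name, not part of dKeras, and it works with any callable that takes a batch.

```python
import time


def measure_fps(predict_fn, data, n_runs=3):
    """Return the best samples-per-second over n_runs calls of predict_fn(data)."""
    best = float("inf")
    for _ in range(n_runs):
        start = time.perf_counter()
        predict_fn(data)
        best = min(best, time.perf_counter() - start)
    # Guard against a zero elapsed time on very coarse clocks.
    return len(data) / max(best, 1e-9)


# Intended usage (assumes `keras_model`, `dkeras_model`, and `data` exist):
#   baseline_fps = measure_fps(keras_model.predict, data)
#   distributed_fps = measure_fps(dkeras_model.predict, data)
```

Taking the best of several runs reduces the effect of one-time warm-up costs such as worker startup or graph compilation.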

#### Multiple Model Example

```python
import numpy as np
from tensorflow.keras.applications import ResNet50, MobileNet
from dkeras import dKeras
import ray

# Start Ray once; both models share the same Ray instance
ray.init()

# Each wrapper is given three workers
model1 = dKeras(ResNet50, weights='imagenet', wait_for_workers=True, n_workers=3)
model2 = dKeras(MobileNet, weights='imagenet', wait_for_workers=True, n_workers=3)

test_data = np.random.uniform(-1, 1, (100, 224, 224, 3))

model1.predict(test_data)
model2.predict(test_data)

# Shut down each model when finished
model1.close()
model2.close()
```
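
Because the examples above end with an explicit `close()` call, one way to make sure it always runs is the standard library's `contextlib.closing`, which calls `close()` on exit even if an exception is raised. The sketch below uses a hypothetical `FakeModel` stand-in so it runs without Ray or TensorFlow; it assumes a dKeras model can be wrapped the same way because it exposes `close()`.

```python
from contextlib import closing


class FakeModel:
    """Hypothetical stand-in exposing the predict()/close() pair used above."""

    def __init__(self):
        self.closed = False

    def predict(self, data):
        return [0 for _ in data]

    def close(self):
        self.closed = True


fake = FakeModel()
with closing(fake) as model:
    preds = model.predict([1, 2, 3])
# close() has been called at this point, even if predict() had raised.
```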