https://github.com/elementsinteractive/lgtm-ai

Your AI-powered code review companion

- Host: GitHub

- URL: https://github.com/elementsinteractive/lgtm-ai

- Owner: elementsinteractive

- License: mit

- Created: 2025-05-22T12:38:20.000Z (9 months ago)

- Default Branch: main

- Last Pushed: 2026-01-15T11:30:56.000Z (about 1 month ago)

- Last Synced: 2026-01-15T15:49:31.011Z (about 1 month ago)

- Topics: ai, code-reviews, dev-tools, github, gitlab, llms

- Language: Python

- Homepage: https://www.elements.nl/en

- Size: 7.92 MB

- Stars: 23

- Watchers: 2

- Forks: 8

- Open Issues: 10

Metadata Files:

- Readme: README.md

- Changelog: CHANGELOG.md

- License: LICENSE

- Codeowners: CODEOWNERS

Awesome Lists containing this project

- awesome-AI-driven-development - lgtm-ai - Your AI-powered code review companion (Code Review & Collaboration / Other IDEs)

README

# lgtm-ai

---

lgtm-ai is your AI-powered code review companion. It generates code reviews using your favorite LLMs and helps human reviewers with detailed, context-aware reviewer guides. It supports GitHub, GitLab, and major AI models including GPT-4, Claude, Gemini, and more.

**Table of Contents**

- [Quick Usage](#quick-usage)
  - [Review](#review)
    - [Local Changes](#local-changes)
  - [Reviewer Guide](#reviewer-guide)
- [Installation](#installation)
- [How it works](#how-it-works)
  - [Review scores and comment categories](#review-scores-and-comment-categories)
  - [Supported Code Repository Services](#supported-code-repository-services)
  - [Using Issue/User Story Information](#using-issueuser-story-information)
  - [Supported AI models](#supported-ai-models)
    - [Summary](#summary)
    - [OpenAI](#openai)
    - [Google Gemini](#google-gemini)
    - [Anthropic's Claude](#anthropics-claude)
    - [Mistral AI](#mistral-ai)
    - [DeepSeek](#deepseek)
    - [Local models](#local-models)
  - [CI/CD Integration](#cicd-integration)
  - [Configuration](#configuration)
    - [Main options](#main-options)
    - [Review options](#review-options)
    - [Issues Integration options](#issues-integration-options)
    - [Example `lgtm.toml`](#example-lgtmtoml)
- [Contributing](#contributing)
  - [Running the project](#running-the-project)
  - [Managing requirements](#managing-requirements)
  - [Commit messages](#commit-messages)
- [Contributors ✨](#contributors-)

## Quick Usage

### Review

```sh
lgtm review --ai-api-key $OPENAI_API_KEY \
    --git-api-key $GITLAB_TOKEN \
    --model gpt-5 \
    --publish \
    "https://gitlab.com/your-repo/-/merge_requests/42"
```

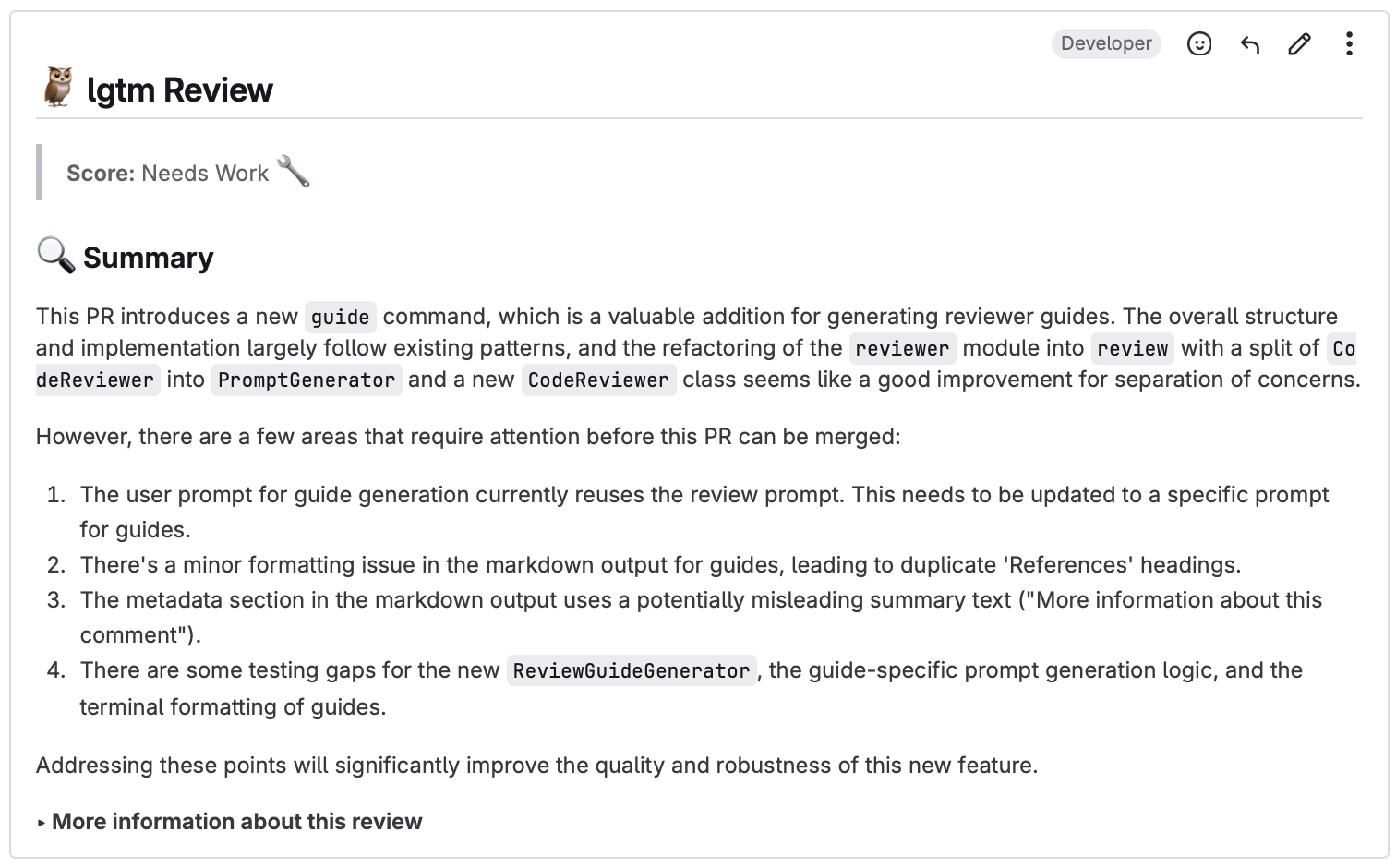

This will generate a **review** like this one:

#### Local Changes

You can also review local changes without a pull request:

```sh
lgtm review --ai-api-key $OPENAI_API_KEY \
    --model gpt-5 \
    --compare main \
    path/to/git/repo
```

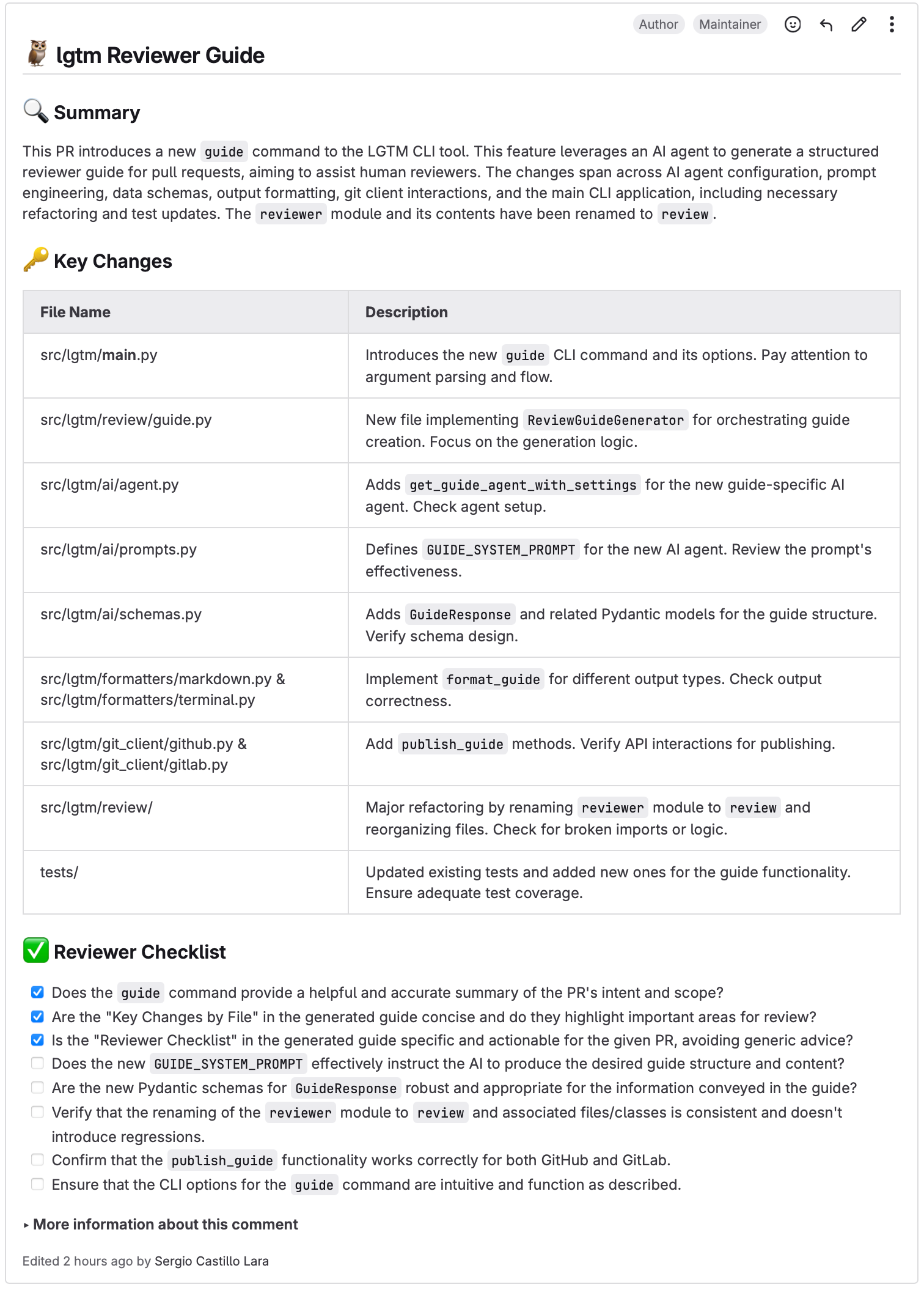

### Reviewer Guide

```sh
lgtm guide --ai-api-key $OPENAI_API_KEY \
    --git-api-key $GITLAB_TOKEN \
    --model gpt-5 \
    --publish \
    "https://gitlab.com/your-repo/-/merge_requests/42"
```

This will generate a **reviewer guide** like this one:

## Installation

```sh
pip install lgtm-ai
```

Or you can use the official Docker image:

```sh
docker pull elementsinteractive/lgtm-ai
```

## How it works

lgtm reads the given pull request and feeds it to several AI agents to generate a code review or a reviewer guide. lgtm is deliberately model-agnostic: the model is fully configurable, so you can choose one based on pricing, security, data privacy, or whatever matters most to you.

If instructed (with the option `--publish`), lgtm will publish the review or guide to the pull request page as comments.

### Review scores and comment categories

Reviews generated by lgtm will be assigned a **score**, using the following scale:

| Score | Description |
| ------ | --- |
| LGTM 👍 | The PR is generally ready to be merged. |
| Nitpicks 🤓 | There are some minor issues, but the PR is almost ready to be merged. |
| Needs Work 🔧 | There are some issues with the PR, and it is not ready to be merged. The approach is generally good, the fundamental structure is there, but there are some issues that need to be fixed. |
| Needs a Lot of Work 🚨 | Issues are major, overarching, and/or numerous. However, the approach taken is not necessarily wrong. |
| Abandon ❌ | The approach taken is wrong, and the author needs to start from scratch. The PR is not ready to be merged as is at all. |

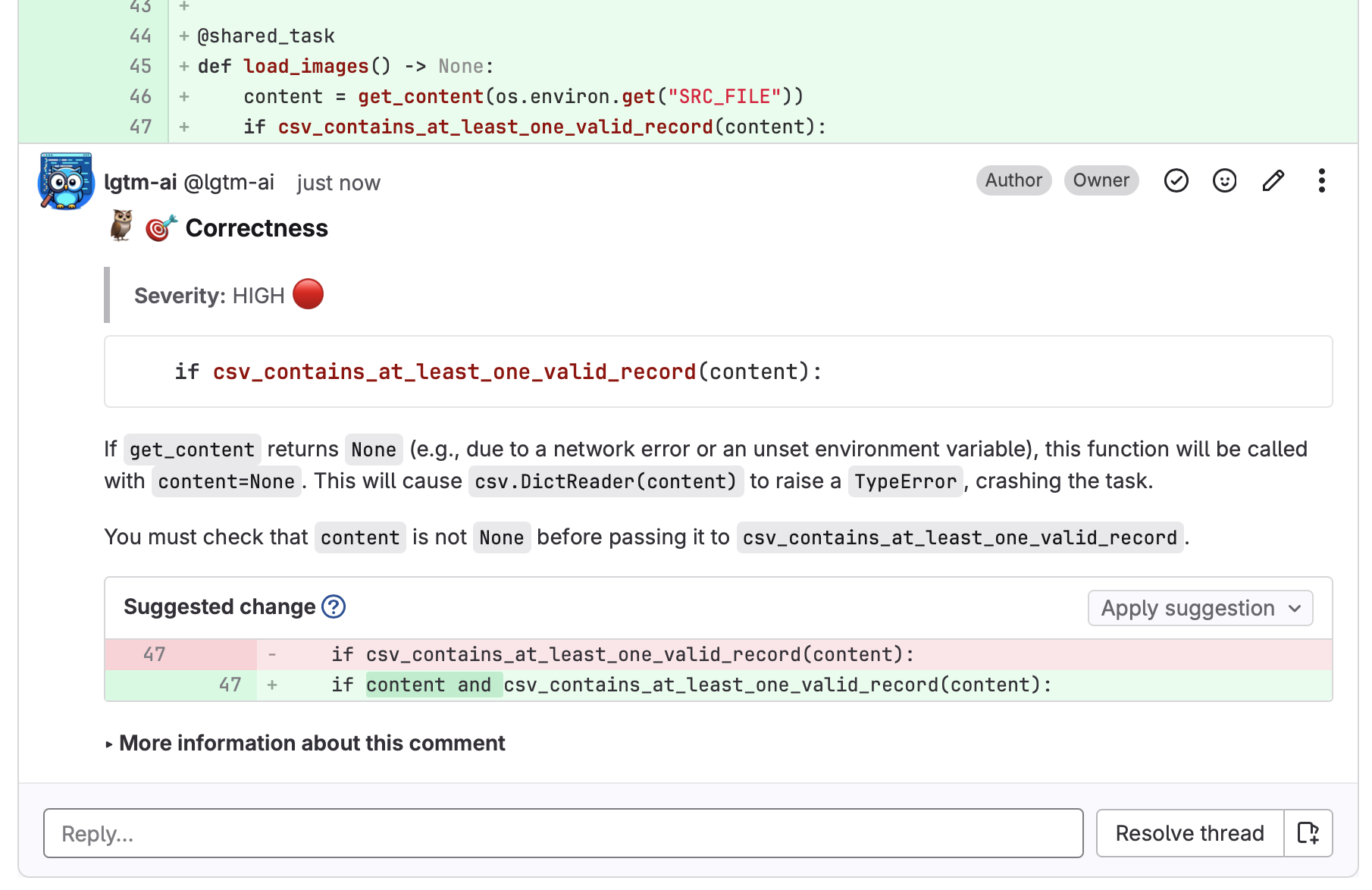

For each review, lgtm may create several inline comments, pointing out specific issues within the PR. These comments belong to a **category** and have a **severity**. You can configure which categories you want lgtm to take a look at (see the [configuration section below](#configuration)). The available categories are:

| Category | Description |
| ------- | ----------- |
| Correctness 🎯 | Does the code behave as intended? Identifies logical errors, bugs, incorrect algorithms, broken functionality, or deviations from requirements. |
| Quality ✨ | Is the code clean, readable, and maintainable? Evaluates naming, structure, modularity, and adherence to clean code principles (e.g., SOLID, DRY, KISS). |
| Testing 🧪 | Are there sufficient and appropriate tests? Includes checking for meaningful test coverage, especially for edge cases and critical paths. Are tests isolated, reliable, and aligned with the behavior being verified? |
| Security 🔒 | Does the code follow secure programming practices? Looks for common vulnerabilities such as injection attacks, insecure data handling, improper access control, hardcoded credentials, or lack of input validation. |

There are three available severities for comments:

- LOW 🔵

- MEDIUM 🟡

- HIGH 🔴

### Supported Code Repository Services

lgtm aims to work with as many services as possible, and that includes remote repository providers. At the moment, lgtm supports:

- [GitLab](https://gitlab.com) (both gitlab.com and [self-managed](https://about.gitlab.com/install/)).

- [GitHub](https://github.com)

lgtm autodetects which service to use from the URL of the pull request passed as an argument.
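As a rough illustration of what URL-based detection could look like, the sketch below classifies a URL by the shape of its path. This is not lgtm's actual implementation: the function name `detect_service` and the path rules (`/pull/<n>` for GitHub, `/-/merge_requests/<n>` for GitLab) are assumptions made for this example.

```python
import re
from urllib.parse import urlparse

# Hypothetical path patterns; lgtm's real detection logic may differ.
_GITHUB_PR = re.compile(r"^/[^/]+/[^/]+/pull/\d+/?$")
_GITLAB_MR = re.compile(r"^/.+/-/merge_requests/\d+/?$")


def detect_service(url: str) -> str:
    """Return "github" or "gitlab" based on the shape of the URL path."""
    path = urlparse(url).path
    if _GITHUB_PR.match(path):
        return "github"
    if _GITLAB_MR.match(path):
        return "gitlab"
    raise ValueError(f"Unrecognized pull request URL: {url}")
```

Matching on the path rather than the hostname lets the same check cover self-managed GitLab instances, whose hostnames are not known in advance.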

### Using Issue/User Story Information

lgtm-ai can enhance code reviews by including context from linked issues or user stories (e.g., GitHub/GitLab issues). This helps the AI understand the purpose and requirements of the PR.

**How to use:**

- Provide the following options to the `lgtm review` command:
  - `--issues-url`: The base URL of the issues or user story page.
  - `--issues-platform`: The platform for the issues (e.g., `github`, `gitlab`, `jira`).
  - `--issues-regex`: (Optional) A regex pattern to extract the issue ID from the PR title or description.
  - `--issues-api-key`: (Optional) API key for the issues platform (if different from `--git-api-key`).
  - `--issues-user`: (Optional) Username for the issues platform (required if the platform is `jira`).

**Example:**

```sh
lgtm review \
    --issues-url "https://github.com/your-org/your-repo/issues" \
    --issues-platform github \
    --issues-regex "(?:Fixes|Resolves) #(\d+)" \
    --issues-api-key $GITHUB_TOKEN \
    ...
    "https://github.com/your-org/your-repo/pull/42"
```

- These options can also be set in the `lgtm.toml` configuration file; see the [configuration section](#configuration) for details.

- lgtm will automatically extract the issue ID from the PR metadata using the provided regex, fetch the issue content, and include it as additional context for the review.

**Notes:**

- GitHub, GitLab, and [JIRA cloud](https://developer.atlassian.com/cloud/jira/platform/) issues are supported.

- If `--issues-api-key` is not provided, lgtm will use `--git-api-key` for authentication.

- If no issue is found, the review will proceed without issue context.

- lgtm provides a default regex for extracting issue IDs that works with [conventional commits](https://www.conventionalcommits.org). This means you often do not need to specify `--issues-regex` if your PR titles or commit messages follow the conventional commit format (e.g., `feat(#123): add new feature`), or if your PR descriptions contain mentions of issues like `refs: #123` or `closes: #123`.
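To make the extraction step concrete, here is a minimal sketch that applies the example pattern from the `--issues-regex` option to a PR title and description. The function name `extract_issue_id` and the title-then-description search order are assumptions for illustration; lgtm's own extraction code and its default regex are not shown here.

```python
import re
from typing import Optional

# The example pattern passed to `--issues-regex` above.
ISSUE_PATTERN = re.compile(r"(?:Fixes|Resolves) #(\d+)")


def extract_issue_id(pr_title: str, pr_description: str) -> Optional[str]:
    """Return the first issue ID found in the title, then in the description."""
    for text in (pr_title, pr_description):
        match = ISSUE_PATTERN.search(text)
        if match:
            return match.group(1)  # The capture group holds the issue number.
    return None  # No match: the review would proceed without issue context.
```

The single capture group `(\d+)` is what isolates the ID, so a custom pattern should keep exactly one group around the part that identifies the issue.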

### Supported AI models

lgtm supports several AI models so you can hook up your preferred LLM to perform reviews for you.

This is the full list of supported models:

#### Summary

| Provider | Example Models | API Key Setup |
|-------------|---------------------------------|-------------------------------------------------------------------------------|
| **OpenAI** | `gpt-5`, `gpt-4.1`, `gpt-4o-mini`, `o1-preview` | [Generate API key](https://platform.openai.com/api-keys) |
| **Google Gemini** | `gemini-2.5-pro`, `gemini-2.5-flash` | [Get API key](https://aistudio.google.com/apikey) |
| **Anthropic (Claude)** | `claude-opus-4-5`, `claude-sonnet-4-5`, `claude-haiku-4-5` | [Anthropic Console](https://console.anthropic.com/dashboard) |
| **Mistral** | `mistral-large-latest`, `mistral-small`, `codestral-latest` | [Mistral Platform](https://console.mistral.ai/api-keys) |
| **DeepSeek** | `deepseek-chat`, `deepseek-reasoner` | [DeepSeek Platform](https://platform.deepseek.com/usage) |
| **Local / Custom** | Any OpenAI-compatible model (e.g. `llama3`) | Run with `--model-url http://localhost:11434/v1` |

#### OpenAI

Check out the OpenAI platform page to see [all available models provided by OpenAI](https://platform.openai.com/docs/overview).

To use OpenAI LLMs, you need to provide lgtm with an API Key, which can be generated in the [OpenAI platform page for your project, or your user](https://platform.openai.com/api-keys).

Supported OpenAI models

These are the main supported models, though the CLI may support additional ones due to the use of [pydantic-ai](https://ai.pydantic.dev).

| Model name |
| -------- |
| gpt-5 |
| gpt-5-mini |
| gpt-4.1 |
| gpt-4.1-mini |
| gpt-4.1-nano |
| gpt-4o * |
| gpt-4o-mini |
| o4-mini |
| o3-mini |
| o3 |
| o1-preview |
| o1-mini |
| o1 |
| gpt-4-turbo |
| gpt-4 |
| gpt-3.5-turbo |
| chatgpt-4o-latest |

#### Google Gemini

Check out the [Gemini developer docs](https://ai.google.dev/gemini-api/docs/models) to see all models provided by Google.

To use Gemini LLMs, you need to provide lgtm with an API Key, which can be generated [here](https://aistudio.google.com/apikey).

These are the main supported models, though the CLI may support additional ones due to the use of [pydantic-ai](https://ai.pydantic.dev). Gemini timestamps models, so be sure to always use the latest model of each family, if possible.

For Gemini models exclusively, you can provide a wildcard at the end of the model name and lgtm will attempt to select the latest model (e.g., `gemini-2.5-pro*`).

Supported Google Gemini models

| Model name |
| ----------- |
| gemini-2.5-pro |
| gemini-2.5-pro-preview-06-05 |
| gemini-2.5-pro-preview-05-06 |
| gemini-2.5-flash |
| gemini-2.0-pro-exp-02-05 |
| gemini-1.5-pro |
| gemini-1.5-flash |

#### Anthropic's Claude

Check out [Anthropic documentation](https://docs.anthropic.com/en/docs/about-claude/models/all-models) to see which models they provide. lgtm works with a subset of Claude models. To use Anthropic LLMs, you need to provide lgtm with an API Key, which can be generated from the [Anthropic Console](https://console.anthropic.com/dashboard).

Supported Anthropic models

These are the main supported models, though the CLI may support additional ones due to the use of [pydantic-ai](https://ai.pydantic.dev).

| Model name |
| ---------------------------- |
| claude-opus-4-5 |
| claude-sonnet-4-5 |
| claude-haiku-4-5 |
| claude-opus-4-1-20250805 |
| claude-sonnet-4-0 |
| claude-3-7-sonnet-latest |
| claude-3-5-sonnet-latest |
| claude-3-5-haiku-latest |
| claude-3-opus-latest |

#### Mistral AI

Check out the [Mistral documentation](https://docs.mistral.ai/getting-started/models/models_overview/) to see all models provided by Mistral.

To use Mistral LLMs, you need to provide lgtm with an API Key, which can be generated from Mistral's [La Plateforme](https://console.mistral.ai/api-keys).

Supported Mistral AI models

These are the main supported models, though the CLI may support additional ones due to the use of [pydantic-ai](https://ai.pydantic.dev).

| Model name |
| ------------------ |
| mistral-large-latest |
| mistral-small |
| codestral-latest |

#### DeepSeek

Check out the [DeepSeek documentation](https://api-docs.deepseek.com/quick_start/pricing) to see all models provided by DeepSeek.

At the moment, lgtm only supports DeepSeek from `https://api.deepseek.com`; other providers and custom URLs are not supported. However, this is on our roadmap!

To get an API key for DeepSeek, create one at [DeepSeek Platform](https://platform.deepseek.com/usage).

Supported DeepSeek models

| Model name |
| ----------- |
| deepseek-chat |
| deepseek-reasoner |

#### Local models

You can run lgtm against a model available at a custom URL (say, models running with [ollama](https://ollama.com) at `http://localhost:11434/v1`). These models need to be compatible with the OpenAI API. In that case, you need to pass the option `--model-url` (and you can choose to skip the option `--ai-api-key`). Check out the [pydantic-ai documentation](https://ai.pydantic.dev/models/openai/#openai-responses-api) to see more information about how lgtm interacts with these models.

```sh
lgtm review \
    --model llama3.2 \
    --model-url http://localhost:11434/v1 \
    ...
    https://github.com/group/repo/pull/1
```

### CI/CD Integration

lgtm is meant to be integrated into your CI/CD pipeline, so that PR authors can choose to request reviews by running the necessary pipeline step.

For GitLab, you can use this `.gitlab-ci.yml` step as inspiration:

```yaml
lgtm-review:
  image:
    name: docker.io/elementsinteractive/lgtm-ai
    entrypoint: [""]
  stage: ai-review
  needs: []
  rules:
    - if: $CI_MERGE_REQUEST_ID
      when: manual
  script:
    - lgtm review --git-api-key ${LGTM_GIT_API_KEY} --ai-api-key ${LGTM_AI_API_KEY} -v ${MR_URL}
  variables:
    MR_URL: "${CI_PROJECT_URL}/-/merge_requests/${CI_MERGE_REQUEST_IID}"
```

For GitHub, you can use the official [LGTM AI GitHub Action](https://github.com/marketplace/actions/lgtm-ai-code-review):

```yaml
- name: AI Code Review
  uses: elementsinteractive/lgtm-ai-action@v1.0.0
  with:
    ai-api-key: ${{ secrets.AI_API_KEY }}
    git-api-key: ${{ secrets.GITHUB_TOKEN }}
    model: 'gpt-5'
    pr-number: ${{ github.event.issue.number }}
```

You can also check out this repo's [lgtm workflow](./.github/workflows/lgtm.yml) for a complete example with comment triggers (`/lgtm review`).

### Configuration

You can configure lgtm through CLI arguments, environment variables, or a configuration file. lgtm reads its configuration from a `.toml` file: it autodetects an `lgtm.toml` file in the current directory, or you can pass a specific file path with the CLI option `--config`.

Alternatively, lgtm also supports [pyproject.toml](https://packaging.python.org/en/latest/guides/writing-pyproject-toml/) files; you just need to nest the options inside `[tool.lgtm]`.

When the same option is set in more than one place, lgtm applies this order of precedence:

`CLI options` > `lgtm.toml` > `pyproject.toml`

Summary of options

| Option | Feature Group | Optionality | Notes/Conditions |
|----------------------|----------------------|---------------------|---------------------------------------------------------------------------------|
| model | Main (review + guide) | 🟢 Optional | AI model to use. Defaults to `gemini-2.5-flash` if not set. |
| model_url | Main (review + guide) | 🟡 Conditionally required | Only needed for custom/local models. |
| exclude | Main (review + guide) | 🟢 Optional | File patterns to exclude from review. |
| publish | Main (review + guide) | 🟢 Optional | If true, posts review as comments. Default: false. |
| output_format | Main (review + guide) | 🟢 Optional | `pretty` (default), `json`, or `markdown`. |
| silent | Main (review + guide) | 🟢 Optional | Suppress terminal output. Default: false. |
| ai_retries | Main (review + guide) | 🟢 Optional | Number of retries for AI agent queries. Default: 1. |
| ai_input_tokens_limit| Main (review + guide) | 🟢 Optional | Max input tokens for LLM. Default: 500,000. Use `"no-limit"` to disable. |
| git_api_key | Main (review + guide) | 🟡 Conditionally required | API key for git service (GitHub/GitLab). Can't be given through config file. Also available through env variable `LGTM_GIT_API_KEY`. Required if reviewing a PR URL from a remote repository service (GitHub, GitLab, etc.). |
| ai_api_key | Main (review + guide) | 🔴 Required* | API key for AI model. Can't be given through config file. Also available through env variable `LGTM_AI_API_KEY`. |
| technologies | Review Only | 🟢 Optional | List of technologies for reviewer expertise. |
| categories | Review Only | 🟢 Optional | Review categories. Defaults to all (`Quality`, `Correctness`, `Testing`, `Security`). |
| additional_context | Review Only | 🟢 Optional | Extra context for the LLM (array of prompts/paths/URLs). Can't be given through the CLI |
| compare | Review Only | 🟢 Optional | If reviewing local changes, what to compare against (branch, commit, range, etc.). CLI only. |
| issues_url | Issues Integration | 🟢 Optional | Enables issue context. If set, `issues_platform` becomes required. |
| issues_platform | Issues Integration | 🟡 Conditionally required | Required if `issues_url` is set. |
| issues_regex | Issues Integration | 🟢 Optional | Regex for issue ID extraction. Defaults to conventional commit compatible regex. |
| issues_api_key | Issues Integration | 🟢 Optional | API key for issues platform (if different from `git_api_key`). Can't be given through config file. Also available through env variable `LGTM_ISSUES_API_KEY`. |
| issues_user | Issues Integration | 🟡 Conditionally required | Username for accessing issues information. Only required for `issues_platform=jira` |

#### Main options

These options apply to both reviews and guides generated by lgtm.

- **model**: Choose which AI model you want lgtm to use. If not set, defaults to `gemini-2.5-flash`.

- **model_url**: When not using one of the specific supported models from the providers mentioned above, you can pass a custom URL where the model is deployed (e.g., for local/hosted models).

- **exclude**: Instruct lgtm to ignore certain files. This is important to reduce noise in reviews, but also to reduce the amount of tokens used for each review (and to avoid running into token limits). You can specify file patterns (e.g., `exclude = ["*.md", "package-lock.json"]`).

- **publish**: If `true`, lgtm will post the review as comments on the PR page. Default is `false`.

- **output_format**: Format of the terminal output of lgtm. Can be `pretty` (default), `json`, or `markdown`.

- **silent**: Do not print the review in the terminal. Default is `false`.

- **ai_retries**: How many times to retry calls to the LLM when they do not succeed. By default, this is set to 1 (no retries at all).

- **ai_input_tokens_limit**: Set a limit on the input tokens sent to the LLM in total. Default is 500,000. To disable the limit, you can pass the string `"no-limit"`.

- **git_api_key**: API key to post the review in the source system of the PR. Can be given as a CLI argument, or as an environment variable (`LGTM_GIT_API_KEY`). You can omit this option if reviewing local changes.

- **ai_api_key**: API key to call the selected AI model. Can be given as a CLI argument, or as an environment variable (`LGTM_AI_API_KEY`).
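To show how `exclude` patterns such as `*.md` or `package-lock.json` could narrow down the files sent to the model, here is a small sketch using glob-style matching from the standard library. The matching semantics (full path or file name, case-sensitive) and the helper names are assumptions for this example; lgtm's own matching rules may differ.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath


def is_excluded(path: str, patterns: list[str]) -> bool:
    """True if the full path or the bare file name matches any pattern."""
    name = PurePosixPath(path).name
    return any(fnmatchcase(path, p) or fnmatchcase(name, p) for p in patterns)


def files_to_review(paths: list[str], patterns: list[str]) -> list[str]:
    """Keep only the changed files that no exclude pattern matches."""
    return [path for path in paths if not is_excluded(path, patterns)]
```

Excluding lockfiles and generated files this way is usually the cheapest way to stay under `ai_input_tokens_limit`.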

#### Review options

These options are only used when performing reviews through the command `lgtm review`.

- **technologies**: Specify, as a list of free-form strings, the technologies lgtm should treat as its areas of expertise. This helps steer the review towards those technologies. By default, lgtm does not assume any specific technology and reviews the PR as a general expert.

- **categories**: lgtm will, by default, evaluate several areas of the given PR (`Quality`, `Correctness`, `Testing`, and `Security`). You can choose any subset of these (e.g., if you are only interested in `Correctness`, you can configure `categories` so that lgtm does not evaluate the other areas).

- **additional_context**: TOML array of extra context to send to the LLM. It supports setting the context directly in the `context` field, passing a relative file path so that lgtm downloads it from the repository, or passing any URL from which to download the context. Each element of the array must contain `prompt`, and either `context` (directly injecting context) or `file_url` (for directing lgtm to download it from there).

- **compare**: When reviewing local changes (the positional argument to `lgtm` is a valid `git` path), you can choose what to compare against to generate a git diff. You can pass branch names, commits, etc. Default is `HEAD`. Only available as a CLI option.

#### Issues Integration options

See the [Using Issue/User Story Information](#using-issueuser-story-information) section.

- **issues_url**: The base URL of the issues or user story page to fetch additional context for the PR. If set, `issues_platform` becomes required.

- **issues_platform**: The platform for the issues (e.g., `github`, `gitlab`, `jira`). Required if `issues_url` is set.

- **issues_regex**: A regex pattern to extract the issue ID from the PR title or description. If omitted, lgtm uses a default regex compatible with conventional commits and common PR formats.

- **issues_api_key**: API key for the issues platform (if different from `git_api_key`). Can be given as a CLI argument, or as an environment variable (`LGTM_ISSUES_API_KEY`).

- **issues_user**: Username for accessing the issues platform (only necessary for `jira`). Can be given as a CLI argument, or as an environment variable (`LGTM_ISSUES_USER`).

#### Example `lgtm.toml`

```toml
technologies = ["Django", "Python"]
categories = ["Correctness", "Quality", "Testing", "Security"]
exclude = ["*.md"]
model = "gpt-4.1"
silent = false
publish = true
ai_retries = 1
ai_input_tokens_limit = 30000

# Optional issue/user story integration.
# Top-level keys must come before any [[additional_context]] table;
# otherwise TOML parses them as part of the last table.
issues_url = "https://github.com/your-org/your-repo/issues"
issues_platform = "github"
# The option below is optional even if the two above are provided.
# Single quotes make this a literal string, so `\d` needs no escaping.
issues_regex = '(?:Fixes|Resolves) #(\d+)'
# issues_api_key can't be given through the config file:
# pass --issues-api-key or set LGTM_ISSUES_API_KEY instead.

[[additional_context]]
prompt = "These are the development guidelines for the team, ensure the PR follows them"
file_url = "https://my.domain.com/dev-guidelines.md"

[[additional_context]]
prompt = "CI pipeline for the repo. Do not report issues that this pipeline would otherwise catch"
file_url = ".github/workflows/pr.yml"

[[additional_context]]
prompt = "Consider these points when making your review"
context = '''
- We avoid using libraries and rely mostly on the stdlib.
- We follow the newest syntax available for Python (3.13).
'''
```

## Contributing

### Running the project

This project uses [`just`](https://github.com/casey/just) recipes to do all the basic operations (testing the package, formatting the code, etc.).

Installation:

```sh
brew install just
# or
snap install --edge --classic just
```

It requires [poetry](https://python-poetry.org/docs/#installation).

These are the available commands for the justfile:

```
Available recipes:
    help                       # Shows list of recipes.
    venv                       # Generate the virtual environment.
    clean                      # Cleans all artifacts generated while running this project, including the virtualenv.
    test *test-args=''         # Runs the tests with the specified arguments (any path or pytest argument).
    t *test-args=''            # alias for `test`
    test-all                   # Runs all tests including coverage report.
    format                     # Format all code in the project.
    lint                       # Lint all code in the project.
    pre-commit *precommit-args # Runs pre-commit with the given arguments (defaults to install).
    spellcheck *codespell-args # Spellchecks your markdown files.
    lint-commit                # Lints commit messages according to conventional commit rules.
```

To run the tests of this package, simply run:

```sh
# All tests
just t

# A single test
just t tests/test_dummy.py

# Pass arguments to pytest like this
just t -k test_dummy -vv
```

### Managing requirements

`poetry` is the tool we use for managing requirements in this project. The generated virtual environment is kept within the directory of the project (in a directory named `.venv`), thanks to the option `POETRY_VIRTUALENVS_IN_PROJECT=1`. Refer to the [poetry documentation](https://python-poetry.org/docs/cli/) to see the list of available commands.

As a short summary:

- Add a dependency: `poetry add foo-bar`
- Remove a dependency: `poetry remove foo-bar`
- Update a dependency (within constraints set in `pyproject.toml`): `poetry update foo-bar`
- Update the lockfile with the contents of `pyproject.toml` (for instance, when getting a conflict after a rebase): `poetry lock`
- Check if `pyproject.toml` is in sync with `poetry.lock`: `poetry lock --check`

### Commit messages

In this project we enforce [conventional commits](https://www.conventionalcommits.org) guidelines for commit messages. The usage of [commitizen](https://commitizen-tools.github.io/commitizen/) is recommended, but not required. Story numbers (JIRA, etc.) must go in the scope section of the commit message. Example message:

```
feat(#): add new feature x
```
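For a feel of what a conventional commit header with a scope looks like to a linter, the sketch below parses the `type(scope): description` form. The pattern is a deliberate simplification and an assumption for illustration; it is not the rule set used by this project's `lint-commit` recipe, and `parse_commit_header` is a hypothetical helper.

```python
import re
from typing import Optional

# Simplified header pattern: type(scope): description.
# The project's real commit lint rules may be stricter.
HEADER = re.compile(r"^(?P<type>[a-z]+)\((?P<scope>[^)]+)\): (?P<description>\S.*)$")


def parse_commit_header(header: str) -> Optional[dict]:
    """Split a commit header into type, scope, and description, or return None."""
    match = HEADER.match(header)
    return match.groupdict() if match else None
```

For instance, `parse_commit_header("feat(#123): add new feature")` yields the scope `#123`, which is where the story number is expected to go.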

Merge requests must be approved before they can be merged to the `main` branch, and all the steps in the `ci` pipeline must pass.

This project includes an optional pre-commit configuration. Note that all necessary checks are always executed in the ci pipeline, but configuring pre-commit to execute some of them can be beneficial to reduce late errors. To do so, simply execute the following just recipe:

```sh
just pre-commit
```

Feel free to create [GitHub Issues](https://github.com/elementsinteractive/lgtm-ai/issues) for any feature request, bug, or suggestion!

## Contributors ✨

Thanks go to these wonderful people ([emoji key](https://allcontributors.org/docs/en/emoji-key)):

| Contributor | Contributions |
| --- | --- |
| Sergio Castillo | 💻 🎨 🤔 🚧 |
| Jakub Bożanowski | 💻 🤔 🚧 |
| Sacha Brouté | 💻 🤔 |
| Daniel | 🤔 |
| Rooni | 💻 |

This project follows the [all-contributors](https://github.com/all-contributors/all-contributors) specification. Contributions of any kind welcome!