https://github.com/facebookresearch/bipedal-skills

Bipedal Skills Benchmark for Reinforcement Learning

- Host: GitHub

- URL: https://github.com/facebookresearch/bipedal-skills

- Owner: facebookresearch

- License: mit

- Archived: true

- Created: 2021-07-27T16:46:50.000Z (over 4 years ago)

- Default Branch: main

- Last Pushed: 2022-10-27T07:04:13.000Z (about 3 years ago)

- Last Synced: 2025-02-23T00:19:43.930Z (9 months ago)

- Language: Python

- Homepage: https://facebookresearch.github.io/hsd3

- Size: 424 KB

- Stars: 26

- Watchers: 7

- Forks: 2

- Open Issues: 0

Metadata Files:

- Readme: README.md

- Contributing: CONTRIBUTING.md

- License: LICENSE

- Code of conduct: CODE_OF_CONDUCT.md

Awesome Lists containing this project

- awesome-legged-locomotion-learning: **bipedal-skills** (Code / Quadrupeds)

README

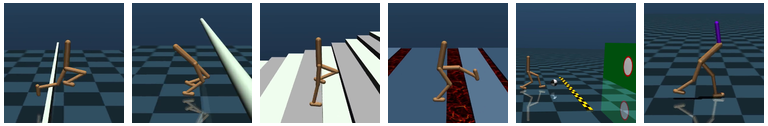

# The Bipedal Skills Benchmark

The bipedal skills benchmark is a suite of reinforcement learning environments implemented for the MuJoCo physics simulator. It aims to provide a set of tasks that demand a variety of motor skills beyond locomotion, and is intended for evaluating skill discovery and hierarchical learning methods. The majority of tasks exhibit a sparse reward structure.

This benchmark was introduced in [Hierarchical Skills for Efficient Exploration](https://facebookresearch.github.io/hsd3).

## Usage

To run the environments, a working MuJoCo setup (version 2.0 or higher) is required. You can follow the [installation steps of dm_control](https://github.com/deepmind/dm_control/#requirements-and-installation) to set it up.

Afterwards, install the Python package with pip:

```sh
pip install bipedal-skills
```

To install the package from a working copy, do:

```sh
pip install .
```

All tasks are exposed and registered as Gym environments once the `bisk` module is imported:

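```py
import gym
import bisk  # importing bisk registers the Bisk* environments with Gym

env = gym.make('BiskHurdles-v1', robot='Walker')
# Alternatively, select the robot through the environment name
env = gym.make('BiskHurdlesWalker-v1')
```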
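Registration on import is a common Python pattern: module-level code in the package calls a registration function as soon as `import bisk` runs, which is why the import must precede `gym.make(...)`. The toy registry below is a hypothetical, self-contained illustration of that pattern and of the two naming styles shown above; `register`, `make`, `HurdlesEnv` and `_REGISTRY` are illustrative names and do not reflect the actual internals of bisk or Gym. The robot names are taken from the model list in the License section, and the sketch makes no claim about which robots each task supports.

```python
from typing import Any, Callable, Dict

# Hypothetical toy registry illustrating the "register on import" pattern.
# This is NOT bisk's or Gym's actual implementation.
_REGISTRY: Dict[str, Callable[..., Any]] = {}


def register(env_id: str, entry_point: Callable[..., Any], **default_kwargs: Any) -> None:
    """Record a factory under `env_id`, with optional default keyword arguments."""
    # Keyword arguments passed to make() override the registered defaults.
    _REGISTRY[env_id] = lambda **kwargs: entry_point(**{**default_kwargs, **kwargs})


def make(env_id: str, **kwargs: Any) -> Any:
    """Look up `env_id` and build an environment, forwarding keyword arguments."""
    if env_id not in _REGISTRY:
        raise KeyError(f"Unknown environment id: {env_id}")
    return _REGISTRY[env_id](**kwargs)


class HurdlesEnv:
    """Hypothetical stand-in for a task class parameterized by robot."""

    def __init__(self, robot: str) -> None:
        self.robot = robot


# Module-level calls like these execute when the defining module is imported.
# Generic id: the robot must be passed as a keyword argument.
register("BiskHurdles-v1", HurdlesEnv)
# Robot-specific ids: the robot is baked in as a default keyword argument.
for robot_name in ("HalfCheetah", "Walker", "Humanoid"):
    register(f"BiskHurdles{robot_name}-v1", HurdlesEnv, robot=robot_name)
```

With this toy registry, `make("BiskHurdles-v1", robot="Walker")` and `make("BiskHurdlesWalker-v1")` construct equivalent objects, mirroring the two `gym.make` calls in the README.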

A detailed description of the tasks can be found in the [corresponding publication](https://arxiv.org/abs/2110.10809).

## Evaluation Protocol

For evaluating agents, we recommend estimating returns on 50 environment instances with distinct seeds. This can be achieved sequentially or by using one of Gym's vector wrappers:

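```py
import gym
import bisk  # registers the Bisk* environments
import numpy as np
from gym.vector import SyncVectorEnv

# Sequential evaluation
env = gym.make('BiskHurdlesWalker-v1')
retrns = []
for i in range(50):
    obs, _ = env.reset(seed=i)
    retrn = 0
    while True:
        # Retrieve `action` from agent (random actions serve as a placeholder)
        action = env.action_space.sample()
        obs, reward, terminated, truncated, info = env.step(action)
        retrn += reward
        if terminated or truncated:
            # End of episode: record the accumulated return
            retrns.append(retrn)
            break
print(f'Average return: {sum(retrns)/len(retrns)}')

# Batched evaluation
n = 50
env = SyncVectorEnv([lambda: gym.make('BiskHurdlesWalker-v1')] * n)
retrns = np.zeros(n)
dones = np.zeros(n, dtype=bool)
# An integer seed is expanded to seeds 0, 1, ..., n-1, one per sub-environment
obs, _ = env.reset(seed=0)
while not dones.all():
    # Retrieve `action` from agent (random actions serve as a placeholder)
    action = env.action_space.sample()
    obs, reward, terminated, truncated, info = env.step(action)
    # Sub-environments reset automatically once their episode ends, so only
    # accumulate rewards for episodes that are still running
    retrns += reward * np.logical_not(dones)
    dones |= (terminated | truncated)
print(f'Average return: {retrns.mean()}')
```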
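The protocol itself (one episode per distinct seed, then average the returns) does not depend on MuJoCo. The sketch below factors the sequential loop into a reusable function and exercises it with a hypothetical `ToyEnv` that only mimics the Gym 0.26-style `reset(seed=...)` and five-value `step` signatures used above. `ToyEnv`, `evaluate` and the example policies are illustrative names, not part of bisk.

```python
import random
import statistics
from typing import Callable, List, Optional, Tuple


class ToyEnv:
    """Hypothetical stand-in mimicking Gym-style reset/step signatures.

    The observation is a random bit; the reward is 1.0 when the action equals
    the current observation. Episodes are truncated after `horizon` steps.
    """

    def __init__(self, horizon: int = 10) -> None:
        self.horizon = horizon
        self._rng = random.Random()
        self._t = 0
        self._obs = 0

    def reset(self, seed: Optional[int] = None) -> Tuple[int, dict]:
        if seed is not None:
            self._rng.seed(seed)  # identical seeds give identical episodes
        self._t = 0
        self._obs = self._rng.randint(0, 1)
        return self._obs, {}

    def step(self, action: int) -> Tuple[int, float, bool, bool, dict]:
        reward = 1.0 if action == self._obs else 0.0
        self._t += 1
        self._obs = self._rng.randint(0, 1)
        truncated = self._t >= self.horizon
        return self._obs, reward, False, truncated, {}


def evaluate(env, policy: Callable[[int], int], n_episodes: int = 50) -> List[float]:
    """Run one episode per seed 0..n_episodes-1 and return each episode's return."""
    returns = []
    for seed in range(n_episodes):
        obs, _ = env.reset(seed=seed)
        episode_return = 0.0
        while True:
            obs, reward, terminated, truncated, _ = env.step(policy(obs))
            episode_return += reward
            if terminated or truncated:
                break
        returns.append(episode_return)
    return returns


if __name__ == "__main__":
    returns = evaluate(ToyEnv(), policy=lambda obs: obs, n_episodes=50)
    print(f"Average return: {statistics.mean(returns)}")
```

Because each episode is seeded explicitly, re-running the evaluation with the same policy reproduces the same returns, which makes comparisons between agents less noisy.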

## License

The bipedal skills benchmark is MIT licensed, as found in the LICENSE file.

Model definitions have been adapted from:

- [Gym](https://github.com/openai/gym) (HalfCheetah)

- [dm_control](https://github.com/deepmind/dm_control/) (Walker, Humanoid)