https://github.com/fepegar/gestures-miccai-2021

Code for "Pérez-García et al. 2021, Transfer Learning of Deep Spatiotemporal Networks to Model Arbitrarily Long Videos of Seizures, MICCAI 2021".


- Host: GitHub

- URL: https://github.com/fepegar/gestures-miccai-2021

- Owner: fepegar

- Created: 2021-07-05T10:07:44.000Z (over 4 years ago)

- Default Branch: master

- Last Pushed: 2024-03-16T00:06:49.000Z (over 1 year ago)

- Last Synced: 2025-02-06T12:47:54.607Z (9 months ago)

- Topics: classification, cnn, epilepsy, miccai, miccai-2021, video, video-understanding

- Language: Python

- Homepage: https://doi.org/10.1007/978-3-030-87240-3_32

- Size: 788 KB

- Stars: 6

- Watchers: 3

- Forks: 3

- Open Issues: 0


Metadata Files:

- Readme: README.md


# GESTURES – MICCAI 2021

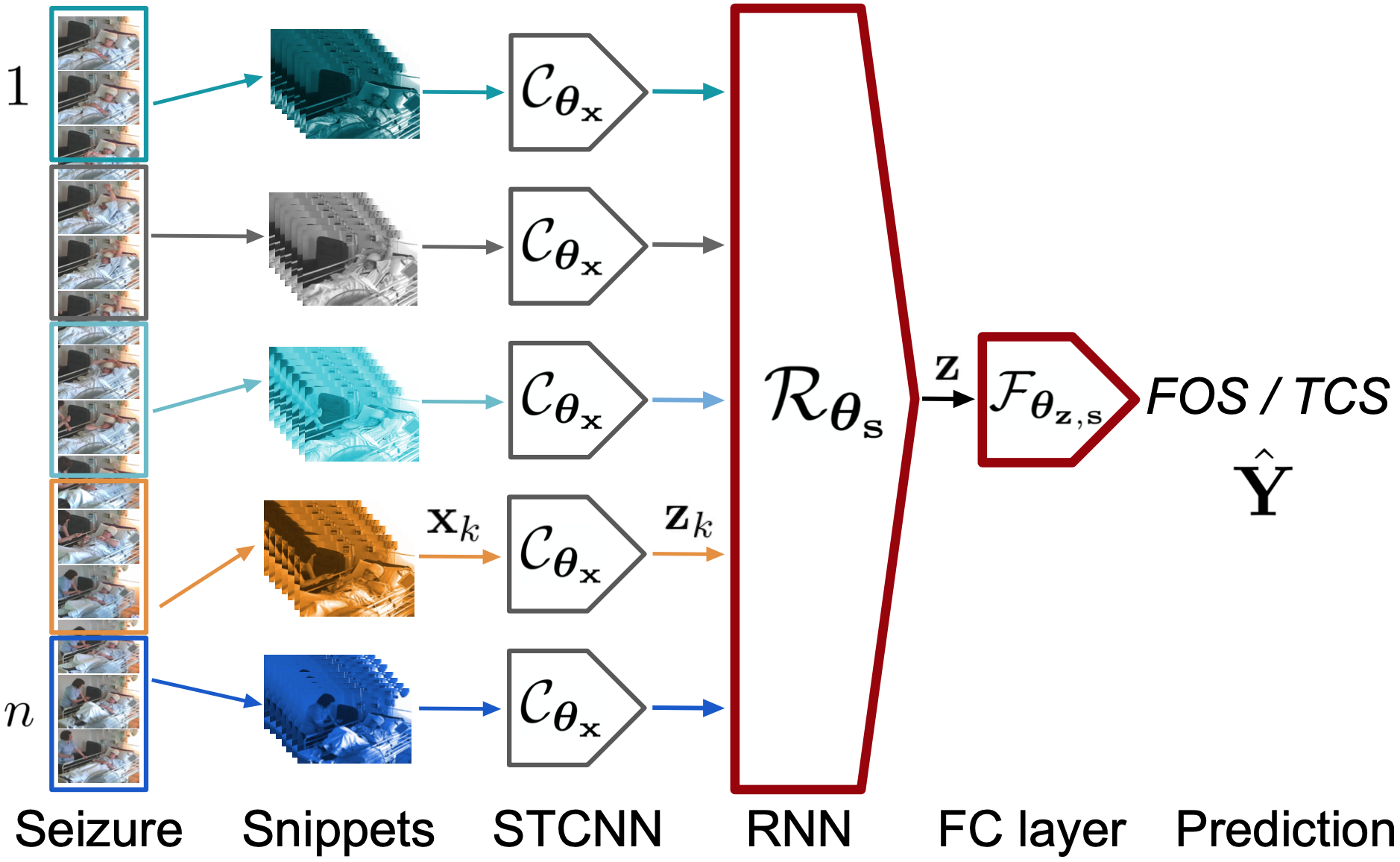

This repository contains the training scripts used in [Pérez-García et al., 2021, *Transfer Learning of Deep Spatiotemporal Networks to Model Arbitrarily Long Videos of Seizures*, 24th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI)](https://doi.org/10.1007/978-3-030-87240-3_32).

The dataset of extracted features is publicly available at the [UCL Research Data Repository](https://doi.org/10.5522/04/14781771).

GESTURES stands for **g**eneralized **e**pileptic **s**eizure classification from video-**t**elemetry **u**sing **re**current convolutional neural network**s**.

## Citation

If you use this code or the dataset for your research, please cite the [paper](https://doi.org/10.1007/978-3-030-87240-3_32) and the [dataset](https://doi.org/10.5522/04/14781771) appropriately.

## Installation

Using `conda` is recommended:

```shell
conda create -n miccai-gestures python=3.7 ipython -y && conda activate miccai-gestures
```

Using `light-the-torch` is recommended to install PyTorch, as it detects the local CUDA setup and picks the matching build automatically:

```shell
pip install light-the-torch
ltt install torch==1.7.0 torchvision==0.4.2
```

Then, clone this repository and install the rest of the requirements:

```shell
git clone https://github.com/fepegar/gestures-miccai-2021.git
cd gestures-miccai-2021
pip install -r requirements.txt
```

Finally, download the dataset:

```shell
curl -L -o dataset.zip https://ndownloader.figshare.com/files/28668096
unzip dataset.zip -d dataset
```
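To check that the archive was extracted as expected, a short script can count the files under `dataset/` by extension. This is only a sketch: the helper name `summarize_directory` is hypothetical and not part of this repository, and no particular layout of the dataset is assumed.

```python
from collections import Counter
from pathlib import Path


def summarize_directory(root):
    """Count files under `root` by extension (hypothetical helper, not part of the repository)."""
    root = Path(root)
    counts = Counter(
        path.suffix or "<no extension>"
        for path in root.rglob("*")
        if path.is_file()
    )
    return dict(counts)


if __name__ == "__main__":
    # "dataset" matches the target directory of the `unzip` command above.
    dataset_dir = Path("dataset")
    if dataset_dir.is_dir():
        for suffix, count in sorted(summarize_directory(dataset_dir).items()):
            print(f"{suffix}: {count}")
    else:
        print("dataset/ not found; run the download step first")
```

An empty result or a missing directory usually means the download or the `unzip` step did not complete.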

## Training

```shell
GAMMA=4  # gamma parameter for Beta distribution
AGG=blstm  # aggregation mode. Can be "mean", "lstm" or "blstm"
N=16  # number of segments
K=0  # fold for k-fold cross-validation
python train_features_lstm.py \
  --print-config \
  with \
  experiment_name=lstm_feats_jitter_${GAMMA}_agg_${AGG}_segs_${N} \
  jitter_mode=${GAMMA} \
  aggregation=${AGG} \
  num_segments=${N} \
  fold=${K}
```
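The `GAMMA` and `N` settings suggest that each video is split into `N` segments and that a Beta distribution controls where inside each segment a snippet is taken. The sketch below illustrates that idea under stated assumptions: a symmetric Beta(γ, γ) distribution, fixed-length snippets, and frame-based indexing. The function name, its parameters, and the example values are illustrative and are not taken from the training scripts.

```python
import random


def sample_snippet_starts(num_frames, num_segments, snippet_length, gamma, rng=None):
    """Pick one snippet start frame per segment (hypothetical helper, not from the repo)."""
    rng = rng or random.Random()
    segment_length = num_frames / num_segments
    # Room in which a snippet start can move while the snippet stays inside its segment.
    slack = max(segment_length - snippet_length, 0)
    last_valid_start = max(num_frames - snippet_length, 0)
    starts = []
    for index in range(num_segments):
        segment_start = index * segment_length
        # Assumption: symmetric Beta(gamma, gamma) jitter within the segment.
        offset = rng.betavariate(gamma, gamma) * slack
        starts.append(min(int(segment_start + offset), last_valid_start))
    return starts


if __name__ == "__main__":
    # Example values only: a 1600-frame video, 16 segments, 8-frame snippets, gamma = 4.
    print(sample_snippet_starts(1600, 16, 8, gamma=4, rng=random.Random(0)))
```

With `gamma=1` the Beta distribution is uniform, so a snippet can start anywhere in its segment with equal probability; larger values concentrate the samples around the middle of each segment.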