https://github.com/francescosaveriozuppichini/glasses

High-quality Neural Networks for Computer Vision 😎


- Host: GitHub

- URL: https://github.com/francescosaveriozuppichini/glasses

- Owner: FrancescoSaverioZuppichini

- License: mit

- Created: 2020-07-15T20:11:25.000Z (almost 5 years ago)

- Default Branch: master

- Last Pushed: 2022-09-02T12:58:47.000Z (over 2 years ago)

- Last Synced: 2025-04-04T12:28:27.669Z (about 1 month ago)

- Topics: classification, computer-vision, convolutional-networks, deep-learning, deep-neural-networks, machine-learning, neural-network, pretrained-models, pytorch

- Language: Jupyter Notebook

- Homepage: https://francescosaveriozuppichini.github.io/glasses-webapp/

- Size: 129 MB

- Stars: 444

- Watchers: 14

- Forks: 35

- Open Issues: 31


Metadata Files:

- Readme: README.ipynb

- License: LICENSE

- Code of conduct: CODE_OF_CONDUCT.md


README

{

"cells": [

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"%load_ext autoreload\n",

"%autoreload 2"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"# Glasses 😎\n",

"\n",

"\n",

"\n",

"[](https://codecov.io/gh/FrancescoSaverioZuppichini/glasses)\n",

"\n",

"Compact, concise and customizable \n",

"deep learning computer vision library\n",

"\n",

"**Models have been stored into the [hugging face hub!](https://huggingface.co/glasses)**\n",

"\n",

"Doc is [here](https://francescosaveriozuppichini.github.io/glasses/index.html)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## TL;TR\n",

"\n",

"This library has\n",

"\n",

"- human readable code, no *research code*\n",

"- common component are shared across [models](#Models)\n",

"- [same APIs](#classification) for all models (you learn them once and they are always the same)\n",

"- clear and easy to use model constomization (see [here](#block))\n",

"- [classification](#classification) and [segmentation](#segmentation) \n",

"- emoji in the name ;)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Stuff implemented so far:\n",

"\n",

"- [Training data-efficient image transformers & distillation through attention](https://arxiv.org/pdf/2010.11929.pdf)\n",

"- [Vision Transformer - An Image Is Worth 16x16 Words: Transformers For Image Recognition At Scale](https://arxiv.org/pdf/2010.11929.pdf)\n",

"- [ResNeSt: Split-Attention Networks](https://arxiv.org/abs/2004.08955) \n",

"- [AlexNet- ImageNet Classification with Deep Convolutional Neural Networks](https://papers.nips.cc/paper/2012/file/c399862d3b9d6b76c8436e924a68c45b-Paper.pdf)\n",

"- [DenseNet - Densely Connected Convolutional Networks](https://arxiv.org/abs/1608.06993)\n",

"- [EfficientNet - EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks](https://arxiv.org/abs/1905.11946)\n",

"- [EfficientNetLite - Higher accuracy on vision models with EfficientNet-Lite](https://blog.tensorflow.org/2020/03/higher-accuracy-on-vision-models-with-efficientnet-lite.html)\n",

"- [FishNet - FishNet: A Versatile Backbone for Image, Region, and Pixel Level Prediction\n",

"](https://arxiv.org/abs/1901.03495)\n",

"- [MobileNet - MobileNetV2: Inverted Residuals and Linear Bottlenecks](https://arxiv.org/pdf/1801.04381.pdf)\n",

"- [RegNet - Designing Network Design Spaces](https://arxiv.org/abs/2003.13678)\n",

"- [ResNet - Deep Residual Learning for Image Recognition](https://arxiv.org/abs/1512.03385)\n",

"- [ResNetD - Bag of Tricks for Image Classification with Convolutional Neural Networks](https://arxiv.org/pdf/1812.01187.pdf)\n",

"- [ResNetXt - Aggregated Residual Transformations for Deep Neural Networks](https://arxiv.org/pdf/1611.05431.pdf)\n",

"- [SEResNet - Concurrent Spatial and Channel Squeeze & Excitation in Fully Convolutional Networks](https://arxiv.org/abs/1803.02579)\n",

"- [VGG - Very Deep Convolutional Networks For Large-scale Image Recognition](https://arxiv.org/pdf/1409.1556.pdf)\n",

"- [WideResNet - Wide Residual Networks](https://arxiv.org/pdf/1605.07146.pdf)\n",

"- [FPN - Feature Pyramid Networks for Object Detection](https://arxiv.org/abs/1612.03144)\n",

"- [PFPN - Panoptic Feature Pyramid Networks](https://arxiv.org/pdf/1901.02446.pdf)\n",

"- [UNet - U-Net: Convolutional Networks for Biomedical Image Segmentation](https://arxiv.org/abs/1505.04597)\n",

"- [Squeeze and Excitation - Concurrent Spatial and Channel Squeeze & Excitation in Fully Convolutional Networks](https://arxiv.org/abs/1803.02579)\n",

"- [ECA - ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks](https://arxiv.org/pdf/1910.03151.pdf)\n",

"- [DropBlock: A regularization method for convolutional networks](https://arxiv.org/abs/1810.12890)\n",

"- [Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition](https://arxiv.org/pdf/1406.4729.pdf)\n",

"- [Score-CAM: Score-Weighted Visual Explanations for Convolutional Neural Networks](https://arxiv.org/abs/1910.01279)\n",

"- [Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization](https://arxiv.org/abs/1610.02391)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Installation\n",

"\n",

"You can install `glasses` using pip by running\n",

"\n",

"```\n",

"pip install git+https://github.com/FrancescoSaverioZuppichini/glasses\n",

"```"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Motivations\n",

"\n",

"Almost all existing implementations of the most famous model are written with very bad coding practices, what today is called *research code*. I struggled to understand some of the implementations even if in the end were just a few lines of code. \n",

"\n",

"Most of them are missing a global structure, they used tons of code repetition, they are not easily customizable and not tested. Since I do computer vision for living, I needed a way to make my life easier."

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Getting started\n",

"\n",

"The API are shared across **all** models!"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"scrolled": true

},

"outputs": [],

"source": [

"import torch\n",

"from glasses.models import AutoModel, AutoTransform\n",

"# load one model\n",

"model = AutoModel.from_pretrained('resnet18').eval()\n",

"# and its correct input transformation\n",

"tr = AutoTransform.from_name('resnet18')\n",

"model.summary(device='cpu' ) # thanks to torchinfo"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# at any time, see all the models\n",

"AutoModel.models_table() "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"```python\n",

" Models \n",

"┏━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━┓\n",

"┃ Name ┃ Pretrained ┃\n",

"┡━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━┩\n",

"│ resnet18 │ true │\n",

"│ resnet26 │ true │\n",

"│ resnet26d │ true │\n",

"│ resnet34 │ true │\n",

"│ resnet34d │ true │\n",

"│ resnet50 │ true │\n",

"...\n",

"```"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Interpretability"

]

},

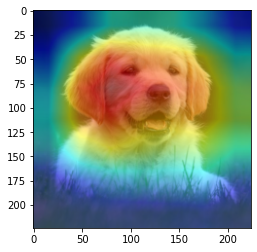

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"import requests\n",

"from PIL import Image\n",

"from io import BytesIO\n",

"from glasses.interpretability import GradCam, SaliencyMap\n",

"from torchvision.transforms import Normalize\n",

"# get a cute dog 🐶\n",

"r = requests.get('https://i.insider.com/5df126b679d7570ad2044f3e?width=700&format=jpeg&auto=webp')\n",

"im = Image.open(BytesIO(r.content))\n",

"# un-normalize when done\n",

"mean, std = tr.transforms[-1].mean, tr.transforms[-1].std\n",

"postprocessing = Normalize(-mean / std, (1.0 / std))\n",

"# apply preprocessing\n",

"x = tr(im).unsqueeze(0)\n",

"_ = model.interpret(x, using=GradCam(), postprocessing=postprocessing).show()"

]

},
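{
"cell_type": "markdown",
"metadata": {},
"source": [
"The `postprocessing` transform above relies on a small identity: applying `Normalize(-mean / std, 1 / std)` to a normalized value gives back the original value, because `Normalize` computes `(x - mean) / std` per channel. The following is a plain-Python sketch of that arithmetic for a single channel, not the library's code; `normalize` and the `pixel` value are hypothetical names chosen for illustration.\n",
"\n",
"```python\n",
"def normalize(value, mean, std):\n",
"    # Same per-channel formula that torchvision's Normalize applies\n",
"    return (value - mean) / std\n",
"\n",
"mean, std = 0.485, 0.229  # red-channel ImageNet statistics used in this README\n",
"pixel = 0.75              # hypothetical pixel value in [0, 1]\n",
"\n",
"normalized = normalize(pixel, mean, std)\n",
"# Un-normalize by normalizing again with the inverted statistics\n",
"restored = normalize(normalized, -mean / std, 1.0 / std)\n",
"print(abs(restored - pixel) < 1e-9)\n",
"```\n",
"\n",
"The second call expands to `normalized * std + mean`, which is why the image shown by the interpretability tools has its original colors."
]
},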

{

"cell_type": "markdown",

"metadata": {},

"source": [

""

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Classification"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"scrolled": true

},

"outputs": [],

"source": [

"from glasses.models import ResNet\n",

"from torch import nn\n",

"# change activation\n",

"model = AutoModel.from_pretrained('resnet18', activation = nn.SELU).eval()\n",

"# or directly from the model class\n",

"ResNet.resnet18(activation = nn.SELU)\n",

"# change number of classes\n",

"ResNet.resnet18(n_classes=100)\n",

"# freeze only the convolution weights\n",

"model = AutoModel.from_pretrained('resnet18') # or also ResNet.resnet18(pretrained=True) \n",

"model.freeze(who=model.encoder)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Get the **inner features**"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# model.encoder has special hooks ready to be activated\n",

"# call the .features to trigger them\n",

"model.encoder.features\n",

"x = torch.randn((1, 3, 224, 224))\n",

"model(x)\n",

"[f.shape for f in model.encoder.features]"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Change inner block"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# what about resnet with inverted residuals?\n",

"from glasses.models.classification.efficientnet import InvertedResidualBlock\n",

"ResNet.resnet18(block = InvertedResidualBlock)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Segmentation"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"from functools import partial\n",

"from glasses.models.segmentation.unet import UNet, UNetDecoder\n",

"# vanilla Unet\n",

"unet = UNet()\n",

"# let's change the encoder\n",

"unet = UNet.from_encoder(partial(AutoModel.from_name, 'efficientnet_b1'))\n",

"# mmm I want more layers in the decoder!\n",

"unet = UNet(decoder=partial(UNetDecoder, widths=[256, 128, 64, 32, 16]))\n",

"# maybe resnet was better\n",

"unet = UNet(encoder=lambda **kwargs: ResNet.resnet26(**kwargs).encoder)\n",

"# same API\n",

"# unet.summary(input_shape=(1,224,224))\n",

"\n",

"unet"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### More examples"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"# change the decoder part\n",

"model = ResNet.resnet18(pretrained=True)\n",

"my_head = nn.Sequential(\n",

" nn.AdaptiveAvgPool2d((1,1)),\n",

" nn.Flatten(),\n",

" nn.Linear(model.encoder.widths[-1], 512),\n",

" nn.Dropout(0.2),\n",

" nn.ReLU(),\n",

" nn.Linear(512, 1000))\n",

"\n",

"model.head = my_head\n",

"\n",

"x = torch.rand((1,3,224,224))\n",

"model(x).shape #torch.Size([1, 1000])"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Pretrained Models\n",

"\n",

"**I am currently working on the pretrained models and the best way to make them available**\n",

"\n",

"This is a list of all the pretrained models available so far!. They are all trained on *ImageNet*.\n",

"\n",

"I used a `batch_size=64` and a GTX 1080ti to evaluale the models.\n",

"\n",

"| | top1 | top5 | time | batch_size |\n",

"|:-----------------------|--------:|--------:|----------:|-------------:|\n",

"| vit_base_patch16_384 | 0.842 | 0.9722 | 1130.81 | 64 |\n",

"| vit_large_patch16_224 | 0.82836 | 0.96406 | 893.486 | 64 |\n",

"| eca_resnet50t | 0.82234 | 0.96172 | 241.754 | 64 |\n",

"| eca_resnet101d | 0.82166 | 0.96052 | 213.632 | 64 |\n",

"| efficientnet_b3 | 0.82034 | 0.9603 | 199.599 | 64 |\n",

"| regnety_032 | 0.81958 | 0.95964 | 136.518 | 64 |\n",

"| vit_base_patch32_384 | 0.8166 | 0.9613 | 243.234 | 64 |\n",

"| vit_base_patch16_224 | 0.815 | 0.96018 | 306.686 | 64 |\n",

"| deit_small_patch16_224 | 0.81082 | 0.95316 | 132.868 | 64 |\n",

"| eca_resnet50d | 0.80604 | 0.95322 | 135.567 | 64 |\n",

"| resnet50d | 0.80492 | 0.95128 | 97.5827 | 64 |\n",

"| cse_resnet50 | 0.80292 | 0.95048 | 108.765 | 64 |\n",

"| efficientnet_b2 | 0.80126 | 0.95124 | 127.177 | 64 |\n",

"| eca_resnet26t | 0.79862 | 0.95084 | 155.396 | 64 |\n",

"| regnety_064 | 0.79712 | 0.94774 | 183.065 | 64 |\n",

"| regnety_040 | 0.79222 | 0.94656 | 124.881 | 64 |\n",

"| resnext101_32x8d | 0.7921 | 0.94556 | 290.38 | 64 |\n",

"| regnetx_064 | 0.79066 | 0.94456 | 176.3 | 64 |\n",

"| wide_resnet101_2 | 0.7891 | 0.94344 | 277.755 | 64 |\n",

"| regnetx_040 | 0.78486 | 0.94242 | 122.619 | 64 |\n",

"| wide_resnet50_2 | 0.78464 | 0.94064 | 201.634 | 64 |\n",

"| efficientnet_b1 | 0.7831 | 0.94096 | 98.7143 | 64 |\n",

"| resnet152 | 0.7825 | 0.93982 | 186.191 | 64 |\n",

"| regnetx_032 | 0.7792 | 0.93996 | 319.558 | 64 |\n",

"| resnext50_32x4d | 0.77628 | 0.9368 | 114.325 | 64 |\n",

"| regnety_016 | 0.77604 | 0.93702 | 96.547 | 64 |\n",

"| efficientnet_b0 | 0.77332 | 0.93566 | 67.2147 | 64 |\n",

"| resnet101 | 0.77314 | 0.93556 | 134.148 | 64 |\n",

"| densenet161 | 0.77146 | 0.93602 | 239.388 | 64 |\n",

"| resnet34d | 0.77118 | 0.93418 | 59.9938 | 64 |\n",

"| densenet201 | 0.76932 | 0.9339 | 158.514 | 64 |\n",

"| regnetx_016 | 0.76684 | 0.9328 | 91.7536 | 64 |\n",

"| resnet26d | 0.766 | 0.93188 | 70.6453 | 64 |\n",

"| regnety_008 | 0.76238 | 0.93026 | 54.1286 | 64 |\n",

"| resnet50 | 0.76012 | 0.92934 | 89.7976 | 64 |\n",

"| densenet169 | 0.75628 | 0.9281 | 127.077 | 64 |\n",

"| resnet26 | 0.75394 | 0.92584 | 65.5801 | 64 |\n",

"| resnet34 | 0.75096 | 0.92246 | 56.8985 | 64 |\n",

"| regnety_006 | 0.75068 | 0.92474 | 55.5611 | 64 |\n",

"| regnetx_008 | 0.74788 | 0.92194 | 57.9559 | 64 |\n",

"| densenet121 | 0.74472 | 0.91974 | 104.13 | 64 |\n",

"| deit_tiny_patch16_224 | 0.7437 | 0.91898 | 66.662 | 64 |\n",

"| vgg19_bn | 0.74216 | 0.91848 | 169.357 | 64 |\n",

"| regnety_004 | 0.73766 | 0.91638 | 68.4893 | 64 |\n",

"| regnetx_006 | 0.73682 | 0.91568 | 81.4703 | 64 |\n",

"| vgg16_bn | 0.73476 | 0.91536 | 150.317 | 64 |\n",

"| vgg19 | 0.7236 | 0.9085 | 155.851 | 64 |\n",

"| regnetx_004 | 0.72298 | 0.90644 | 58.0049 | 64 |\n",

"| vgg16 | 0.71628 | 0.90368 | 135.398 | 64 |\n",

"| vgg13_bn | 0.71618 | 0.9036 | 129.077 | 64 |\n",

"| efficientnet_lite0 | 0.7041 | 0.89894 | 62.4211 | 64 |\n",

"| vgg11_bn | 0.70408 | 0.89724 | 86.9459 | 64 |\n",

"| vgg13 | 0.69984 | 0.89306 | 116.052 | 64 |\n",

"| regnety_002 | 0.6998 | 0.89422 | 46.804 | 64 |\n",

"| resnet18 | 0.69644 | 0.88982 | 46.2029 | 64 |\n",

"| vgg11 | 0.68872 | 0.88658 | 79.4136 | 64 |\n",

"| regnetx_002 | 0.68658 | 0.88244 | 45.9211 | 64 |\n",

"\n",

"Assuming you want to load `efficientnet_b1`:"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"from glasses.models import EfficientNet, AutoModel, AutoTransform\n",

"\n",

"# load it using AutoModel\n",

"model = AutoModel.from_pretrained('efficientnet_b1').eval()\n",

"# or from its own class\n",

"model = EfficientNet.efficientnet_b1(pretrained=True)\n",

"# you may also need to get the correct transformation that must be applied on the input\n",

"tr = AutoTransform.from_name('efficientnet_b1')"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"In this case, `tr` is \n",

"\n",

"```\n",

"Compose(\n",

" Resize(size=240, interpolation=PIL.Image.BICUBIC)\n",

" CenterCrop(size=(240, 240))\n",

" ToTensor()\n",

" Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225))\n",

")\n",

"```"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Deep Customization\n",

"\n",

"All models are composed by sharable parts:\n",

"- `Block`\n",

"- `Layer`\n",

"- `Encoder`\n",

"- `Head`\n",

"- `Decoder`\n",

"\n",

"### Block\n",

"\n",

"Each model has its building block, they are noted by `*Block`. In each block, all the weights are in the `.block` field. This makes it very easy to customize one specific model. "

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"from glasses.models.classification.vgg import VGGBasicBlock\n",

"from glasses.models.classification.resnet import ResNetBasicBlock, ResNetBottleneckBlock, ResNetBasicPreActBlock, ResNetBottleneckPreActBlock\n",

"from glasses.models.classification.senet import SENetBasicBlock, SENetBottleneckBlock\n",

"from glasses.models.classification.resnetxt import ResNetXtBottleNeckBlock\n",

"from glasses.models.classification.densenet import DenseBottleNeckBlock\n",

"from glasses.models.classification.wide_resnet import WideResNetBottleNeckBlock\n",

"from glasses.models.classification.efficientnet import EfficientNetBasicBlock"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"For example, if we want to add Squeeze and Excitation to the resnet bottleneck block, we can just"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"scrolled": false

},

"outputs": [],

"source": [

"from glasses.nn.att import SpatialSE\n",

"from glasses.models.classification.resnet import ResNetBottleneckBlock\n",

"\n",

"class SEResNetBottleneckBlock(ResNetBottleneckBlock):\n",

" def __init__(self, in_features: int, out_features: int, squeeze: int = 16, *args, **kwargs):\n",

" super().__init__(in_features, out_features, *args, **kwargs)\n",

" # all the weights are in block, we want to apply se after the weights\n",

" self.block.add_module('se', SpatialSE(out_features, reduction=squeeze))\n",

" \n",

"SEResNetBottleneckBlock(32, 64)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Then, we can use the class methods to create the new models following the existing architecture blueprint, for example, to create `se_resnet50`"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"scrolled": true

},

"outputs": [],

"source": [

"ResNet.resnet50(block=ResNetBottleneckBlock)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"The cool thing is each model has the same api, if I want to create a vgg13 with the `ResNetBottleneckBlock` I can just"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"scrolled": true

},

"outputs": [],

"source": [

"from glasses.models import VGG\n",

"model = VGG.vgg13(block=SEResNetBottleneckBlock)\n",

"model.summary()"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"Some specific model can require additional parameter to the block, for example `MobileNetV2` also required a `expansion` parameter so our `SEResNetBottleneckBlock` won't work. "

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Layer\n",

"\n",

"A `Layer` is a collection of blocks, it is used to stack multiple blocks together following some logic. For example, `ResNetLayer`"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"scrolled": true

},

"outputs": [],

"source": [

"from glasses.models.classification.resnet import ResNetLayer\n",

"\n",

"ResNetLayer(64, 128, depth=2)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Encoder\n",

"\n",

"The encoder is what encoders a vector, so the convolution layers. It has always two very important parameters.\n",

"\n",

"- widths\n",

"- depths\n",

"\n",

"\n",

"**widths** is the wide at each layer, so how much features there are\n",

"**depths** is the depth at each layer, so how many blocks there are\n",

"\n",

"For example, `ResNetEncoder` will creates multiple `ResNetLayer` based on the len of `widths` and `depths`. Let's see some example."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"scrolled": true

},

"outputs": [],

"source": [

"from glasses.models.classification.resnet import ResNetEncoder\n",

"# 3 layers, with 32,64,128 features and 1,2,3 block each\n",

"ResNetEncoder(\n",

" widths=[32,64,128],\n",

" depths=[1,2,3])\n"

]

},
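{
"cell_type": "markdown",
"metadata": {},
"source": [
"To make the pairing of `widths` and `depths` concrete, here is a plain-Python sketch of how one layer per `(width, depth)` pair could be planned, with each layer's input features taken from the previous layer's output. `plan_layers` and the starting value of 64 input features are assumptions made for illustration; this is not how `ResNetEncoder` is actually implemented.\n",
"\n",
"```python\n",
"def plan_layers(in_features, widths, depths):\n",
"    # Hypothetical helper, not part of glasses\n",
"    assert len(widths) == len(depths), 'one depth is needed per width'\n",
"    plan = []\n",
"    for out_features, depth in zip(widths, depths):\n",
"        # Each entry is (input features, output features, number of blocks)\n",
"        plan.append((in_features, out_features, depth))\n",
"        in_features = out_features\n",
"    return plan\n",
"\n",
"for layer in plan_layers(64, widths=[32, 64, 128], depths=[1, 2, 3]):\n",
"    print(layer)\n",
"```\n",
"\n",
"The two lists must have the same length, since every layer needs both a width and a depth."
]
},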

{

"cell_type": "markdown",

"metadata": {},

"source": [

"All encoders are subclass of `Encoder` that allows us to hook on specific stages to get the featuers. All you have to do is first call `.features` to notify the model you want to receive the features, and then pass an input."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {

"scrolled": true

},

"outputs": [],

"source": [

"enc = ResNetEncoder()\n",

"enc.features\n",

"enc(torch.randn((1,3,224,224)))\n",

"print([f.shape for f in enc.features])"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"**Remember** each model has always a `.encoder` field"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"from glasses.models import ResNet\n",

"\n",

"model = ResNet.resnet18()\n",

"model.encoder.widths[-1]"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"The encoder knows the number of output features, you can access them by"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"#### Features\n",

"\n",

"Each encoder can return a list of features accessable by the `.features` field. You need to call it once before in order to notify the encoder we wish to also store the features"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"from glasses.models.classification.resnet import ResNetEncoder\n",

"\n",

"x = torch.randn(1,3,224,224)\n",

"enc = ResNetEncoder()\n",

"enc.features # call it once\n",

"enc(x)\n",

"features = enc.features # now we have all the features from each layer (stage)\n",

"[print(f.shape) for f in features]\n",

"# torch.Size([1, 64, 112, 112])\n",

"# torch.Size([1, 64, 56, 56])\n",

"# torch.Size([1, 128, 28, 28])\n",

"# torch.Size([1, 256, 14, 14])"

]

},
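{
"cell_type": "markdown",
"metadata": {},
"source": [
"The pattern of calling `.features` once and then running a forward pass can be illustrated without PyTorch. The sketch below uses a hypothetical `TinyEncoder` whose stages are plain functions; it only mimics the behaviour described above and is not how glasses implements its hooks.\n",
"\n",
"```python\n",
"class TinyEncoder:\n",
"    # Hypothetical stand-in for an encoder; every stage is a plain callable\n",
"    def __init__(self, stages):\n",
"        self.stages = stages\n",
"        self._recording = False\n",
"        self._features = []\n",
"\n",
"    @property\n",
"    def features(self):\n",
"        # The first access switches recording on; later accesses return what was stored\n",
"        self._recording = True\n",
"        return self._features\n",
"\n",
"    def __call__(self, x):\n",
"        self._features = []\n",
"        for stage in self.stages:\n",
"            x = stage(x)\n",
"            if self._recording:\n",
"                self._features.append(x)\n",
"        return x\n",
"\n",
"enc = TinyEncoder([lambda x: x + 1, lambda x: x * 2])\n",
"enc(1)               # recording is still off, nothing is stored\n",
"enc.features         # call it once to switch recording on\n",
"enc(1)\n",
"print(enc.features)  # one entry per stage\n",
"```\n",
"\n",
"Storing intermediate outputs only on request keeps a normal forward pass free of extra memory use."
]
},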

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Head\n",

"\n",

"Head is the last part of the model, it usually perform the classification"

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"from glasses.models.classification.resnet import ResNetHead\n",

"\n",

"\n",

"ResNetHead(512, n_classes=1000)"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"### Decoder\n",

"\n",

"The decoder takes the last feature from the `.encoder` and decode it. This is usually done in `segmentation` models, such as Unet."

]

},

{

"cell_type": "code",

"execution_count": null,

"metadata": {},

"outputs": [],

"source": [

"from glasses.models.segmentation.unet import UNetDecoder\n",

"x = torch.randn(1,3,224,224)\n",

"enc = ResNetEncoder()\n",

"enc.features # call it once\n",

"x = enc(x)\n",

"features = enc.features\n",

"# we need to tell the decoder the first feature size and the size of the lateral features\n",

"dec = UNetDecoder(start_features=enc.widths[-1],\n",

" lateral_widths=enc.features_widths[::-1])\n",

"out = dec(x, features[::-1])\n",

"out.shape"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"**This object oriented structure allows to reuse most of the code across the models**"

]

},

{

"cell_type": "markdown",

"metadata": {},

"source": [

"| name | Parameters | Size (MB) |\n",

"|:-----------------------|:-------------|------------:|\n",

"| cse_resnet101 | 49,326,872 | 188.17 |\n",

"| cse_resnet152 | 66,821,848 | 254.91 |\n",

"| cse_resnet18 | 11,778,592 | 44.93 |\n",

"| cse_resnet34 | 21,958,868 | 83.77 |\n",

"| cse_resnet50 | 28,088,024 | 107.15 |\n",

"| deit_base_patch16_224 | 87,184,592 | 332.58 |\n",

"| deit_base_patch16_384 | 87,186,128 | 357.63 |\n",

"| deit_small_patch16_224 | 22,359,632 | 85.3 |\n",

"| deit_tiny_patch16_224 | 5,872,400 | 22.4 |\n",

"| densenet121 | 7,978,856 | 30.44 |\n",

"| densenet161 | 28,681,000 | 109.41 |\n",

"| densenet169 | 14,149,480 | 53.98 |\n",

"| densenet201 | 20,013,928 | 76.35 |\n",

"| eca_resnet101d | 44,568,563 | 212.62 |\n",

"| eca_resnet101t | 44,566,027 | 228.65 |\n",

"| eca_resnet18d | 16,014,452 | 98.41 |\n",

"| eca_resnet18t | 1,415,684 | 37.91 |\n",

"| eca_resnet26d | 16,014,452 | 98.41 |\n",

"| eca_resnet26t | 16,011,916 | 114.44 |\n",

"| eca_resnet50d | 25,576,350 | 136.65 |\n",

"| eca_resnet50t | 25,573,814 | 152.68 |\n",

"| efficientnet_b0 | 5,288,548 | 20.17 |\n",

"| efficientnet_b1 | 7,794,184 | 29.73 |\n",

"| efficientnet_b2 | 9,109,994 | 34.75 |\n",

"| efficientnet_b3 | 12,233,232 | 46.67 |\n",

"| efficientnet_b4 | 19,341,616 | 73.78 |\n",

"| efficientnet_b5 | 30,389,784 | 115.93 |\n",

"| efficientnet_b6 | 43,040,704 | 164.19 |\n",

"| efficientnet_b7 | 66,347,960 | 253.1 |\n",

"| efficientnet_b8 | 87,413,142 | 505.01 |\n",

"| efficientnet_l2 | 480,309,308 | 2332.13 |\n",

"| efficientnet_lite0 | 4,652,008 | 17.75 |\n",

"| efficientnet_lite1 | 5,416,680 | 20.66 |\n",

"| efficientnet_lite2 | 6,092,072 | 23.24 |\n",

"| efficientnet_lite3 | 8,197,096 | 31.27 |\n",

"| efficientnet_lite4 | 13,006,568 | 49.62 |\n",

"| fishnet150 | 24,960,808 | 95.22 |\n",

"| fishnet99 | 16,630,312 | 63.44 |\n",

"| mobilenet_v2 | 3,504,872 | 24.51 |\n",

"| mobilenetv2 | 3,504,872 | 13.37 |\n",

"| regnetx_002 | 2,684,792 | 10.24 |\n",

"| regnetx_004 | 5,157,512 | 19.67 |\n",

"| regnetx_006 | 6,196,040 | 23.64 |\n",

"| regnetx_008 | 7,259,656 | 27.69 |\n",

"| regnetx_016 | 9,190,136 | 35.06 |\n",

"| regnetx_032 | 15,296,552 | 58.35 |\n",

"| regnetx_040 | 22,118,248 | 97.66 |\n",

"| regnetx_064 | 26,209,256 | 114.02 |\n",

"| regnetx_080 | 34,561,448 | 147.43 |\n",

"| regnety_002 | 3,162,996 | 12.07 |\n",

"| regnety_004 | 4,344,144 | 16.57 |\n",

"| regnety_006 | 6,055,160 | 23.1 |\n",

"| regnety_008 | 6,263,168 | 23.89 |\n",

"| regnety_016 | 11,202,430 | 42.73 |\n",

"| regnety_032 | 19,436,338 | 74.14 |\n",

"| regnety_040 | 20,646,656 | 91.77 |\n",

"| regnety_064 | 30,583,252 | 131.52 |\n",

"| regnety_080 | 39,180,068 | 165.9 |\n",

"| resnest101e | 48,275,016 | 184.15 |\n",

"| resnest14d | 10,611,688 | 40.48 |\n",

"| resnest200e | 70,201,544 | 267.8 |\n",

"| resnest269e | 7,551,112 | 28.81 |\n",

"| resnest26d | 17,069,448 | 65.11 |\n",

"| resnest50d | 27,483,240 | 104.84 |\n",

"| resnest50d_1s4x24d | 25,677,000 | 97.95 |\n",

"| resnest50d_4s2x40d | 30,417,592 | 116.03 |\n",

"| resnet101 | 44,549,160 | 169.94 |\n",

"| resnet152 | 60,192,808 | 229.62 |\n",

"| resnet18 | 11,689,512 | 44.59 |\n",

"| resnet200 | 64,673,832 | 246.71 |\n",

"| resnet26 | 15,995,176 | 61.02 |\n",

"| resnet26d | 16,014,408 | 61.09 |\n",

"| resnet34 | 21,797,672 | 83.15 |\n",

"| resnet34d | 21,816,904 | 83.22 |\n",

"| resnet50 | 25,557,032 | 97.49 |\n",

"| resnet50d | 25,576,264 | 97.57 |\n",

"| resnext101_32x16d | 194,026,792 | 740.15 |\n",

"| resnext101_32x32d | 468,530,472 | 1787.3 |\n",

"| resnext101_32x48d | 828,411,176 | 3160.14 |\n",

"| resnext101_32x8d | 88,791,336 | 338.71 |\n",

"| resnext50_32x4d | 25,028,904 | 95.48 |\n",

"| se_resnet101 | 49,292,328 | 188.04 |\n",

"| se_resnet152 | 66,770,984 | 254.71 |\n",

"| se_resnet18 | 11,776,552 | 44.92 |\n",

"| se_resnet34 | 21,954,856 | 83.75 |\n",

"| se_resnet50 | 28,071,976 | 107.09 |\n",

"| unet | 23,202,530 | 88.51 |\n",

"| vgg11 | 132,863,336 | 506.83 |\n",

"| vgg11_bn | 132,868,840 | 506.85 |\n",

"| vgg13 | 133,047,848 | 507.54 |\n",

"| vgg13_bn | 133,053,736 | 507.56 |\n",

"| vgg16 | 138,357,544 | 527.79 |\n",

"| vgg16_bn | 138,365,992 | 527.82 |\n",

"| vgg19 | 143,667,240 | 548.05 |\n",

"| vgg19_bn | 143,678,248 | 548.09 |\n",

"| vit_base_patch16_224 | 86,415,592 | 329.65 |\n",

"| vit_base_patch16_384 | 86,415,592 | 329.65 |\n",

"| vit_base_patch32_384 | 88,185,064 | 336.4 |\n",

"| vit_huge_patch16_224 | 631,823,080 | 2410.21 |\n",

"| vit_huge_patch32_384 | 634,772,200 | 2421.46 |\n",

"| vit_large_patch16_224 | 304,123,880 | 1160.14 |\n",

"| vit_large_patch16_384 | 304,123,880 | 1160.14 |\n",

"| vit_large_patch32_384 | 306,483,176 | 1169.14 |\n",

"| vit_small_patch16_224 | 48,602,344 | 185.4 |\n",

"| wide_resnet101_2 | 126,886,696 | 484.03 |\n",

"| wide_resnet50_2 | 68,883,240 | 262.77 |"

]

},
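{
"cell_type": "markdown",
"metadata": {},
"source": [
"For many rows of the table above, the `Size (MB)` column matches what you get by assuming that every parameter is a 32-bit float (4 bytes) and dividing by 2^20. This is a back-of-the-envelope sketch under that assumption; `size_mb` is a hypothetical helper, not part of glasses, and some rows (for example the `eca_resnet*` ones) are larger than this estimate.\n",
"\n",
"```python\n",
"BYTES_PER_FLOAT32 = 4\n",
"\n",
"def size_mb(n_parameters):\n",
"    # Assumption: every parameter is stored as a 32-bit float\n",
"    return n_parameters * BYTES_PER_FLOAT32 / 2**20\n",
"\n",
"# Parameter counts copied from the table above\n",
"for name, n_parameters in [('resnet18', 11_689_512), ('resnet50', 25_557_032), ('vgg16', 138_357_544)]:\n",
"    print(name, round(size_mb(n_parameters), 2))\n",
"```\n",
"\n",
"For these three models the result agrees with the table: 44.59, 97.49 and 527.79 MB."
]
},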

{

"cell_type": "markdown",

"metadata": {},

"source": [

"## Credits\n",

"\n",

"Most of the weights were trained by other people and adapted to glasses. It is worth cite\n",

"\n",

"- [pytorch-image-models](https://github.com/rwightman/pytorch-image-models)\n",

"- [torchvision](hhttps://github.com/pytorch/vision)\n"

]

}

],

"metadata": {

"kernelspec": {

"display_name": "Python 3",

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.8.5"

}

},

"nbformat": 4,

"nbformat_minor": 4

}