https://github.com/fuqiuai/wordcloud

Text segmentation and word cloud generation with Python


- Host: GitHub

- URL: https://github.com/fuqiuai/wordcloud

- Owner: fuqiuai

- Created: 2017-10-13T10:57:13.000Z (over 8 years ago)

- Default Branch: master

- Last Pushed: 2017-10-19T08:18:07.000Z (over 8 years ago)

- Last Synced: 2025-03-30T21:07:26.912Z (10 months ago)

- Topics: jieba, python, wordcloud

- Language: Python

- Homepage:

- Size: 9.5 MB

- Stars: 432

- Watchers: 4

- Forks: 100

- Open Issues: 3


Metadata Files:

- Readme: README.md


README

# wordCloud

Segment text and generate a word cloud with Python.

## Required packages

* `sudo pip3 install jieba`

* `sudo pip3 install wordcloud`

* The `wordcloud` package depends on Pillow, numpy, and matplotlib
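To confirm the packages above are visible to the interpreter before running anything, a small check like the following can help. This is only a sketch: `missing_packages` is a hypothetical helper and not part of this repository. Note that Pillow is imported under the name `PIL`.

```python
import importlib.util

# Import names of the packages listed above (Pillow is imported as "PIL").
REQUIRED = ["jieba", "wordcloud", "PIL", "numpy", "matplotlib"]


def missing_packages(names=REQUIRED):
    """Return the import names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


print(missing_packages() or "all required packages are importable")
```

An empty result means every package can be imported. Any names that are printed still need to be installed with `pip3`.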

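## Notes

* Segmentation uses jieba (结巴分词) and supports a custom dictionary

* Word frequencies are computed from the segmentation result and exported

* The word cloud can use a background image of your choice (English text does not need to be segmented first)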
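The steps above can be sketched roughly as follows. This is an illustrative outline, not the code in `demo.py`: the function names, the stopword and minimum-length filtering, and the tab-separated export format are all assumptions made for the example. The third-party imports are placed inside the functions so that the counting and export helpers work on their own.

```python
from collections import Counter


def segment(text, user_dict_path=None):
    """Split Chinese text into words with jieba, optionally loading a custom dictionary."""
    import jieba  # third-party; imported lazily so the helpers below work without it

    if user_dict_path:
        jieba.load_userdict(user_dict_path)  # custom dictionary file, one entry per line
    return jieba.lcut(text)


def count_words(tokens, stopwords=(), min_len=2):
    """Count tokens, skipping stopwords and tokens shorter than min_len (assumed filter)."""
    stop = set(stopwords)
    stripped = (t.strip() for t in tokens)
    return Counter(t for t in stripped if len(t) >= min_len and t not in stop)


def export_counts(counts, path):
    """Write 'word<TAB>count' lines, most frequent first (assumed output format)."""
    with open(path, "w", encoding="utf-8") as f:
        for word, n in counts.most_common():
            f.write(f"{word}\t{n}\n")


def render_cloud(counts, out_path, font_path, mask_path=None):
    """Draw a word cloud from word counts, optionally shaped by a background image."""
    import numpy as np
    from PIL import Image
    from wordcloud import WordCloud

    mask = np.array(Image.open(mask_path)) if mask_path else None
    cloud = WordCloud(font_path=font_path, background_color="white", mask=mask)
    cloud.generate_from_frequencies(dict(counts))
    cloud.to_file(out_path)
```

A typical call sequence would be `counts = count_words(segment(text, "userdict.txt"))`, then `export_counts(counts, "freq.txt")` and `render_cloud(counts, "cloud.png", font_path="/path/to/a/cjk/font.ttf", mask_path="background.png")`, where all file names are placeholders. For Chinese text, `font_path` needs to point to a font that contains CJK glyphs, otherwise the words are likely to render as empty boxes. For English text the segmentation step can be skipped, since `WordCloud(...).generate(text)` tokenizes the raw text itself.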

## Results

Running `demo.py` generates the corresponding word cloud image and the file `doc/词频统计.txt` (word-frequency statistics).

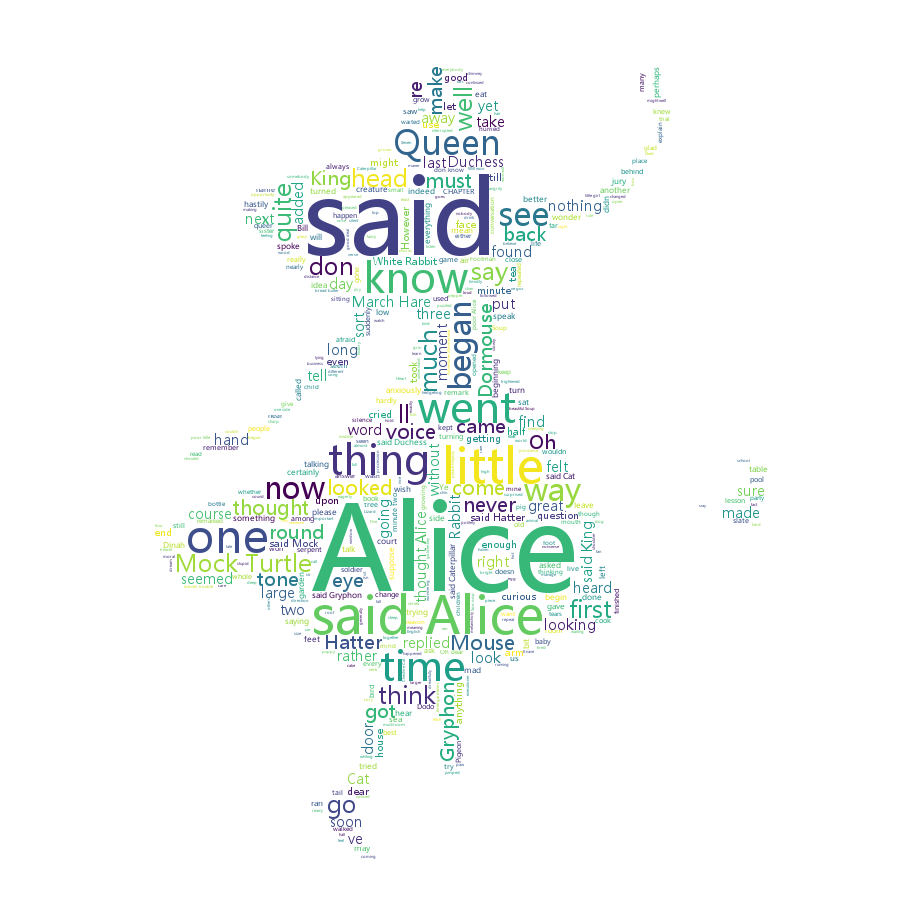

For example, the word cloud for the input `alice.txt` is shown below:

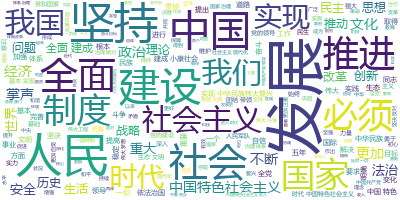

The word cloud for the full text of the 19th National Congress report is shown below: