https://github.com/gagniuc/entropy-of-strings

This application calculates the entropy of a string. The focus of this implementation is represented by a specialized function called "entropy" which receives a text sequence as a parameter and returns a value that represents the entropy. Entropy is a measure of the uncertainty in a random variable.





- Host: GitHub

- URL: https://github.com/gagniuc/entropy-of-strings

- Owner: Gagniuc

- License: mit

- Created: 2022-02-08T17:33:07.000Z (over 3 years ago)

- Default Branch: main

- Last Pushed: 2022-03-21T10:26:26.000Z (over 3 years ago)

- Last Synced: 2025-03-26T03:41:39.927Z (7 months ago)

- Topics: code, entropy, entropy-measures, function, information, javascript, js, measure, random-variable, shannon, shannon-entropy, strings, uncertainty

- Language: HTML

- Homepage:

- Size: 127 KB

- Stars: 6

- Watchers: 1

- Forks: 1

- Open Issues: 0


Metadata Files:

- Readme: README.md

- Funding: .github/FUNDING.yml

- License: LICENSE.md


README

# Entropy-of-strings

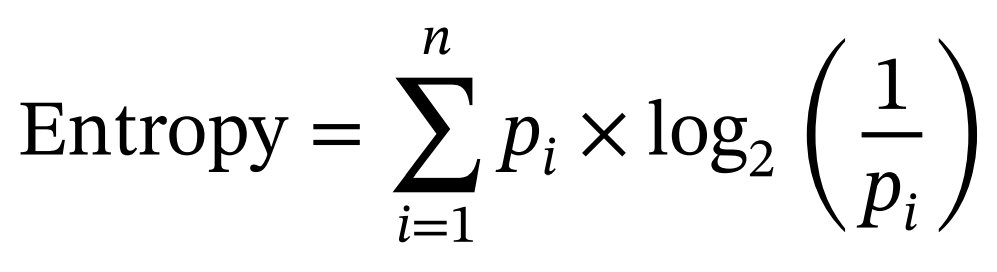

This JavaScript application calculates the [entropy of a string](https://gagniuc.github.io/Entropy-of-strings/). The implementation centers on a function called `entropy`, which receives a text sequence as a parameter and returns its entropy. Entropy is a measure of the uncertainty in a random variable. In the context of information theory, the term "entropy" refers to the Shannon entropy:

$$H = -\sum_{i=1}^{n} p_i \log_2 p_i$$

Which can also be written as:

$$H = \sum_{i=1}^{n} p_i \log_2 \frac{1}{p_i}$$

Where $n$ represents the total number of symbols in the alphabet of a sequence and $p_i$ represents the probability of occurrence of symbol $i$ in that alphabet. A step-by-step version of the entropy calculation is also shown [here](https://github.com/Gagniuc/Entropy-of-Text). For more detailed information on entropy, please see the dedicated chapter in the book *Algorithms in Bioinformatics: Theory and Implementation*.
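As a small worked example, assume the four-character sequence `AAAB` (chosen here purely for illustration). Its alphabet has $n = 2$ symbols with $p_A = 3/4$ and $p_B = 1/4$, so summing $p_i \log_2 (1/p_i)$ over the alphabet gives:

$$H = \tfrac{3}{4}\log_2\tfrac{4}{3} + \tfrac{1}{4}\log_2 4 \approx 0.311 + 0.500 = 0.811 \text{ bits}$$

A sequence made of a single repeated symbol has $p = 1$ and therefore an entropy of 0, while a sequence in which all $n$ symbols are equally frequent reaches the maximum of $\log_2 n$.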

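```js
function entropy(c) {
    // ALPHABET DETECTION: collect each distinct symbol of the sequence.
    var a = [];
    var t = c.split('');
    var k = t.length;

    for (var i = 0; i < k; i++) {
        var q = 1;
        for (var j = 0; j < a.length; j++) {
            if (t[i] === a[j]) { q = 0; }
        }
        if (q === 1) { a.push(t[i]); }
    }

    // ENTROPY: sum p * log2(1/p) over the alphabet.
    var e = 0;
    for (var i = 0; i < a.length; i++) {
        // Length of the sequence with the current symbol removed.
        // split/join is used instead of a RegExp so that characters
        // such as "." or "(" are not interpreted as regex syntax.
        var r = c.split(a[i]).join('').length;
        var p = (k - r) / k; // probability of the current symbol
        // e += -(p * Log(2, p));
        e += p * Log(2, 1 / p);
    }
    return e;
}

// Logarithm of v in base n.
function Log(n, v) {
    return Math.log(v) / Math.log(n);
}
```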
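The `entropy` function above rescans the sequence once per alphabet symbol. As an alternative, here is a minimal sketch that counts every symbol in a single pass with a `Map`. The name `entropyFromCounts` is hypothetical and not part of this repository. It also assumes that splitting by Unicode code point (via `Array.from`) is acceptable, which differs from `split('')` for characters outside the Basic Multilingual Plane.

```javascript
// Hypothetical helper (not part of the repository): Shannon entropy in bits,
// computed from symbol counts gathered in one pass over the text.
function entropyFromCounts(text) {
    // Assumption: split by Unicode code point, so a surrogate pair
    // (for example an emoji) counts as a single symbol.
    const symbols = Array.from(text);
    const total = symbols.length;
    if (total === 0) { return 0; } // empty sequence: no uncertainty

    // Count the occurrences of each distinct symbol.
    const counts = new Map();
    for (const s of symbols) {
        counts.set(s, (counts.get(s) || 0) + 1);
    }

    // Sum p * log2(1/p) over the alphabet.
    let h = 0;
    for (const count of counts.values()) {
        const p = count / total;
        h += p * Math.log2(1 / p);
    }
    return h;
}

console.log(entropyFromCounts("AABB")); // 1
console.log(entropyFromCounts("ABCD")); // 2
console.log(entropyFromCounts("AAAA")); // 0
```

Because symbols are never used to build a regular expression, this sketch also handles characters such as `.` or `(` without special treatment.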

**Live demo**: https://gagniuc.github.io/Entropy-of-strings/

# References

- Paul A. Gagniuc. Algorithms in Bioinformatics: Theory and Implementation. John Wiley & Sons, Hoboken, NJ, USA, 2021, ISBN: 9781119697961.