https://github.com/gal-dahan/crawling-jobs

Scans job listings in Israel every six hours by scraping public websites with Node.js.

- Host: GitHub

- URL: https://github.com/gal-dahan/crawling-jobs

- Owner: gal-dahan

- Created: 2022-08-19T18:08:33.000Z (over 2 years ago)

- Default Branch: main

- Last Pushed: 2023-03-29T12:16:19.000Z (about 2 years ago)

- Last Synced: 2024-11-08T18:44:33.390Z (6 months ago)

- Topics: cheerio, crawling-jobs, israel, nodejs

- Language: JavaScript

- Homepage: https://jobs-israel.netlify.app

- Size: 469 KB

- Stars: 14

- Watchers: 2

- Forks: 2

- Open Issues: 4

Metadata Files:

- Readme: README.md

- Security: SECURITY.md

Awesome Lists containing this project

- awesome-opensource-israel - crawling-jobs - Scans jobs in Israel By scraping public websites using a Node.js, every six hours.   (Projects by main language / javascript)

README

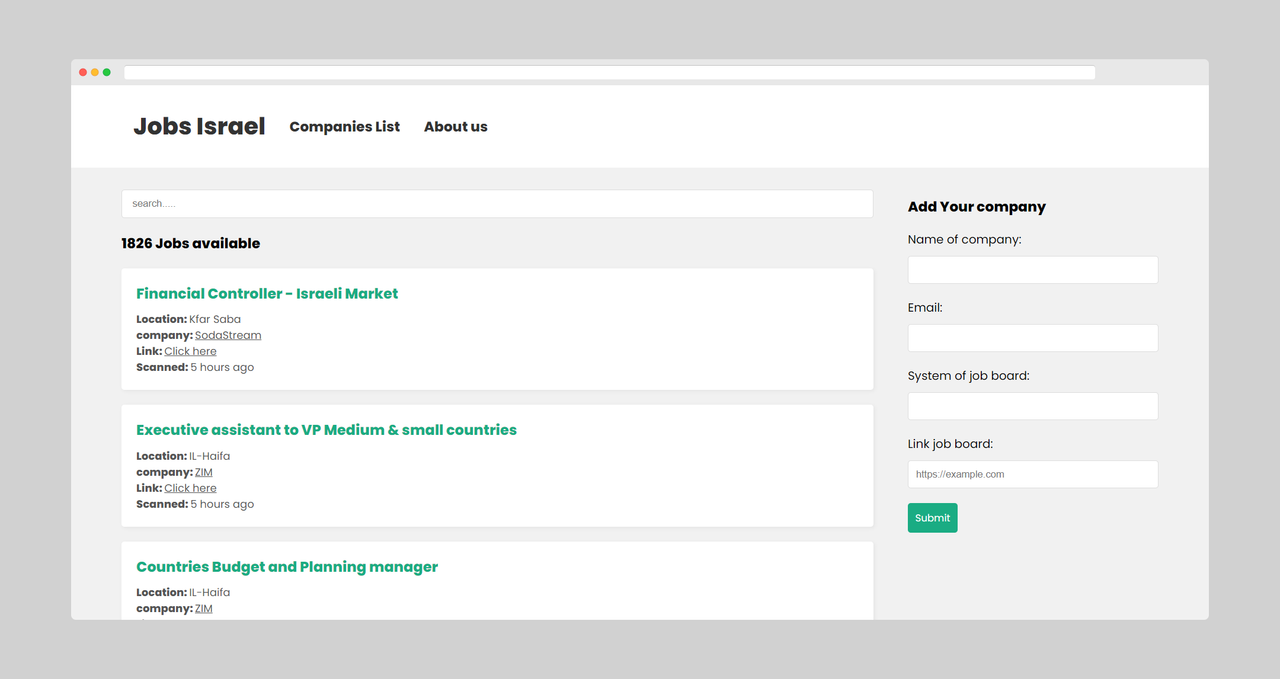

# Jobs Israel

Source code of [Jobs-Israel](https://jobs-israel.netlify.app)

## Tech Stack

**Client:** React

**Server:** Node.js (v14.17.1), Express, Cheerio, Nodemailer

**Database:** MongoDB

## Run Locally

Install the dependencies for the server and the client with npm.

Server:

```bash
cd server
npm ci
```

Client:

```bash
cd client
npm ci
```

Start the server and client:

```bash
npm start
```

To set up your environment variables, create a file named `.env` in the `server` folder, copy the contents of `.env.example` into it, and replace the placeholders with your own values:

```
MONGO=mongodb+srv://username:password@your-cluster-host/your-db-name
[email protected]
MAIL_PASSWORD=your-email-password
```
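A quick way to catch a forgotten placeholder is to check the values before starting the server. The sketch below is hypothetical (`parseEnv` and `missingVars` are not part of the project): it parses simple `KEY=VALUE` lines and flags required variables that are empty or still contain a `your-` placeholder. Only `MONGO` and `MAIL_PASSWORD` are checked because those are the names shown above; the mail-account variable from `.env.example` should be added to the list as well.

```javascript
// Hypothetical helper: parses simple KEY=VALUE lines (no quoting or multiline values).
function parseEnv(text) {
  const vars = {};
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "" || line.startsWith("#")) continue; // skip blank lines and comments
    const eq = line.indexOf("=");
    if (eq === -1) continue; // not a KEY=VALUE line
    // Split on the first "=" only, so values such as "?retryWrites=true" stay intact.
    vars[line.slice(0, eq).trim()] = line.slice(eq + 1).trim();
  }
  return vars;
}

// Hypothetical helper: returns the required names that are unset or still look like
// a placeholder (heuristic: the value contains "your-").
function missingVars(vars, required) {
  return required.filter((name) => !vars[name] || vars[name].includes("your-"));
}

const example = parseEnv(
  "MONGO=mongodb+srv://u:p@host/db\nMAIL_PASSWORD=your-email-password\n"
);
console.log(missingVars(example, ["MONGO", "MAIL_PASSWORD"])); // [ 'MAIL_PASSWORD' ]
```

To check the real file, pass `require("fs").readFileSync("server/.env", "utf8")` to `parseEnv`.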

## Debugging

To change the CronJob schedule while debugging, or to trigger the first scan that fills the database when you run the project locally for the first time, edit the `cronTime` value in `crawling-jobs/server/server.js`. By default, the CronJob runs every 6 hours in production; while debugging you may want it to run more often.

To change the schedule, find the following code in `crawling-jobs/server/server.js`:

```javascript
const job = new CronJob({
  // 0 */6 * * * every 6 hours --- in production
  cronTime: "0 */6 * * *",
  onTick: function () {
    console.log("start crawling", new Date());
    startComeet();
    startWpComeet();
    startGreenHouse();
  },
  start: true,
  timeZone: 'Europe/Berlin'
});
```
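The `cronTime` string uses the standard five fields: minute, hour, day of month, month and day of week. The simplified sketch below uses hypothetical helpers that handle only `*`, `*/n`, plain numbers and comma lists in the minute and hour fields, and it assumes the three day/month fields are `*`. It expands the production expression to show that the job fires at minute 0 of every sixth hour.

```javascript
// Expands one cron field into the sorted list of values it matches.
// Supports "*", "*/n", plain numbers and comma lists; ranges like "1-5" are not handled.
function expandField(field, min, max) {
  const values = new Set();
  for (const part of field.split(",")) {
    if (part === "*") {
      for (let v = min; v <= max; v++) values.add(v);
    } else if (part.startsWith("*/")) {
      const step = Number(part.slice(2));
      if (!Number.isInteger(step) || step <= 0) throw new Error(`bad step: ${part}`);
      for (let v = min; v <= max; v += step) values.add(v);
    } else {
      const v = Number(part);
      if (!Number.isInteger(v) || v < min || v > max) throw new Error(`bad value: ${part}`);
      values.add(v);
    }
  }
  return [...values].sort((a, b) => a - b);
}

// Lists the HH:MM times in one day matched by the minute and hour fields.
function dailyRunTimes(expr) {
  const [minute, hour] = expr.trim().split(/\s+/);
  const pad = (n) => String(n).padStart(2, "0");
  const times = [];
  for (const h of expandField(hour, 0, 23)) {
    for (const m of expandField(minute, 0, 59)) times.push(`${pad(h)}:${pad(m)}`);
  }
  return times;
}

console.log(dailyRunTimes("0 */6 * * *")); // [ '00:00', '06:00', '12:00', '18:00' ]
console.log(dailyRunTimes("* * * * *").length); // 1440
```

These times are in the job's `timeZone` (`Europe/Berlin`), which is usually one hour behind Israel time, so the production scans run at roughly 01:00, 07:00, 13:00 and 19:00 Israel time.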

For example, to run the CronJob every minute, change the `cronTime` value to `"* * * * *"`:

```javascript
const job = new CronJob({
  // * * * * * every minute --- for Debugging
  cronTime: "* * * * *",
  onTick: function () {
    console.log("start crawling", new Date());
    startComeet();
    startWpComeet();
    startGreenHouse();
  },
  start: true,
  timeZone: 'Europe/Berlin'
});
```
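Instead of editing `server.js` and remembering to revert it, the schedule could be read from the environment. This is a hypothetical sketch: `CRON_TIME` and `resolveCronTime` are illustrative names that the project does not define. It falls back to the production schedule when the variable is unset or does not have exactly five fields.

```javascript
// Hypothetical: the project does not read CRON_TIME; this only illustrates the idea.
const PRODUCTION_CRON = "0 */6 * * *"; // every 6 hours

function resolveCronTime(env) {
  const value = (env.CRON_TIME || "").trim();
  // Accept only five whitespace-separated fields; otherwise use the production schedule.
  return value.split(/\s+/).length === 5 ? value : PRODUCTION_CRON;
}

console.log(resolveCronTime({})); // 0 */6 * * *
console.log(resolveCronTime({ CRON_TIME: "* * * * *" })); // * * * * *
```

In `server.js` this would be wired up as `cronTime: resolveCronTime(process.env)`, and a local debugging run could then use something like `CRON_TIME="* * * * *" npm start` in a POSIX shell. For the first local run, the `cron` package also documents a `runOnInit` option that calls `onTick` once when the job starts; confirm it is available in the version the project uses before relying on it.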