https://github.com/geekan/scrapy-examples

Multifarious Scrapy examples. Spiders for alexa / amazon / douban / douyu / github / linkedin etc.

Last synced: 4 months ago

- Host: GitHub

- URL: https://github.com/geekan/scrapy-examples

- Owner: geekan

- Created: 2014-01-11T09:37:39.000Z (about 12 years ago)

- Default Branch: master

- Last Pushed: 2023-11-03T06:14:04.000Z (over 2 years ago)

- Last Synced: 2025-05-08T00:08:41.353Z (9 months ago)

- Language: Python

- Homepage:

- Size: 17.4 MB

- Stars: 3,235

- Watchers: 232

- Forks: 1,041

- Open Issues: 8

Metadata Files:

- Readme: README.md

Awesome Lists containing this project

- awesome-security-collection - **2479** stars

- my-awesome-starred - scrapy-examples - Multifarious Scrapy examples. (Python)

README

scrapy-examples
==============

A varied collection of Scrapy examples with integrated proxies and user agents, which make it easier to write a spider.

Don't use it to do anything illegal!

***

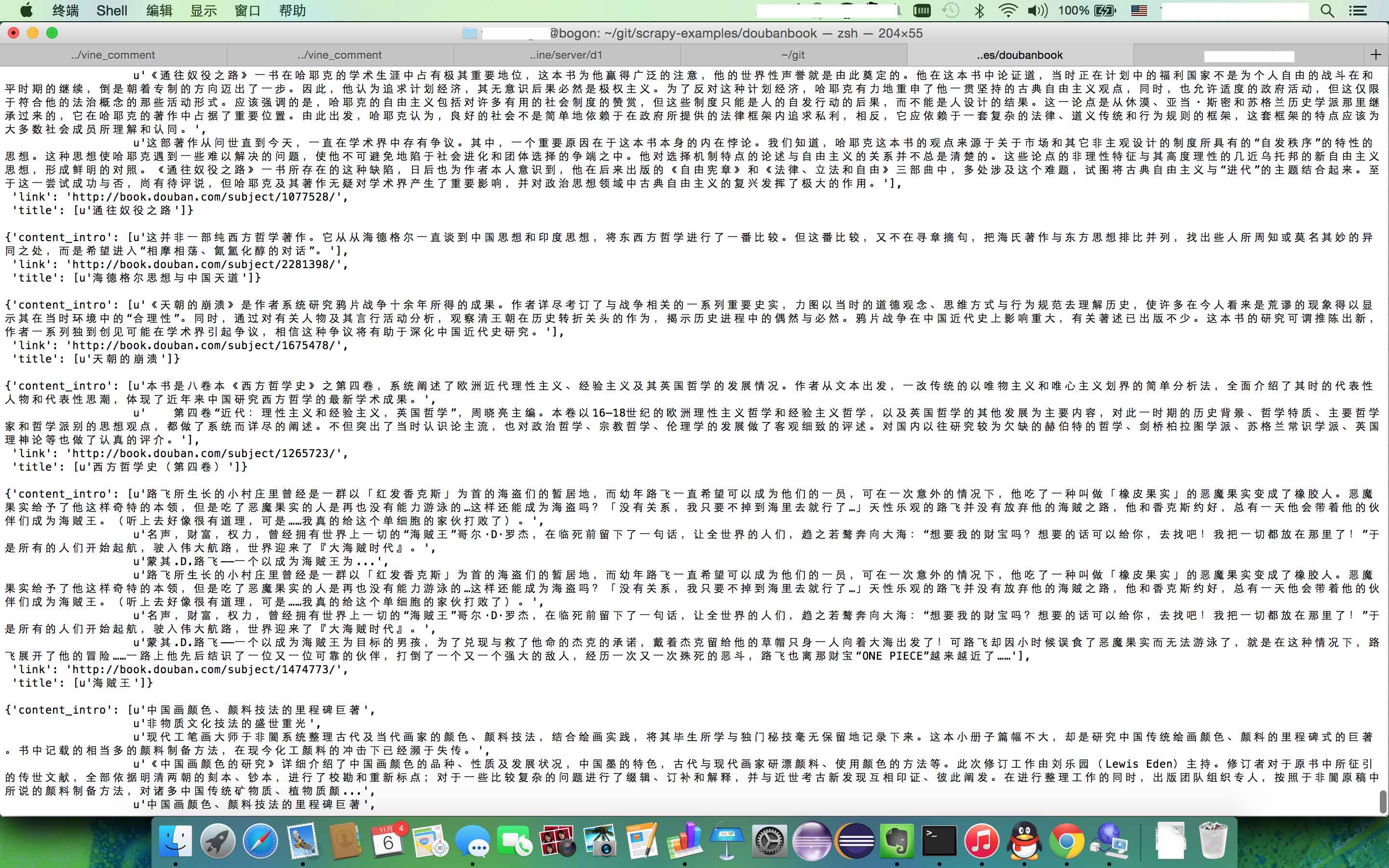

## Real spider example: doubanbook

#### Tutorial

    git clone https://github.com/geekan/scrapy-examples
    cd scrapy-examples/doubanbook
    scrapy crawl doubanbook

#### Depth

The spider crawls three depth levels and extracts the actual book data at depth 2.

- Depth 0: The entrance is `http://book.douban.com/tag/`

- Depth 1: URLs like `http://book.douban.com/tag/外国文学`, linked from depth 0

- Depth 2: URLs like `http://book.douban.com/subject/1770782/`, linked from depth 1
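The three levels can be told apart by URL shape alone. The following is a minimal sketch, not the spider's actual code: `classify_depth` and its regular expressions are hypothetical, derived only from the example URLs listed above.

```python
import re
from urllib.parse import unquote, urlparse

# Hypothetical patterns inferred from the example URLs above; the real
# spider may match links differently.
TAG_INDEX = re.compile(r"^/tag/?$")
TAG_PAGE = re.compile(r"^/tag/[^/]+/?$")
SUBJECT_PAGE = re.compile(r"^/subject/\d+/?$")


def classify_depth(url):
    """Return 0, 1 or 2 for a book.douban.com URL, or None if unrecognized."""
    parsed = urlparse(url)
    if parsed.netloc != "book.douban.com":
        return None
    path = unquote(parsed.path)  # tag names may arrive percent-encoded
    if TAG_INDEX.match(path):
        return 0  # tag index: the entrance
    if TAG_PAGE.match(path):
        return 1  # listing page for a single tag
    if SUBJECT_PAGE.match(path):
        return 2  # book detail page: the level the data comes from
    return None
```

A helper like this could be used to decide which callback should handle a response, or to check that a crawl is reaching depth 2 at all.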

#### Example image

***

## Available Spiders

* tutorial

* dmoz_item

* douban_book

* page_recorder

* douban_tag_book

* doubanbook

* linkedin

* hrtencent

* sis

* zhihu

* alexa

  * alexa

  * alexa.cn

## Advanced

* Use `parse_with_rules` to write a spider quickly.

  See the dmoz spider for more details.

* Proxies

  * If you don't want to use a proxy, comment out the proxy middleware in settings.

  * To customize it, edit `misc/proxy.py`.

* Notice

  * Don't use `parse` as your callback method name; `CrawlSpider` uses `parse` internally.
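As a rough illustration of the proxy bullet above: Scrapy routes a request through whatever URL is stored in `request.meta['proxy']`, so a custom downloader middleware only needs to set that key. The class name `RandomProxyMiddleware`, the `PROXIES` list, and the addresses in it are placeholders invented for this sketch; the repository's own `misc/proxy.py` may be organized differently.

```python
import random

# Placeholder addresses for illustration only; replace with real proxies.
PROXIES = [
    "http://127.0.0.1:8001",
    "http://127.0.0.1:8002",
]


class RandomProxyMiddleware:
    """Downloader-middleware sketch: pick a proxy for each outgoing request."""

    def __init__(self, proxies=PROXIES):
        self.proxies = list(proxies)

    def process_request(self, request, spider):
        # Leave the request alone if a proxy was already chosen upstream.
        if self.proxies and "proxy" not in request.meta:
            request.meta["proxy"] = random.choice(self.proxies)
        # Returning None tells Scrapy to continue processing the request.
        return None
```

A class like this would be enabled through the `DOWNLOADER_MIDDLEWARES` setting; commenting that entry out disables it, which is what the first proxy bullet describes.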

### Advanced Usage

* Run `./startproject.sh <PROJECT>` to start a new project.

  It generates most of the project automatically; the only files left to write are:

  * `PROJECT/PROJECT/items.py`

  * `PROJECT/PROJECT/spider/spider.py`

#### Example to hack `items.py` and `spider.py`

Hacked `items.py` with additional fields `url` and `description`:

```
from scrapy.item import Item, Field


class exampleItem(Item):
    url = Field()
    name = Field()
    description = Field()
```

Hacked `spider.py` with start rules and CSS rules (only the class `exampleSpider` is shown here):

```
class exampleSpider(CommonSpider):
    name = "dmoz"
    allowed_domains = ["dmoz.org"]
    start_urls = [
        "http://www.dmoz.com/",
    ]
    # The crawler starts at start_urls and follows the URLs allowed by the rules below.
    rules = [
        Rule(sle(allow=["/Arts/", "/Games/"]), callback='parse_page', follow=True),
    ]

    css_rules = {
        '.directory-url li': {
            '__use': 'dump',  # dump data directly
            '__list': True,  # it's a list
            'url': 'li > a::attr(href)',
            'name': 'a::text',
            'description': 'li::text',
        }
    }

    # Not named `parse`: see the notice above about CrawlSpider.
    def parse_page(self, response):
        info('Parse ' + response.url)
        # parse_with_rules is implemented here:
        #   https://github.com/geekan/scrapy-examples/blob/master/misc/spider.py
        self.parse_with_rules(response, self.css_rules, exampleItem)
```
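To make the shape of `css_rules` easier to read, here is a small sketch that separates the double-underscore control keys from the field selectors. It assumes, based only on the comments in the example, that keys starting with `__` are directives and every other key maps an item field to a CSS selector. `split_rule` is a hypothetical helper and is not part of `misc/spider.py`.

```python
def split_rule(rule):
    """Split one css_rules entry into (directives, field_selectors)."""
    directives = {}
    fields = {}
    for key, value in rule.items():
        if key.startswith("__"):
            # Assumed to be a control key such as '__use' or '__list'.
            directives[key[2:]] = value
        else:
            # Assumed to be an item field name mapped to a CSS selector.
            fields[key] = value
    return directives, fields


rule = {
    "__use": "dump",
    "__list": True,
    "url": "li > a::attr(href)",
    "name": "a::text",
    "description": "li::text",
}
directives, fields = split_rule(rule)
print(directives)      # {'use': 'dump', 'list': True}
print(sorted(fields))  # ['description', 'name', 'url']
```

Note that the three field names line up with the `url`, `name`, and `description` fields declared on `exampleItem`.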