https://github.com/geekywrites/datascience

This repository is a compilation of free resources for learning Data Science.


- Host: GitHub

- URL: https://github.com/geekywrites/datascience

- Owner: geekywrites

- License: gpl-3.0

- Created: 2021-01-27T13:02:06.000Z (about 5 years ago)

- Default Branch: main

- Last Pushed: 2024-07-26T15:51:40.000Z (over 1 year ago)

- Last Synced: 2024-07-26T21:42:01.137Z (over 1 year ago)

- Topics: artificial-intelligence, computer-vision, data-science, datascienceproject, deeplearning, machine-learning, machine-learning-algorithms, natural-language-processing, neural-networks

- Homepage: https://twitter.com/geekywrites

- Size: 318 KB

- Stars: 5,059

- Watchers: 380

- Forks: 522

- Open Issues: 7


Metadata Files:

- Readme: README.md

- Funding: .github/FUNDING.yml

- License: LICENSE

Awesome Lists containing this project

- awesome-resources - Data Science, Machine Learning Projects

- StarryDivineSky - geekywrites/datascience

README

Give a 🌟 if it's useful and share with other Data Science Enthusiasts.

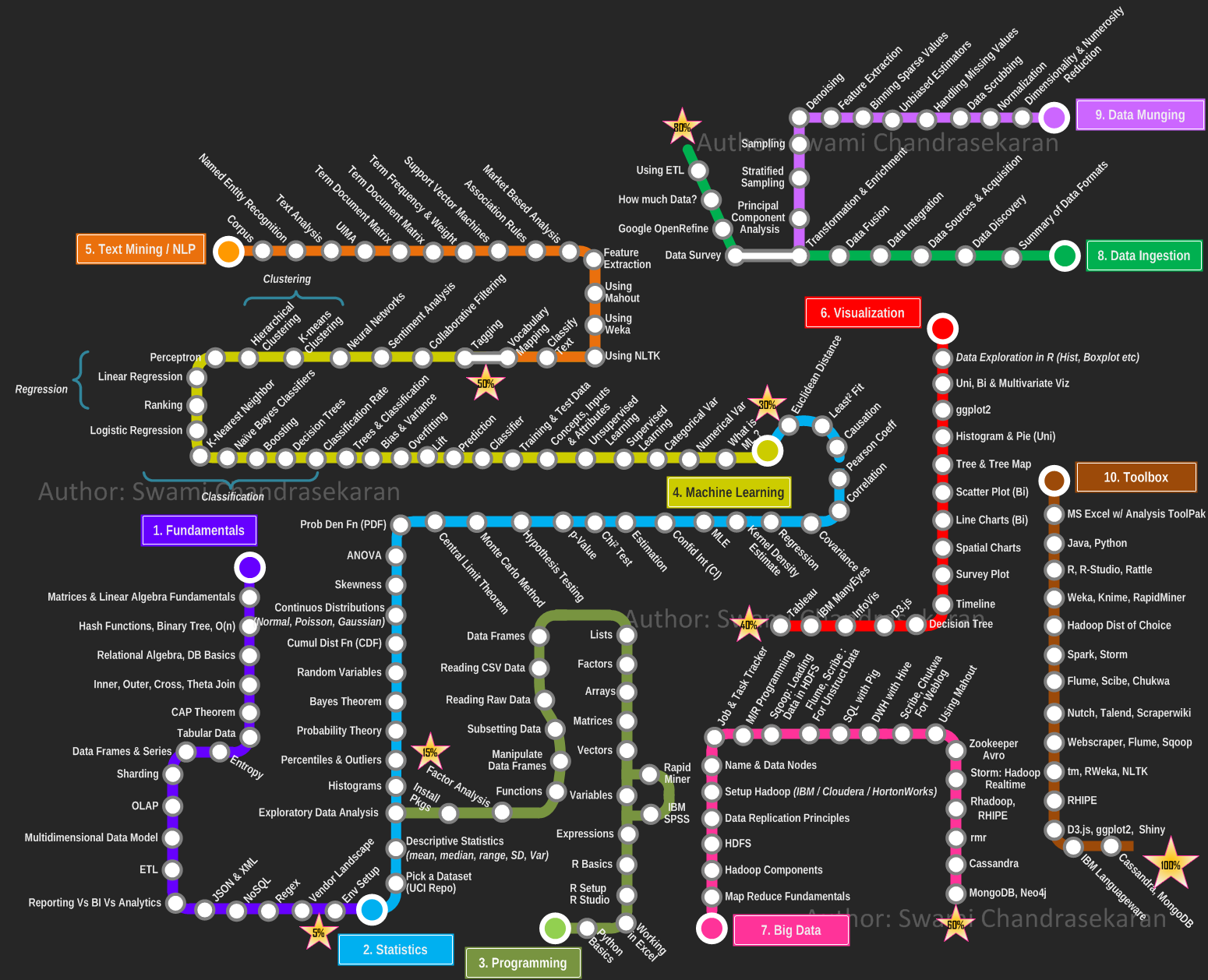

# Data-Scientist-Roadmap (2021)

****

# 1_ Fundamentals

## 1_ Matrices & Algebra fundamentals

### About

In mathematics, a matrix is a __rectangular array of numbers, symbols, or expressions, arranged in rows and columns__. A submatrix of a matrix is obtained by deleting any collection of its rows and/or columns.

### Operations

There are a number of basic operations that can be applied to modify matrices:

* [Addition](https://en.wikipedia.org/wiki/Matrix_addition)

* [Scalar Multiplication](https://en.wikipedia.org/wiki/Scalar_multiplication)

* [Transposition](https://en.wikipedia.org/wiki/Transpose)

* [Multiplication](https://en.wikipedia.org/wiki/Matrix_multiplication)
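The four operations above can be tried out with NumPy. This is a minimal sketch; the matrices `A` and `B` are arbitrary values chosen for illustration.

```python
import numpy as np

# Two small 2x2 matrices (illustrative values only)
A = np.array([[1, 2], [3, 4]])
B = np.array([[5, 6], [7, 8]])

added = A + B        # addition: element by element
scaled = 2 * A       # scalar multiplication: every entry times 2
transposed = A.T     # transposition: rows become columns
product = A @ B      # matrix multiplication: rows of A times columns of B
```

Note that `A * B` would multiply element by element; `@` is the operator for the matrix product.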

## 2_ Hash function, binary tree, O(n)

### Hash function

#### Definition

A hash function is __any function that can be used to map data of arbitrary size to data of fixed size__. One use is a data structure called a hash table, widely used in computer software for rapid data lookup. Hash functions accelerate table or database lookup by detecting duplicated records in a large file.
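As a rough illustration, the sketch below uses SHA-256 from the standard library to map strings of any length to a fixed-size digest, then uses that digest to pick a bucket and to spot duplicated records. The helper names `bucket_index` and `find_duplicates` and the bucket count are assumptions made for this example.

```python
import hashlib

def bucket_index(key: str, num_buckets: int = 8) -> int:
    """Map a string of arbitrary size to one of num_buckets slots."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()  # always 32 bytes
    return int.from_bytes(digest[:8], "big") % num_buckets

def find_duplicates(records):
    """Return records seen more than once, comparing only within a bucket."""
    buckets = {}
    duplicates = []
    for record in records:
        seen = buckets.setdefault(bucket_index(record), set())
        if record in seen:
            duplicates.append(record)
        seen.add(record)
    return duplicates
```

Calling `find_duplicates(["a", "b", "a", "c", "b"])` reports `"a"` and `"b"`, and each record is only compared against the records that landed in the same bucket.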

### Binary tree

#### Definition

In computer science, a binary tree is __a tree data structure in which each node has at most two children__, which are referred to as the left child and the right child.
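A minimal sketch of such a structure in Python is shown below. It assumes a binary *search* tree ordering (smaller values go left), which is one common use of binary trees rather than part of the definition; `Node`, `insert` and `in_order` are names chosen for this example.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Node:
    value: int
    left: Optional["Node"] = None    # left child
    right: Optional["Node"] = None   # right child

def insert(root, value):
    """Insert value, keeping smaller values on the left."""
    if root is None:
        return Node(value)
    if value < root.value:
        root.left = insert(root.left, value)
    else:
        root.right = insert(root.right, value)
    return root

def in_order(root):
    """Visit left subtree, then the node, then the right subtree."""
    if root is None:
        return []
    return in_order(root.left) + [root.value] + in_order(root.right)
```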

### O(n)

#### Definition

In computer science, big O notation is used to __classify algorithms according to how their running time or space requirements grow as the input size grows__. In analytic number theory, big O notation is often used to __express a bound on the difference between an arithmetical function and a better understood approximation__.

## 3_ Relational algebra, DB basics

### Definition

Relational algebra is a family of algebras with a __well-founded semantics used for modelling the data stored in relational databases__, and defining queries on it.

The main application of relational algebra is providing a theoretical foundation for __relational databases__, particularly query languages for such databases, chief among which is SQL.

### Natural join

#### About

In SQL, a natural join between two tables can be performed if:

* At least one column has the same name in both tables

* These two columns have the same data type, for example:

    * CHAR (character)

    * INT (integer)

    * FLOAT (floating point numeric data)

    * VARCHAR (variable-length character string)

#### MySQL request

SELECT column_name(s)

FROM TABLE_1

NATURAL JOIN TABLE_2;

This is equivalent to:

SELECT column_name(s)

FROM TABLE_1, TABLE_2

WHERE TABLE_1.ID = TABLE_2.ID;

## 4_ Inner, Outer, Cross, theta-join

### Inner join

The INNER JOIN keyword selects records that have matching values in both tables.

#### Request

SELECT column_name(s)

FROM table1

INNER JOIN table2 ON table1.column_name = table2.column_name;

### Outer join

The FULL OUTER JOIN keyword returns all records from both the left (table1) and the right (table2) table. Rows that match are combined, and rows without a match in the other table are filled with NULL.

#### Request

SELECT column_name(s)

FROM table1

FULL OUTER JOIN table2 ON table1.column_name = table2.column_name;

### Left join

The LEFT JOIN keyword returns all records from the left table (table1), and the matched records from the right table (table2). The result is NULL from the right side, if there is no match.

#### Request

SELECT column_name(s)

FROM table1

LEFT JOIN table2 ON table1.column_name = table2.column_name;

### Right join

The RIGHT JOIN keyword returns all records from the right table (table2), and the matched records from the left table (table1). The result is NULL from the left side, when there is no match.

#### Request

SELECT column_name(s)

FROM table1

RIGHT JOIN table2 ON table1.column_name = table2.column_name;
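The difference between the joins is easiest to see on a tiny example. The sketch below uses Python's built-in `sqlite3` module with two made-up tables; the table contents and column names are assumptions for illustration. It only runs INNER and LEFT joins, because RIGHT and FULL OUTER JOIN require SQLite 3.39 or newer.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE table1 (id INTEGER, name TEXT);
    CREATE TABLE table2 (id INTEGER, city TEXT);
    INSERT INTO table1 VALUES (1, 'Ann'), (2, 'Bob'), (3, 'Cid');
    INSERT INTO table2 VALUES (2, 'Paris'), (3, 'Rome'), (4, 'Oslo');
""")

# INNER JOIN: only ids present in both tables (2 and 3)
inner = conn.execute(
    "SELECT table1.name, table2.city FROM table1 "
    "INNER JOIN table2 ON table1.id = table2.id ORDER BY table1.id"
).fetchall()

# LEFT JOIN: every row of table1; city is NULL (None) when there is no match
left = conn.execute(
    "SELECT table1.name, table2.city FROM table1 "
    "LEFT JOIN table2 ON table1.id = table2.id ORDER BY table1.id"
).fetchall()
```

A RIGHT JOIN gives the same rows as a LEFT JOIN with the two tables swapped.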

## 5_ CAP theorem

It is impossible for a distributed data store to simultaneously provide more than two out of the following three guarantees:

* __Consistency__: every read receives the most recent write or an error.

* __Availability__: every request receives a (non-error) response, without guarantee that it contains the most recent write.

* __Partition tolerance__: the system continues to operate despite an arbitrary number of messages being dropped (or delayed) by the network between nodes.

In other words, the CAP Theorem states that in the presence of a network partition, one has to choose between consistency and availability. Note that consistency as defined in the CAP Theorem is quite different from the consistency guaranteed in ACID database transactions.

## 6_ Tabular data

Tabular data are __opposed to relational__ data, such as an SQL database: everything lives in a single flat table rather than in several linked tables.

In tabular data, __everything is arranged in columns and rows__. Every row has the same number of columns (except for missing values, which can be substituted by "N/A").

The __first line__ of tabular data is most of the time a __header__, describing the content of each column.

The most used format of tabular data in data science is __CSV__. Every column is separated from its neighbours by a delimiter character (a tab, a comma, ...).
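A short sketch of reading such data with Python's standard `csv` module follows. The sample content in `raw` is invented for this example; the header becomes the column names and the empty cell is replaced by "N/A".

```python
import csv
import io

# A tiny comma-delimited table with a header and one missing value
raw = "name,age,city\nAnn,31,Paris\nBob,,Rome\n"

reader = csv.DictReader(io.StringIO(raw))
rows = [
    {column: (value if value != "" else "N/A") for column, value in row.items()}
    for row in reader
]
header = reader.fieldnames  # column names taken from the first line
```

For a tab-delimited file, `csv.DictReader(..., delimiter="\t")` would be used instead.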

## 7_ Entropy

Entropy is a __measure of uncertainty__. High entropy means the data is highly variable and thus contains a lot of information and/or noise.

For instance, __a constant function where f(x) = 4 for all x has no entropy and is easily predictable__: it carries little information, has no noise and can be represented succinctly. Similarly, f(x) = ~4 has some entropy, while f(x) = random number has very high entropy due to noise.
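One common way to put a number on this is Shannon entropy, measured in bits. The sketch below assumes that definition (the text above does not name a specific formula) and computes it from the observed frequencies of a list of values.

```python
import math
from collections import Counter

def shannon_entropy(values):
    """Entropy in bits of the empirical distribution of values."""
    counts = Counter(values)
    total = len(values)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

constant = shannon_entropy([4, 4, 4, 4])      # every value is the same
almost = shannon_entropy([4, 4, 4, 5])        # mostly 4, occasionally 5
spread = shannon_entropy([1, 2, 3, 4])        # four equally likely values
```

Here `constant` is zero, `almost` lies between 0 and 1 bit, and `spread` is 2 bits, matching the ordering described above.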

## 8_ Data frames & series

A data frame is used for storing data tables. It is a list of vectors (columns) of equal length.

A series is an ordered sequence of data points.

## 9_ Sharding

*Sharding* is **horizontal (row-wise) database partitioning**, as opposed to **vertical (column-wise) partitioning**, which is closely related to *normalization*.

Why use Sharding?

1. Database systems with large data sets or high-throughput applications can challenge the capacity of a single server.

2. There are two methods to address this growth: vertical scaling and horizontal scaling.

3. Vertical scaling

    * Involves increasing the capacity of a single server.

    * Due to technological and economic restrictions, a single machine may still not be sufficient for the given workload.

4. Horizontal scaling

    * Involves dividing the dataset and load over multiple servers, adding servers to increase capacity as required.

    * While the overall speed or capacity of a single machine may not be high, each machine handles a subset of the overall workload, potentially providing better efficiency than a single high-speed, high-capacity server.

    * The idea is to use concepts of distributed systems to achieve scale.

    * It comes with the same trade-off of increased complexity that goes hand in hand with distributed systems.

    * Many database systems provide horizontal scaling by sharding the datasets.
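The row-wise split can be sketched with hash-based routing, which is one of several possible sharding strategies (range-based sharding is another). The shard names and the helpers `shard_for` and `partition` below are hypothetical and not taken from any particular database.

```python
import hashlib

SHARDS = ["shard-0", "shard-1", "shard-2"]  # hypothetical server names

def shard_for(key: str) -> str:
    """Pick a shard from a stable hash of the row key."""
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return SHARDS[int(digest, 16) % len(SHARDS)]

def partition(rows, key_field):
    """Split rows (dicts) across shards; each row lands on exactly one shard."""
    shards = {name: [] for name in SHARDS}
    for row in rows:
        shards[shard_for(str(row[key_field]))].append(row)
    return shards

rows = [{"user_id": i} for i in range(100)]
parts = partition(rows, "user_id")
```

Because the hash is stable, a lookup for a given `user_id` always goes to the same shard. A drawback of plain modulo routing is that changing the number of shards moves most keys.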

## 10_ OLAP

Online analytical processing, or OLAP, is an approach to answering multi-dimensional analytical (MDA) queries swiftly in computing.

OLAP is part of the __broader category of business intelligence__, which also encompasses relational databases, report writing and data mining. Typical applications of OLAP include __business reporting for sales, marketing, management reporting, business process management (BPM), budgeting and forecasting, financial reporting and similar areas, with new applications coming up, such as agriculture__.

The term OLAP was created as a slight modification of the traditional database term online transaction processing (OLTP).

## 11_ Multidimensional Data model

## 12_ ETL

* Extract

    * extracting the data from multiple heterogeneous source systems

    * data validation to confirm whether the data pulled has the correct/expected values in a given domain

* Transform

    * extracted data is fed into a pipeline which applies multiple functions to the data

    * these functions convert the data into the format accepted by the end system

    * involves cleaning the data to remove noise, anomalies and redundant data

* Load

    * loads the transformed data into the end target
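The three stages can be sketched as three small functions. Everything here is an assumption made for illustration: the source is a CSV string with `name` and `price` columns, validation means "price must be a number", and the end target is any writable text stream that receives JSON.

```python
import csv
import io
import json

def extract(csv_text):
    """Read rows from the source and keep only those with a numeric price."""
    valid = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        try:
            float(row["price"])
        except (TypeError, ValueError):
            continue  # validation failed: value outside the expected domain
        valid.append(row)
    return valid

def transform(rows):
    """Normalise names, convert types and drop redundant (duplicate) rows."""
    seen = set()
    cleaned = []
    for row in rows:
        name = row["name"].strip().lower()
        if name in seen:
            continue
        seen.add(name)
        cleaned.append({"name": name, "price": float(row["price"])})
    return cleaned

def load(rows, target):
    """Write the transformed rows to the end target as JSON."""
    target.write(json.dumps(rows))

source = "name,price\n Tea ,2.5\ntea,2.5\nCoffee,n/a\nMilk,1\n"
target = io.StringIO()
load(transform(extract(source)), target)
```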

## 13_ Reporting vs BI vs Analytics

## 14_ JSON and XML

### JSON

JSON is a language-independent data format. Example describing a person:

{

"firstName": "John",

"lastName": "Smith",

"isAlive": true,

"age": 25,

"address": {

"streetAddress": "21 2nd Street",

"city": "New York",

"state": "NY",

"postalCode": "10021-3100"

},

"phoneNumbers": [

{

"type": "home",

"number": "212 555-1234"

},

{

"type": "office",

"number": "646 555-4567"

},

{

"type": "mobile",

"number": "123 456-7890"

}

],

"children": [],

"spouse": null

}

### XML

Extensible Markup Language (XML) is a markup language that defines a set of rules for encoding documents in a format that is both human-readable and machine-readable.

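    <CATALOG>
      <PLANT>
        <COMMON>Bloodroot</COMMON>
        <BOTANICAL>Sanguinaria canadensis</BOTANICAL>
        <ZONE>4</ZONE>
        <LIGHT>Mostly Shady</LIGHT>
        <PRICE>$2.44</PRICE>
        <AVAILABILITY>031599</AVAILABILITY>
      </PLANT>
      <PLANT>
        <COMMON>Columbine</COMMON>
        <BOTANICAL>Aquilegia canadensis</BOTANICAL>
        <ZONE>3</ZONE>
        <LIGHT>Mostly Shady</LIGHT>
        <PRICE>$9.37</PRICE>
        <AVAILABILITY>030699</AVAILABILITY>
      </PLANT>
      <PLANT>
        <COMMON>Marsh Marigold</COMMON>
        <BOTANICAL>Caltha palustris</BOTANICAL>
        <ZONE>4</ZONE>
        <LIGHT>Mostly Sunny</LIGHT>
        <PRICE>$6.81</PRICE>
        <AVAILABILITY>051799</AVAILABILITY>
      </PLANT>
    </CATALOG>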

## 15_ NoSQL

NoSQL (which stands for __N__ot __O__nly __SQL__) is opposed to relational databases. Data need not follow a fixed structure, and there is no notion of keys between tables.

Any kind of data can be stored in a NoSQL database (JSON, CSV, ...) without designing a complex relational schema.

__Commonly used NoSQL stacks__: Cassandra, MongoDB, Redis, Oracle NoSQL ...

## 16_ Regex

### About

__Reg__ular __ex__pressions (__regex__) are commonly used in computing.

They can be used for a wide range of tasks:

* Replacing text

* Extracting information from a text (email address, phone number, etc.)

* Listing files with the .txt extension

http://regexr.com/ is a good website for experimenting with regex.

### Usage

To use them in [Python](https://docs.python.org/3/library/re.html), just import the `re` module:

import re
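A few of the uses listed above, sketched with the `re` module. The sample text is made up, and the email pattern is deliberately simplified; real-world email validation is more involved.

```python
import re

text = "Contact ann@example.com or bob@example.org; files: notes.txt, data.csv, todo.txt"

# Extract information: find everything that looks like an email address
emails = re.findall(r"[\w.+-]+@[\w-]+\.[\w.]+", text)

# List names ending with the .txt extension
txt_files = re.findall(r"[\w-]+\.txt\b", text)

# Text replacing: hide the part of each address before the @
masked = re.sub(r"[\w.+-]+@", "***@", text)
```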

## 17_ Vendor landscape

## 18_ Env Setup

# 2_ Statistics

[Statistics-101 for data noobs](https://medium.com/@debuggermalhotra/statistics-101-for-data-noobs-2e2a0e23a5dc)

## 1_ Pick a dataset

### Datasets repositories

#### Generalists

- [KAGGLE](https://www.kaggle.com/datasets)

- [Google](https://toolbox.google.com/datasetsearch)

#### Medical

- [PMC](https://www.ncbi.nlm.nih.gov/pmc/)

#### Other languages

##### French

- [DATAGOUV](https://www.data.gouv.fr/fr/)

## 2_ Descriptive statistics

### Mean

In probability and statistics, population mean and expected value are used synonymously to refer to one __measure of the central tendency either of a probability distribution or of the random variable__ characterized by that distribution.

For a data set, the terms arithmetic mean, mathematical expectation, and sometimes average are used synonymously to refer to a central value of a discrete set of numbers: specifically, the __sum of the values divided by the number of values__.

### Median

The median is the value __separating the higher half of a data sample, a population, or a probability distribution, from the lower half__. In simple terms, it may be thought of as the "middle" value of a data set.

### Descriptive statistics in Python

[NumPy](http://www.numpy.org/) is a Python library widely used for statistical analysis.

#### Installation

pip3 install numpy

#### Utilization

import numpy
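For example, the mean and median described above can be computed as follows. The values in `data` are invented; the single large value shows how the mean is pulled upwards while the median stays in the middle.

```python
import numpy as np

data = np.array([2, 4, 4, 5, 7, 9, 40])

mean = np.mean(data)      # sum of the values (71) divided by their count (7)
median = np.median(data)  # middle value of the sorted data
```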

## 3_ Exploratory data analysis

This step consists of visualizing and analyzing the data.

Raw data may have an unsuitable distribution, which can cause issues in later steps.

When building applications, we must also know the shape of the data distribution, for instance whether the data is linearly or spirally distributed.

[Guide to EDA in Python](https://towardsdatascience.com/data-preprocessing-and-interpreting-results-the-heart-of-machine-learning-part-1-eda-49ce99e36655)

##### Libraries in Python

[Matplotlib](https://matplotlib.org/)

Library used to plot graphs in Python

__Installation__:

pip3 install matplotlib

__Utilization__:

import matplotlib.pyplot as plt

[Pandas](https://pandas.pydata.org/)

Library used to work with large datasets in Python

__Installation__:

pip3 install pandas

__Utilization__:

import pandas as pd

[Seaborn](https://seaborn.pydata.org/)

Yet another Graph Plotting Library in Python.

__Installation__:

pip3 install seaborn

__Utilization__:

import seaborn as sns

#### PCA

PCA stands for principal component analysis.

As we have seen previously, we often need to know the shape of the data distribution, and to see it we need to plot the data.

Data can be multidimensional, that is, a dataset can have multiple features.

We can only plot two-dimensional data easily, so for multidimensional data we project the distribution onto two dimensions, preserving its principal components, in order to get an idea of the actual distribution from the 2D plot.

PCA is also used for dimensionality reduction. Often several features do not contribute any significant insight into the data distribution. Such features add complexity and increase the dimensionality of the data. PCA keeps the directions (components) that carry most of the variance and discards the rest, which reduces the dimensionality of the data.
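A minimal sketch of the 2D projection, using only NumPy: the data is centred and a singular value decomposition supplies the principal directions. This is one standard way to compute PCA, not necessarily the one used in the linked guides; the function name `pca_2d` and the random data are assumptions for illustration.

```python
import numpy as np

def pca_2d(X):
    """Project the rows of X onto their first two principal components."""
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by decreasing variance
    U, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    return X_centered @ Vt[:2].T

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))   # 200 samples with 5 features
projected = pca_2d(X)           # 200 samples with 2 features, ready to plot
```

The two columns of `projected` can then be passed to a scatter plot.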

[Mathematical Explanation](https://medium.com/towards-artificial-intelligence/demystifying-principal-component-analysis-9f13f6f681e6)

[Application in Python](https://towardsdatascience.com/data-preprocessing-and-interpreting-results-the-heart-of-machine-learning-part-2-pca-feature-92f8f6ec8c8)

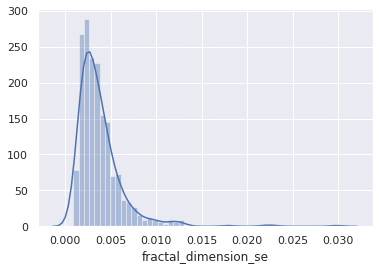

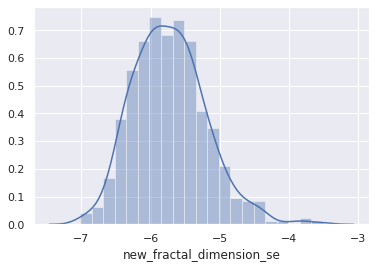

## 4_ Histograms

Histograms are representations of the distribution of numerical data. The procedure consists of binning the numeric values: the entire range over which the data varies is split into several fixed intervals (bins), and the count or frequency of the values falling in each bin is plotted.

[Histograms](https://en.wikipedia.org/wiki/Histogram)

In Python, __Pandas__, __Matplotlib__ and __Seaborn__ can be used to create histograms.

## 5_ Percentiles & outliers

### Percentiles

Percentiles are numerical measures in statistics that indicate what percentage of the data falls below a given value in a numerical data distribution.

For instance, the 70th percentile is the value below which 70% of the data in the distribution fall.

[Percentiles](https://en.wikipedia.org/wiki/Percentile)

### Outliers

Outliers are (numerical) data points that differ significantly from the majority of points in the distribution. Such points may distort the central measures of the distribution, especially the mean. So they need to be detected and, where appropriate, removed.

[Outliers](https://www.itl.nist.gov/div898/handbook/prc/section1/prc16.htm)

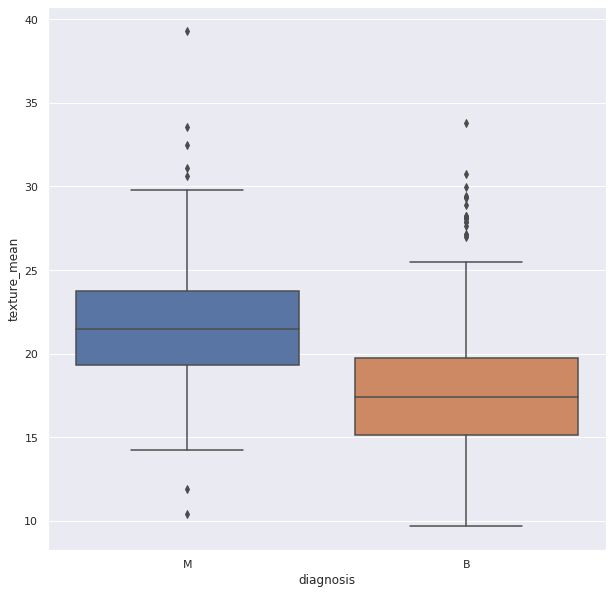

__Box Plots__ can be used to detect outliers in the data. They can be created using the __Seaborn__ library.
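Box plots conventionally flag points lying more than 1.5 times the interquartile range (IQR) beyond the 25th or 75th percentile. The sketch below applies that rule with the standard `statistics` module; the data values are invented and the 1.5 factor is the usual convention, not something stated above.

```python
from statistics import quantiles

data = [2, 4, 4, 5, 7, 9, 40]

# 25th, 50th and 75th percentiles (quartiles)
q1, q2, q3 = quantiles(data, n=4, method="inclusive")

iqr = q3 - q1
low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr

outliers = [x for x in data if x < low or x > high]
```

With this data the quartiles are 4, 5 and 8, so anything above 14 is flagged and only the value 40 is reported as an outlier.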

## 6_ Probability theory

__Probability__ is the likelihood of an event in a Random experiment. For instance, if a coin is tossed, the chance of getting a head is 50% so, probability is 0.5.

__Sample Space__: It is the set of all possible outcomes of a Random Experiment.

__Favourable Outcomes__: The set of outcomes we are looking for in a Random Experiment

__Probability = (Number of Favourable Outcomes) / (Total Number of Outcomes in the Sample Space)__, when all outcomes are equally likely.

__Probability theory__ is a branch of mathematics that is associated with the concept of probability.

[Basics of Probability](https://towardsdatascience.com/basic-probability-theory-and-statistics-3105ab637213)

## 7_ Bayes theorem

### Conditional Probability:

It is the probability of one event occurring, given that another event has already occurred. So, it gives a sense of the relationship between two events and the probabilities of their occurrence.

It is written as:

__P( A | B )__ : Probability of occurrence of A, given that B has occurred.

The formula is given by:

__P(A|B) = P(A ∩ B) / P(B)__

So, P(A|B) is equal to the probability of occurrence of both A and B, divided by the probability of occurrence of B.

[Guide to Conditional Probability](https://en.wikipedia.org/wiki/Conditional_probability)

### Bayes Theorem

Bayes theorem provides a way to calculate conditional probability. It is widely used in machine learning, most notably in Bayesian classifiers.

According to Bayes theorem, the probability of A given that B has already occurred is the probability of A multiplied by the probability of B given that A has already occurred, divided by the probability of B.

__P(A|B) = P(A).P(B|A) / P(B)__
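A small worked example of the formula, with made-up numbers for a spam filter: A is "the message is spam" and B is "the message contains a given word". All probabilities below are assumptions chosen for illustration.

```python
def bayes(prior_a, prob_b_given_a, prob_b):
    """P(A|B) = P(A) * P(B|A) / P(B)"""
    return prior_a * prob_b_given_a / prob_b

p_spam = 0.2               # P(A): 20% of messages are spam
p_word_given_spam = 0.5    # P(B|A): the word appears in half of the spam
p_word_given_ham = 0.05    # the word appears in 5% of non-spam messages

# P(B) by the law of total probability: 0.5 * 0.2 + 0.05 * 0.8 = 0.14
p_word = p_word_given_spam * p_spam + p_word_given_ham * (1 - p_spam)

# P(A|B) = 0.2 * 0.5 / 0.14, roughly 0.71
p_spam_given_word = bayes(p_spam, p_word_given_spam, p_word)
```

Seeing the word raises the probability of spam from 20% to about 71%.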

[Guide to Bayes Theorem](https://machinelearningmastery.com/bayes-theorem-for-machine-learning/)

## 8_ Random variables

Random variables are the numeric outcomes of an experiment or random event. Each one can take any of a set of possible values.

There are two main types of random variables:

__Discrete Random Variables__: Such variables take only a countable number of distinct values (for example 0, 1, 2, ...).

__Continuous Random Variables__: Such variables can take any value within an interval, so the number of possible values is infinite and uncountable.

## 9_ Cumul Dist Fn (CDF)

In probability theory and statistics, the cumulative distribution function (CDF) of a real-valued random variable __X__, or just distribution function of __X__, evaluated at __x__, is the probability that __X__ will take a value less than or equal to __x__.

The cumulative distribution function of a real-valued random variable X is the function given by:

$$F_X(x) = P(X \le x)$$

Resource:

[Wikipedia](https://en.wikipedia.org/wiki/Cumulative_distribution_function)

## 10_ Continuous distributions

A continuous distribution describes the probabilities of the possible values of a continuous random variable. A continuous random variable is a random variable with a set of possible values (known as the range) that is infinite and uncountable.

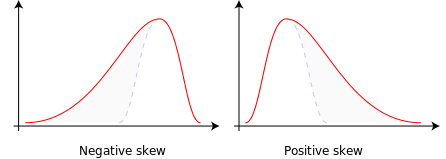

## 11_ Skewness

Skewness is a measure of the asymmetry of a data distribution, or of the distribution of a random variable, about its mean.

Skewness can be positive, negative or zero.

__Negative skew__: Distribution Concentrated in the right, left tail is longer.

__Positive skew__: Distribution Concentrated in the left, right tail is longer.

The variation of the central tendency measures is shown below.

Data distributions are often skewed, which may cause trouble when processing the data. __A positively skewed distribution can often be made more symmetric by taking the log of its values__.

##### Skew Distribution

##### Log of the Skew Distribution.

[Guide to Skewness](https://en.wikipedia.org/wiki/Skewness)

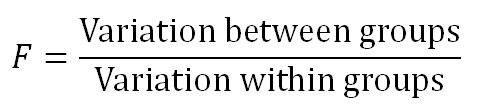

## 12_ ANOVA

ANOVA stands for __analysis of variance__.

It is used to compare groups of data.

Often we are provided with a huge amount of data, too large to work with directly. The total data is called the __Population__.

In order to work with it, we pick smaller random groups of data. They are called __Samples__.

ANOVA compares these groups or samples by analysing their variance.

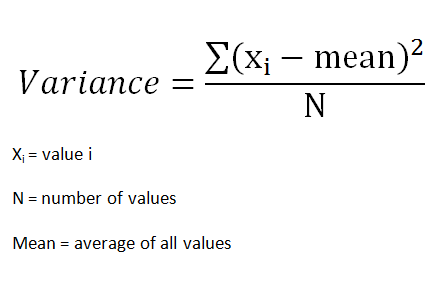

The variance of a group is given by:

$$s^2 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})^2}{n - 1}$$

where $\bar{x}$ is the group mean and $n$ is the number of points in the group.

The differences between the collected samples are observed through the differences between the group means. We often use the __t-test__ to compare the means and to check whether the samples belong to the same population.

However, a t-test can only compare two groups, and we often have more groups or samples.

If we try to use the t-test for more than two groups, we have to perform it multiple times, once for each pair, which also increases the chance of a false positive. This is where ANOVA is used.

ANOVA has two components:

__1.Variation within each group__

__2.Variation between groups__

It works on a ratio called the __F-ratio__.

It is given by:

$$F = \frac{MS_{between}}{MS_{within}} = \frac{SS_{between} / (k - 1)}{SS_{within} / (N - k)}$$

where $SS$ denotes a sum of squared deviations, $k$ is the number of groups and $N$ is the total number of observations.

The F-ratio compares the variation between groups with the variation within groups. If much of the variation comes from the variation between groups, it is more likely that the group means are different. However, if most of the variation comes from the variation within groups, then the elements within a group differ from each other more than the groups differ from one another. The larger the F-ratio, the more likely it is that the groups have different means.
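The two components can be computed by hand for a one-way ANOVA. The sketch below follows the usual textbook definition (between-group and within-group sums of squares, each divided by its degrees of freedom); the function name `f_ratio` and the sample groups are assumptions for illustration.

```python
from statistics import mean

def f_ratio(groups):
    """One-way ANOVA F-ratio for a list of groups (lists of numbers)."""
    k = len(groups)                                  # number of groups
    n_total = sum(len(g) for g in groups)            # total observations
    grand_mean = mean(x for g in groups for x in g)

    # Variation between groups: group means around the grand mean
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation within groups: points around their own group mean
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)

    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n_total - k)
    return ms_between / ms_within

different = f_ratio([[1, 2, 3], [2, 3, 4], [5, 6, 7]])
identical = f_ratio([[1, 2, 3], [1, 2, 3]])
```

Here `different` is 13 (the third group sits far from the others), while `identical` is 0 because the group means coincide. Turning F into a decision still requires comparing it with an F distribution, which this sketch leaves out.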

Resources:

[Definition](https://statistics.laerd.com/statistical-guides/one-way-anova-statistical-guide.php)

[GUIDE 1](https://towardsdatascience.com/anova-analysis-of-variance-explained-b48fee6380af)

[Details](https://medium.com/@StepUpAnalytics/anova-one-way-vs-two-way-6b3ff87d3a94)

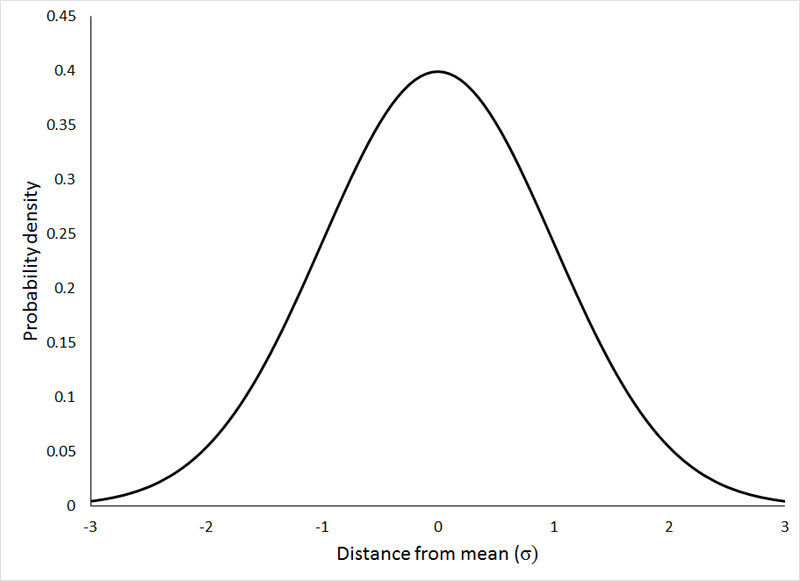

## 13_ Prob Den Fn (PDF)

PDF stands for probability density function.

__In probability theory, a probability density function (PDF), or density of a continuous random variable, is a function whose value at any given sample (or point) in the sample space (the set of possible values taken by the random variable) can be interpreted as providing a relative likelihood that the value of the random variable would equal that sample.__

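The probability density function (PDF) P(x) of a continuous distribution is defined as the derivative of the (cumulative) distribution function D(x):

$$P(x) = \frac{dD(x)}{dx}$$

The probability that the random variable falls within a given range is given by the integral of the PDF over that range:

$$\Pr(a \le X \le b) = \int_a^b P(x)\,dx$$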

## 14_ Central Limit theorem

## 15_ Monte Carlo method

## 16_ Hypothesis Testing

### Types of curves

We need to know about two distribution curves first.

Distribution curves reflect the probability of finding an instance or a sample of a population at a certain value of the distribution.

__Normal Distribution__

The normal distribution describes data in which most of the samples are scattered at and around the mean of the distribution. A few instances are present at the long tail ends of the distribution.

A few points about normal distributions:

1. The curve is always bell-shaped. This is because most of the data is found around the mean, so the probability of finding a sample at or near the mean is highest.

2. The curve is symmetric about the mean.

3. The area under the curve is always 1, because the probabilities of all possible values must add up to 1.

4. For a normal distribution, the mean and the median coincide.

__Standard Normal Distribution__

A standard normal distribution is a normal distribution that satisfies the following conditions:

1. Mean of the distribution is 0

2. The Standard Deviation of the distribution is equal to 1.

The idea of Hypothesis Testing works completely on the data distributions.

### Hypothesis Testing

Hypothesis testing is a statistical method used to make statistical decisions from experimental data. A hypothesis is basically an assumption that we make about a population parameter.

For example, say we take the hypothesis that boys in a class are taller than girls.

The above statement is just an assumption about the population of the class.

A __hypothesis__ is just an assumptive proposal or statement made on the basis of observations of a set of information or data.

We initially propose two mutually exclusive statements about the population from which the sample data is drawn.

The first one is called the __NULL HYPOTHESIS__. It is denoted by H0.

The second one is called the __ALTERNATE HYPOTHESIS__. It is denoted by H1 or Ha. It is the statement contrary to the null hypothesis.

Based on the sample, we either reject the null hypothesis in favour of the alternate hypothesis, or fail to reject it (informally, we "accept" it).

#### Level of Significance

It is the threshold we use to decide whether to accept or reject the null hypothesis. A hypothesis about a population is rarely satisfied by 100% of the observed instances, so we decide on a __level of significance as a cutoff: it is the probability of wrongly rejecting the null hypothesis that we are willing to tolerate. If our level of significance is 5%, the corresponding confidence level is (100-5)% = 95%.__

__We then say the hypothesis is accepted with 95% confidence.__

The non-rejection region is called the __acceptance region__. The rejection regions are called __critical or alpha regions__. __alpha__ denotes the __level of significance__, which is the total area of the critical regions.

If the level of significance is 5% in a two-tailed test, the two alpha regions together hold (2.5+2.5)% of the distribution and the acceptance region holds the remaining 95%.

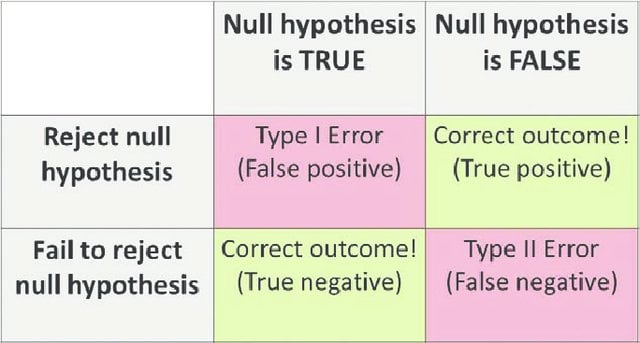

The acceptance and rejection gives rise to two kinds of errors:

__Type-I Error:__ The null hypothesis is true, but is wrongly rejected.

__Type-II Error:__ The null hypothesis is false, but is wrongly accepted.

### Tests for Hypothesis

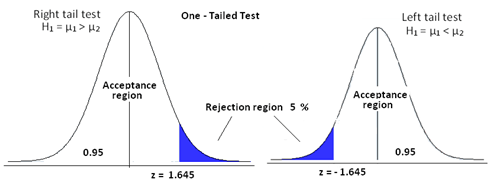

__One Tailed Test__:

This is a hypothesis test in which the rejection region lies on only one side of the sampling distribution. The rejection region may be in the right tail or in the left tail.

For example, say our level of significance is 5% and the null hypothesis is "The mean height of boys in a class is <= 6 ft". We reject it only if the sample mean falls in the top 5% of the sampling distribution, that is, far enough above 6 ft that such a value would be seen at most 5% of the time if the hypothesis were true. The test is one-tailed because the condition only restricts one tail, the one with height > 6 ft.

__Two Tailed Test__:

In this case, the rejection region extends to both tail ends of the distribution.

For example, say our level of significance is 5% and the null hypothesis is "The mean height of boys in a class is 6 ft", tested against the alternative "The mean height is != 6 ft".

Here, we reject the null hypothesis if the sample mean is either much lower or much higher than 6 feet. The critical region is therefore split between both tail ends, with 5% / 2 = 2.5% at each end of the distribution.

## 17_ p-Value

Before we jump into P-values we need to look at another important topic in the context: Z-test.

### Z-test

We need to know two terms: __Population and Sample.__

__Population__ describes the entire available data distributed. So, it refers to all records provided in the dataset.

__Sample__ is said to be a group of data points randomly picked from a population or a given distribution. The size of the sample can be any number of data points, given by __sample size.__

__Z-test__ is simply used to determine if a given sample distribution belongs to a given population.

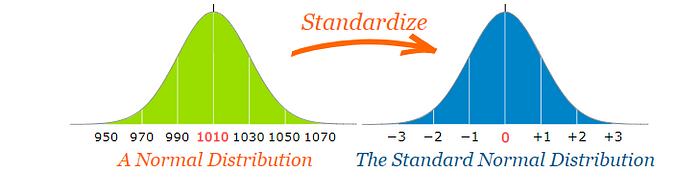

Now,for Z-test we have to use __Standard Normal Form__ for the standardized comparison measures.

As we already have seen, standard normal form is a normal form with mean=0 and standard deviation=1.

The __Standard Deviation__ is a measure of how much differently the points are distributed around the mean.

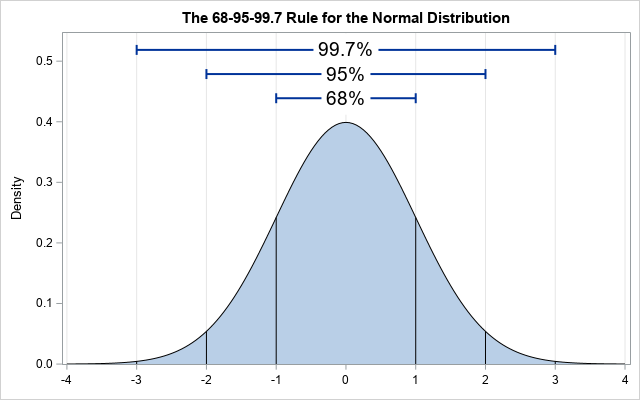

It states that approximately 68% , 95% and 99.7% of the data lies within 1, 2 and 3 standard deviations of a normal distribution respectively.

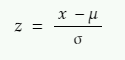

Now, to convert the normal distribution to standard normal distribution we need a standard score called Z-Score.

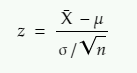

It is given by:

$$z = \frac{x - \mu}{\sigma}$$

where:

x = value that we want to standardize

µ = mean of the distribution of x

σ = standard deviation of the distribution of x

We need to know another concept __Central Limit Theorem__.

##### Central Limit Theorem

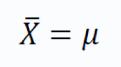

_The theorem states that the mean of the sampling distribution of the sample means is equal to the population mean, irrespective of the distribution of the population, provided the sample size is large enough (a common rule of thumb is greater than 30)._

And

_The sampling distribution of the sample mean will also approximately follow a normal distribution._

So, it states, if we pick several samples from a distribution with the size above 30, and pick the static sample means and use the sample means to create a distribution, the mean of the newly created sampling distribution is equal to the original population mean.

According to the theorem, if we draw samples of size N, from a population with population mean μ and population standard deviation σ, the condition stands:

i.e, mean of the distribution of sample means is equal to the sample means.

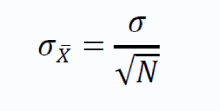

The standard deviation of the sample means is give by:

The above term is also called standard error.

We use the theory discussed above for the Z-test. If the sample mean lies close to the population mean, we say that the sample belongs to the population; if it lies far from the population mean, we say the sample is taken from a different population.

To do this, we use the following formula and check whether the absolute value of the z statistic is greater than or less than 1.96 (for a two-tailed test with level of significance = 5%):

$$z = \frac{\bar{X} - \mu}{\sigma / \sqrt{n}}$$

The above formula gives the z statistic, where:

z = z statistic

X̄ = sample mean

μ = population mean

σ = population standard deviation

n = sample size

Now, as the Z-score is used to standardize the distribution, it gives us an idea how the data is distributed overall.
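The test can be sketched with the standard library alone. The numbers below (a population with mean 100 and standard deviation 15, and a sample of 100 points with mean 103) are invented for illustration, and `z_statistic` is a name chosen for this example.

```python
import math
from statistics import NormalDist

def z_statistic(sample_mean, pop_mean, pop_std, n):
    """z = (sample mean - population mean) / standard error"""
    standard_error = pop_std / math.sqrt(n)
    return (sample_mean - pop_mean) / standard_error

# Two-tailed test at the 5% level: 2.5% in each tail of the standard normal
critical = NormalDist().inv_cdf(1 - 0.05 / 2)   # about 1.96

z = z_statistic(sample_mean=103, pop_mean=100, pop_std=15, n=100)
sample_differs = abs(z) > critical
```

Here the standard error is 1.5 and z is 2.0, which is just beyond the critical value, so the sample mean is judged to be far from the population mean at the 5% level.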

### P-values

The p-value is used to check whether the results are statistically significant at the chosen significance level.

Say we perform an experiment and collect observations or data. We first state a primary hypothesis (the null hypothesis) and a second hypothesis, contradictory to the first one, called the alternative hypothesis.

Then we choose a level of significance, which serves as the threshold for rejecting the null hypothesis. The p-value is the probability of obtaining results at least as extreme as those observed, assuming the null hypothesis is true. It is not the probability that either hypothesis is true. For example, a p-value of 0.02 means that, if the null hypothesis were true, data this extreme would be observed only 2% of the time.

Now the level of significance comes into play to decide whether a p-value of 0.02 is small enough. If our level of significance is 5%, the p-value (0.02) is less than the level of significance (0.05), so we tell that the result is __statistically significant, and we reject the NULL hypothesis__.

But if the p-value is greater than the level of significance, the result is not statistically significant, and we fail to reject the null hypothesis.

Resources:

1. https://medium.com/analytics-vidhya/everything-you-should-know-about-p-value-from-scratch-for-data-science-f3c0bfa3c4cc

2. https://towardsdatascience.com/p-values-explained-by-data-scientist-f40a746cfc8

3. https://medium.com/analytics-vidhya/z-test-demystified-f745c57c324c

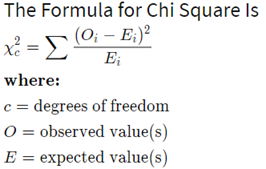

## 18_ Chi2 test

Chi2 test is extensively used in data science and machine learning problems for feature selection.

A chi-square test is used in statistics to test the independence of two events, so it can be used to check whether features are independent. Dependent features often convey little additional information yet add dimensionality to the feature space.

It is one of the most common ways to examine relationships between two or more categorical variables.

It involves calculating a number, called the chi-square statistic (χ2), which follows a chi-square distribution.

It is given as the sum, over all categories, of the squared difference between the observed and expected values, divided by the expected value:

$$\chi^2 = \sum_i \frac{(O_i - E_i)^2}{E_i}$$
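For a test of independence, the statistic is computed from a contingency table of observed counts: each squared difference between an observed and an expected count is divided by the expected count, and the results are summed. The sketch below assumes the usual definition of the expected count (row total times column total, divided by the grand total); the function name `chi_square` and the tables are invented for illustration.

```python
def chi_square(observed):
    """Chi-square statistic for a contingency table (list of rows of counts)."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    total = sum(row_totals)

    statistic = 0.0
    for i, row in enumerate(observed):
        for j, obs in enumerate(row):
            # Count expected in this cell if rows and columns were independent
            expected = row_totals[i] * col_totals[j] / total
            statistic += (obs - expected) ** 2 / expected
    return statistic

dependent = chi_square([[10, 20], [20, 10]])
independent = chi_square([[10, 20], [20, 40]])
```

The second table has identical proportions in both rows, so its statistic is 0; the first gives about 6.67. Deciding significance still requires comparing the statistic with a chi-square distribution with the appropriate degrees of freedom.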

Resources:

[Definitions](https://www.investopedia.com/terms/c/chi-square-statistic.asp)

[Guide 1](https://towardsdatascience.com/chi-square-test-for-feature-selection-in-machine-learning-206b1f0b8223)

[Guide 2](https://medium.com/swlh/what-is-chi-square-test-how-does-it-work-3b7f22c03b01)

[Example of Operation](https://medium.com/@kuldeepnpatel/chi-square-test-of-independence-bafd14028250)

## 19_ Estimation

## 20_ Confid Int (CI)

## 21_ MLE

## 22_ Kernel Density estimate

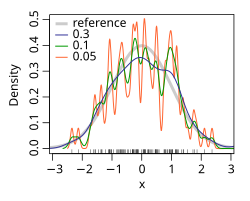

In statistics, kernel density estimation (KDE) is a non-parametric way to estimate the probability density function of a random variable. Kernel density estimation is a fundamental data smoothing problem where inferences about the population are made, based on a finite data sample.

Kernel Density estimate can be regarded as another way to represent the probability distribution.

It consists of choosing a kernel function. Three are most commonly used:

1. Gaussian

2. Box

3. Tri

The kernel function depicts the probability of finding a data point. So, it is highest at the centre and decreases as we move away from the point.

We place a kernel function over each of the data points and finally add the functions up, to get the density estimate of the distributed data points. In practice, the estimate at a particular point on the axis is the sum of the kernel function values at that point. It is as shown below.

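Now, the kernel density estimator is given by:

$$\hat{f}_h(x) = \frac{1}{nh}\sum_{i=1}^{n} K\left(\frac{x - x_i}{h}\right)$$

where x_1, ..., x_n are the sample points, K is the kernel (a non-negative function) and h > 0 is a smoothing parameter called the bandwidth.

The bandwidth 'h' is the parameter that controls the shape of the curve: a small h gives a spiky estimate, and a large h gives an over-smoothed one.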

Kernel density estimate (KDE) with different bandwidths of a random sample of 100 points from a standard normal distribution. Grey: true density (standard normal). Red: KDE with h=0.05. Black: KDE with h=0.337. Green: KDE with h=2.
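The estimator can be sketched in a few lines of plain Python. This is only an illustration with a Gaussian kernel; the sample values and the bandwidths are assumptions chosen for the example, not values from the figure.

```python
import math


def gaussian_kernel(u):
    """Standard normal density, used here as the kernel K."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def kde(x, samples, h):
    """Kernel density estimate at x: average of kernels centred on each sample."""
    n = len(samples)
    return sum(gaussian_kernel((x - xi) / h) for xi in samples) / (n * h)


# Hypothetical sample and two bandwidths to compare.
samples = [-1.0, 0.0, 2.0]
for h in (0.2, 1.0):
    print(h, kde(0.0, samples, h))
```

With the small bandwidth the estimate is concentrated tightly around each sample, while the larger one spreads the mass out and smooths the curve.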

Resources:

[Basics](https://www.youtube.com/watch?v=x5zLaWT5KPs)

[Advanced](https://jakevdp.github.io/PythonDataScienceHandbook/05.13-kernel-density-estimation.html)

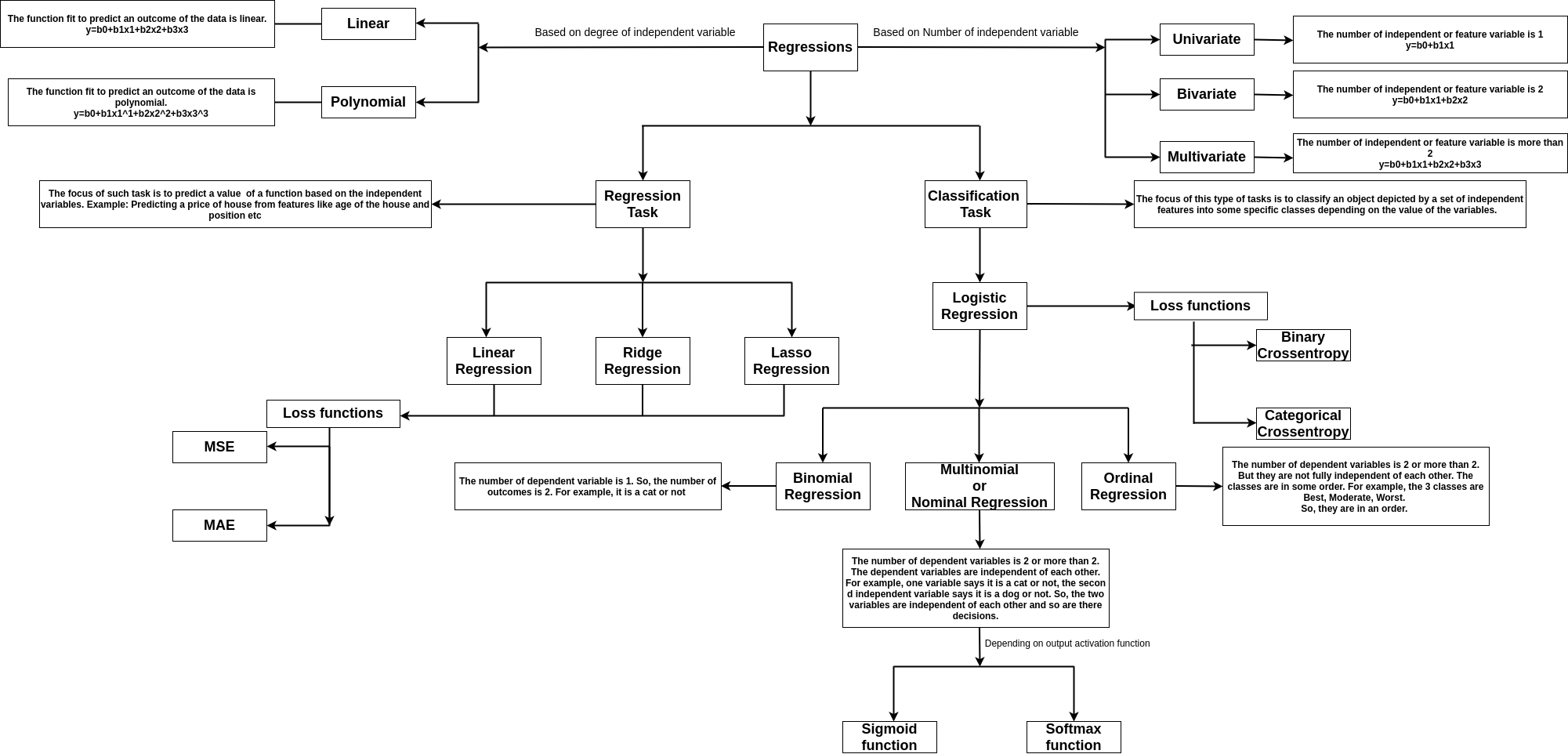

## 23_ Regression

Regression tasks deal with predicting the value of a __dependent variable__ from a set of __independent variables.__

Say we want to predict the price of a car. The price becomes the dependent variable, say Y, and features like engine capacity, top speed, class, and company become the independent variables, which help to frame the equation to obtain the price.

If there is one feature, say x, and the dependent variable y is linearly dependent on x, then it can be given by __y=mx+c__, where m is the coefficient of the independent variable in the equation and c is the intercept or bias.

The image shows the types of regression

[Guide to Regression](https://towardsdatascience.com/a-deep-dive-into-the-concept-of-regression-fb912d427a2e)

## 24_ Covariance

### Variance

The variance is a measure of how dispersed or spread out the set is. If the variance is zero, all the elements in the dataset are the same. If the variance is low, the data are slightly dissimilar. If the variance is very high, the data in the dataset are largely dissimilar.

Mathematically, it is a measure of how far each value in the data set is from the mean.

Variance (sigma^2) is given by the sum of the squared distances of each point from the mean, divided by the number of points:

$$\sigma^2 = \frac{1}{n}\sum_{i=1}^{n}(X_i - \bar{X})^2$$

### Covariance

Covariance gives us an idea about the degree of association between two considered random variables. Now, we know random variables create distributions. A distribution is a set of values or data points which the variable takes, and we can easily represent it as a vector in the vector space.

For vectors, covariance is defined as the dot product of the two mean-centered vectors, divided by the number of points. The value of covariance can vary from negative infinity to positive infinity. If the two distributions or vectors grow in the same direction the covariance is positive, and if they grow in opposite directions it is negative. The sign gives the direction of variation and the magnitude gives the amount of variation.

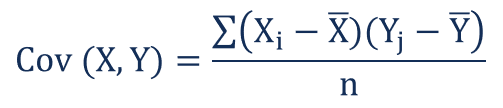

Covariance is given by:

$$Cov(X,Y) = \frac{1}{n}\sum_{i=1}^{n}(X_i - \bar{X})(Y_i - \bar{Y})$$

where Xi and Yi denote the i-th points of the two distributions, X-bar and Y-bar represent the mean values of the two distributions, and n represents the number of values or data points in each distribution.
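A small sketch of variance and covariance, assuming the population form (division by n) used in the text; many libraries divide by n - 1 instead for sample estimates. The function names and data are illustrative.

```python
def mean(values):
    return sum(values) / len(values)


def variance(xs):
    """Population variance: mean squared distance from the mean."""
    m = mean(xs)
    return sum((x - m) ** 2 for x in xs) / len(xs)


def covariance(xs, ys):
    """Population covariance of two equally long sequences."""
    mx, my = mean(xs), mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)


# Hypothetical data: ys grows with xs, so the covariance is positive.
xs = [1, 2, 3]
ys = [2, 4, 6]
print(variance(xs), covariance(xs, ys))
```

The covariance of a variable with itself is its variance, which is a quick sanity check for both functions.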

## 25_ Correlation

Covariance measures the total relation of the variables, namely both direction and magnitude. Correlation is a scaled measure of covariance. It is dimensionless and independent of scale. It shows the strength and direction of the linear relationship between the two variables.

Mathematically, if we represent the distributions using mean-centered vectors, correlation is the cosine of the angle between the vectors. The value of correlation varies from +1 to -1. +1 is a perfect positive correlation and -1 is a perfect negative correlation. 0 implies no linear correlation; note that this does not by itself mean the two variables are independent of each other.

Correlation is given by:

$$\rho(X,Y) = \frac{Cov(X,Y)}{\sigma_X \sigma_Y}$$

Where:

ρ(X,Y) – the correlation between the variables X and Y

Cov(X,Y) – the covariance between the variables X and Y

σX – the standard deviation of the X-variable

σY – the standard deviation of the Y-variable

Standard deviation is given by the square root of the variance.
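The correlation formula can be sketched as follows, assuming population covariance and standard deviation (division by n). The helper names and data are made up for the example.

```python
import math


def mean(values):
    return sum(values) / len(values)


def covariance(xs, ys):
    mx, my = mean(xs), mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)


def std_dev(xs):
    # Standard deviation is the square root of the variance.
    return math.sqrt(covariance(xs, xs))


def correlation(xs, ys):
    """Covariance scaled by both standard deviations."""
    return covariance(xs, ys) / (std_dev(xs) * std_dev(ys))


print(correlation([1, 2, 3], [2, 4, 6]))   # same direction
print(correlation([1, 2, 3], [6, 4, 2]))   # opposite direction
# y = x^2 is fully determined by x, yet the linear correlation is zero.
print(correlation([-2, -1, 0, 1, 2], [4, 1, 0, 1, 4]))
```

The last call illustrates that a correlation of 0 only rules out a linear relationship between the two variables.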

## 26_ Pearson coeff

## 27_ Causation

## 28_ Least2-fit

## 29_ Euclidean Distance

__Euclidean Distance is the most used and standard measure for the distance between two points.__

It is given as the square root of sum of squares of the difference between coordinates of two points.

__The Euclidean distance between two points in Euclidean space is a number, the length of a line segment between the two points. It can be calculated from the Cartesian coordinates of the points using the Pythagorean theorem, and is occasionally called the Pythagorean distance.__

__In the Euclidean plane, let point p have Cartesian coordinates (p1, p2) and let point q have coordinates (q1, q2). Then the distance between p and q is given by:__

$$d(p,q) = \sqrt{(q_1 - p_1)^2 + (q_2 - p_2)^2}$$
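As a short sketch, the distance can be computed for points of any dimension by summing the squared coordinate differences. The function name is an assumption for illustration; Python 3.8+ also ships `math.dist`, which does the same thing.

```python
import math


def euclidean_distance(p, q):
    """Square root of the sum of squared coordinate differences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


# A 3-4-5 right triangle: the distance from the origin to (3, 4).
print(euclidean_distance((0, 0), (3, 4)))
```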

# 3_ Programming

## 1_ Python Basics

### About

Python is a high-level programming language. It can be used for a wide range of tasks.

Commonly used in data science, [Python](https://www.python.org/) has a huge set of libraries that help you get things done quickly.

Many operating systems, including most Linux distributions, ship with Python already installed.

### Execute a script

* Download the .py file on your computer

* Make it executable (_chmod +x file.py_ on Linux)

* Open a terminal and go to the directory containing the python file

* _python file.py_ to run with Python2 or _python3 file.py_ with Python3

## 2_ Working in excel

## 3_ R setup / R studio

### About

R is a programming language specialized in statistics and mathematical visualizations.

It can be used with manually created scripts using the terminal, or directly in the R console.

### Installation

#### Linux

    sudo apt-get install r-base

    sudo apt-get install r-base-dev

#### Windows

Download the .exe setup available on [CRAN](https://cran.rstudio.com/bin/windows/base/) website.

### R-studio

RStudio is a graphical interface for R. It is available for free on [their website](https://www.rstudio.com/products/rstudio/download/).

This interface is divided into 4 main areas:

* The top left is the script you are working on (highlight the code you want to execute and press Ctrl + Enter)

* The bottom left is the console, to instantly execute a few lines of code

* The top right shows your environment (variables, history, ...)

* The bottom right shows the figures you plotted, packages, help ... The result of code execution

## 4_ R basics

R is an open source programming language and software environment for statistical computing and graphics that is supported by the R Foundation for Statistical Computing.

The R language is widely used among statisticians and data miners for developing statistical software and data analysis.

Polls, surveys of data miners, and studies of scholarly literature databases show that R's popularity has increased substantially in recent years.

## 5_ Expressions

## 6_ Variables

## 7_ IBM SPSS

## 8_ Rapid Miner

## 9_ Vectors

## 10_ Matrices

## 11_ Arrays

## 12_ Factors

## 13_ Lists

## 14_ Data frames

## 15_ Reading CSV data

CSV is a format of __tabular data__ commonly used in data science. Most structured data will come in such a format.

To __open a CSV file__ in Python, just open the file as usual:

    raw_file = open('file.csv', 'r')

* 'r': Reading, no modification on the file is possible

* 'w': Writing, the existing content of the file is erased when it is opened

* 'a': Appending, every modification will be made at the end of the file

### How to read it ?

Most of the time, you will parse this file line by line and do whatever you want with each line. If you want to store data to use it later, build lists or dictionaries.

To read such a file row by row, you can use:

* Python [library csv](https://docs.python.org/3/library/csv.html)

* Python [function open](https://docs.python.org/3/library/functions.html#open)
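A minimal sketch of reading CSV rows with the standard `csv` module is given below. To keep the example self-contained it reads from an in-memory string instead of a real file; the column names and values are made up. With a real file you would pass the object returned by `open('file.csv', 'r', newline='')` instead.

```python
import csv
import io

# Stand-in for a file on disk (hypothetical content).
raw_file = io.StringIO("name,height\nAlice,165\nBob,180\n")

# DictReader uses the first row as column names.
reader = csv.DictReader(raw_file)
rows = [row for row in reader]

# Values are read as strings, so convert them before doing arithmetic.
heights = [int(row["height"]) for row in rows]
print(rows[0]["name"], sum(heights) / len(heights))
```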

## 16_ Reading raw data

## 17_ Subsetting data

## 18_ Manipulate data frames

## 19_ Functions

A function is useful for running repetitive actions.

First, define the function:

    def MyFunction(number):
        """This function will multiply a number by 9"""
        number = number * 9
        return number

Then call it:

    print(MyFunction(2))  # prints 18

## 20_ Factor analysis

## 21_ Install PKGS

Python has two major versions: Python 2 and Python 3. Python 2 reached end of life in January 2020, so prefer Python 3 for new work.

### Install pip

Pip is a package manager for Python. With it, you can install most packages with a one-line command. To install pip, just go to a terminal and do:

    # python2
    sudo apt-get install python-pip

    # python3
    sudo apt-get install python3-pip

You can then install a library with [pip](https://pypi.python.org/pypi/pip?) via a terminal doing:

    # python2
    sudo pip install [PCKG_NAME]

    # python3
    sudo pip3 install [PCKG_NAME]

You also can install it directly from the core (see 21_install_pkgs.py)

# 4_ Machine learning

## 1_ What is ML ?

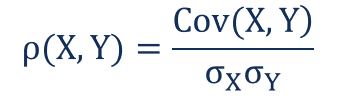

### Definition

Machine Learning is part of the study of Artificial Intelligence. It concerns the design, development and implementation of sophisticated methods that allow a machine to achieve really hard tasks, nearly impossible to solve with classic algorithms.

Machine learning is usually divided into three types of learning: supervised, unsupervised and reinforcement learning.

### Utilisation examples

* Computer vision

* Search engines

* Financial analysis

* Documents classification

* Music generation

* Robotics ...

## 2_ Numerical var

Variables which can take continuous integer or real values. They can take an infinite number of values.

These types of variables are mostly used for features which involve measurements. For example, the heights of all students in a class.

## 3_ Categorical var

Variables that take finite discrete values. They take a fixed set of values, in order to classify a data item.

They act like assigned labels. For example: Labelling the students of a class according to gender: 'Male' and 'Female'

## 4_ Supervised learning

Supervised learning is the machine learning task of inferring a function from __labeled training data__.

The training data consist of a __set of training examples__.

In supervised learning, each example is a pair consisting of an input object (typically a vector) and a desired output value (also called the supervisory signal).

A supervised learning algorithm analyzes the training data and produces an inferred function, which can be used for mapping new examples.

In other words:

Supervised Learning learns from a set of labeled examples. From the instances and the labels, supervised learning models try to find the correlation among the features, used to describe an instance, and learn how each feature contributes to the label corresponding to an instance. On receiving an unseen instance, the goal of supervised learning is to label the instance based on its feature correctly.

__An optimal scenario will allow for the algorithm to correctly determine the class labels for unseen instances__.

## 5_ Unsupervised learning

Unsupervised machine learning is the machine learning task of inferring a function to describe hidden structure __from "unlabeled" data__ (a classification or categorization is not included in the observations).

Since the examples given to the learner are unlabeled, there is no evaluation of the accuracy of the structure that is output by the relevant algorithm—which is one way of distinguishing unsupervised learning from supervised learning and reinforcement learning.

Unsupervised learning deals with data instances only. This approach tries to group data and form clusters based on the similarity of features. If two instances have similar features and are placed in close proximity in feature space, there is a high chance that the two instances belong to the same cluster. On getting an unseen instance, the algorithm will try to find which cluster the instance should belong to, based on its features.

Resource:

[Guide to unsupervised learning](https://towardsdatascience.com/a-dive-into-unsupervised-learning-bf1d6b5f02a7)

## 6_ Concepts, inputs and attributes

A machine learning problem takes in the features of a dataset as input.

For supervised learning, the model trains on the data and then it is ready to perform. So, for supervised learning, apart from the features we also need to input the corresponding labels of the data points to let the model train on them.

For unsupervised learning, the models work by finding complex relations among data items and grouping them accordingly. So, unsupervised learning does not need a labelled dataset. The input is only the feature section of the dataset.

## 7_ Training and test data

If we train a supervised machine learning model using a dataset, the model captures the dependencies of that particular data set very deeply. So, the model will always perform well on that data, and this won't be a proper measure of how well the model performs.

To know how well the model performs, we must train and test the model on different datasets. The dataset we train the model on is called the training set, and the dataset we test the model on is called the test set.

We normally split the provided dataset to create the training and test sets. The test-to-train ratio is typically 3:7 or 2:8 depending on the data, the larger part being the training data.

#### sklearn.model_selection.train_test_split is used for splitting the data.

Syntax:

    from sklearn.model_selection import train_test_split

    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.33, random_state=42)

[Sklearn docs](https://scikit-learn.org/stable/modules/generated/sklearn.model_selection.train_test_split.html)
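To make the idea concrete without any dependency, here is a rough sketch of what such a split does: shuffle the indices with a fixed seed, then cut them into two parts. This is an illustration only, not scikit-learn's implementation; the function name and the toy data are assumptions.

```python
import random


def split_train_test(X, y, test_size=0.3, seed=42):
    """Shuffle the row indices, then keep the first part for testing."""
    indices = list(range(len(X)))
    random.Random(seed).shuffle(indices)
    n_test = int(round(len(X) * test_size))
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    X_train = [X[i] for i in train_idx]
    X_test = [X[i] for i in test_idx]
    y_train = [y[i] for i in train_idx]
    y_test = [y[i] for i in test_idx]
    return X_train, X_test, y_train, y_test


# Hypothetical dataset: 10 samples, the label is twice the feature.
X = list(range(10))
y = [2 * value for value in X]
X_train, X_test, y_train, y_test = split_train_test(X, y)
print(len(X_train), len(X_test))
```

Fixing the seed makes the split reproducible, which is the role `random_state` plays in the scikit-learn call.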

## 8_ Classifiers

Classification is one of the most important and most common machine learning problems. It is typically a supervised problem; grouping unlabelled data into classes is usually called clustering.

Classification problems involve labelling data points as belonging to a particular class, based on the feature set corresponding to the particular data point.

Classification tasks can be performed using both machine learning and deep learning techniques.

Machine learning classification techniques include Logistic Regression, SVMs, and classification trees. The models used to perform the classification are called classifiers.

## 9_ Prediction

The output generated by a machine learning model for a particular problem is called its prediction.

There are two main kinds of predictions, corresponding to two types of problems:

1. Classification

2. Regression

In classification, the prediction is mostly a class or label to which a data point belongs.

In regression, the prediction is a number, a continuous numeric value, because regression problems deal with predicting a value. For example, predicting the price of a house.

## 10_ Lift

## 11_ Overfitting

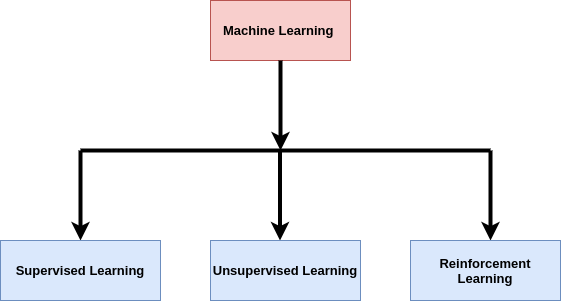

Often we train our model so much, or make our model so complex, that it fits too tightly to the training data.

The training data often contains outliers or represents misleading patterns in the data. Fitting the training data with such irregularities too deeply causes the model to lose its generalization. The model performs very well on the training set but not so well on the test set.

As we can see, when training beyond a certain point the training error keeps decreasing while the testing error increases.

A hypothesis h1 is said to overfit iff there exists another hypothesis h where h gives more error than h1 on the training data and less error than h1 on the test data.
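The definition can be illustrated with a toy experiment. In this sketch, which uses made-up data, one model memorizes the noisy training points (it returns the label of the closest training point) and the other fits a straight line. The data, the noise pattern and the function names are all assumptions chosen for illustration.

```python
def mse(model, xs, ys):
    """Mean squared error of a model on a dataset."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit_line(xs, ys):
    """Least squares straight line y = m*x + c."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    m = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    c = my - m * mx
    return lambda x: m * x + c


def fit_memorizer(xs, ys):
    """Overly flexible model: return the label of the closest training point."""
    def model(x):
        nearest = min(range(len(xs)), key=lambda i: abs(xs[i] - x))
        return ys[nearest]
    return model


# Training data: y = 2x plus alternating noise of +1 and -1.
train_x = [0, 1, 2, 3, 4, 5]
train_y = [1, 1, 5, 5, 9, 9]
# Test data: noise-free points from the same trend y = 2x.
test_x = [0.5, 1.5, 2.5, 3.5, 4.5]
test_y = [1, 3, 5, 7, 9]

memorizer = fit_memorizer(train_x, train_y)
line = fit_line(train_x, train_y)
print("memorizer:", mse(memorizer, train_x, train_y), mse(memorizer, test_x, test_y))
print("line:     ", mse(line, train_x, train_y), mse(line, test_x, test_y))
```

The memorizer has zero training error but a larger test error than the line, so by the definition above it overfits.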

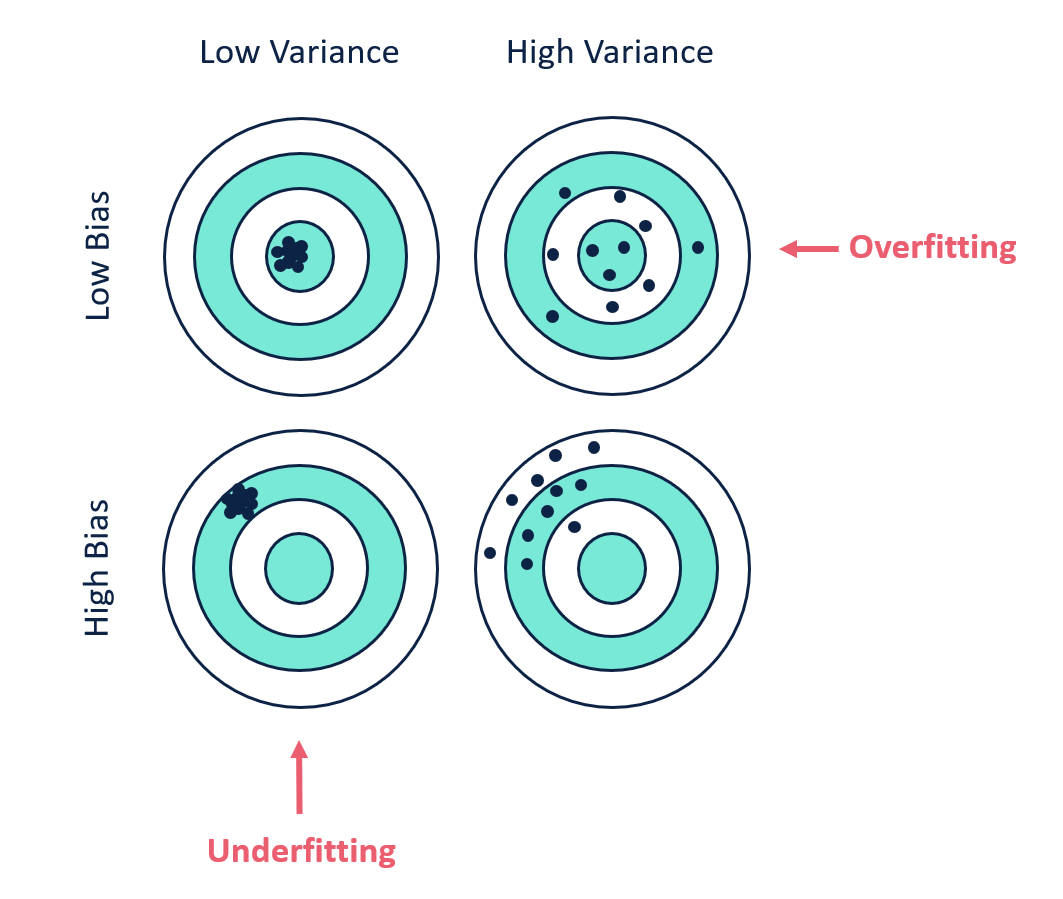

## 12_ Bias & variance

Bias is the difference between the average prediction of our model and the correct value which we are trying to predict. A model with high bias pays very little attention to the training data and oversimplifies the problem. It always leads to high error on training and test data.

Variance is the variability of the model prediction for a given data point, which tells us the spread of our predictions. A model with high variance pays a lot of attention to the training data and does not generalize to data which it hasn’t seen before. As a result, such models perform very well on training data but have high error rates on test data.

Basically, high variance causes overfitting and high bias causes underfitting. We want our model to have low bias and low variance to perform well. We need to avoid a model with high variance or high bias.

We can see that for low bias and low variance our model predicts all the data points correctly. Again, in the last image, having high bias and high variance, the model predicts no data point correctly.

We can see from the graph that the error increases when the model is either too complex or too simple. The bias increases with simpler models and the variance increases with more complex models.

This is one of the most important tradeoffs in machine learning.

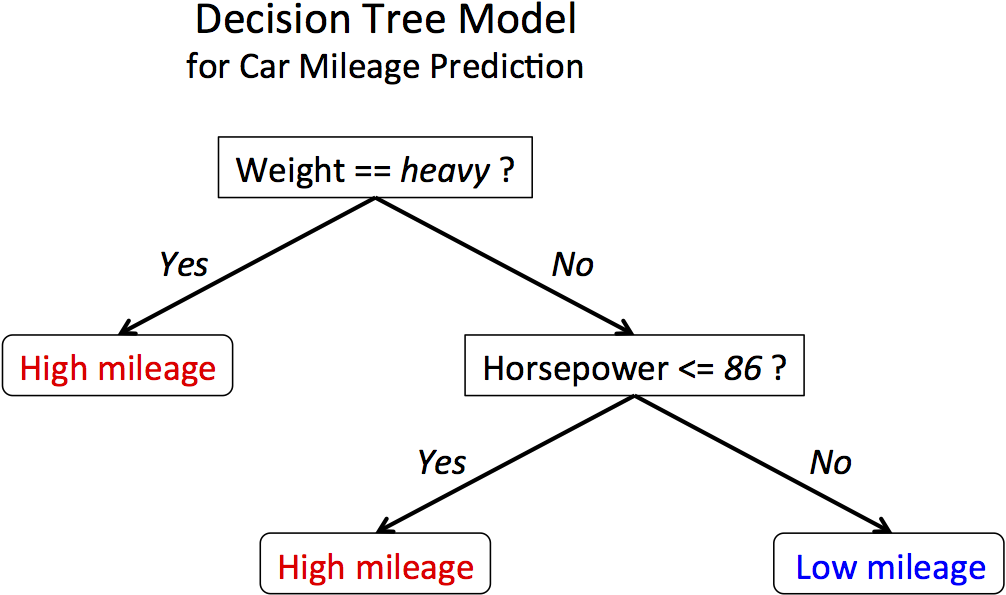

## 13_ Tree and classification

We have previously talked about classification. We have seen that the most used methods are Logistic Regression, SVMs and decision trees. Now, if the decision boundary is linear, methods like logistic regression and SVM serve best, but it is a completely different scenario when the decision boundary is non-linear; this is where a decision tree is used.

The first image shows a linear decision boundary and the second image shows a non-linear decision boundary.

In these cases, for non-linear boundaries, the condition-based approach of decision trees works very well for classification problems. The algorithm creates conditions on features to drive and reach a decision, so it is independent of the functional form of the boundary.

Decision tree approach for classification

## 14_ Classification rate

## 15_ Decision tree

Decision Trees are some of the most used machine learning algorithms. They are used for both classification and regression. They can be used for both linear and non-linear data, but they are mostly used for non-linear data. Decision Trees, as the name suggests, work on a set of decisions derived from the data and its behavior. They do not use a linear classifier or regressor, so their performance is independent of the linear nature of the data.

One of the other most important reasons to use tree models is that they are very easy to interpret.

Decision Trees can be used for both classification and regression. The methodologies are a bit different, though principles are the same. The decision trees use the CART algorithm (Classification and Regression Trees)
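To show how a tree picks one of its conditions, here is a minimal sketch that searches for the best threshold on a single feature using Gini impurity, the criterion commonly associated with CART classification trees. The data and function names are assumptions, and a real tree repeats this search over all features and recursively on each side.

```python
def gini(labels):
    """Gini impurity: 0 for a pure group, higher for mixed groups."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = {}
    for label in labels:
        counts[label] = counts.get(label, 0) + 1
    return 1.0 - sum((count / n) ** 2 for count in counts.values())


def best_split(xs, ys):
    """Return (threshold, weighted impurity) of the best split 'x <= threshold'."""
    best = None
    values = sorted(set(xs))
    # Candidate thresholds are midpoints between consecutive feature values.
    for low, high in zip(values, values[1:]):
        threshold = (low + high) / 2
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(ys)
        if best is None or score < best[1]:
            best = (threshold, score)
    return best


# Hypothetical one-feature dataset with two well separated classes.
xs = [1, 2, 3, 10, 11, 12]
ys = [0, 0, 0, 1, 1, 1]
print(best_split(xs, ys))
```

The chosen condition is the one that leaves the two sides as pure as possible, which is also why the resulting rules are easy to read.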

Resource:

[Guide to Decision Tree](https://towardsdatascience.com/a-dive-into-decision-trees-a128923c9298)

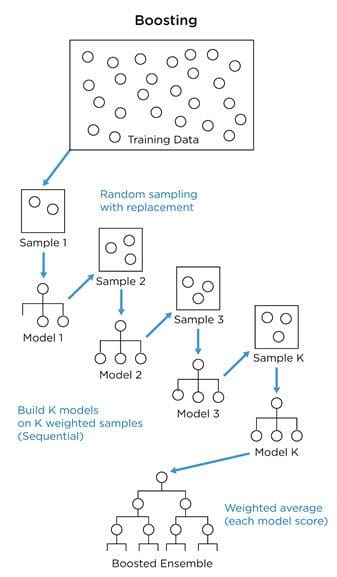

## 16_ Boosting

#### Ensemble Learning

It is the method used to enhance the performance of machine learning models by combining several models, or weak learners. The combined model usually predicts better than any single learner.

There are two types of ensemble learning:

__1. Parallel ensemble learning or bagging method__

__2. Sequential ensemble learning or boosting method__

In the parallel method, or bagging technique, several weak classifiers are created in parallel. The training datasets are created randomly on a bootstrapping basis from the original dataset, and each of these datasets is used to train one weak classifier. Later, during predictions, the results from all the classifiers are bagged together to provide the final result.

Ex: Random Forests

In sequential learning or boosting weak learners are created one after another and the data sample set are weighted in such a manner that during creation, the next learner focuses on the samples that were wrongly predicted by the previous classifier. So, at each step, the classifier improves and learns from its previous mistakes or misclassifications.

There are mostly three types of boosting algorithm:

__1. Adaboost__

__2. Gradient Boosting__

__3. XGBoost__

__Adaboost__ algorithm works in the exact way described above. It creates weak learners, also known as stumps: they are not fully grown trees, but contain a single node based on which the classification is done. The misclassifications are observed and they are weighted more than the correctly classified ones while training the next weak learner.

__sklearn.ensemble.AdaBoostClassifier__ is used for the application of the classifier on real data in python.

Resources:

[Understanding](https://blog.paperspace.com/adaboost-optimizer/#:~:text=AdaBoost%20is%20an%20ensemble%20learning,turn%20them%20into%20strong%20ones.)

__Gradient Boosting__ algorithm starts with a node giving a constant output, 0.5 in this example (in practice the starting value is usually something like the mean of the target for regression or the log-odds for classification). It serves as the first stump or weak learner. We then observe the errors in its predictions. Now, we create other learners or decision trees to actually predict those errors based on the conditions. The errors are called Residuals. Our final output is:

__0.5 (Provided by the first learner) + The error provided by the second tree or learner.__

Now, if we use this method as is, it fits the training predictions too tightly and loses generalization. In order to avoid that, gradient boosting uses a learning rate _alpha_.

So, the final results after two learners is obtained as:

__0.5 (Provided by the first learner) + _alpha_ X (The error provided by the second tree or learner.)__

We can see that using the added portion we take a small leap towards the correct results. We continue adding learners until the point we are very close to the actual value given by the training set.

Overall the equation becomes:

__0.5 (Provided by the first learner) + _alpha_ X (The error provided by the second tree or learner.)+ _alpha_ X (The error provided by the third tree or learner.)+.............__
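The additive form above can be sketched for a small regression problem. This is a simplified illustration, not scikit-learn's implementation: every learner is a one-split regression stump, the first prediction is assumed to be the mean of the targets rather than 0.5, and the data, names and parameter values are made up.

```python
def fit_stump(xs, residuals):
    """Regression stump: one threshold, one constant prediction per side."""
    best = None
    values = sorted(set(xs))
    for low, high in zip(values, values[1:]):
        threshold = (low + high) / 2
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = sum((r - left_mean) ** 2 for r in left) + sum((r - right_mean) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def gradient_boost(xs, ys, n_learners=20, alpha=0.1):
    """Initial constant prediction plus alpha times each learner's residual estimate."""
    initial = sum(ys) / len(ys)  # assumption: start from the mean of the targets
    learners = []
    predictions = [initial] * len(xs)
    for _ in range(n_learners):
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        learners.append(stump)
        predictions = [p + alpha * stump(x) for p, x in zip(predictions, xs)]
    return lambda x: initial + alpha * sum(stump(x) for stump in learners)


# Hypothetical data with a jump between x = 3 and x = 4.
xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, 1, 5, 5, 5]
model = gradient_boost(xs, ys)
print([round(model(x), 3) for x in xs])
```

Each round moves the predictions a small step, scaled by alpha, towards the targets, so more learners bring the output closer to the training values.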

__sklearn.ensemble.GradientBoostingClassifier__ is used to apply gradient boosting in python.

Resource:

[Guide](https://medium.com/mlreview/gradient-boosting-from-scratch-1e317ae4587d)

## 17_ Naïves Bayes classifiers

The Naive Bayes classifiers are a collection of classification algorithms based on __Bayes’ Theorem.__

Bayes' theorem describes the probability of an event, based on prior knowledge of conditions that might be related to the event. It is given by:

$$P(A|B) = \frac{P(B|A)\,P(A)}{P(B)}$$

Where P(A|B) is the probability of occurrence of A knowing B already occurred, P(B|A) is the probability of occurrence of B knowing A occurred, and P(A) and P(B) are the probabilities of A and B on their own.

[Scikit-learn Guide](https://scikit-learn.org/stable/modules/naive_bayes.html)

There are mostly two types of Naive Bayes:

__1. Gaussian Naive Bayes__

__2. Multinomial Naive Bayes.__

#### Multinomial Naive Bayes

The method is used mostly for document classification. For example, classifying an article as a sports article or, say, a film magazine article. It is also used for differentiating actual mails from spam mails. It uses the frequency of the words used in the different classes of documents to make a decision.

For example, the words "Dear" and "friends" are used a lot in actual mails and "offer" and "money" are used a lot in "Spam" mails. It calculates the probability of the occurrence of the words in case of actual mails and spam mails using the training examples. So, the probability of occurrence of "money" is much higher in case of spam mails, and so on.

Now, we calculate the probability of a mail being a spam mail using the occurrence of words in it.
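A minimal sketch of this word-frequency approach follows. The training mails are invented, and add-one (Laplace) smoothing is an assumption added here so that a word never seen in one class does not force the probability to zero; log-probabilities are summed to avoid multiplying many small numbers.

```python
import math
from collections import Counter


def train_naive_bayes(documents):
    """documents: list of (list_of_words, label) pairs."""
    word_counts = {}
    label_counts = Counter()
    vocabulary = set()
    for words, label in documents:
        label_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(words)
        vocabulary.update(words)
    return word_counts, label_counts, vocabulary


def predict(model, words):
    word_counts, label_counts, vocabulary = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        # Start from the log prior of the class.
        score = math.log(label_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in words:
            # Add-one smoothing (an assumption of this sketch).
            likelihood = (word_counts[label][word] + 1) / (total_words + len(vocabulary))
            score += math.log(likelihood)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical training mails.
mails = [
    (["dear", "friends", "lunch"], "actual"),
    (["dear", "friends", "meeting"], "actual"),
    (["offer", "money", "money"], "spam"),
    (["money", "offer", "dear"], "spam"),
]
model = train_naive_bayes(mails)
print(predict(model, ["money", "offer"]), predict(model, ["dear", "friends"]))
```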

#### Gaussian Naive Bayes

When the predictors take continuous values and are not discrete, we assume that these values are sampled from a Gaussian distribution.

It links the Gaussian distribution and Bayes' theorem.

Resources:

[GUIDE](https://youtu.be/H3EjCKtlVog)

## 18_ K-Nearest neighbor

The K-nearest neighbour algorithm is one of the most basic and still essential algorithms. It is a memory-based approach and not a model-based one.

KNN is used in both supervised and unsupervised learning. It simply locates the data points across the feature space and uses distance as a similarity metric.

The smaller the distance between two data points, the more similar the points are.

In the K-NN classification algorithm, the point to classify is plotted in the feature space and assigned the class of its K nearest neighbours. K is a user parameter. It sets how many points we should consider while deciding the label of the point concerned. If K is more than 1, we take the label that is in the majority.

If the dataset is very large, we can use a large k. A large k is less affected by noise and generates smooth boundaries. For a small dataset, a small k must be used. A small k helps to notice the variation in boundaries better.
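The classification step can be sketched in a few lines: sort the training points by distance to the query and take a majority vote among the first K. The data and the function name are illustrative assumptions, and `math.dist` requires Python 3.8 or later.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Label the query with the majority label of its k nearest training points."""
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Hypothetical 2-D points forming two groups.
points = [(1, 1), (1, 2), (2, 1), (8, 8), (9, 8), (8, 9)]
labels = ["A", "A", "A", "B", "B", "B"]
print(knn_predict(points, labels, (2, 2)), knn_predict(points, labels, (7, 8)))
```

Nothing is learned ahead of time: all the work happens at prediction time, which is why the approach is called memory-based.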

Resource:

[GUIDE](https://towardsdatascience.com/machine-learning-basics-with-the-k-nearest-neighbors-algorithm-6a6e71d01761)

## 19_ Logistic regression

Regression is one of the most important concepts used in machine learning.

[Guide to regression](https://towardsdatascience.com/a-deep-dive-into-the-concept-of-regression-fb912d427a2e)

Logistic Regression is one of the most used classification algorithms for linearly separable data points. Logistic Regression is used when the dependent variable is categorical.

It uses the linear regression equation:

$$Y = w_1x_1 + w_2x_2 + w_3x_3 + \dots + w_kx_k$$

in a modified format:

$$Y = \frac{1}{1 + e^{-(w_1x_1 + w_2x_2 + w_3x_3 + \dots + w_kx_k)}}$$

This modification ensures the value always stays between 0 and 1, making it feasible to use for classification.

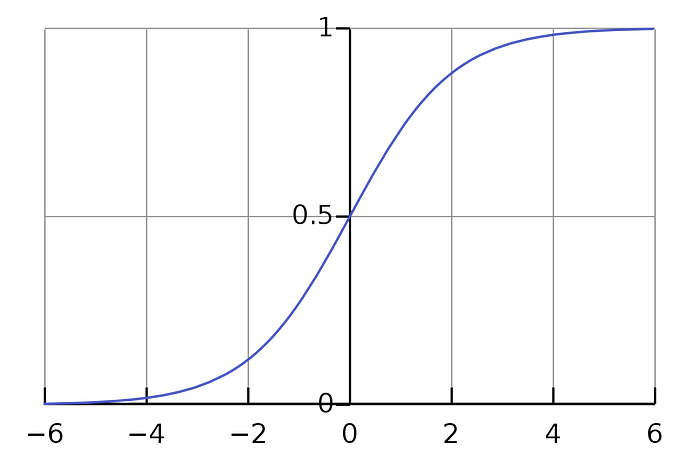

The above equation is called __Sigmoid__ function. The function looks like:

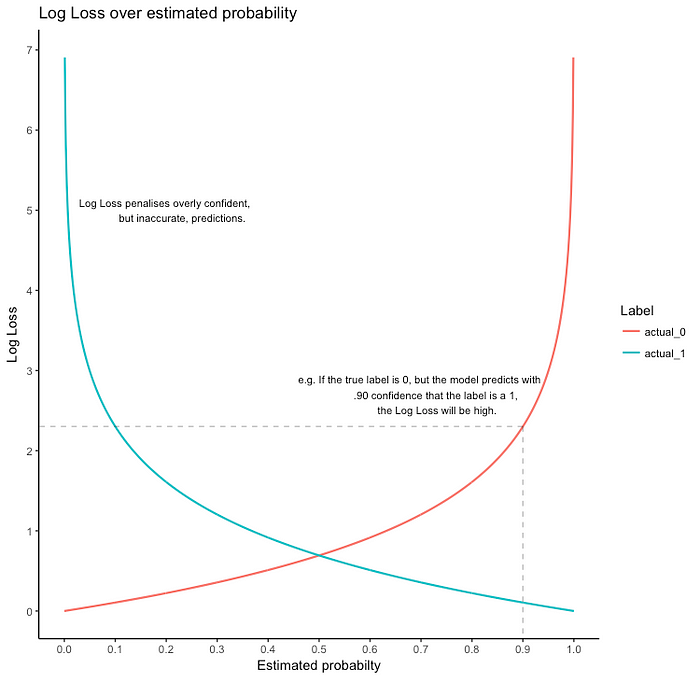

The loss function used is called log loss or binary cross-entropy.

$$Loss = -Y_{actual}\,\log(h(x)) - (1 - Y_{actual})\,\log(1 - h(x))$$

Here h(x) is the predicted probability. If Y_actual=1, the first part gives the error, else the second part.
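A short sketch of the sigmoid and the log loss for a single example follows. The weights and feature values are made up for illustration.

```python
import math


def sigmoid(z):
    """Squash any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, features):
    """h(x): sigmoid of the weighted sum of the features."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)))


def log_loss(y_actual, h):
    """Binary cross-entropy for one example with predicted probability h."""
    return -y_actual * math.log(h) - (1 - y_actual) * math.log(1 - h)


# Hypothetical weights and one data point.
h = predict_proba([0.8, -0.4], [2.0, 1.0])
print(h, log_loss(1, h), log_loss(0, h))
```

A confident correct prediction gives a loss close to 0, while a confident wrong prediction is penalized heavily.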

Logistic Regression is also used for multiclass classification. It uses softmax regression or One-vs-all logistic regression.

[Guide to logistic Regression](https://towardsdatascience.com/logistic-regression-detailed-overview-46c4da4303bc)

__sklearn.linear_model.LogisticRegression__ is used to apply logistic Regression in python.

## 20_ Ranking

## 21_ Linear regression

Regression tasks deal with predicting the value of a dependent variable from a set of independent variables i.e, the provided features. Say, we want to predict the price of a car. So, it becomes a dependent variable say Y, and the features like engine capacity, top speed, class, and company become the independent variables, which helps to frame the equation to obtain the price.

Now, if there is one feature, say x, and the dependent variable y is linearly dependent on x, then it can be given by y=mx+c, where m is the coefficient of the feature in the equation and c is the intercept or bias. Both m and c are the model parameters.

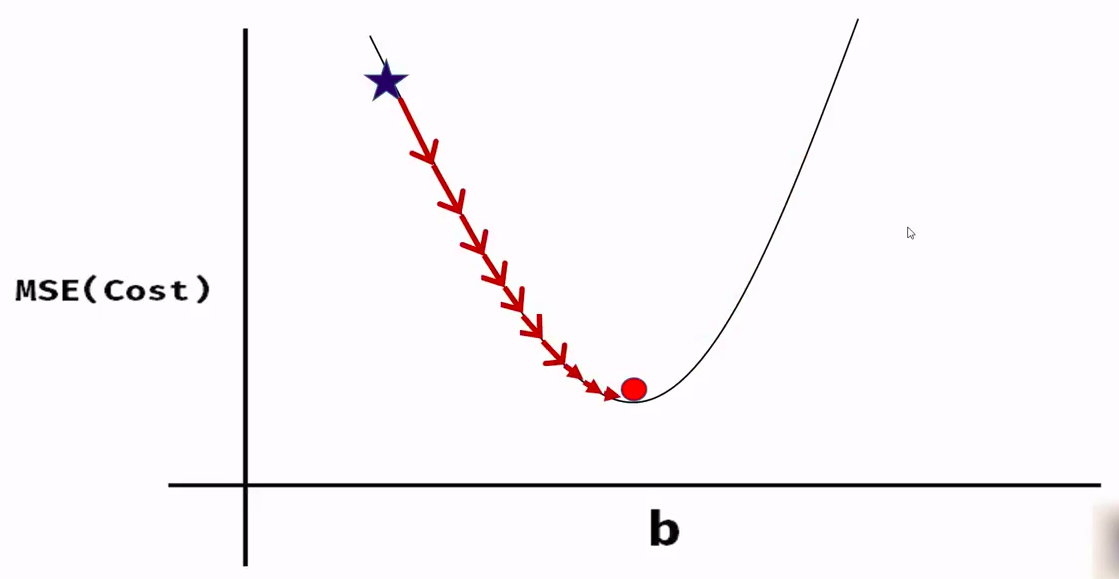

We use a loss function or cost function called Mean Squared Error (MSE). It is given by the mean of the squared differences between the actual and the predicted values of the dependent variable.

$$MSE = \frac{1}{2n}\sum_{i=1}^{n}\left(Y_{actual,i} - Y_{pred,i}\right)^2$$

Here n is the number of data points (written as n to avoid confusion with the slope m), and the extra factor of 1/2 is a convention that simplifies the derivative.

If we observe the function we will see that it is a parabola, i.e. the function is convex in nature. This convexity is what Gradient Descent relies on to obtain the values of the model parameters.

The image shows the loss function.

To get the correct estimate of the model parameters we use the method of __Gradient Descent__
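Gradient descent for the one-feature case y = mx + c can be sketched as below. The data, learning rate and number of steps are assumptions chosen so that this small example converges; the gradients are those of the squared-error loss with the 1/2 factor.

```python
def gradient_descent(xs, ys, learning_rate=0.05, n_steps=5000):
    """Fit y = m*x + c by repeatedly stepping against the gradient of the loss."""
    m, c = 0.0, 0.0
    n = len(xs)
    for _ in range(n_steps):
        errors = [(m * x + c) - y for x, y in zip(xs, ys)]
        # Partial derivatives of (1/2n) * sum(error^2) with respect to m and c.
        grad_m = sum(e * x for e, x in zip(errors, xs)) / n
        grad_c = sum(errors) / n
        m -= learning_rate * grad_m
        c -= learning_rate * grad_c
    return m, c


# Hypothetical data generated from y = 2x + 1.
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]
m, c = gradient_descent(xs, ys)
print(round(m, 4), round(c, 4))
```

Because the loss is convex, the updates head towards the single minimum as long as the learning rate is small enough.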

[Guide to Gradient Descent](https://towardsdatascience.com/an-introduction-to-gradient-descent-and-backpropagation-81648bdb19b2)

[Guide to linear Regression](https://towardsdatascience.com/linear-regression-detailed-view-ea73175f6e86)

__sklearn.linear_model.LinearRegression__ is used to apply linear regression in python

## 22_ Perceptron

The perceptron was one of the first models, described in the 1950s.

This is a __binary classifier__, i.e. it can't separate more than 2 groups, and those groups have to be __linearly separable__.

The perceptron __works like a biological neuron__. It calculates an activation value, and if this value is positive, it returns 1, and 0 otherwise.
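As a small sketch, a perceptron can learn the logical AND function, which is linearly separable. The update used here is the classic perceptron learning rule (move the weights by the error times the input); the learning rate, epoch count and names are assumptions.

```python
def predict(weights, bias, inputs):
    """Return 1 if the activation is positive, 0 otherwise."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, targets, learning_rate=1.0, n_epochs=20):
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(n_epochs):
        for inputs, target in zip(samples, targets):
            # The error is 0 when the prediction is right, +1 or -1 otherwise.
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Truth table of the logical AND.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
targets = [0, 0, 0, 1]
weights, bias = train_perceptron(samples, targets)
print([predict(weights, bias, s) for s in samples])
```

The same code cannot learn XOR, because no single straight line separates its two classes.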

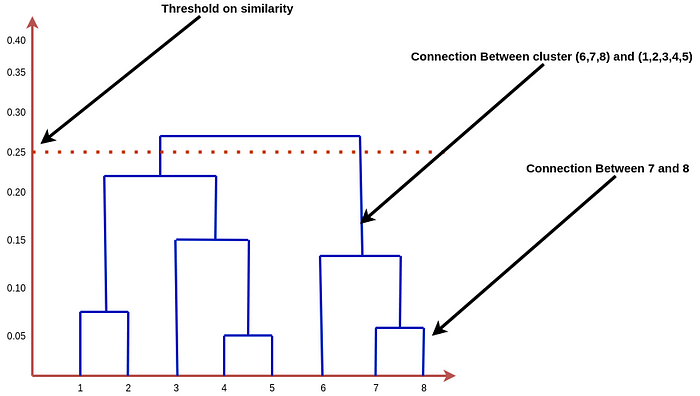

## 23_ Hierarchical clustering

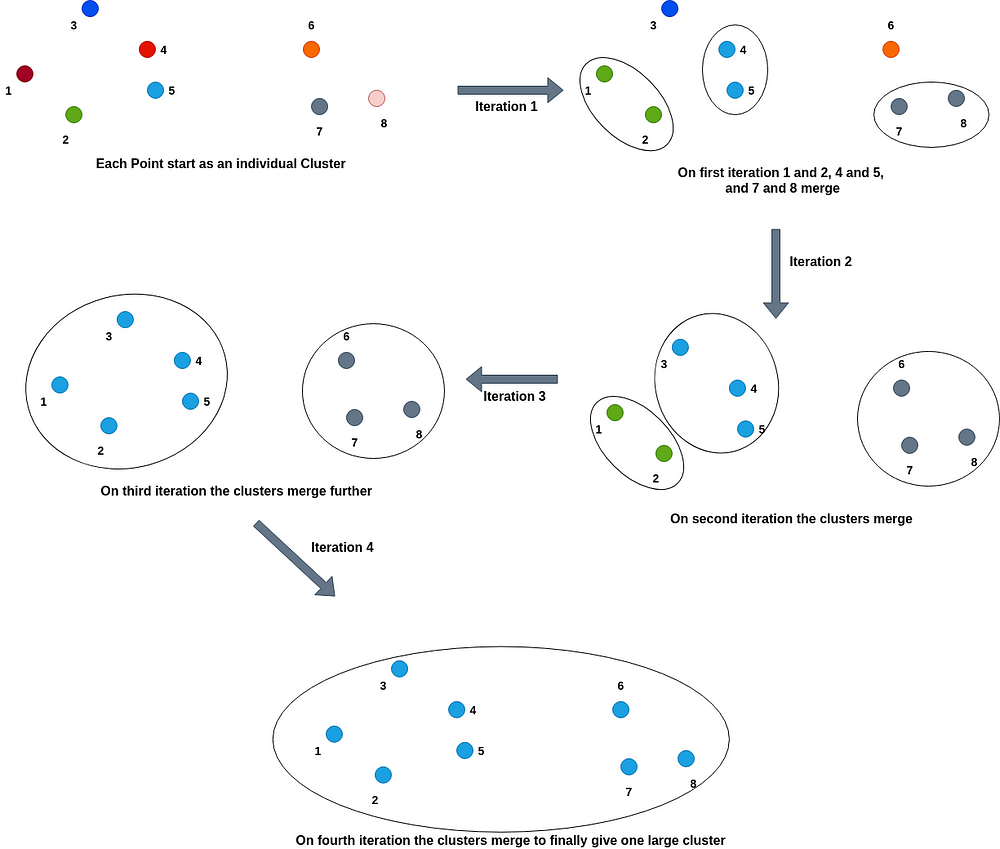

The hierarchical algorithms are so-called because they create tree-like structures to create clusters. These algorithms also use a distance-based approach for cluster creation.

The most popular algorithms are:

__Agglomerative Hierarchical clustering__

__Divisive Hierarchical clustering__

__Agglomerative Hierarchical clustering__: In this type of hierarchical clustering, each point initially starts as its own cluster, and step by step the nearest or most similar clusters merge, until only one cluster is left.

__Divisive Hierarchical Clustering__: This type of hierarchical clustering is just the opposite of Agglomerative clustering. In this type, all the points start as one large cluster, and step by step the clusters get divided into smaller clusters based on how large the distance, or how low the similarity, is between the two clusters. We keep on dividing the clusters until all the points become individual clusters.

For agglomerative clustering, we keep on merging the clusters which are nearest or have a high similarity score into one cluster. So, if we define a cut-off or threshold score for the merging, we will get multiple clusters instead of a single one. For instance, if we say the threshold similarity score is 0.5, the algorithm will stop merging as soon as no two clusters have a similarity score of at least 0.5, and the clusters present at that step are the final clusters.

Similarly, for divisive clustering, we divide the clusters based on the least similarity scores. So, if we define a score of 0.5, it will stop dividing or splitting if the similarity score between two clusters is less than or equal to 0.5. We will be left with a number of clusters and it won’t reduce to every point of the distribution.
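A compact sketch of agglomerative clustering with a cut-off is shown below. It works on one-dimensional points, uses single linkage (the distance between the closest members of two clusters) and a distance threshold instead of a similarity threshold; all of these choices, along with the data, are assumptions made for illustration.

```python
def agglomerative(points, max_distance):
    """Merge the two closest clusters until the closest pair is farther than max_distance."""
    clusters = [[p] for p in points]

    def linkage(a, b):
        # Single linkage: distance between the closest members.
        return min(abs(x - y) for x in a for y in b)

    while len(clusters) > 1:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                d = linkage(clusters[i], clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        d, i, j = best
        if d > max_distance:
            break  # the cut-off: no pair is close enough to merge
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return clusters


# Hypothetical 1-D data with three visible groups.
print(agglomerative([1, 2, 3, 10, 11, 20], max_distance=2))
```

Raising the threshold far enough makes every point end up in a single cluster, which corresponds to cutting the dendrogram at its top.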

The process is as shown below:

One of the most used methods for measuring the distance and applying the cut-off is the dendrogram method.

The dendrogram for the above clustering is:

[Guide](https://towardsdatascience.com/understanding-the-concept-of-hierarchical-clustering-technique-c6e8243758ec)

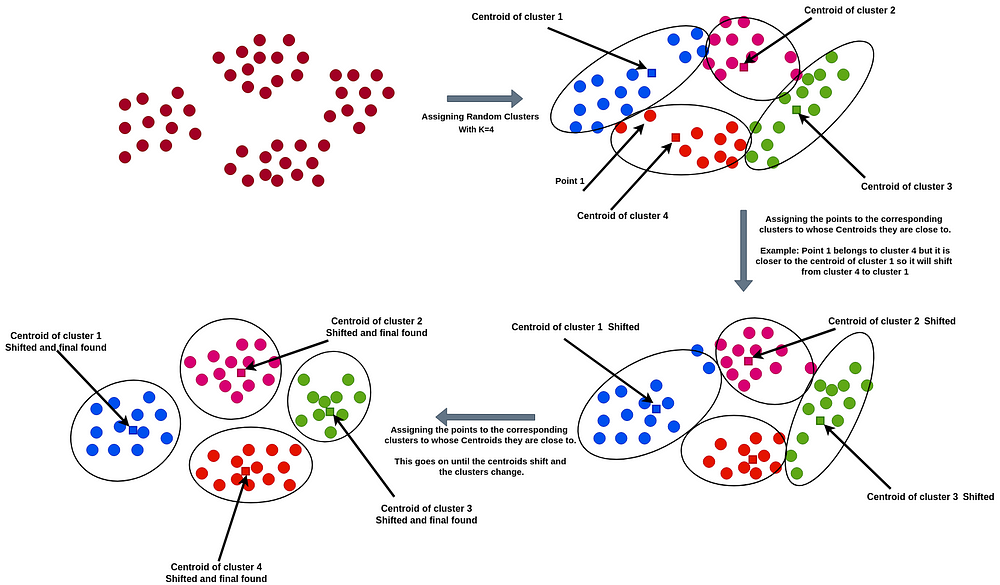

## 24_ K-means clustering

The algorithm initially creates K clusters randomly using N data points and finds the mean of all the point values in each cluster. So, for each cluster we find a central point or centroid by calculating the mean of the values of the cluster. Then the algorithm calculates the sum of squared error (SSE) for each cluster. SSE is used to measure the quality of clusters: if a cluster has large distances between the points and the center, its SSE will be high, so a low SSE means the cluster only contains points in the close vicinity of its center.

The algorithm works on the principle that the points lying close to the center of a cluster should be in that cluster. So, if a point x is closer to the center of cluster A than cluster B, then x will belong to cluster A. Thus a point enters a cluster, and as even a single point moves from one cluster to another, the centroid changes and so does the SSE. We keep doing this until the SSE stops decreasing and the centroids do not change anymore. After a certain number of shifts, the optimal clusters are found and the shifting stops as the centroids don’t change any more.

The initial number of clusters ‘K’ is a user parameter.
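
The assign-then-update loop can be sketched as follows. It is a minimal illustration, not a reference implementation: the initial centroids are K randomly sampled points (one common choice, assumed here), and the data, the `seed` and the `max_iter` limit are made up.

```python
import random
from math import dist


def mean(points):
    """Component-wise mean of a non-empty list of points."""
    return tuple(sum(coords) / len(points) for coords in zip(*points))


def sse(clusters, centroids):
    """Sum of squared distances from every point to its cluster centroid."""
    return sum(dist(p, c) ** 2 for pts, c in zip(clusters, centroids) for p in pts)


def k_means(points, k, seed=0, max_iter=100):
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # assumed initialisation: k random points
    clusters = []
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: recompute centroids (an empty cluster keeps its old one).
        new_centroids = [mean(c) if c else centroids[i] for i, c in enumerate(clusters)]
        if new_centroids == centroids:
            break  # centroids stopped moving, so the clustering has converged
        centroids = new_centroids
    return clusters, centroids


# Made-up data: two visibly separated groups.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5), (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
clusters, centroids = k_means(points, k=2)
total_sse = sse(clusters, centroids)
print(clusters, total_sse)
```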

The image shows the method

We have seen that this type of clustering technique needs a user-defined parameter ‘K’, which defines the number of clusters to create. This is a very important parameter, and a number of methods are used to find it. The most important and widely used is the elbow method.

For smaller datasets, a rule of thumb is k = (N/2)^(1/2), the square root of half the number of points in the distribution.
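
As a quick worked example of this rule of thumb (rounding to the nearest integer and never going below 1 are assumptions added here, since K must be a positive whole number):

```python
from math import sqrt


def rule_of_thumb_k(n_points):
    """Rough starting value for K: square root of half the number of points."""
    return max(1, round(sqrt(n_points / 2)))


print(rule_of_thumb_k(200))  # sqrt(200 / 2) = sqrt(100)
print(rule_of_thumb_k(50))   # sqrt(50 / 2) = sqrt(25)
```

The result is only a starting point; a method such as the elbow method is still needed to check whether that K fits the data.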

[Guide](https://towardsdatascience.com/understanding-k-means-clustering-in-machine-learning-6a6e67336aa1)

## 25_ Neural networks

Neural networks are a set of interconnected layers of artificial neurons, or nodes. They are frameworks modeled on the structure and working of the human brain. They are meant for predictive modeling and for applications where they can be trained on a dataset. They are based on self-learning algorithms and make predictions from the conclusions and complex relations derived from their training data.

A typical neural network has a number of layers. The first layer is called the Input Layer and the last layer is called the Output Layer. The layers between the Input and Output layers are called Hidden Layers. The network basically functions like a black box for prediction and classification. All the layers are interconnected and consist of numerous artificial neurons called nodes.
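
A rough sketch of how data flows from the input layer through a hidden layer to the output layer is shown below. The layer sizes, weights, biases and the sigmoid activation are all hypothetical values picked for illustration.

```python
from math import exp


def sigmoid(x):
    return 1.0 / (1.0 + exp(-x))


def dense(inputs, weights, biases):
    """One fully connected layer: each row of weights feeds one node."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hypothetical parameters: 2 inputs -> 3 hidden nodes -> 1 output node.
hidden_weights = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]
hidden_biases = [0.0, 0.1, -0.1]
output_weights = [[0.7, -0.4, 0.2]]
output_biases = [0.05]


def forward(inputs):
    hidden = dense(inputs, hidden_weights, hidden_biases)  # hidden layer
    return dense(hidden, output_weights, output_biases)    # output layer


prediction = forward([1.0, 2.0])
print(prediction)
```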

[Guide to Neural Networks](https://medium.com/ai-in-plain-english/neural-networks-overview-e6ea484a474e)

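Neural networks have too many weights to derive each gradient by hand, so training relies on backpropagation, which computes the gradients layer by layer, and on optimizers, which use those gradients to update the weights, typically with some variant of gradient descent.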
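
As a rough illustration of a backpropagation step feeding an optimizer update, here is a single sigmoid neuron trained on a toy dataset. Plain gradient descent stands in for the optimizer purely for simplicity, and the dataset (logical OR), learning rate and epoch count are assumed values.

```python
from math import exp


def sigmoid(z):
    return 1.0 / (1.0 + exp(-z))


# Toy dataset (assumed): logical OR.
data = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 1.0)]

weights = [0.0, 0.0]
bias = 0.0
learning_rate = 1.0  # assumed value


def predict(x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def loss():
    """Mean squared error over the toy dataset."""
    return sum((predict(x) - target) ** 2 for x, target in data) / len(data)


initial_loss = loss()
for _ in range(2000):
    for x, target in data:
        # Forward pass.
        y = predict(x)
        # Backward pass (chain rule): dL/dz = dL/dy * dy/dz.
        grad_z = 2 * (y - target) * y * (1 - y)
        # Optimizer step: plain gradient descent on each parameter.
        for i in range(len(weights)):
            weights[i] -= learning_rate * grad_z * x[i]
        bias -= learning_rate * grad_z
final_loss = loss()
print(initial_loss, final_loss)
```

In a multi-layer network the same chain rule is applied repeatedly, passing the gradient from the output layer back through each hidden layer.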

[Guide to Backpropagation](https://towardsdatascience.com/an-introduction-to-gradient-descent-and-backpropagation-81648bdb19b2)

[Guide to optimizers](https://towardsdatascience.com/introduction-to-gradient-descent-weight-initiation-and-optimizers-ee9ae212723f)

## 26_ Sentiment analysis

Text classification and sentiment analysis are very common machine learning problems, used in activities such as product predictions, movie recommendations, and several others.

Text classification problems like sentiment analysis can be solved in a number of ways using a number of algorithms. These fall into two main categories:

A bag-of-words model: In this case, all the sentences in our dataset are tokenized to form a bag of words that makes up our vocabulary. Each individual sentence or sample in our dataset is then represented as a vector over that bag of words. This vector is called the feature vector. For example, take the two sentences ‘It is a sunny day’ and ‘The Sun rises in east’. The bag of words is the set of all unique words across both sentences.

The second method is based on a time series approach: Here each word is represented by an individual vector, so a sentence is represented as a vector of vectors.
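
Both representations can be sketched on the two example sentences. The whitespace tokenizer, lowercasing, sorted vocabulary order and one-hot word vectors are simplifying assumptions; real systems usually use learned word embeddings for the second approach.

```python
sentences = ["It is a sunny day", "The Sun rises in east"]


def tokenize(sentence):
    """Naive tokenizer (an assumption): lowercase and split on whitespace."""
    return sentence.lower().split()


# Vocabulary: every unique word across all sentences, in sorted order.
vocabulary = sorted({word for s in sentences for word in tokenize(s)})
index = {word: i for i, word in enumerate(vocabulary)}


def bag_of_words(sentence):
    """One feature vector per sentence: the count of each vocabulary word."""
    tokens = tokenize(sentence)
    return [tokens.count(word) for word in vocabulary]


def one_hot(word):
    """One vector per word, with a single 1 at the word's vocabulary index."""
    vector = [0] * len(vocabulary)
    vector[index[word]] = 1
    return vector


def as_sequence(sentence):
    """A sentence as an ordered list of word vectors (a vector of vectors)."""
    return [one_hot(word) for word in tokenize(sentence)]


feature_vectors = [bag_of_words(s) for s in sentences]
sequence = as_sequence(sentences[0])
print(vocabulary)
print(feature_vectors)
print(sequence)
```

The bag-of-words vector discards word order, while the sequence keeps it, which is why the second approach suits models that read a sentence step by step.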

[Guide to sentiment analysis](https://towardsdatascience.com/a-guide-to-text-classification-and-sentiment-analysis-2ab021796317)

## 27_ Collaborative filtering

We have all used services like Netflix, Amazon, and YouTube. These services use very sophisticated systems to recommend the best items to their users and make their experience great.

Recommenders mostly have three components, one of the main ones being candidate generation. This component is responsible for generating a smaller subset of candidates to recommend to a user, given a huge pool of thousands of items.

Types of Candidate Generation Systems:

__Content-based filtering System__

__Collaborative filtering System__

__Content-based filtering system__: A content-based recommender system tries to guess the features or behavior of a user from the features of the items he/she reacts positively to.

__Collaborative filtering System__: Collaborative filtering does not need the features of the items to be given. Every user and item is described by a feature vector or embedding.

It creates embeddings for both users and items on its own, and it embeds both in the same embedding space.

It considers other users’ reactions when recommending to a particular user. It notes which items a particular user likes, as well as the items liked by users with similar behavior and tastes, and uses both to recommend items to that user.

It collects user feedback on different items and uses it for recommendations.
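
The idea of recommending items liked by similar users can be sketched with a small neighbourhood-based example. Note the swap: this is a simpler user-to-user similarity approach, not the learned-embedding variant described above, and the users, items and feedback values are made up.

```python
from math import sqrt

# Hypothetical feedback: 1 means the user liked the item.
ratings = {
    "alice": {"item_a": 1, "item_b": 1, "item_c": 1},
    "bob": {"item_a": 1, "item_b": 1},
    "carol": {"item_d": 1},
}


def cosine(u, v):
    """Cosine similarity between two users' feedback dictionaries."""
    shared = set(u) & set(v)
    dot = sum(u[i] * v[i] for i in shared)
    norm = sqrt(sum(x * x for x in u.values())) * sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0


def recommend(user):
    """Rank unseen items by the similarity of the users who liked them."""
    scores = {}
    for other, items in ratings.items():
        if other == user:
            continue
        weight = cosine(ratings[user], items)
        for item, rating in items.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + weight * rating
    return sorted(scores, key=scores.get, reverse=True)


recommendations = recommend("bob")
print(recommendations)
```

Here no item features are used at all: the ranking comes only from how other users reacted.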

[Guide to collaborative filtering](https://towardsdatascience.com/introduction-to-recommender-systems-1-971bd274f421)

## 28_ Tagging

## 29_ Support Vector Machine

Support vector machines are used for both classification and regression.

SVM uses a margin around its classifier or regressor. The margin adds robustness and accuracy to the model.

The image shows an SVM classifier. The red line is the actual classifier and the dotted lines show the boundary. The points that lie on the boundary decide the margins. They support the classifier margins, so they are called __Support Vectors__.

The distance between the classifier and the nearest points is called __Marginal Distance__.

There can be several classifiers possible but we choose the one with the maximum marginal distance. So, the marginal distance and the support vectors help to choose the best classifier.
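
To make the selection rule concrete, the sketch below measures the marginal distance of a few candidate separating lines and keeps the one with the largest value. The points and candidate lines are hand-picked for illustration; an actual SVM finds the best line by solving an optimization problem rather than comparing a fixed list.

```python
from math import hypot

# Hypothetical 2-D points with class labels +1 / -1.
points = [((1.0, 1.0), -1), ((2.0, 1.0), -1), ((4.0, 4.0), 1), ((5.0, 4.0), 1)]


def marginal_distance(w, b, data):
    """Smallest distance from any point to the line w.x + b = 0.

    Returns None if the line puts any point on the wrong side.
    """
    distances = []
    for (x1, x2), label in data:
        score = w[0] * x1 + w[1] * x2 + b
        if score * label <= 0:
            return None  # misclassified, so not a valid classifier
        distances.append(abs(score) / hypot(w[0], w[1]))
    return min(distances)


# Candidate lines as (w, b) pairs, chosen by hand for this example.
candidates = [((1.0, 0.0), -3.0), ((0.0, 1.0), -2.5), ((1.0, 1.0), -5.5)]
valid = [c for c in candidates if marginal_distance(c[0], c[1], points) is not None]
best = max(valid, key=lambda c: marginal_distance(c[0], c[1], points))
print(best, marginal_distance(best[0], best[1], points))
```

The points that achieve this smallest distance for the chosen line play the role of the support vectors.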

[Official Documentation from Sklearn](https://scikit-learn.org/stable/modules/svm.html)

[Guide to SVM](https://towardsdatascience.com/support-vector-machine-introduction-to-machine-learning-algorithms-934a444fca47)

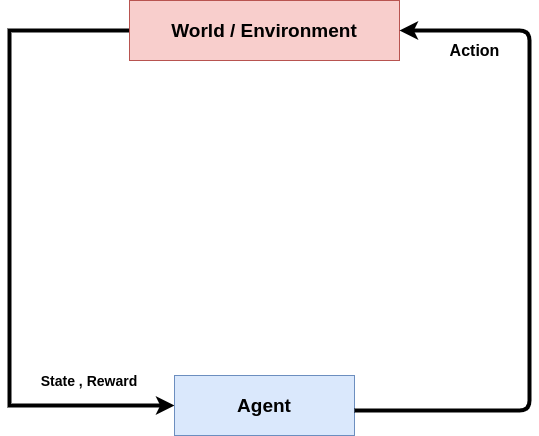

## 30_ Reinforcement Learning

“Reinforcement learning (RL) is an area of machine learning concerned with how software agents ought to take actions in an environment in order to maximize the notion of cumulative reward.”

To play a game, we need to make multiple choices and predictions over the course of the game to achieve success, so a game can be called a multiple decision process. This is where we need a class of algorithms called reinforcement learning algorithms. This class is based on decision-making chains, which let such algorithms support multiple decision processes.

A reinforcement learning algorithm can be used to reach a goal state from a starting state by making decisions along the way.

Reinforcement learning involves an agent that learns on its own. If it makes a correct or good move that takes it towards the goal, it is positively rewarded; otherwise it is not. This way the agent learns.

The above image shows reinforcement learning setup.
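
A minimal sketch of an agent learning to reach a goal state from rewards is given below, using tabular Q-learning as one possible algorithm. The five-state corridor environment, the reward of 1 at the goal, and the hyperparameters are all assumptions made for the example.

```python
import random

N_STATES = 5        # states 0..4 in a corridor; state 4 is the goal
ACTIONS = (-1, +1)  # move left or move right
alpha, gamma, epsilon = 0.5, 0.9, 0.2  # assumed hyperparameters

rng = random.Random(0)
q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]


def step(state, action_index):
    """Move within the corridor; reward 1 only when the goal is reached."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward


for _ in range(200):  # episodes
    state = 0
    while state != N_STATES - 1:
        # Explore with probability epsilon (or on ties), otherwise exploit.
        if rng.random() < epsilon or q[state][0] == q[state][1]:
            action = rng.randrange(2)
        else:
            action = 0 if q[state][0] > q[state][1] else 1
        next_state, reward = step(state, action)
        # Q-learning update: move the estimate towards reward + discounted future value.
        target = reward + gamma * max(q[next_state])
        q[state][action] += alpha * (target - q[state][action])
        state = next_state

# Greedy policy learned for every non-goal state.
policy = [ACTIONS[0 if q[s][0] > q[s][1] else 1] for s in range(N_STATES - 1)]
print(policy)
```

Only the move that reaches the goal is rewarded directly; the discount factor `gamma` is what lets that reward propagate back to earlier states.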

[WIKI](https://en.wikipedia.org/wiki/Reinforcement_learning#:~:text=Reinforcement%20learning%20(RL)%20is%20an,supervised%20learning%20and%20unsupervised%20learning.)

# 5_ Text Mining

## 1_ Corpus

## 2_ Named Entity Recognition

## 3_ Text Analysis

## 4_ UIMA

## 5_ Term Document matrix

## 6_ Term frequency and Weight

## 7_ Support Vector Machines (SVM)

## 8_ Association rules

## 9_ Market basket analysis

## 10_ Feature extraction

## 11_ Using Mahout

## 12_ Using Weka

## 13_ Using NLTK

## 14_ Classify text

## 15_ Vocabulary mapping

# 6_ Data Visualization

Open .R scripts in RStudio for line-by-line execution.

See [10_ Toolbox/3_ R, Rstudio, Rattle](https://github.com/MrMimic/data-scientist-roadmap/tree/master/10_Toolbox#3_-r-rstudio-rattle) for installation.

## 1_ Data exploration in R

In mathematics, the graph of a function f is the collection of all ordered pairs (x, f(x)). If the function input x is a scalar, the graph is a two-dimensional graph, and for a continuous function is a curve. If the function input x is an ordered pair (x1, x2) of real numbers, the graph is the collection of all ordered triples (x1, x2, f(x1, x2)), and for a continuous function is a surface.

## 2_ Uni, bi and multivariate viz

### Univariate

The term univariate is commonly used in statistics to distinguish a distribution of one variable from a distribution of several variables, although it can be applied in other ways as well. For example, univariate data are composed of a single scalar component. In time series analysis, the term is applied with a whole time series as the object referred to: thus a univariate time series refers to the set of values over time of a single quantity.

### Bivariate

Bivariate analysis is one of the simplest forms of quantitative (statistical) analysis. It involves the analysis of two variables (often denoted as X, Y), for the purpose of determining the empirical relationship between them.

### Multivariate

Multivariate analysis (MVA) is based on the statistical principle of multivariate statistics, which involves observation and analysis of more than one statistical outcome variable at a time. In design and analysis, the technique is used to perform trade studies across multiple dimensions while taking into account the effects of all variables on the responses of interest.

## 3_ ggplot2

### About

ggplot2 is a plotting system for R, based on the grammar of graphics, which tries to take the good parts of base and lattice graphics and none of the bad parts. It takes care of many of the fiddly details that make plotting a hassle (like drawing legends) as well as providing a powerful model of graphics that makes it easy to produce complex multi-layered graphics.

[http://ggplot2.org/](http://ggplot2.org/)

### Documentation

### Examples

[http://r4stats.com/examples/graphics-ggplot2/](http://r4stats.com/examples/graphics-ggplot2/)

## 4_ Histogram and pie (Uni)

### About

Histograms and pie charts are two types of graphs used to visualize frequencies.

A histogram shows the distribution of these frequencies over classes, while a pie chart shows the relative proportion of these frequencies within a 100% circle.

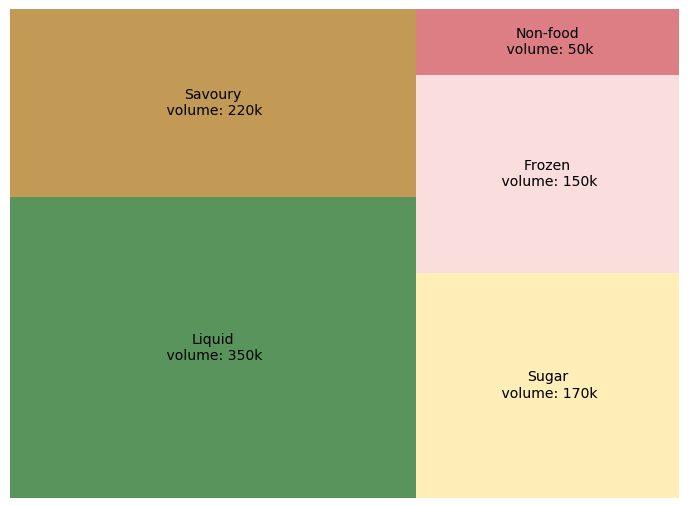
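
The numbers behind the two charts can be computed directly. In this small sketch the observations are made up: the counts per class are what a histogram would draw as bar heights, and the proportions are what a pie chart would draw as slices.

```python
from collections import Counter

# Hypothetical observations of a single variable with three classes.
observations = ["A", "B", "A", "C", "A", "B", "A", "C", "B", "A"]

frequencies = Counter(observations)  # bar heights, one per class
total = sum(frequencies.values())
proportions = {cls: count / total for cls, count in frequencies.items()}  # pie slices

for cls in sorted(frequencies):
    print(cls, frequencies[cls], f"{proportions[cls]:.0%}")
```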

## 5_ Tree & tree map

### About

[Treemaps](https://en.wikipedia.org/wiki/Treemapping) display hierarchical (tree-structured) data as a set of nested rectangles.

Each branch of the tree is given a rectangle, which is then tiled with smaller rectangles representing sub-branches.

A leaf node’s rectangle has an area proportional to a specified dimension of the data.

Often the leaf nodes are colored to show a separate dimension of the data.
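
The "area proportional to a value" rule can be sketched with the simplest possible layout, which cuts one rectangle into vertical slices. The function name, the canvas size and the sales volumes are hypothetical; plotting libraries typically use more elaborate layouts and apply them recursively to sub-branches.

```python
def slice_layout(values, width, height):
    """Split a width x height rectangle into vertical slices whose
    areas are proportional to the (positive) values."""
    total = sum(values.values())
    rectangles = {}
    x = 0.0
    for name, value in values.items():
        slice_width = width * value / total
        rectangles[name] = (x, 0.0, slice_width, height)  # (x, y, width, height)
        x += slice_width
    return rectangles


# Hypothetical sales volumes per product universe.
volumes = {"liquid": 50, "grocery": 30, "fresh": 20}
rectangles = slice_layout(volumes, width=100.0, height=60.0)
for name, (x, y, w, h) in rectangles.items():
    print(name, "area =", w * h)
```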

### When to use it ?

- Less than 10 branches.

- Positive values.

- Space for visualisation is limited.

### Example

This treemap describes the volume of each product universe with a corresponding surface. Liquid products sell more than the others.

To explore further, we can drill into the “liquid” products and find which shelves are preferred by clients.

### More information

[Matplotlib Series 5: Treemap](https://jingwen-z.github.io/data-viz-with-matplotlib-series5-treemap/)

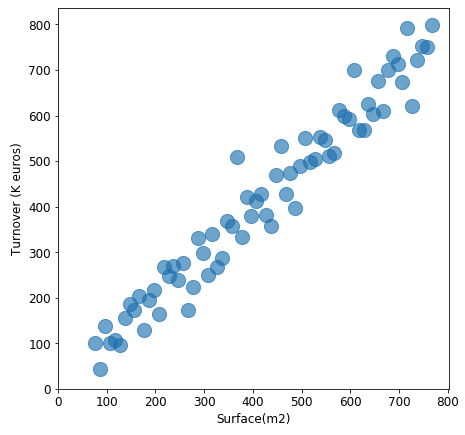

## 6_ Scatter plot

### About

A [scatter plot](https://en.wikipedia.org/wiki/Scatter_plot) (also called a scatter graph, scatter chart, scattergram, or scatter diagram) is a type of plot or mathematical diagram using Cartesian coordinates to display values for typically two variables for a set of data.

### When to use it ?

Scatter plots are used when you want to show the relationship between two variables.

Scatter plots are sometimes called correlation plots because they show how two variables are correlated.

### Example

This plot describes the positive relation between a store’s surface and its turnover (k euros), which is reasonable: the larger a store is, the more clients it can accept and the more turnover it generates.
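
The strength of such a relation can be quantified with the Pearson correlation coefficient. The sketch below uses made-up surface and turnover figures, not the data behind the plot.

```python
from math import sqrt

# Hypothetical store data: surface (m^2) and turnover (k euros).
surface = [100, 150, 200, 250, 300]
turnover = [120, 160, 230, 260, 330]


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


r = pearson(surface, turnover)
print(round(r, 3))
```

A value close to 1 matches the upward-sloping cloud of points that a scatter plot of these two variables would show.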

### More information

[Matplotlib Series 4: Scatter plot](https://jingwen-z.github.io/data-viz-with-matplotlib-series4-scatter-plot/)

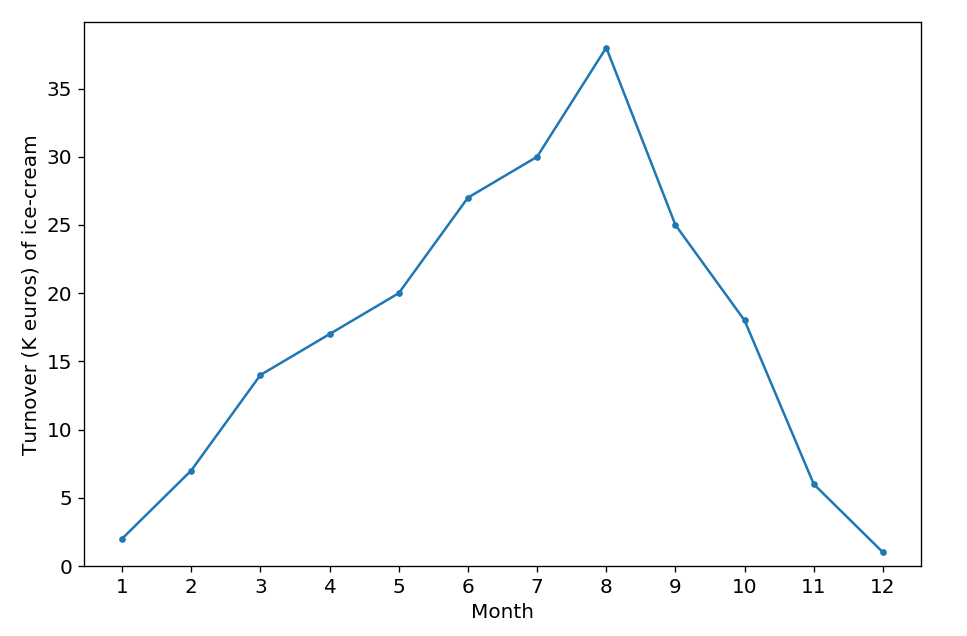

## 7_ Line chart

### About

A [line chart](https://en.wikipedia.org/wiki/Line_chart) or line graph is a type of chart which displays information as a series of data points called ‘markers’ connected by straight line segments. A line chart is often used to visualize a trend in data over intervals of time – a time series – thus the line is often drawn chronologically.

### When to use it ?

- Track changes over time.

- X-axis displays continuous variables.

- Y-axis displays measurement.

### Example

Suppose that the plot above describes the turnover (k euros) of ice-cream sales during one year.

According to the plot, sales clearly reach a peak in summer, then fall from autumn to winter, which is logical.

### More information

[Matplotlib Series 2: Line chart](https://jingwen-z.github.io/data-viz-with-matplotlib-series2-line-chart/)