https://github.com/george0st/qgate-sln-mlrun

MLRun/Iguazio/Nuclio quality gate solution.


- Host: GitHub

- URL: https://github.com/george0st/qgate-sln-mlrun

- Owner: george0st

- License: apache-2.0

- Created: 2023-04-03T19:22:33.000Z (over 2 years ago)

- Default Branch: master

- Last Pushed: 2024-07-11T21:01:15.000Z (over 1 year ago)

- Last Synced: 2024-07-11T22:46:29.221Z (over 1 year ago)

- Topics: artificial-intelligence, data-science, e2e, feature-store, genai, iguazio, machine-learning, mlops, mlrun, mlrun-test, nuclio, quality-assessment, quality-assurance, quality-gate, testing

- Language: Python


- Size: 2.89 MB

- Stars: 207

- Watchers: 5

- Forks: 9

- Open Issues: 0


Metadata Files:

- Readme: README.md

- License: LICENSE


README

[License: Apache-2.0](https://opensource.org/licenses/Apache-2.0)

[PyPI: qgate-sln-mlrun](https://pypi.python.org/pypi/qgate-sln-mlrun/)

# QGate-Sln-MLRun

The quality gate for the [MLRun](https://www.mlrun.org/) solution (and [Iguazio](https://www.iguazio.com/)). The main aims of the project are:

- independent quality tests (functional, integration, performance, vulnerability, acceptance, and other tests)

- deeper quality checks before full rollout and use in company environments

- identification of possible compatibility issues (if any)

- external and independent test coverage

- community support

- etc.

The tests use these key components: the MLRun solution (see **[GIT mlrun](https://github.com/mlrun/mlrun)**), the sample metadata model (see **[GIT qgate-model](https://github.com/george0st/qgate-model)**), and this project.

## Test scenarios

The quality gate covers these test scenarios (✅ done, ✔ in-progress, ❌ planned):

- **01 - Project**
  - ✅ TS101: Create project(s)
  - ✅ TS102: Delete project(s)
- **02 - Feature set**
  - ✅ TS201: Create feature set(s)
  - ✅ TS202: Create feature set(s) & Ingest from DataFrame source (one step)
  - ✅ TS203: Create feature set(s) & Ingest from CSV source (one step)
  - ✅ TS204: Create feature set(s) & Ingest from Parquet source (one step)
  - ✅ TS205: Create feature set(s) & Ingest from SQL source (one step)
  - ✔ TS206: Create feature set(s) & Ingest from Kafka source (one step)
  - ✔ TS207: Create feature set(s) & Ingest from HTTP source (one step)
- **03 - Ingest data**
  - ✅ TS301: Ingest data (Preview mode)
  - ✅ TS302: Ingest data to feature set(s) from DataFrame source
  - ✅ TS303: Ingest data to feature set(s) from CSV source
  - ✅ TS304: Ingest data to feature set(s) from Parquet source
  - ✅ TS305: Ingest data to feature set(s) from SQL source
  - ✔ TS306: Ingest data to feature set(s) from Kafka source
  - ✔ TS307: Ingest data to feature set(s) from HTTP source
- **04 - Ingest data & pipeline**
  - ✅ TS401: Ingest data & pipeline (Preview mode)
  - ✅ TS402: Ingest data & pipeline to feature set(s) from DataFrame source
  - ✅ TS403: Ingest data & pipeline to feature set(s) from CSV source
  - ✅ TS404: Ingest data & pipeline to feature set(s) from Parquet source
  - ✅ TS405: Ingest data & pipeline to feature set(s) from SQL source
  - ✔ TS406: Ingest data & pipeline to feature set(s) from Kafka source
  - ❌ TS407: Ingest data & pipeline to feature set(s) from HTTP source
- **05 - Feature vector**
  - ✅ TS501: Create feature vector(s)
- **06 - Get data from vector**
  - ✅ TS601: Get data from off-line feature vector(s)
  - ✅ TS602: Get data from on-line feature vector(s)
- **07 - Pipeline**
  - ✅ TS701: Simple pipeline(s)
  - ✅ TS702: Complex pipeline(s)
  - ✅ TS703: Complex pipeline(s), mass operation
- **08 - Build model**
  - ✅ TS801: Build CART model
  - ❌ TS802: Build XGBoost model
  - ❌ TS803: Build DNN model
- **09 - Serve model**
  - ✅ TS901: Serving score from CART
  - ❌ TS902: Serving score from XGBoost
  - ❌ TS903: Serving score from DNN
- **10 - Model monitoring/drifting**
  - ❌ TS1001: Real-time monitoring
  - ❌ TS1002: Batch monitoring
- **11 - Performance tests**
  - ❌ TS1101: Simple pipeline
  - ❌ TS1102: Complex pipeline(s)
  - ❌ TS11xx: TBD.

NOTE: Each test scenario contains additional specific test cases (e.g., with different targets for feature sets).
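The status legend (✅ done, ✔ in-progress, ❌ planned) is regular enough to process mechanically, for example to track progress between releases. A minimal sketch, assuming scenario lines follow the `<marker> TS<id>: <title>` pattern used in this README; the helper `tally_scenarios` is hypothetical and not part of qgate-sln-mlrun:

```python
import re
from collections import Counter

# Status markers as defined by the legend in this README.
STATUS = {"✅": "done", "✔": "in-progress", "❌": "planned"}

# Matches lines such as "- ✅ TS101: Create project(s)" (the leading "- " is optional).
LINE_RE = re.compile(r"^\s*-?\s*(✅|✔|❌)\s+(TS\w+):\s*(.+)$")


def tally_scenarios(lines):
    """Return a Counter of scenario statuses found in README-style lines."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.match(line)
        if match:  # group headers like "- **01 - Project**" do not match
            counts[STATUS[match.group(1)]] += 1
    return counts


if __name__ == "__main__":
    sample = [
        "- **08 - Build model**",
        "- ✅ TS801: Build CART model",
        "- ❌ TS802: Build XGBoost model",
        "- ❌ TS803: Build DNN model",
    ]
    print(dict(tally_scenarios(sample)))
```

Group headers are skipped because they carry no status marker, so the counts reflect only individual scenarios.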

## Test inputs/outputs

The quality gate tests these inputs/outputs (✅ done, ✔ in-progress, ❌ planned):

- Outputs (targets)
  - ✅ RedisTarget, ✅ SQLTarget/MySQL, ✔ SQLTarget/Postgres, ✅ KafkaTarget
  - ✅ ParquetTarget, ✅ CSVTarget
  - ✅ File system, ❌ S3, ❌ BlobStorage
- Inputs (sources)
  - ✅ Pandas/DataFrame, ✅ SQLSource/MySQL, ❌ SQLSource/Postgres, ❌ KafkaSource
  - ✅ ParquetSource, ✅ CSVSource
  - ✅ File system, ❌ S3, ❌ BlobStorage

For the sources and targets currently supported by MLRun, see [sources/targets in MLRun](https://docs.mlrun.org/en/latest/feature-store/sources-targets.html).

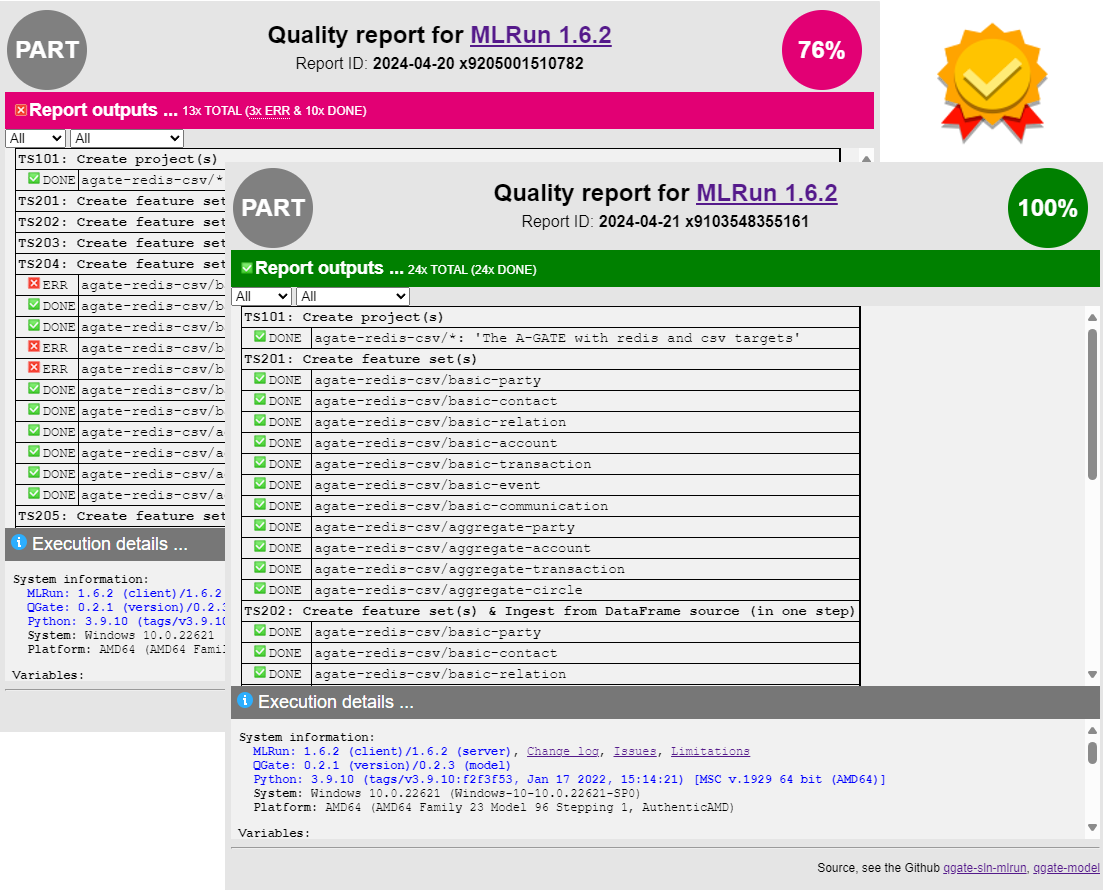
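Reading the input/output matrix above as data can help when planning which combinations a test run exercises. A minimal sketch, assuming the statuses listed in this section and treating each ingest case as a (source, target) pair; this cross-product view is an illustration only, not how qgate-sln-mlrun defines its test cases, the storage rows (File system, S3, BlobStorage) are left out for brevity, and `covered_pairs` is a hypothetical helper:

```python
from itertools import product

DONE, IN_PROGRESS, PLANNED = "done", "in-progress", "planned"

# Statuses copied from the "Test inputs/outputs" lists in this README.
SOURCES = {
    "Pandas/DataFrame": DONE,
    "SQLSource/MySQL": DONE,
    "SQLSource/Postgres": PLANNED,
    "KafkaSource": PLANNED,
    "ParquetSource": DONE,
    "CSVSource": DONE,
}
TARGETS = {
    "RedisTarget": DONE,
    "SQLTarget/MySQL": DONE,
    "SQLTarget/Postgres": IN_PROGRESS,
    "KafkaTarget": DONE,
    "ParquetTarget": DONE,
    "CSVTarget": DONE,
}


def covered_pairs(sources, targets):
    """Return (source, target) pairs where both sides are marked done."""
    return [
        (s, t)
        for s, t in product(sources, targets)
        if sources[s] == DONE and targets[t] == DONE
    ]


if __name__ == "__main__":
    pairs = covered_pairs(SOURCES, TARGETS)
    print(f"{len(pairs)} fully covered source/target pairs")
    for source, target in pairs:
        print(f"  {source} -> {target}")
```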

## Sample of outputs

Partial reports in their original form:

- all DONE - [HTML](https://htmlpreview.github.io/?https://github.com/george0st/qgate-sln-mlrun/blob/master/docs/samples/outputs/qgt-mlrun-sample.html), [TXT](https://github.com/george0st/qgate-sln-mlrun/blob/master/docs/samples/outputs/qgt-mlrun-sample.txt?raw=true)

- with ERRors - [HTML](https://htmlpreview.github.io/?https://github.com/george0st/qgate-sln-mlrun/blob/master/docs/samples/outputs/qgt-mlrun-sample-err.html), [TXT](https://github.com/george0st/qgate-sln-mlrun/blob/master/docs/samples/outputs/qgt-mlrun-sample-err.txt?raw=true)

## Usage

You can use this solution in four easy steps:

1. Download the content of these two Git repositories to your local environment:
   - [qgate-sln-mlrun](https://github.com/george0st/qgate-sln-mlrun)
   - [qgate-model](https://github.com/george0st/qgate-model)
2. Update the file `qgate-sln-mlrun.env` from qgate-model:
   - Update the variables for MLRun/Iguazio: `MLRUN_DBPATH`, `V3IO_USERNAME`, `V3IO_ACCESS_KEY`, `V3IO_API`
     - the `V3IO_*` variables are needed only for an Iguazio installation (not for pure, free MLRun)
   - Update the variables for QGate: `QGATE_*` (a basic description is directly in the `*.env` file)
   - For a detailed setup, see [configuration](./docs/configuration.md)
3. Run from `qgate-sln-mlrun`:
   - **python main.py**
4. See the outputs (the location is based on `QGATE_OUTPUT` in the configuration):
   - './output/qgt-mlrun- .html'
   - './output/qgt-mlrun- .txt'
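Before running `python main.py`, it can help to check that step 2 of the usage is complete. A minimal sketch, assuming the `.env` file uses plain `KEY=VALUE` lines with `#` comments (the project itself may load the file differently, e.g. via a dotenv library, and `export KEY=VALUE` lines are not handled here); the helpers `parse_env` and `missing_settings` and the `iguazio` flag are hypothetical. It applies the rule from step 2: `MLRUN_DBPATH` is always needed, the `V3IO_*` variables only for Iguazio:

```python
from pathlib import Path

# Variables named in step 2 of this README.
MLRUN_REQUIRED = ["MLRUN_DBPATH"]
IGUAZIO_REQUIRED = ["V3IO_USERNAME", "V3IO_ACCESS_KEY", "V3IO_API"]


def parse_env(text):
    """Parse simple KEY=VALUE lines; blank lines and '#' comments are skipped."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")  # split on the first '=' only
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_settings(values, iguazio=False):
    """Return required variable names that are absent or empty."""
    required = MLRUN_REQUIRED + (IGUAZIO_REQUIRED if iguazio else [])
    return [name for name in required if not values.get(name)]


if __name__ == "__main__":
    # Assumes the file sits in the current directory; adjust the path as needed.
    env_path = Path("qgate-sln-mlrun.env")
    if env_path.exists():
        settings = parse_env(env_path.read_text(encoding="utf-8"))
        print("missing:", missing_settings(settings, iguazio=False) or "none")
    else:
        print(f"{env_path} not found")
```

Set `iguazio=True` when targeting an Iguazio installation so that the `V3IO_*` variables are checked as well.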

Precondition: you have an MLRun or Iguazio installation available (MLRun is part of Iguazio); see the official [installation steps](https://docs.mlrun.org/en/latest/install.html), or the direct installation for [Docker Desktop](https://docs.mlrun.org/en/latest/install/local-docker.html).
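For step 4 of the usage, the report file names start with `qgt-mlrun-` followed by a run-specific part. A minimal sketch for locating the newest report, assuming reports are written directly into the output directory; `latest_report` is a hypothetical helper, and `./output` mirrors the sample paths rather than your actual `QGATE_OUTPUT` value:

```python
from pathlib import Path


def latest_report(output_dir, suffix=".html"):
    """Return the most recently modified qgt-mlrun-* report, or None if absent."""
    reports = [p for p in Path(output_dir).glob(f"qgt-mlrun-*{suffix}") if p.is_file()]
    return max(reports, key=lambda p: p.stat().st_mtime, default=None)


if __name__ == "__main__":
    # "./output" mirrors the sample paths in step 4; adjust to your QGATE_OUTPUT.
    for suffix in (".html", ".txt"):
        print(suffix, "->", latest_report("./output", suffix))
```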

## Tested with

The project was tested with these MLRun versions (see [change log](https://docs.mlrun.org/en/latest/change-log/index.html)):

- **MLRun** (in Kubernetes or Docker Desktop)
  - ❌ MLRun 1.8.0 (planned for Q1/2025)
  - ✔ MLRun 1.7.0 (? 1.7.1 ?)
  - ✅ MLRun 1.6.4, 1.6.3, 1.6.2, 1.6.1, 1.6.0
  - ✅ MLRun 1.5.2, 1.5.1, 1.5.0
  - ✅ MLRun 1.4.1
  - ✅ MLRun 1.3.0
- **Iguazio** (k8s, on-prem, VM on VMware)
  - ✅ Iguazio 3.5.3 (with MLRun 1.4.1)
  - ✅ Iguazio 3.5.1 (with MLRun 1.3.0)

NOTE: Currently, only the latest MLRun/Iguazio versions are tested (backward compatibility is left to MLRun/Iguazio themselves, see [MLRun client backward compatibility](https://docs.mlrun.org/en/latest/install.html#mlrun-client-backward-compatibility)).

## Others

- **To-Do**: the list of expected/future improvements, see [to-do list](./docs/todo_list.md)

- **Applied limits**: the list of applied limits/issues, see [applied limits](./docs/applied-limits.md)

- **How can you test the solution?** Use a Linux environment or Windows with WSL2 (see the [step-by-step tutorial](./docs/testing.md))

- **MLRun/Iguazio**: the key changes in a nutshell (customer view), see [release notes](./docs/mlrun-iguazio-release-notes.md)

- **MLRun local installation**: [see the hack](https://github.com/mlrun/mlrun/blob/development/hack/local/README.md)