https://github.com/if-ai/ComfyUI-IF_MemoAvatar

Memory-Guided Diffusion for Expressive Talking Video Generation

- Host: GitHub

- URL: https://github.com/if-ai/ComfyUI-IF_MemoAvatar

- Owner: if-ai

- License: mit

- Created: 2024-12-10T16:34:58.000Z

- Default Branch: main

- Last Pushed: 2024-12-18T17:42:29.000Z

- Last Synced: 2024-12-18T18:29:36.821Z

- Language: Python

- Size: 4.77 MB

- Stars: 100

- Watchers: 3

- Forks: 4

- Open Issues: 4

Metadata Files:

- Readme: README.md

- Funding: .github/FUNDING.yml

- License: LICENSE

Awesome Lists containing this project

- awesome-comfyui - **IF_MemoAvatar** - Guided Diffusion for Expressive Talking Video Generation (All Workflows Sorted by GitHub Stars)

- awesome-comfyui - **IF_MemoAvatar** - Guided Diffusion for Expressive Talking Video Generation (Workflows (3395) sorted by GitHub Stars)

README

# ComfyUI-IF_MemoAvatar

Memory-Guided Diffusion for Expressive Talking Video Generation

## Original Repo

**MEMO: Memory-Guided Diffusion for Expressive Talking Video Generation**

[Longtao Zheng](https://ltzheng.github.io)\*, [Yifan Zhang](https://scholar.google.com/citations?user=zuYIUJEAAAAJ)\*, [Hanzhong Guo](https://scholar.google.com/citations?user=q3x6KsgAAAAJ), [Jiachun Pan](https://scholar.google.com/citations?user=nrOvfb4AAAAJ), [Zhenxiong Tan](https://scholar.google.com/citations?user=HP9Be6UAAAAJ), [Jiahao Lu](https://scholar.google.com/citations?user=h7rbA-sAAAAJ), [Chuanxin Tang](https://scholar.google.com/citations?user=3ZC8B7MAAAAJ), [Bo An](https://personal.ntu.edu.sg/boan/index.html), [Shuicheng Yan](https://scholar.google.com/citations?user=DNuiPHwAAAAJ)

_[Project Page](https://memoavatar.github.io) | [arXiv](https://arxiv.org/abs/2412.04448) | [Model](https://huggingface.co/memoavatar/memo)_

This repository contains the example inference script for the MEMO-preview model. The gif demo below is compressed. See our [project page](https://memoavatar.github.io) for full videos.

## Overview

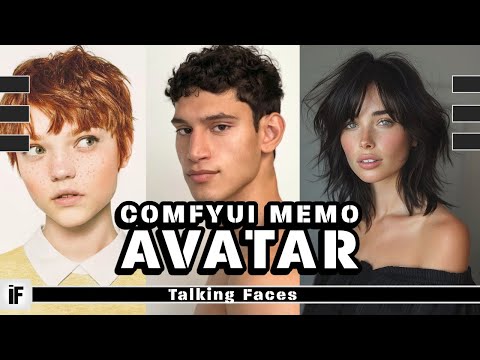

This is a ComfyUI implementation of MEMO (Memory-Guided Diffusion for Expressive Talking Video Generation), which enables the creation of expressive talking avatar videos from a single image and audio input.

## Features

- Generate expressive talking head videos from a single image

- Audio-driven facial animation

- Emotional expression transfer

- High-quality video output

https://github.com/user-attachments/assets/bfbf896d-a609-4e0f-8ed3-16ec48f8d85a

## Installation

**xformers is not required, but it is recommended if you can install it.**

**Make sure your Hugging Face (HF) token is set in your environment variables.**
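You can confirm the token is visible to Python before you launch ComfyUI. This is a minimal sketch. It assumes the token is read from `HF_TOKEN`, the variable `huggingface_hub` uses, and falls back to the older `HUGGING_FACE_HUB_TOKEN`. The README does not name the variable, and `hf_token_is_set` is a hypothetical helper.

```python
import os


def hf_token_is_set(env=None):
    """Return True if a non-empty Hugging Face token is in the environment.

    Assumption: the model downloader reads the standard HF_TOKEN variable
    (HUGGING_FACE_HUB_TOKEN is the older name huggingface_hub also accepts).
    """
    env = os.environ if env is None else env
    return any(env.get(name, "").strip() for name in ("HF_TOKEN", "HUGGING_FACE_HUB_TOKEN"))


if __name__ == "__main__":
    if hf_token_is_set():
        print("Hugging Face token found.")
    else:
        print("No Hugging Face token found; set HF_TOKEN before starting ComfyUI.")
```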

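Clone the repository into your `custom_nodes` folder, then install the requirements:

```bash
# run from your ComfyUI installation folder
cd custom_nodes
git clone https://github.com/if-ai/ComfyUI-IF_MemoAvatar
cd ComfyUI-IF_MemoAvatar
pip install -r requirements.txt
```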

I removed xformers from `requirements.txt` because on Windows it only works with a specific combination of PyTorch versions.

On Linux, you can simply run:

```bash
pip install xformers
```

On Windows, first check whether xformers is already installed in your environment:

```bash
pip show xformers
```
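The same check can be run from Python, inside the environment ComfyUI actually uses. The standard library's `importlib.metadata` reports the installed version just as `pip show` does. This is a minimal sketch; `installed_version` is a hypothetical helper name.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package):
    """Return the installed version string of `package`, or None if it is absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    xf = installed_version("xformers")
    print(f"xformers {xf}" if xf else "xformers is not installed")
```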

If no version is listed, install the latest one by following this free guide, which also walks through setting up a good ComfyUI environment:

[Installing Triton and Sage Attention Flash Attention](https://ko-fi.com/post/Installing-Triton-and-Sage-Attention-Flash-Attenti-P5P8175434)

[YouTube video](https://www.youtube.com/watch?v=nSUGEdm2wU4)

### Model Files

The models will automatically download to the following locations in your ComfyUI installation:

```
models/checkpoints/memo/
├── audio_proj/
├── diffusion_net/
├── image_proj/
├── misc/
│   ├── audio_emotion_classifier/
│   ├── face_analysis/
│   └── vocal_separator/
└── reference_net/
models/wav2vec/
models/vae/sd-vae-ft-mse/
models/emotion2vec/emotion2vec_plus_large/
```
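To confirm that the download finished, you can list which of these folders are missing or still empty. This is a minimal sketch. The relative paths are taken from the directory tree above, and you supply the ComfyUI root folder yourself. `missing_model_dirs` is a hypothetical helper, and treating an empty folder as missing is an assumption.

```python
from pathlib import Path

# Relative paths taken from the directory tree in this README.
EXPECTED_DIRS = [
    "models/checkpoints/memo/audio_proj",
    "models/checkpoints/memo/diffusion_net",
    "models/checkpoints/memo/image_proj",
    "models/checkpoints/memo/misc/audio_emotion_classifier",
    "models/checkpoints/memo/misc/face_analysis",
    "models/checkpoints/memo/misc/vocal_separator",
    "models/checkpoints/memo/reference_net",
    "models/wav2vec",
    "models/vae/sd-vae-ft-mse",
    "models/emotion2vec/emotion2vec_plus_large",
]


def missing_model_dirs(comfyui_root):
    """Return the expected model folders that are missing or empty."""
    root = Path(comfyui_root)
    missing = []
    for rel in EXPECTED_DIRS:
        path = root / rel
        # Assumption: an empty folder means its files were not downloaded yet.
        if not path.is_dir() or not any(path.iterdir()):
            missing.append(rel)
    return missing
```

For example, `print(missing_model_dirs("/path/to/ComfyUI"))` prints an empty list once every folder has content.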

Copy the models from `face_analysis/models` directly into the `face_analysis` folder.

Until I confirm a fix, duplicate them rather than moving them, because if the folder is left empty, Hugging Face will detect it and download the models again every time.
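The copy step can also be scripted. This is a minimal sketch that copies, never moves, the contents of `face_analysis/models` up one level. It assumes the folder sits at `models/checkpoints/memo/misc/face_analysis` under your ComfyUI installation, as the tree above suggests. `duplicate_face_analysis_models` is a hypothetical helper, and `dirs_exist_ok` requires Python 3.8 or later.

```python
import shutil
from pathlib import Path


def duplicate_face_analysis_models(face_analysis_dir):
    """Copy everything in <face_analysis_dir>/models into <face_analysis_dir>.

    Files are copied rather than moved, so the original models folder never
    ends up empty.
    """
    base = Path(face_analysis_dir)
    src = base / "models"
    for item in src.iterdir():
        target = base / item.name
        if item.is_dir():
            # Merge into an existing folder instead of failing (Python 3.8+).
            shutil.copytree(item, target, dirs_exist_ok=True)
        else:
            shutil.copy2(item, target)
```

Usage, with the path being an assumption about your install: `duplicate_face_analysis_models("ComfyUI/models/checkpoints/memo/misc/face_analysis")`.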

If there is no `models.json` file, or loading fails with an error, create one yourself with the following content:

```json
{
  "detection": ["scrfd_10g_bnkps"],
  "recognition": ["glintr100"],
  "analysis": ["genderage", "2d106det", "1k3d68"]
}
```

Also create a `version.txt` file next to it containing `0.7.3`.
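Both files can be written with a short script. This is a minimal sketch. The `models.json` content and the `0.7.3` version string come from this README, but the README does not say which folder the files belong in, so pass whichever folder the error points to. `write_face_analysis_metadata` is a hypothetical helper, and by default it leaves existing files untouched.

```python
import json
from pathlib import Path

# Content copied from the models.json listing in this README.
MODELS_JSON = {
    "detection": ["scrfd_10g_bnkps"],
    "recognition": ["glintr100"],
    "analysis": ["genderage", "2d106det", "1k3d68"],
}


def write_face_analysis_metadata(folder, overwrite=False):
    """Create models.json and version.txt in `folder` if they are missing."""
    folder = Path(folder)
    folder.mkdir(parents=True, exist_ok=True)
    models_path = folder / "models.json"
    version_path = folder / "version.txt"
    if overwrite or not models_path.exists():
        models_path.write_text(json.dumps(MODELS_JSON, indent=2), encoding="utf-8")
    if overwrite or not version_path.exists():
        version_path.write_text("0.7.3", encoding="utf-8")
    return models_path, version_path
```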