https://github.com/iscyy/yoloair

🔥🔥🔥 Focused on improved YOLO models. Supports improving the backbone, neck, head, loss, IoU, NMS, and other modules🚀


anchor-based anchor-free attention backbone deep-learning ppyolo ppyoloe pytorch scaledyolov4 tood transformer yolo yoloair yolor yolov3 yolov4 yolov5 yolov6 yolov7 yolox


- Host: GitHub

- URL: https://github.com/iscyy/yoloair

- Owner: iscyy

- License: gpl-3.0

- Created: 2024-03-18T13:59:43.000Z

- Default Branch: main

- Last Pushed: 2025-01-28T13:24:27.000Z

- Last Synced: 2025-04-13T00:48:10.114Z

- Language: Python

- Homepage:

- Size: 12.5 MB

- Stars: 265

- Watchers: 7

- Forks: 27

- Open Issues: 1


Metadata Files:

- Readme: README.md

- License: LICENSE

Awesome Lists containing this project

- StarryDivineSky - iscyy/yoloair

- awesome-yolo-object-detection - iscyy/yoloair

README

### 🌟 The all-new YOLOAir 2024 release is here, with the latest improvements updated through 2025🚀

## YOLO Air : Makes improvements easy again

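YOLOAir is a PyTorch-based library of YOLO algorithms. It unifies the model code framework, the way models are used, and the way they are improved; it makes modules easy to combine and helps you build more powerful network models.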

Simplified Chinese | [English](./README_EN.md)

#### **Support**

[Features🚀](#主要特性) • [Usage🍉](#使用) • [Docs📒](https://github.com/iscyy/yoloair) • [Report an issue🌟](https://github.com/iscyy/yoloair/issues/new) • [Updates💪](#-to-do) • [Discussions✌️](https://github.com/iscyy/yoloair/discussions)

• [YOLO improvement tutorials and references on the underlying principles🚀](#yolo网络模型具体改进方式教程及原理参考) •

## Introduction

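**Model diversity**: build different detection network models from different network modules.

**Componentized modules**: help users quickly customize and combine Backbone, Neck, and Head to diversify network models, supporting research on improved detection algorithms and models through different combinations of network components🏆, and building powerful network models.

**Unified model code framework, unified usage, unified hyperparameter tuning, unified improvement workflow, multi-task integration, easy module composition, and more powerful network models**.

Built-in network architectures include YOLOv5, YOLOv7, YOLOv6, YOLOX, YOLOR, Transformer, PP-YOLO, PP-YOLOv2, PP-YOLOE, PP-YOLOEPlus, Scaled_YOLOv4, YOLOv3, YOLOv4, YOLO-Face, TPH-YOLO, YOLOv5Lite, SPD-YOLO, SlimNeck-YOLO, PicoDet, and more...

Multiple detection algorithms and related multi-task models share one unified model code framework and are **integrated into the YOLOAir library with a unified usage pattern**. This makes it easy for researchers to improve models for papers, compare models, and build diverse network combinations. Both lightweight models and higher-accuracy models are included, so you can choose according to your scenario and balance accuracy against speed. The library also decouples different structures and module components; by combining these components, users can build detection models customized for different datasets or business scenarios.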

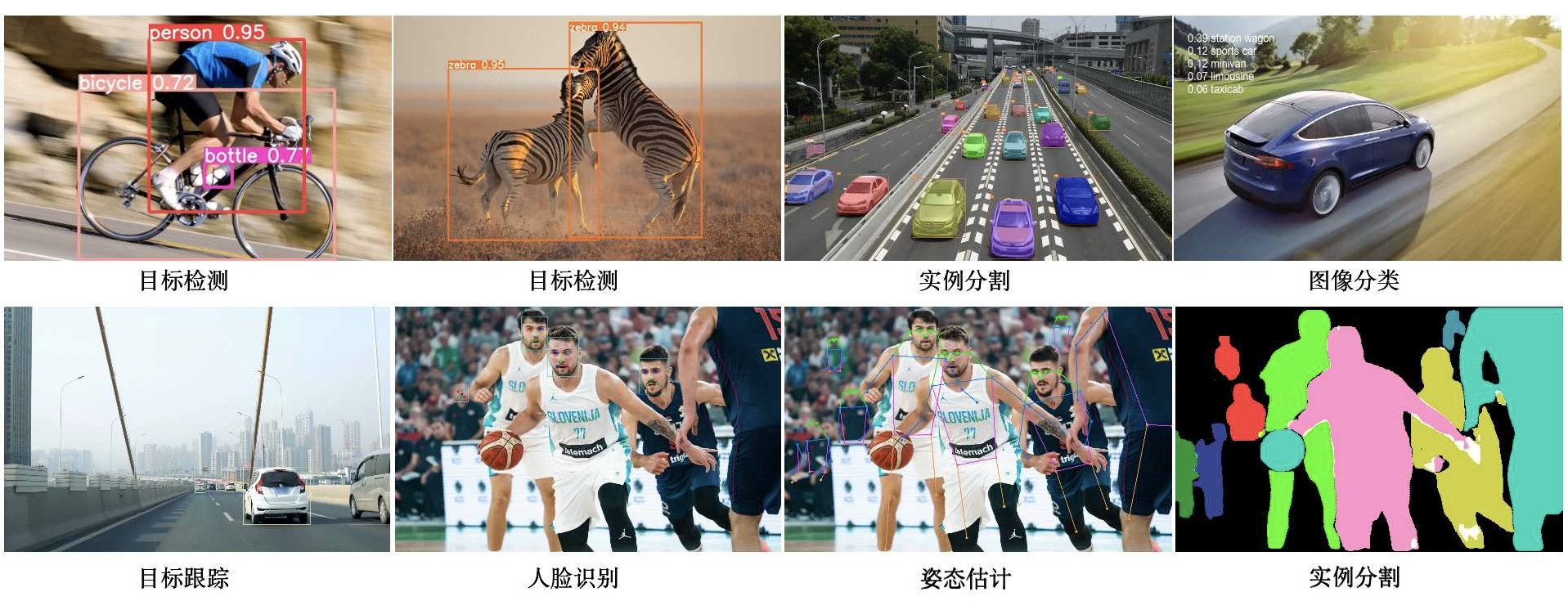

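Multiple tasks are supported, including object detection, instance segmentation, image classification, pose estimation, face detection, and object tracking.

**Star🌟 and Fork** the repository to stay in sync with updates.

Project URL🌟: https://github.com/iscyy/yoloair

Tutorials on selected improvements🌟: [https://github.com/iscyy/yoloair/wiki/Improved-tutorial-presentation](https://github.com/iscyy/yoloair/wiki/Improved-tutorial-presentation)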

______________________________________________________________________

### 🌟 Beta Supported Updates

The following features are already supported in the YOLOAir Beta (internal testing) version🔥

- Other features🌟

______________________________________________________________________

### 🌟 To Do

- Multi-task support, including object detection, classification, segmentation, pose estimation, face detection, object tracking, and more

______________________________________________________________________

### Key Features🚀

🚀Support for improving more YOLO-series models (continuously updated...)

The YOLOAir library brings together many mainstream YOLO-series detection models in a single codebase:

- Built-in network architectures for YOLOv5, YOLOv7, YOLOv6, PP-YOLO, PP-YOLOE, PP-YOLOEPlus, YOLOR, YOLOX, ScaledYOLOv4, YOLOv4, YOLOv3, YOLO-FaceV2, TPH-YOLOv5, SPD-YOLO, SlimNeck-YOLO, YOLOv5-Lite, PicoDet, and more (continuously updated)...

🚀Support for more network model components, as listed in the tables below.

YOLOAir Structural Module Components

| Backbones | Necks | Head |
|:---|:---|:---|
| CSPDarkNet series<br>HorNet series<br>ResNet series<br>RegNet series<br>ShuffleNet series<br>RepLKNet series<br>MobileNet series<br>EfficientNet series<br>ConvNext series<br>Ghost series<br>RepVGG series<br>Transformer series | PANet<br>RepPAN<br>BiFPN<br>CSPPAN<br>SlimNeck<br>ELANPAN<br>GSNeck | YOLOv4Head<br>YOLOv5Head<br>YOLOv6 Efficient decoupled head<br>YOLOv7 IDetect Head<br>YOLOv7 IAuxDetect Head<br>YOLOX DetectX Head<br>Adaptive spatial feature fusion head (ASFF Head)<br>YOLOR implicit-learning Head<br>EH-Head<br>Dual Weighting Head<br>FCOS Head<br>Dynamic Head |

...

YOLOAir Module Components

| Attention mechanisms | Label assignment strategies | IoU loss functions |
|:---|:---|:---|
| Self Attention<br>Contextual Transformer<br>Bottleneck Transformer<br>S2-MLP Attention<br>AxialAttention<br>DANet<br>DoubleAttention<br>GlobalContextBlock<br>ParNetAttention<br>PolarizedSelfAttention<br>SpatialGroupEnhance<br>TripletAttention<br>SK Attention<br>CBAM Attention<br>SE Attention<br>Coordinate attention<br>NAM Attention<br>GAM Attention<br>ECA Attention<br>Shuffle Attention<br>CrissCrossAttention<br>SOCAttention<br>SimAM Attention | Multi Anchor strategy<br>YOLOv5 label assignment<br>SimOTA label assignment<br>YOLOv7 label assignment<br>FreeAnchor label assignment<br>AutoAssign label assignment<br>Dual Weighting label assignment<br>Probabilistic Anchor Assignment<br>Other improved label assignment strategies | CIoU<br>DIoU<br>GIoU<br>EIoU<br>SIoU<br>Alpha-IoU |

...

Module Components

| Loss | NMS | Data augmentation |
|:---|:---|:---|
| ComputeLoss(v3)<br>ComputeLoss(v4)<br>ComputeLoss(v5)<br>ComputeLoss(v6)<br>ComputeLoss(X)<br>ComputeLossAuxOTA(v7)<br>ComputeLossOTA(v7) | NMS<br>Merge-NMS<br>Soft-NMS<br>CIoU_NMS<br>DIoU_NMS<br>GIoU_NMS<br>EIoU_NMS<br>SIoU_NMS<br>Soft-SIoUNMS<br>Soft-CIoUNMS<br>Soft-DIoUNMS<br>Soft-EIoUNMS<br>Soft-GIoUNMS | Mosaic<br>Copy paste<br>Random affine (Rotation, Scale, Translation and Shear)<br>MixUp<br>HSV<br>Random horizontal flip |

...

YOLOAir Module Components

| Spatial pyramid pooling | Modular components | AnchorBased / AnchorFree |
|:---|:---|:---|
| SPP<br>SPPF<br>ASPP<br>RFB<br>SPPCSPC<br>SPPFCSPC<br>SimSPPF | Conv, GhostConv, Bottleneck, GhostBottleneck, SPP, SPPF, DWConv, MixConv2d, Focus, CrossConv, BottleneckCSP, C3, C3TR, C3SPP, C3Ghost, C3HB, C3RFEM, MultiSEAM, SEAM, C3STR, SPPCSPC, RepConv, BoT3, Air, CA, CBAM, Involution, Stem, ResCSPC, ResCSPB, ResXCSPB, ResXCSPC, BottleneckCSPB, BottleneckCSPC, ASPP, BasicRFB, SPPCSPC_group, HorBlock, CNeB, C3GC, C3C2, nn.ConvTranspose2d, DWConvblock, RepVGGBlock, CoT3, ConvNextBlock, SPPCSP, BottleneckCSP2, DownC, BottleneckCSPF, ReOrg, DWT, MobileOne, HorNet... | Anchor-based: YOLOv5, YOLOv7, YOLOv3, YOLOv4, YOLOR, ScaledYOLOv4, PPYOLO, PPYOLOv2, Improved-YOLOv5, Improved-YOLOv7<br>Anchor-free: YOLOX, YOLOv6 (Paper), PPYOLOE, PPYOLOE+ |

...

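🚀Support for more Backbones

- CSPDarkNet series
- HorNet series
- ResNet series
- RegNet series
- ShuffleNet series
- Ghost series
- MobileNet series
- EfficientNet series
- ConvNext series
- RepLKNet series
- Re-parameterization series
  - RepVGG series
  - RepMLP series
  - ACNet series
  - RepConv series
  - OREPA series
  - MobileOne series
- Self-attention Transformer series
  - MobileViT series
  - BoTNet-Transformer
  - CoTNet-Transformer
  - Swin-Transformer
  - Swin-Transformer (v2)
  - Other Transformer-series models

Continuously updated🎈🚀🚀🚀

Note: YOLOAir (Beta)🔥 has already added 20+ Transformer-series backbones, a variety of MLP networks, and most re-parameterized network structures.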
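The re-parameterized backbones above (RepVGG, ACNet, OREPA, MobileOne, and so on) share one idea: train with several parallel branches, then fold them into a single convolution for inference. The pure-Python sketch below illustrates that fold for a RepVGG-style block. It assumes a single input and output channel, stride 1, padding 1, and BatchNorm in inference mode; all function names are hypothetical, and this is not YOLOAir's `RepVGGBlock` code.

```python
import math


def conv3x3(x, kernel, bias=0.0):
    """3x3 cross-correlation with zero padding 1 on a single-channel 2-D list."""
    h, w = len(x), len(x[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            s = bias
            for di in range(3):
                for dj in range(3):
                    ii, jj = i + di - 1, j + dj - 1
                    if 0 <= ii < h and 0 <= jj < w:
                        s += kernel[di][dj] * x[ii][jj]
            out[i][j] = s
    return out


def batch_norm(z, gamma, beta, mean, var, eps=1e-5):
    """Inference-mode BatchNorm applied to a 2-D list."""
    scale = gamma / math.sqrt(var + eps)
    return [[scale * (v - mean) + beta for v in row] for row in z]


def fold_bn(kernel, gamma, beta, mean, var, eps=1e-5):
    """Fold BatchNorm into the preceding conv: returns (new_kernel, new_bias)."""
    scale = gamma / math.sqrt(var + eps)
    return [[scale * v for v in row] for row in kernel], beta - mean * scale


def center_kernel(value):
    """A 1x1 kernel (or the identity when value == 1) zero-padded to 3x3."""
    return [[0.0, 0.0, 0.0], [0.0, value, 0.0], [0.0, 0.0, 0.0]]


def train_time_block(x, k3, bn3, k1, bn1, bn_id):
    """Sum of 3x3+BN, 1x1+BN and identity+BN branches; each bn* is (gamma, beta, mean, var)."""
    y3 = batch_norm(conv3x3(x, k3), *bn3)
    y1 = batch_norm(conv3x3(x, center_kernel(k1)), *bn1)
    y0 = batch_norm(x, *bn_id)
    return [[a + b + c for a, b, c in zip(r3, r1, r0)] for r3, r1, r0 in zip(y3, y1, y0)]


def reparameterize(k3, bn3, k1, bn1, bn_id):
    """Merge the three branches into one 3x3 kernel and one bias."""
    parts = [fold_bn(k3, *bn3), fold_bn(center_kernel(k1), *bn1), fold_bn(center_kernel(1.0), *bn_id)]
    kernel = [[sum(k[i][j] for k, _ in parts) for j in range(3)] for i in range(3)]
    return kernel, sum(b for _, b in parts)


x = [[1.0, 2.0, 0.5], [0.0, -1.0, 3.0], [2.0, 1.0, 1.0]]
k3 = [[0.1, -0.2, 0.3], [0.0, 0.5, -0.1], [0.2, 0.1, 0.0]]
bn3, bn1, bn_id = (1.2, 0.1, 0.3, 0.9), (0.8, -0.2, 0.1, 1.1), (1.0, 0.05, -0.2, 0.5)
kernel, bias = reparameterize(k3, bn3, 0.7, bn1, bn_id)
fused_out = conv3x3(x, kernel, bias)                       # one conv at inference time
branch_out = train_time_block(x, k3, bn3, 0.7, bn1, bn_id)  # three branches at training time
```

Because convolution and inference-mode BatchNorm are both linear, the two outputs agree up to floating-point rounding; real multi-channel blocks apply the same fold per output channel.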

🚀Support for more Necks

- FPN

- PANet

- RepPAN

- BiFPN

- CSPPAN

- SlimNeck

- ELANPAN

Continuously updated🎈
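BiFPN, one of the necks above, merges inputs of different resolutions with learnable weights. The EfficientDet paper that introduced it uses "fast normalized fusion": O = Σᵢ wᵢ·Iᵢ / (ε + Σⱼ wⱼ), with each wᵢ kept non-negative by a ReLU. The sketch below shows only that fusion rule in pure Python, with feature maps flattened to lists of the same length; the function name and ε = 1e-4 are assumptions, and this is not YOLOAir's BiFPN module.

```python
def fast_normalized_fusion(features, weights, eps=1e-4):
    """BiFPN-style weighted fusion of same-shaped feature maps (given as flat lists).

    Each weight is clipped at zero (ReLU) and the weights are normalised by their sum,
    so the output is close to a convex combination of the inputs.
    """
    if len(features) != len(weights):
        raise ValueError("expected one weight per input feature map")
    w = [max(0.0, wi) for wi in weights]
    norm = sum(w) + eps
    return [sum(wi * f[k] for wi, f in zip(w, features)) / norm for k in range(len(features[0]))]


# Two inputs, e.g. a lateral feature and an upsampled top-down feature (toy values).
fused = fast_normalized_fusion([[1.0, 2.0], [3.0, 4.0]], [1.0, 1.0])
```

Normalising by the sum instead of a softmax is the design choice EfficientDet motivates by speed; a negative learned weight simply switches its input off.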

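🚀Support for more detection Heads

- YOLOv4 and YOLOv5 detection heads;

- YOLOR implicit-learning detection head;

- YOLOX decoupled head (Decoupled Head, DetectX Head);

- Adaptive spatial feature fusion head (ASFF Head);

- YOLOv6-v2.0 Efficient decoupled head;

- YOLOv7 detection heads: IAuxDetect Head, IDetect Head, etc.;

- Other detection heads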

🚀Support for more plug-and-play attention mechanisms

- Attention mechanisms can be plugged into any part of the network

- Self Attention

- Contextual Transformer

- Bottleneck Transformer

- S2-MLP Attention

- SK Attention

- CBAM Attention

- SE Attention

- Coordinate attention

- NAM Attention

- GAM Attention

- ECA Attention

- Shuffle Attention

- CrissCrossAttention


- SOCAttention

- SimAM Attention

Continuously updated🎈

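- 🔥🔥🔥 Highly recommended! As a complement to this project, see the attention code library [External-Attention](https://github.com/xmu-xiaoma666/External-Attention-pytorch). It collects a comprehensive set of Attention implementations and more, with concise, readable code that lets you add an attention mechanism in one line.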
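SimAM, listed above, is a convenient example of a plug-and-play attention module because it has no learnable parameters: each activation is weighted by a sigmoid of an "energy" term that grows with its squared deviation from the channel mean. The pure-Python sketch below follows the formula used in the publicly available SimAM reference code, applied to a single channel given as a 2-D list; the function name is hypothetical and λ = 1e-4 is an assumed default.

```python
import math


def simam(channel, e_lambda=1e-4):
    """Parameter-free SimAM attention applied to one channel (an H x W list of floats).

    Pixels that differ more from the channel mean get a larger energy term and hence a
    larger sigmoid weight. Assumes the channel has more than one pixel.
    """
    values = [v for row in channel for v in row]
    n = len(values) - 1
    mu = sum(values) / len(values)
    sq_dev = [[(v - mu) ** 2 for v in row] for row in channel]
    var = sum(sum(row) for row in sq_dev) / n
    out = []
    for row, dev_row in zip(channel, sq_dev):
        # sigmoid(d / (4 * (var + lambda)) + 0.5) is the per-pixel attention weight
        weights = [1.0 / (1.0 + math.exp(-(d / (4 * (var + e_lambda)) + 0.5))) for d in dev_row]
        out.append([v * w for v, w in zip(row, weights)])
    return out


refined = simam([[0.0, 0.0], [0.0, 4.0]])  # the outlier pixel keeps most of its value
```

In a real network the same computation runs per channel on a tensor, which is why it can be inserted after almost any convolution without changing shapes.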

🚀More spatial pyramid pooling structures

- SPP

- SPPF

- ASPP

- RFB

- SPPCSPC

- SPPFCSPC

- SimSPPF

Continuously updated🎈
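The difference between SPP and SPPF above is mostly about cost. SPP runs 5×5, 9×9 and 13×13 stride-1 max pools in parallel; SPPF runs one 5×5 pool three times in series, and two stacked 5×5 pools cover a 9×9 window while three cover 13×13. The pure-Python sketch below checks that equivalence on a toy single-channel map. It leaves out the 1×1 convolutions that wrap the pools in the real modules and uses a Python list in place of channel concatenation; the function names are hypothetical.

```python
def max_pool(x, k):
    """k x k max pooling, stride 1, same output size; out-of-bounds cells are ignored (like -inf padding)."""
    h, w, r = len(x), len(x[0]), k // 2
    return [
        [
            max(x[ii][jj]
                for ii in range(max(0, i - r), min(h, i + r + 1))
                for jj in range(max(0, j - r), min(w, j + r + 1)))
            for j in range(w)
        ]
        for i in range(h)
    ]


def spp(x, kernels=(5, 9, 13)):
    """SPP: parallel max pools of different sizes, 'concatenated' with the input."""
    return [x] + [max_pool(x, k) for k in kernels]


def sppf(x, k=5):
    """SPPF: the same k x k pool applied three times in series."""
    y1 = max_pool(x, k)
    y2 = max_pool(y1, k)
    y3 = max_pool(y2, k)
    return [x, y1, y2, y3]


feature = [[(i * 7 + j * 13) % 11 for j in range(8)] for i in range(7)]
assert spp(feature) == sppf(feature)  # same outputs from smaller, reused pooling windows
```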

🚀Support for more losses

- ComputeLoss

- ComputeLoss(v5)

- ComputeLoss(v6)

- ComputeLoss(X)

- ComputeLossAuxOTA(v7)

- ComputeLossOTA(v7)

- ComputeNWDLoss

- Other losses
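`ComputeNWDLoss` presumably refers to the Normalized Gaussian Wasserstein Distance proposed for tiny-object detection. In that formulation each box (cx, cy, w, h) is modelled as a 2-D Gaussian N((cx, cy), diag(w²/4, h²/4)), the squared Wasserstein distance between two such Gaussians has a closed form, and NWD = exp(−√W₂² / C), where C is a dataset-dependent constant. The pure-Python sketch below follows that formulation only; the function names and the value of C are assumptions, and it does not show how YOLOAir's `ComputeNWDLoss` combines NWD with its other loss terms.

```python
import math


def wasserstein_sq(box_a, box_b):
    """Squared 2nd-order Wasserstein distance between boxes (cx, cy, w, h) modelled as
    2-D Gaussians N((cx, cy), diag(w^2 / 4, h^2 / 4))."""
    (xa, ya, wa, ha), (xb, yb, wb, hb) = box_a, box_b
    return (xa - xb) ** 2 + (ya - yb) ** 2 + ((wa - wb) / 2) ** 2 + ((ha - hb) / 2) ** 2


def nwd(box_a, box_b, c):
    """Normalized Wasserstein distance in (0, 1]; c is a dataset-dependent constant."""
    return math.exp(-math.sqrt(wasserstein_sq(box_a, box_b)) / c)


def nwd_loss(pred, target, c):
    return 1.0 - nwd(pred, target, c)


# Two tiny boxes that do not overlap (IoU = 0) still get a non-zero similarity.
similarity = nwd((0.0, 0.0, 2.0, 2.0), (5.0, 0.0, 2.0, 2.0), c=10.0)
```

The non-zero similarity for disjoint boxes is the property that makes this measure attractive for very small objects, where IoU drops to zero after a shift of a few pixels.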

🚀Support for both Anchor-based and Anchor-free models

- 🌟 YOLOv5、YOLOv7、YOLOv3、YOLOv4、YOLOR、ScaledYOLOv4、PPYOLO、PPYOLOv2、Improved-YOLOv5、Improved-YOLOv7

- 🌟 YOLOX、YOLOv6 (Paper)、PPYOLOE、PPYOLOE+
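The practical difference between the two families shows up when raw head outputs are decoded into boxes. The pure-Python sketch below decodes one prediction both ways, following the formulas used in the upstream YOLOv5 (anchor-based: centre offset within a grid cell, size as a bounded multiple of an anchor prior) and YOLOX (anchor-free: size predicted in log space and scaled by the stride) code. The function names are hypothetical and the inputs are toy values.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def decode_anchor_based(t, cell, stride, anchor):
    """YOLOv5-style: raw (tx, ty, tw, th) for grid cell (cx, cy) and an anchor (w, h) in pixels."""
    tx, ty, tw, th = t
    cx, cy = cell
    x = (2 * sigmoid(tx) - 0.5 + cx) * stride  # centre may move up to half a cell outside its own cell
    y = (2 * sigmoid(ty) - 0.5 + cy) * stride
    w = (2 * sigmoid(tw)) ** 2 * anchor[0]      # size bounded to (0, 4) x the anchor
    h = (2 * sigmoid(th)) ** 2 * anchor[1]
    return x, y, w, h


def decode_anchor_free(t, cell, stride):
    """YOLOX-style: no anchor prior; size is predicted in log space relative to the stride."""
    tx, ty, tw, th = t
    cx, cy = cell
    return (tx + cx) * stride, (ty + cy) * stride, math.exp(tw) * stride, math.exp(th) * stride


based = decode_anchor_based((0.0, 0.0, 0.0, 0.0), cell=(3, 5), stride=8, anchor=(10, 13))
free = decode_anchor_free((0.0, 0.0, 0.0, 0.0), cell=(3, 5), stride=8)
```

With all-zero raw outputs, the anchor-based box collapses to the anchor size at the cell centre, while the anchor-free box is a stride-sized square at the cell corner; this is one reason the two families need different label assignment strategies.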

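🚀Support for multiple label assignment strategies

- Multi Anchor strategy
- YOLOv5 label assignment strategy
- SimOTA label assignment strategy
- YOLOv7 label assignment strategy
- AutoAssign label assignment strategy🌟
- Dual Weighting label assignment strategy🌟
- FreeAnchor label assignment strategy🌟
- Other improved label assignment strategies

Continuously updated🎈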
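As an example of what a label assignment strategy decides, here is a pure-Python sketch of the YOLOv5 strategy, following the logic of YOLOv5's `build_targets`. A target is matched to every anchor whose width and height ratios (in both directions) stay below `anchor_t` (4.0 in YOLOv5's default hyperparameters), and it is assigned to its own grid cell plus up to two neighbouring cells whose shared border is closest to its centre, which gives more positive samples per target. The function names are hypothetical and this is not YOLOAir's code; SimOTA, AutoAssign and the other strategies above decide assignments differently.

```python
def match_anchors(target_wh, anchors, anchor_t=4.0):
    """Indices of anchors whose w/h ratios to the target (in both directions) stay below anchor_t."""
    tw, th = target_wh
    matched = []
    for idx, (aw, ah) in enumerate(anchors):
        rw, rh = tw / aw, th / ah
        if max(rw, 1 / rw, rh, 1 / rh) < anchor_t:
            matched.append(idx)
    return matched


def positive_cells(gx, gy, grid_w, grid_h, g=0.5):
    """Own cell plus the nearest neighbouring cells; (gx, gy) is the target centre in grid units."""
    offsets = [(0.0, 0.0)]
    if gx % 1 < g and gx > 1:
        offsets.append((g, 0.0))    # left neighbour
    if gy % 1 < g and gy > 1:
        offsets.append((0.0, g))    # top neighbour
    if (grid_w - gx) % 1 < g and grid_w - gx > 1:
        offsets.append((-g, 0.0))   # right neighbour
    if (grid_h - gy) % 1 < g and grid_h - gy > 1:
        offsets.append((0.0, -g))   # bottom neighbour
    return [(int(gx - ox), int(gy - oy)) for ox, oy in offsets]


anchors_p3 = [(10, 13), (16, 30), (33, 23)]  # YOLOv5 P3/8 anchors, in pixels
matched = match_anchors((30, 60), anchors_p3)  # anchor 0 is too small in height
cells = positive_cells(5.3, 7.8, 20, 20)       # own cell, left and bottom neighbours
```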

🚀Support for Weighted Boxes Fusion (WBF)

🚀 Built-in modular network components

Conv, GhostConv, Bottleneck, GhostBottleneck, SPP, SPPF, DWConv, MixConv2d, Focus, CrossConv, BottleneckCSP, C3, C3TR, C3SPP, C3Ghost, C3HB, C3RFEM, MultiSEAM, SEAM, C3STR, SPPCSPC, RepConv, BoT3, Air, CA, CBAM, Involution, Stem, ResCSPC, ResCSPB, ResXCSPB, ResXCSPC, BottleneckCSPB, BottleneckCSPC, ASPP, BasicRFB, SPPCSPC_group, HorBlock, CNeB, C3GC, C3C2, nn.ConvTranspose2d, DWConvblock, RepVGGBlock, CoT3, ConvNextBlock, SPPCSP, BottleneckCSP2, DownC, BottleneckCSPF, ReOrg, DWT, MobileOne, HorNet, and more; see **./models/common.py** for the detailed code.
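In the YOLOv5 code base this project follows, each model config describes a layer as a `[from, number, module, args]` row, and the model builder resolves `module` to a class defined in `./models/common.py`; that is what makes swapping a block a one-line config change. The pure-Python sketch below shows the idea with a name-to-class registry. `REGISTRY`, `register`, `build`, and the toy `Conv`/`SE` classes are hypothetical stand-ins, not YOLOAir's actual model parser.

```python
REGISTRY = {}


def register(cls):
    """Hypothetical decorator: make a component addressable by its class name in a config."""
    REGISTRY[cls.__name__] = cls
    return cls


@register
class Conv:
    """Toy stand-in for a convolution block."""

    def __init__(self, c_out, k=1, s=1):
        self.c_out, self.k, self.s = c_out, k, s


@register
class SE:
    """Toy stand-in for an attention block."""

    def __init__(self, reduction=16):
        self.reduction = reduction


def build(rows):
    """Instantiate [from, number, module, args] rows; `number` repeats the module."""
    layers = []
    for frm, number, name, args in rows:
        if name not in REGISTRY:
            raise KeyError(f"unknown module {name!r}")
        layers.append((frm, [REGISTRY[name](*args) for _ in range(number)]))
    return layers


backbone = [
    [-1, 1, "Conv", [64, 3, 2]],
    [-1, 1, "Conv", [128, 3, 2]],
    [-1, 1, "SE", [16]],  # swap the attention block by editing this one row
]
model = build(backbone)
```

An explicit registry fails loudly on unknown names, which is easier to debug than resolving names dynamically.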

🚀Support for more IoU loss functions

- CIoU

- DIoU

- GIoU

- EIoU

- SIoU

- Alpha-IoU

Continuously updated🎈
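The IoU variants above differ only in the penalty they subtract from plain IoU: GIoU subtracts the fraction of the smallest enclosing box not covered by the union; DIoU subtracts the squared centre distance over the squared enclosing-box diagonal; CIoU additionally subtracts an aspect-ratio term αv; EIoU replaces that term with separate width and height penalties. The corresponding loss is 1 − value. The pure-Python sketch below computes these for one pair of (x1, y1, x2, y2) boxes; the function name is hypothetical, SIoU and Alpha-IoU are omitted, and this is not YOLOAir's own IoU code.

```python
import math


def iou_family(a, b, eps=1e-9):
    """IoU and its GIoU / DIoU / CIoU / EIoU variants for boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    wa, ha, wb, hb = ax2 - ax1, ay2 - ay1, bx2 - bx1, by2 - by1
    inter = max(0.0, min(ax2, bx2) - max(ax1, bx1)) * max(0.0, min(ay2, by2) - max(ay1, by1))
    union = wa * ha + wb * hb - inter + eps
    iou = inter / union

    # Smallest box enclosing both inputs.
    cw = max(ax2, bx2) - min(ax1, bx1)
    ch = max(ay2, by2) - min(ay1, by1)
    giou = iou - (cw * ch - union) / (cw * ch + eps)

    # Squared centre distance over the squared diagonal of the enclosing box.
    rho2 = ((ax1 + ax2 - bx1 - bx2) ** 2 + (ay1 + ay2 - by1 - by2) ** 2) / 4
    diou = iou - rho2 / (cw ** 2 + ch ** 2 + eps)

    # CIoU adds an aspect-ratio consistency term.
    v = (4 / math.pi ** 2) * (math.atan(wb / (hb + eps)) - math.atan(wa / (ha + eps))) ** 2
    alpha = v / (1 - iou + v + eps)
    ciou = diou - alpha * v

    # EIoU penalises width and height differences separately.
    eiou = diou - (wa - wb) ** 2 / (cw ** 2 + eps) - (ha - hb) ** 2 / (ch ** 2 + eps)

    return {"iou": iou, "giou": giou, "diou": diou, "ciou": ciou, "eiou": eiou}


# Each loss is 1 - metric, e.g. the CIoU loss for one prediction/target pair:
ciou_loss = 1 - iou_family((0, 0, 2, 2), (1, 1, 3, 3))["ciou"]
```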

🚀Support for more NMS variants

- NMS

- Merge-NMS

- Soft-NMS

- CIoU_NMS

- DIoU_NMS

- GIoU_NMS

- EIoU_NMS

- SIoU_NMS

- Soft-SIoUNMS, Soft-CIoUNMS, Soft-DIoUNMS, Soft-EIoUNMS, Soft-GIoUNMS, etc.

Continuously updated🎈
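Standard NMS greedily keeps the highest-scoring box and deletes every other box that overlaps it above an IoU threshold; Soft-NMS instead decays the overlapping boxes' scores, here with the Gaussian penalty exp(−IoU²/σ). The pure-Python sketch below shows both for a single class. The function names and the threshold values are assumptions chosen for illustration; the CIoU/DIoU/GIoU/EIoU/SIoU variants listed above would replace `box_iou` with the corresponding metric.

```python
import math


def box_iou(a, b):
    """Plain IoU of two (x1, y1, x2, y2) boxes."""
    inter = max(0.0, min(a[2], b[2]) - max(a[0], b[0])) * max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_thres=0.45):
    """Greedy NMS: keep the best box, discard boxes overlapping it by more than iou_thres, repeat."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if box_iou(boxes[best], boxes[i]) <= iou_thres]
    return keep


def soft_nms(boxes, scores, sigma=0.5, score_thres=0.001):
    """Gaussian Soft-NMS: decay overlapping scores by exp(-IoU^2 / sigma) instead of discarding them."""
    scores = list(scores)
    remaining = list(range(len(boxes)))
    keep = []
    while remaining:
        best = max(remaining, key=lambda i: scores[i])
        if scores[best] < score_thres:
            break  # every remaining score is lower still
        remaining.remove(best)
        keep.append((best, scores[best]))
        for i in remaining:
            scores[i] *= math.exp(-box_iou(boxes[best], boxes[i]) ** 2 / sigma)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
hard = nms(boxes, scores)       # the second box is suppressed
soft = soft_nms(boxes, scores)  # the second box survives with a decayed score
```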

🚀Support for more data augmentation

- Mosaic, Copy paste, Random affine (Rotation, Scale, Translation and Shear), MixUp, Augment HSV (Hue, Saturation, Value), Random horizontal flip
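Geometric augmentations must transform the labels together with the image, while photometric ones leave labels alone. The pure-Python sketch below shows one of each: a horizontal flip, which for normalised YOLO-format labels (class, x-centre, y-centre, width, height) only mirrors the x-centre (x → 1 − x), and HSV augmentation, which scales hue, saturation, and value by random gains around 1. The default gains 0.015/0.7/0.4 are taken from YOLOv5's default hyperparameter files and are assumed to apply here; the function names are hypothetical.

```python
import random


def hflip(image, labels):
    """Mirror an image (rows of pixels) left-right and its normalised YOLO labels (cls, x, y, w, h)."""
    flipped = [list(reversed(row)) for row in image]
    return flipped, [(cls, 1.0 - x, y, w, h) for cls, x, y, w, h in labels]


def sample_hsv_gains(rng, hgain=0.015, sgain=0.7, vgain=0.4):
    """Random multiplicative gains around 1 for hue, saturation and value."""
    return tuple(1 + rng.uniform(-1, 1) * g for g in (hgain, sgain, vgain))


def apply_hsv_gains(hsv_pixel, gains):
    """Scale one OpenCV-style HSV pixel (H in [0, 180), S and V in [0, 255])."""
    (h, s, v), (gh, gs, gv) = hsv_pixel, gains
    return int(h * gh) % 180, min(255, max(0, int(s * gs))), min(255, max(0, int(v * gv)))


img, labels = hflip([[1, 2, 3]], [(0, 0.25, 0.5, 0.2, 0.4)])
pixel = apply_hsv_gains((90, 120, 200), sample_hsv_gains(random.Random(0)))
```

Hue wraps around because it is an angle, whereas saturation and value are clipped to the valid 8-bit range.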

🚀Support for loading the official pretrained weights of YOLOv3, YOLOv4, YOLOv5, YOLOv7, YOLOR, Scaled_YOLO, and other networks for transfer learning
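Loading official weights into a modified network usually means copying only the parameters whose names and shapes still match, so that a changed part (for example, a head with a different number of classes) is left to its fresh initialisation. YOLOv5's training script does this with a helper called `intersect_dicts`. The pure-Python sketch below mirrors that idea with shape tuples standing in for tensors; the function name and the parameter names in the example are hypothetical.

```python
def intersect_by_shape(pretrained, model, exclude=()):
    """Keep pretrained entries whose key exists in `model` with the same shape.

    Both arguments map parameter names to shapes (stand-ins for tensors);
    keys containing any substring in `exclude` are skipped.
    """
    return {
        k: shape for k, shape in pretrained.items()
        if k in model and not any(x in k for x in exclude) and shape == model[k]
    }


pretrained = {
    "model.0.conv.weight": (32, 3, 6, 6),
    "model.24.m.0.weight": (255, 128, 1, 1),  # 3 anchors x (80 classes + 5)
    "model.24.anchors": (3, 3, 2),
}
custom = {
    "model.0.conv.weight": (32, 3, 6, 6),
    "model.24.m.0.weight": (18, 128, 1, 1),   # 3 anchors x (1 class + 5)
    "model.24.anchors": (3, 3, 2),
}
transferable = intersect_by_shape(pretrained, custom, exclude=("anchor",))
```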

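🚀 Heatmap visualization for YOLO-series network models (Grad-CAM, Grad-CAM++, etc.)

Supported for YOLOv3, YOLOv3-SPP, YOLOv4, YOLOv5, YOLOR, YOLOv7, Scaled_YOLOv4, TPH-YOLO, SPD-YOLO, custom network models, and more (already supported in the YOLOAir Beta internal-testing version🔥)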
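Grad-CAM weights each channel of a chosen feature map by the spatial mean of the gradient of a target score with respect to that channel, sums the weighted channels, and applies ReLU. For a detector, the target score would typically be the class confidence of one selected detection (an assumption here). The pure-Python sketch below assumes the activations and gradients have already been extracted, for example with framework hooks; the function name is hypothetical, and Grad-CAM++ differs in how it computes the channel weights.

```python
def grad_cam(activations, gradients):
    """Grad-CAM map from one layer's activations and the gradients of a target score.

    Both arguments are lists of K channels, each an H x W list of floats.
    Returns an H x W map scaled to [0, 1].
    """
    h, w = len(activations[0]), len(activations[0][0])
    # Channel weight = spatial mean of that channel's gradient.
    weights = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    cam = [
        [max(0.0, sum(wk * a[i][j] for wk, a in zip(weights, activations))) for j in range(w)]
        for i in range(h)
    ]
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam


acts = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 2.0]]]
grads = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
heatmap = grad_cam(acts, grads)  # only the positively weighted channel's peak remains
```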

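🚀Overview of architecture diagrams for mainstream network models: [Models🔗](https://github.com/iscyy/yoloair/blob/main/docs/document/model_.md)

The component modules above share a unified model code framework, a unified task format, and a unified usage pattern. **Componentized modules**🚀 help users quickly customize and combine Backbone, Neck, and Head to diversify network models, support research on improving detection algorithms, and build more powerful network models.

Note: the key features above cover both the Main and Beta versions. Some features have so far only been added to Beta; not every update goes straight into Main. After internal Beta testing, they will gradually be merged into Main.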

______________________________________________________________________

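### Built-in Network Model Configurations✨

🚀Includes model configuration files for YOLOv5, YOLOv7, YOLOX, YOLOR, YOLOv3, YOLOv4, Scaled_YOLOv4, PPYOLO, PPYOLOE, PPYOLOEPlus, Transformer, YOLO-FaceV2, PicoDet, YOLOv5-Lite, TPH-YOLOv5, SPD-YOLO, and **many other improved network architectures and algorithm models**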

______________________________________________________________________

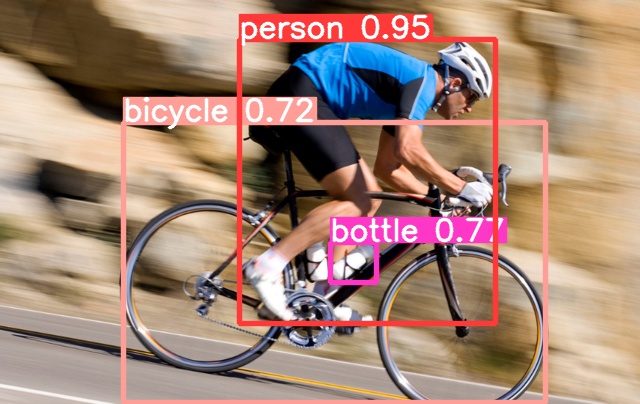

### Results Preview🚀

Preview panels (images not included): object detection, object segmentation, image classification, instance segmentation, object tracking, pose estimation, face detection, and two heatmap examples.

### Pretrained Weights🚀

- YOLOv5

https://github.com/ultralytics/yolov5/releases/tag/v6.1

- YOLOv4

https://github.com/iscyy/yoloair/releases/tag/v1.0

- YOLOv3

https://github.com/iscyy/yoloair/releases/tag/v1.0

- YOLOR

https://github.com/iscyy/yoloair/releases/tag/v1.0

- Scaled_YOLO

https://github.com/iscyy/yoloair/releases/tag/v1.0

- YOLOv7

https://github.com/iscyy/yoloair/releases/tag/v1.0

______________________________________________________________________

## Usage🍉

**About the code.** Follows the design principles of [YOLOv5](https://github.com/ultralytics/yolov5).

The original version was built on YOLOv5 (v6.1).

### Installation

Clone the repository and install the dependencies in requirements.txt in a **Python>=3.7.0** environment, including **PyTorch>=1.7**.

```bash
$ git clone https://github.com/iscyy/yoloair.git  # clone
$ cd yoloair
$ pip install -r requirements.txt  # install
```

### Training

```bash
$ python train.py --data coco128.yaml --cfg configs/yolov5/yolov5s.yaml  # defaults to YOLO
```

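### Inference

`detect.py` runs inference on a variety of sources and saves the results to the `runs/detect` directory.

```bash
$ python detect.py --source 0             # webcam
$ python detect.py --source img.jpg       # image
$ python detect.py --source vid.mp4       # video
$ python detect.py --source path/         # directory
$ python detect.py --source 'path/*.jpg'  # glob
```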

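### Fusion

If you run inference on a dataset with several different models, you can use wbf.py to ensemble their results with weighted boxes fusion.

You only need to set the image path and the txt path in wbf.py.

```bash
$ python wbf.py
```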
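Rather than discarding overlapping boxes as NMS does, weighted boxes fusion averages them, weighting each box's coordinates by its confidence. The pure-Python sketch below is a simplified version of the published WBF procedure: predictions from all models are pooled, sorted by score, and clustered by label and IoU with the current fused box; the fused confidence is the mean cluster score rescaled by min(T, N)/N, where T is the cluster size and N the number of models. It is not the implementation in `wbf.py`; per-model weights and score-skip thresholds are omitted, and the function names and `iou_thr=0.55` are assumptions.

```python
def box_iou(a, b):
    """Plain IoU of two (x1, y1, x2, y2) boxes."""
    inter = max(0.0, min(a[2], b[2]) - max(a[0], b[0])) * max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def weighted_boxes_fusion(predictions, num_models, iou_thr=0.55):
    """Fuse (x1, y1, x2, y2, score, label) predictions pooled from `num_models` models."""
    clusters = []
    for *box, score, label in sorted(predictions, key=lambda p: p[4], reverse=True):
        for cluster in clusters:
            if cluster["label"] == label and box_iou(cluster["box"], box) > iou_thr:
                cluster["members"].append((box, score))
                break
        else:  # no matching cluster: start a new one
            cluster = {"label": label, "members": [(box, score)]}
            clusters.append(cluster)
        total = sum(s for _, s in cluster["members"])
        # Fused coordinates: confidence-weighted average of the member boxes.
        cluster["box"] = [sum(b[k] * s for b, s in cluster["members"]) / total for k in range(4)]
    fused = []
    for cluster in clusters:
        scores = [s for _, s in cluster["members"]]
        t = len(scores)
        conf = sum(scores) / t * min(t, num_models) / num_models  # boxes few models agree on are down-weighted
        fused.append((*cluster["box"], conf, cluster["label"]))
    return fused


preds = [(0, 0, 10, 10, 0.9, 0), (50, 50, 60, 60, 0.8, 1),  # model A
         (1, 1, 11, 11, 0.6, 0)]                            # model B
fused = weighted_boxes_fusion(preds, num_models=2)
```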

______________________________________________________________________

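### Paper Sharing

The FightingCV WeChat official account shares cutting-edge papers every day.

(Reply "加群" to the official account to add the assistant and join the discussion group.)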

______________________________________________________________________

### Performance

| Model | size<br>(pixels) | mAP<sup>val</sup><br>0.5:0.95 | mAP<sup>val</sup><br>0.5 | Speed<br>CPU b1<br>(ms) | Speed<br>V100 b1<br>(ms) | Speed<br>V100 b32<br>(ms) | params<br>(M) | FLOPs<br>@640 (B) | Weights |
|---|---|---|---|---|---|---|---|---|---|
| YOLOv5n | 640 | 28.0 | 45.7 | **45** | **6.3** | **0.6** | **1.9** | **4.5** | [YOLOv5n](https://github.com/ultralytics/yolov5/releases/download/v6.1/yolov5n.pt) |
| YOLOv5s | 640 | 37.4 | 56.8 | 98 | 6.4 | 0.9 | 7.2 | 16.5 | [YOLOv5s](https://github.com/ultralytics/yolov5/releases/download/v6.1/yolov5s.pt) |
| YOLOv5m | 640 | 45.4 | 64.1 | 224 | 8.2 | 1.7 | 21.2 | 49.0 | [YOLOv5m](https://github.com/ultralytics/yolov5/releases/download/v6.1/yolov5m.pt) |
| YOLOv5l | 640 | 49.0 | 67.3 | 430 | 10.1 | 2.7 | 46.5 | 109.1 | [YOLOv5l](https://github.com/ultralytics/yolov5/releases/download/v6.1/yolov5l.pt) |
| YOLOv5x | 640 | 50.7 | 68.9 | 766 | 12.1 | 4.8 | 86.7 | 205.7 | [YOLOv5x](https://github.com/ultralytics/yolov5/releases/download/v6.1/yolov5x.pt) |
| | | | | | | | | | |
| YOLOv5n6 | 1280 | 36.0 | 54.4 | 153 | 8.1 | 2.1 | 3.2 | 4.6 | [YOLOv5n6](https://github.com/ultralytics/yolov5/releases/download/v6.1/yolov5n6.pt) |
| YOLOv5s6 | 1280 | 44.8 | 63.7 | 385 | 8.2 | 3.6 | 12.6 | 16.8 | [YOLOv5s6](https://github.com/ultralytics/yolov5/releases/download/v6.1/yolov5s6.pt) |
| YOLOv5m6 | 1280 | 51.3 | 69.3 | 887 | 11.1 | 6.8 | 35.7 | 50.0 | [YOLOv5m6](https://github.com/ultralytics/yolov5/releases/download/v6.1/yolov5m6.pt) |
| YOLOv5l6 | 1280 | 53.7 | 71.3 | 1784 | 15.8 | 10.5 | 76.8 | 111.4 | [YOLOv5l6](https://github.com/ultralytics/yolov5/releases/download/v6.1/yolov5l6.pt) |
| YOLOv5x6<br>+ TTA | 1280<br>1536 | 55.0<br>**55.8** | 72.7<br>**72.7** | 3136<br>- | 26.2<br>- | 19.4<br>- | 140.7<br>- | 209.8<br>- | [YOLOv5x6](https://github.com/ultralytics/yolov5/releases/download/v6.1/yolov5x6.pt) |

* The original version was built on YOLOv5 (v6.1).

______________________________________________________________________

| Model | size<br>(pixels) | mAP<sup>val</sup><br>0.5:0.95 | mAP<sup>val</sup><br>0.5 | deconv<br>kernel size | Speed<br>V100 b1<br>(ms) | Speed<br>V100 b32<br>(ms) | params<br>(M) | FLOPs<br>@640 (B) |
|---|---|---|---|---|---|---|---|---|
| YOLOv5s | 640 | 33.7 | 52.9 | - | **5.6** | **2.2** | **7.23** | **16.5** |
| YOLOv5s-deconv-exp1 | 640 | 33.4 | 52.5 | 2 | **5.6** | 2.4 | 7.55 | 18.2 |
| YOLOv5s-deconv-exp2 | 640 | **34.7** | **54.2** | 4 | 5.8 | 2.5 | 8.54 | 23.2 |

* Training was run on 4× V100 GPUs.

```bash
# train
python -m torch.distributed.run --nproc_per_node 4 train.py --device 0,1,2,3 --data data/coco.yaml --hyp data/hyps/hyp.scratch-low.yaml --cfg path/to/model.yaml --batch 512 --epochs 300 --weights ''

# val
python val.py --verbose --data data/coco.yaml --conf 0.001 --iou 0.65 --batch 1 --weights path/to/model.pt
```

* The YOLOv5s mAP here differs from the official figure; these results are only for relative comparison.

______________________________________________________________________

| Model | size<br>(pixels) | mAP<sup>val</sup><br>0.5:0.95 | mAP<sup>val</sup><br>0.5 | params<br>(M) | FLOPs<br>@640 (B) |
|---|---|---|---|---|---|
| YOLOv5s | 640 | 37.4 | 56.6 | **7.226** | **16.5** |
| YOLOv5s-deconv | 640 | **37.8** | **57.1** | 7.232 | **16.5** |

* Tested a 4x4 depthwise-separable deconvolution by setting `groups` to the number of input channels.

* The two models have nearly the same parameter count and FLOPs, while the new model's mAP is about 0.4 higher than the original's.
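The near-identical parameter counts above come down to how `groups` shrinks a transposed convolution's weight. In PyTorch, `nn.ConvTranspose2d` stores a weight of shape (in_channels, out_channels / groups, k, k) plus `out_channels` bias terms, so setting groups equal to the input channel count leaves one k×k filter per channel. The helper below only does that arithmetic; its name and the 256-channel example are assumptions, not values taken from the YOLOAir configs.

```python
def conv_transpose2d_params(c_in, c_out, k, groups=1, bias=True):
    """Parameter count of a 2-D transposed convolution with a square k x k kernel."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    return c_in * (c_out // groups) * k * k + (c_out if bias else 0)


dense = conv_transpose2d_params(256, 256, 4)                  # 256 * 256 * 16 + 256
depthwise = conv_transpose2d_params(256, 256, 4, groups=256)  # 256 * 1 * 16 + 256
```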

______________________________________________________________________

| Model | size<br>(pixels) | mAP<sup>val</sup><br>0.5:0.95 | mAP<sup>val</sup><br>0.5 | params<br>(M) | FLOPs<br>@640 (B) |
|---|---|---|---|---|---|
| YOLOv5s | 640 | 37.2 | 56.0 | **7.2** | **16.5** |
| YOLOv5s-C3GC-backbone | 640 | **37.7** | **57.3** | 7.5 | 16.8 |

* The original version was built on YOLOv5 v6.0.

______________________________________________________________________

### YOLOv5 Tutorials✨

Kept in sync with the YOLOv5 framework

- [Train Custom Data](https://github.com/ultralytics/yolov5/wiki/Train-Custom-Data) 🚀 Recommended
- [Tips for Best Training Results](https://github.com/ultralytics/yolov5/wiki/Tips-for-Best-Training-Results) ☘️ Recommended
- [Logging experiments with Weights & Biases](https://github.com/ultralytics/yolov5/issues/1289) 🌟 New
- [Roboflow: datasets, labeling, and active learning](https://github.com/ultralytics/yolov5/issues/4975) 🌟 New
- [Multi-GPU training](https://github.com/ultralytics/yolov5/issues/475)
- [PyTorch Hub](https://github.com/ultralytics/yolov5/issues/36) ⭐ New
- [TFLite, ONNX, CoreML, TensorRT export](https://github.com/ultralytics/yolov5/issues/251) 🚀
- [Test-Time Augmentation (TTA)](https://github.com/ultralytics/yolov5/issues/303)
- [Model ensembling](https://github.com/ultralytics/yolov5/issues/318)
- [Model pruning/sparsity](https://github.com/ultralytics/yolov5/issues/304)
- [Hyperparameter evolution](https://github.com/ultralytics/yolov5/issues/607)
- [Transfer learning with frozen layers](https://github.com/ultralytics/yolov5/issues/1314) ⭐ New
- [Architecture summary](https://github.com/ultralytics/yolov5/issues/6998) ⭐ New

______________________________________________________________________

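### Future Enhancements✨

We will keep building and improving the YOLOAir ecosystem.

We will integrate more YOLOv5-series models and keep combining different modules to build more network models.

We will also expand horizontally and bring in related techniques.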

______________________________________________________________________

## Citation✨

```bibtex
@article{2022yoloair,
  title={{YOLOAir}: Makes improvements easy again},
  author={iscyy},
  repo={github https://github.com/iscyy/yoloair},
  year={2022}
}
```

## Statement

* The content of this site is only for sharing notes. If any content infringes your rights, please contact me by email.

* If you have any questions, please contact me by email.

## Acknowledgements


[https://github.com/ultralytics/yolov5](https://github.com/ultralytics/yolov5)

[https://github.com/ultralytics/yolov3](https://github.com/ultralytics/yolov3)

[https://github.com/WongKinYiu/PyTorch_YOLOv4](https://github.com/WongKinYiu/PyTorch_YOLOv4)

[https://github.com/WongKinYiu/ScaledYOLOv4](https://github.com/WongKinYiu/ScaledYOLOv4)

[https://github.com/meituan/YOLOv6](https://github.com/meituan/YOLOv6)

[https://github.com/WongKinYiu/yolov7](https://github.com/WongKinYiu/yolov7)

[https://github.com/WongKinYiu/yolor](https://github.com/WongKinYiu/yolor)

[https://github.com/xmu-xiaoma666/External-Attention-pytorch](https://github.com/xmu-xiaoma666/External-Attention-pytorch)

[https://gitee.com/SearchSource/yolov5_yolox](https://gitee.com/SearchSource/yolov5_yolox)

[https://github.com/Krasjet-Yu/YOLO-FaceV2](https://github.com/Krasjet-Yu/YOLO-FaceV2)

[https://github.com/positive666/yolov5_research/](https://github.com/positive666/yolov5_research)

[https://github.com/ppogg/YOLOv5-Lite](https://github.com/ppogg/YOLOv5-Lite)

[https://github.com/Gumpest/YOLOv5-Multibackbone-Compression](https://github.com/Gumpest/YOLOv5-Multibackbone-Compression)