https://github.com/jiahuiyu/wdsr_ntire2018

Code of our winning entry to NTIRE super-resolution challenge, CVPR 2018

deep-neural-networks efficient-algorithm pytorch super-resolution wdsr

- Host: GitHub

- URL: https://github.com/jiahuiyu/wdsr_ntire2018

- Owner: JiahuiYu

- Created: 2018-03-29T19:34:17.000Z (over 7 years ago)

- Default Branch: master

- Last Pushed: 2020-04-27T01:21:12.000Z (over 5 years ago)

- Last Synced: 2025-03-29T03:06:13.140Z (8 months ago)

- Topics: deep-neural-networks, efficient-algorithm, pytorch, super-resolution, wdsr

- Language: Python

- Homepage: http://www.vision.ee.ethz.ch/ntire18/

- Size: 19.5 KB

- Stars: 602

- Watchers: 26

- Forks: 122

- Open Issues: 1

Metadata Files:

- Readme: README.md

README

# Wide Activation for Efficient and Accurate Image Super-Resolution

[Tech Report](https://arxiv.org/abs/1808.08718) | [Approach](#wdsr-network-architecture) | [Results](#overall-performance) | [TensorFlow](https://github.com/ychfan/tf_estimator_barebone/blob/master/docs/super_resolution.md) | [Other Implementations](#other-implementations) | [Bibtex](#citing)

**Update (Apr, 2020)**: We have released a [reloaded version](https://github.com/ychfan/wdsr) with full training scripts in PyTorch and pre-trained models.

**Update (Oct, 2018)**: We have re-implemented [WDSR on TensorFlow](https://github.com/ychfan/tf_estimator_barebone/blob/master/docs/super_resolution.md) for end-to-end training and testing. Pre-trained models are released. The runtime speed of [weight normalization on tensorflow](https://github.com/ychfan/tf_estimator_barebone/blob/master/common/layers.py) is also optimized.

## Run

0. Requirements:

* Install [PyTorch](https://pytorch.org/) (tested on release 0.4.0 and 0.4.1).

* Clone [EDSR-PyTorch](https://github.com/thstkdgus35/EDSR-PyTorch/tree/95f0571aa74ddf9dd01ff093081916d6f17d53f9) as the backbone training framework.

1. Training and Validation:

* Copy [wdsr_a.py](/wdsr_a.py), [wdsr_b.py](/wdsr_b.py) into `EDSR-PyTorch/src/model/`.

* Modify `EDSR-PyTorch/src/option.py` and `EDSR-PyTorch/src/demo.sh` to support the `--n_feats`, `--block_feats`, and `--[r,g,b]_mean` options (for reference, see issues [#7](https://github.com/JiahuiYu/wdsr_ntire2018/issues/7) and [#8](https://github.com/JiahuiYu/wdsr_ntire2018/issues/8)).

* Launch training with [EDSR-PyTorch](https://github.com/thstkdgus35/EDSR-PyTorch/tree/95f0571aa74ddf9dd01ff093081916d6f17d53f9) as the backbone training framework.

2. Still have questions?

* If you still have questions, please search the closed issues first. If the problem is not solved there, please open a new issue.
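The option changes in step 1 can be sketched with `argparse`. This is a minimal illustration, assuming `option.py` builds a standard `argparse` parser. The helper name `build_parser`, the help strings, and every default value below are hypothetical placeholders, not the repository's settings; see issues #7 and #8 for the values the authors discuss.

```python
import argparse


def build_parser():
    """Return a parser that carries only the extra WDSR-related options."""
    parser = argparse.ArgumentParser(description="WDSR option sketch")
    # Width of the residual (identity) path. Default is a placeholder.
    parser.add_argument("--n_feats", type=int, default=64,
                        help="number of feature maps on the residual path")
    # Assumed meaning: width inside each block, before the activation.
    parser.add_argument("--block_feats", type=int, default=128,
                        help="number of feature maps inside each block")
    # Per-channel dataset means in [0, 1]. Defaults are assumed values.
    parser.add_argument("--r_mean", type=float, default=0.4488,
                        help="mean of the R channel")
    parser.add_argument("--g_mean", type=float, default=0.4371,
                        help="mean of the G channel")
    parser.add_argument("--b_mean", type=float, default=0.4040,
                        help="mean of the B channel")
    return parser


if __name__ == "__main__":
    # Parse an explicit argument list instead of sys.argv for the demo.
    args = build_parser().parse_args(["--n_feats", "32", "--block_feats", "128"])
    print(args.n_feats, args.block_feats, args.r_mean, args.g_mean, args.b_mean)
```

In the real `option.py` only the `add_argument` calls would be needed, added to the existing parser; `demo.sh` would then pass the corresponding flags on the command line.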

## Overall Performance

| Network | Parameters | DIV2K (val) PSNR |
| - | - | - |
| EDSR Baseline | 1,372,318 | 34.61 |
| WDSR Baseline | **1,190,100** | **34.77** |

We measured PSNR on DIV2K images 0801 ~ 0900 (models trained on images 0001 ~ 0800), on RGB channels and without self-ensemble. Both baseline models have 16 residual blocks.
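For readers unfamiliar with the metric, PSNR is `10 * log10(MAX^2 / MSE)`, where `MSE` is the mean squared error over all pixel values. The sketch below computes it over flat sequences of values, for example all R, G, and B samples of an image. It is a minimal illustration, not the evaluation code used for the table: the function name `psnr` and the 8-bit `max_value` default are assumptions, and evaluation scripts often add details such as border cropping that are omitted here.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Return PSNR in dB between two equally sized sequences of pixel values."""
    if len(reference) != len(estimate):
        raise ValueError("inputs must have the same number of values")
    if len(reference) == 0:
        raise ValueError("inputs must not be empty")
    # Mean squared error over every value (all channels flattened together).
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    ground_truth = [10, 20, 30, 40, 50, 60]  # toy values, not real image data
    prediction = [11, 19, 30, 42, 50, 59]
    print(f"PSNR: {psnr(ground_truth, prediction):.2f} dB")
```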

More results:

| Number of Residual Blocks | SR Network | Parameters | DIV2K (val) PSNR |
| - | - | - | - |
| 1 | EDSR | 0.26M | 33.210 |
| 1 | WDSR-A | 0.08M | 33.323 |
| 1 | WDSR-B | 0.08M | 33.434 |
| 3 | EDSR | 0.41M | 34.043 |
| 3 | WDSR-A | 0.23M | 34.163 |
| 3 | WDSR-B | 0.23M | 34.205 |
| 5 | EDSR | 0.56M | 34.284 |
| 5 | WDSR-A | 0.37M | 34.388 |
| 5 | WDSR-B | 0.37M | 34.409 |
| 8 | EDSR | 0.78M | 34.457 |
| 8 | WDSR-A | 0.60M | 34.541 |
| 8 | WDSR-B | 0.60M | 34.536 |

Comparisons of EDSR and our proposed WDSR-A, WDSR-B using identical settings to [EDSR baseline model](https://github.com/thstkdgus35/EDSR-PyTorch) for image bicubic x2 super-resolution on DIV2K dataset.

## WDSR Network Architecture

Left: vanilla residual block in EDSR. Middle: **wide activation**. Right: **wider activation with linear low-rank convolution**. The proposed wide-activation blocks, WDSR-A and WDSR-B, share similar merits with [MobileNet V2](https://arxiv.org/abs/1801.04381) but use different architectures and achieve much better PSNR.

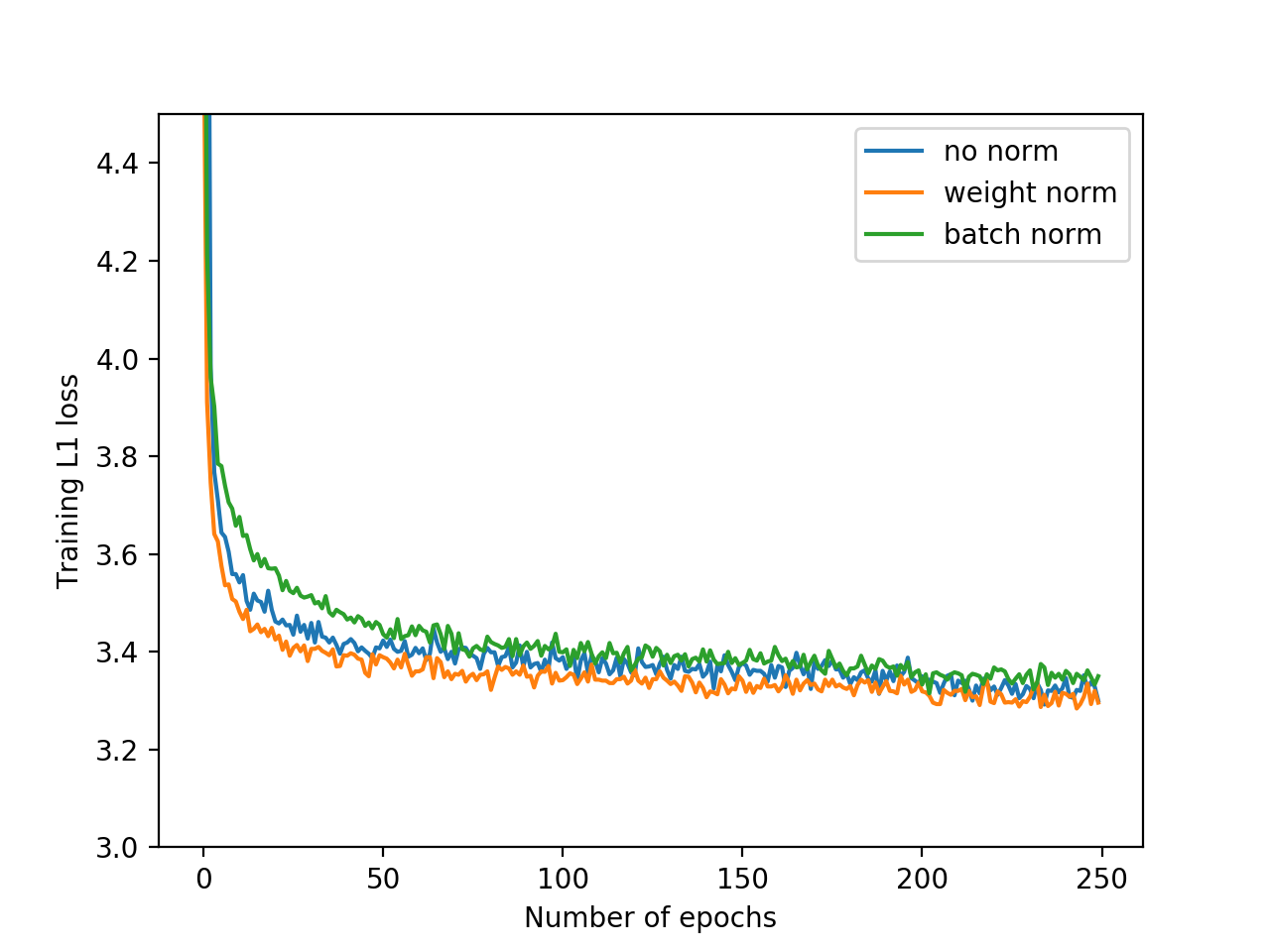

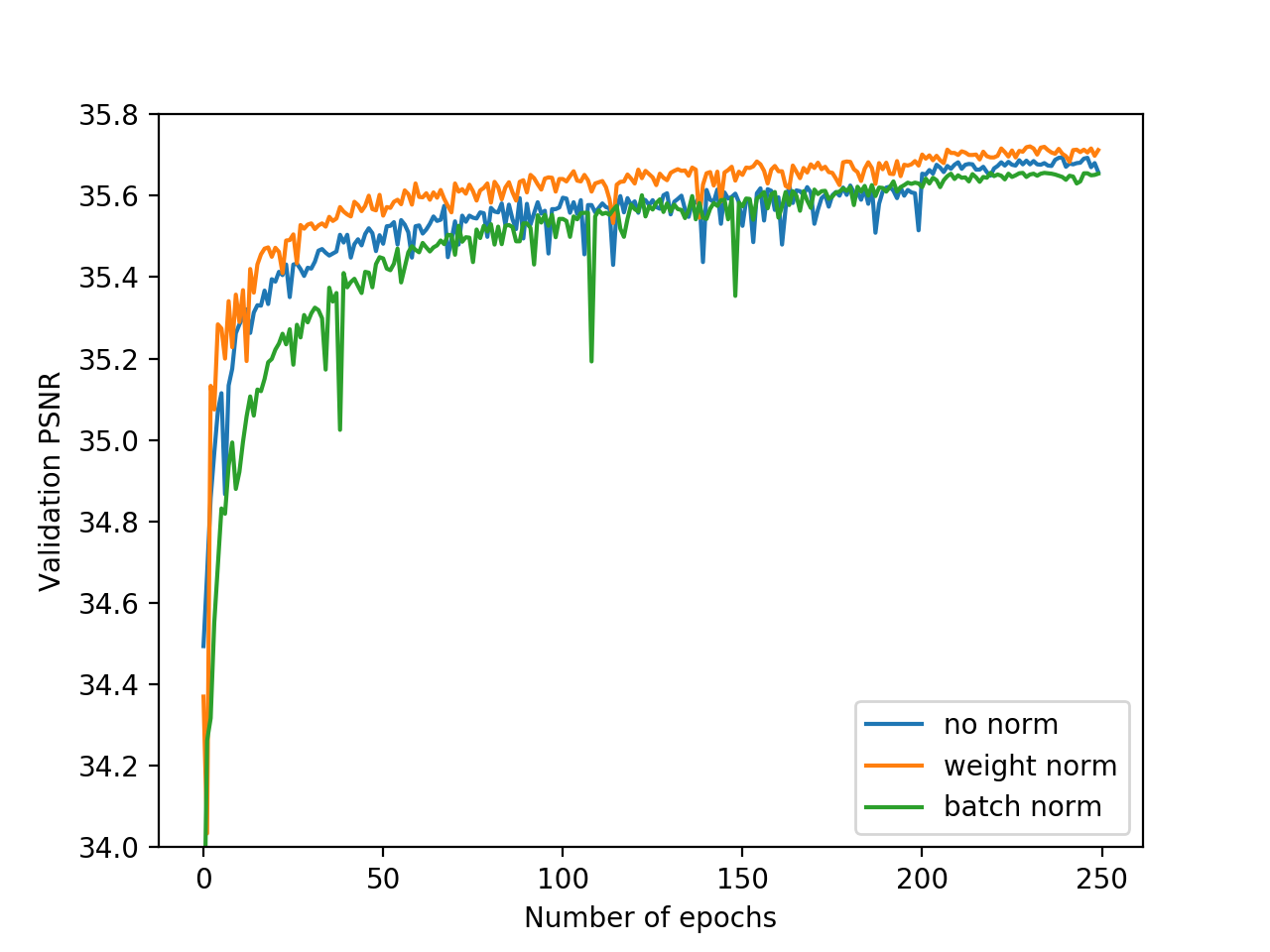
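To make the trade-off between block width and parameter count concrete, the sketch below counts convolution parameters for the three block types. It is a rough illustration under stated assumptions, not the repository's configuration. The layer layouts are one plausible reading of the description above: 3x3 expand then 3x3 reduce for WDSR-A, and 1x1 expand, 1x1 low-rank reduce, then 3x3 for WDSR-B. The channel widths, the expansion factors, and the low-rank ratio are hypothetical. Extra parameters introduced by weight normalization are ignored.

```python
def conv_params(c_in, c_out, kernel, bias=True):
    """Parameter count of a 2D convolution with a square kernel."""
    return c_in * c_out * kernel * kernel + (c_out if bias else 0)


def edsr_block_params(n_feats):
    """Vanilla residual block: two 3x3 convs at constant width."""
    return 2 * conv_params(n_feats, n_feats, 3)


def wdsr_a_block_params(n_feats, expand):
    """Assumed WDSR-A layout: 3x3 expand, ReLU, 3x3 reduce."""
    wide = n_feats * expand
    return conv_params(n_feats, wide, 3) + conv_params(wide, n_feats, 3)


def wdsr_b_block_params(n_feats, expand, linear):
    """Assumed WDSR-B layout: 1x1 expand, ReLU, 1x1 low-rank reduce, 3x3 conv."""
    wide = n_feats * expand
    low = int(n_feats * linear)
    return (conv_params(n_feats, wide, 1)
            + conv_params(wide, low, 1)
            + conv_params(low, n_feats, 3))


if __name__ == "__main__":
    # Hypothetical widths chosen only to illustrate the comparison.
    print("EDSR   (64 wide before ReLU): ", edsr_block_params(64))
    print("WDSR-A (128 wide before ReLU):", wdsr_a_block_params(32, 4))
    print("WDSR-B (192 wide before ReLU):", wdsr_b_block_params(32, 6, 0.8))
```

With these assumed widths, the WDSR-A block feeds twice as many channels into the activation as the EDSR block at nearly the same parameter count, and the WDSR-B block widens the activation further while using fewer parameters.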

## Weight Normalization vs. Batch Normalization and No Normalization

Training loss and validation PSNR with weight normalization, batch normalization or no normalization. Training with weight normalization has faster convergence and better accuracy.
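As a reminder of what weight normalization does, it reparameterizes each weight vector as `w = g * v / ||v||`, so the length `g` and the direction `v` are learned separately. The sketch below shows that reparameterization on a plain Python list. It is illustrative only: the function name `weight_norm` is hypothetical, and how the repository applies weight normalization to its convolution layers is not shown here.

```python
import math


def weight_norm(v, g):
    """Return w = g * v / ||v|| for a direction vector v and a scalar gain g."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("direction vector must be non-zero")
    return [g * x / norm for x in v]


if __name__ == "__main__":
    w = weight_norm([3.0, 4.0], g=2.0)
    # The Euclidean length of w equals g regardless of the scale of v.
    print(w, math.sqrt(sum(x * x for x in w)))
```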

## Other Implementations

- [TensorFlow-WDSR](https://github.com/ychfan/tf_estimator_barebone/blob/master/docs/super_resolution.md) (official)

- [Keras-WDSR](https://github.com/krasserm/wdsr) By [Martin Krasser](https://github.com/krasserm)

## Citing

Please consider citing WDSR for image super-resolution and compression if you find it helpful.

```
@article{yu2018wide,
  title={Wide Activation for Efficient and Accurate Image Super-Resolution},
  author={Yu, Jiahui and Fan, Yuchen and Yang, Jianchao and Xu, Ning and Wang, Xinchao and Huang, Thomas S},
  journal={arXiv preprint arXiv:1808.08718},
  year={2018}
}

@inproceedings{fan2018wide,
  title={Wide-activated Deep Residual Networks based Restoration for BPG-compressed Images},
  author={Fan, Yuchen and Yu, Jiahui and Huang, Thomas S},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops},
  pages={2621--2624},
  year={2018}
}
```