https://github.com/jina-ai/dalle-flow

🌊 A Human-in-the-Loop workflow for creating HD images from text

https://github.com/jina-ai/dalle-flow

dalle dalle-mega dalle-mini generative-art glid3 human-in-the-loop jina neural-search openai swinir

Last synced: 6 months ago

JSON representation

🌊 A Human-in-the-Loop workflow for creating HD images from text

- Host: GitHub

- URL: https://github.com/jina-ai/dalle-flow

- Owner: jina-ai

- Created: 2022-04-30T15:15:08.000Z (over 3 years ago)

- Default Branch: main

- Last Pushed: 2023-05-16T09:54:02.000Z (over 2 years ago)

- Last Synced: 2025-05-08T03:58:37.275Z (6 months ago)

- Topics: dalle, dalle-mega, dalle-mini, generative-art, glid3, human-in-the-loop, jina, neural-search, openai, swinir

- Language: Python

- Homepage: grpcs://dalle-flow.dev.jina.ai

- Size: 111 MB

- Stars: 2,836

- Watchers: 57

- Forks: 210

- Open Issues: 27

-

Metadata Files:

- Readme: README.md

- License: LICENSE

Awesome Lists containing this project

- StarryDivineSky - https://github.com/jina-ai/dalle-flow - Mega、GLID-3 XL 和 Stable Diffusion 生成候选图像,然后调用 CLIP-as-service 对候选图像进行排名。首选候选材料被送入 GLID-3 XL 进行扩散,这通常可以丰富纹理和背景。最后,通过 SwinIR 将候选图像放大到 1024x1024。DALL·E Flow 是在客户端-服务器架构中使用 Jina 构建的,这赋予了它高可扩展性、无阻塞流和现代 Pythonic 接口。客户端可以通过 gRPC/Websocket/HTTP 和 TLS 与服务器交互。为什么选择 Human-in-the-loop?生成艺术是一个创造性的过程。虽然 DALL·E 释放人们的创造力,拥有单一提示单一输出的 UX/UI 将想象力锁定在单一的可能性上,无论这个单一的结果多么精细,这都是糟糕的。DALL·E Flow 是单行代码的替代方案,通过将生成艺术正式化为迭代过程。 (其他_机器视觉 / 网络服务_其他)

- awesome-review-dalle-2 - DALLE-FLOW

- awesome-production-machine-learning - DALL·E Flow - ai/dalle-flow.svg?style=social) - DALL·E Flow is an interactive workflow for generating high-definition images from text prompt. (Data Pipeline)

- awesome-list - DALL·E Flow - A Human-in-the-Loop workflow for creating HD images from text. (Computer Vision / Image / Video Generation)

README

A Human-in-the-loop? workflow for creating HD images from text

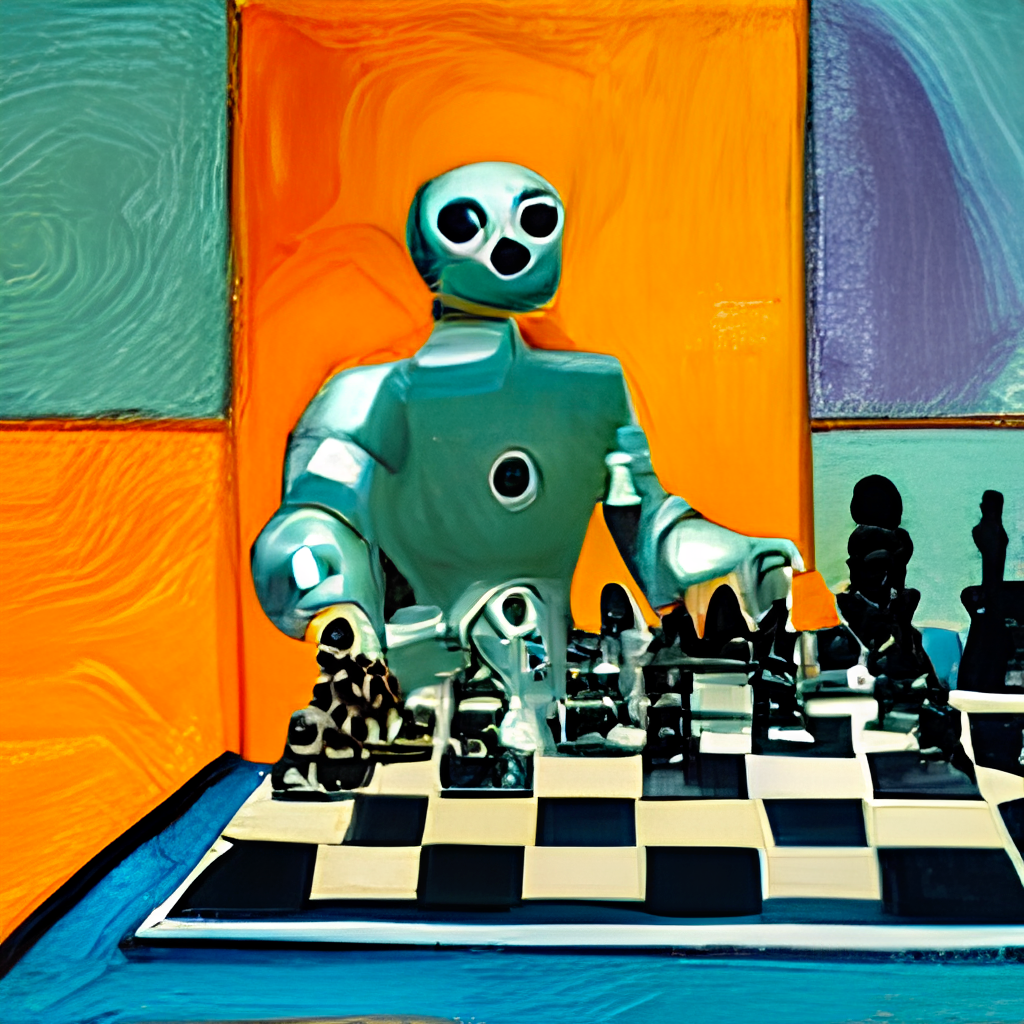

DALL·E Flow is an interactive workflow for generating high-definition images from text prompt. First, it leverages [DALL·E-Mega](https://github.com/borisdayma/dalle-mini), [GLID-3 XL](https://github.com/Jack000/glid-3-xl), and [Stable Diffusion](https://github.com/CompVis/stable-diffusion) to generate image candidates, and then calls [CLIP-as-service](https://github.com/jina-ai/clip-as-service) to rank the candidates w.r.t. the prompt. The preferred candidate is fed to [GLID-3 XL](https://github.com/Jack000/glid-3-xl) for diffusion, which often enriches the texture and background. Finally, the candidate is upscaled to 1024x1024 via [SwinIR](https://github.com/JingyunLiang/SwinIR).

DALL·E Flow is built with [Jina](https://github.com/jina-ai/jina) in a client-server architecture, which gives it high scalability, non-blocking streaming, and a modern Pythonic interface. Client can interact with the server via gRPC/Websocket/HTTP with TLS.

**Why Human-in-the-loop?** Generative art is a creative process. While recent advances of DALL·E unleash people's creativity, having a single-prompt-single-output UX/UI locks the imagination to a _single_ possibility, which is bad no matter how fine this single result is. DALL·E Flow is an alternative to the one-liner, by formalizing the generative art as an iterative procedure.

## Usage

DALL·E Flow is in client-server architecture.

- [Client usage](#Client)

- [Server usage, i.e. deploy your own server](#Server)

## Updates

- 🌟 **2022/10/27** [RealESRGAN upscalers](https://github.com/xinntao/Real-ESRGAN) have been added.

- ⚠️ **2022/10/26** To use CLIP-as-service available at `grpcs://api.clip.jina.ai:2096` (requires `jina >= v3.11.0`), you need first get an access token from [here](https://console.clip.jina.ai/get_started). See [Use the CLIP-as-service](#use-the-clip-as-service) for more details.

- 🌟 **2022/9/25** Automated [CLIP-based segmentation](https://github.com/timojl/clipseg) from a prompt has been added.

- 🌟 **2022/8/17** Text to image for [Stable Diffusion](https://github.com/CompVis/stable-diffusion) has been added. In order to use it you will need to agree to their ToS, download the weights, then enable the flag in docker or `flow_parser.py`.

- ⚠️ **2022/8/8** Started using CLIP-as-service as an [external executor](https://docs.jina.ai/fundamentals/flow/add-executors/#external-executors). Now you can easily [deploy your own CLIP executor](#run-your-own-clip) if you want. There is [a small breaking change](https://github.com/jina-ai/dalle-flow/pull/74/files#diff-b335630551682c19a781afebcf4d07bf978fb1f8ac04c6bf87428ed5106870f5R103) as a result of this improvement, so [please _reopen_ the notebook in Google Colab](https://colab.research.google.com/github/jina-ai/dalle-flow/blob/main/client.ipynb).

- ⚠️ **2022/7/6** Demo server migration to AWS EKS for better availability and robustness, **server URL is now changing to `grpcs://dalle-flow.dev.jina.ai`**. All connections are now with TLS encryption, [please _reopen_ the notebook in Google Colab](https://colab.research.google.com/github/jina-ai/dalle-flow/blob/main/client.ipynb).

- ⚠️ **2022/6/25** Unexpected downtime between 6/25 0:00 - 12:00 CET due to out of GPU quotas. The new server now has 2 GPUs, add healthcheck in client notebook.

- **2022/6/3** Reduce default number of images to 2 per pathway, 4 for diffusion.

- 🐳 **2022/6/21** [A prebuilt image is now available on Docker Hub!](https://hub.docker.com/r/jinaai/dalle-flow) This image can be run out-of-the-box on CUDA 11.6. Fix an upstream bug in CLIP-as-service.

- ⚠️ **2022/5/23** Fix an upstream bug in CLIP-as-service. This bug makes the 2nd diffusion step irrelevant to the given texts. New Dockerfile proved to be reproducible on a AWS EC2 `p2.x8large` instance.

- **2022/5/13b** Removing TLS as Cloudflare gives 100s timeout, making DALLE Flow in usable [Please _reopen_ the notebook in Google Colab!](https://colab.research.google.com/github/jina-ai/dalle-flow/blob/main/client.ipynb).

- 🔐 **2022/5/13** New Mega checkpoint! All connections are now with TLS, [Please _reopen_ the notebook in Google Colab!](https://colab.research.google.com/github/jina-ai/dalle-flow/blob/main/client.ipynb).

- 🐳 **2022/5/10** [A Dockerfile is added! Now you can easily deploy your own DALL·E Flow](#run-in-docker). New Mega checkpoint! Smaller memory-footprint, the whole Flow can now fit into **one GPU with 21GB memory**.

- 🌟 **2022/5/7** New Mega checkpoint & multiple optimization on GLID3: less memory-footprint, use `ViT-L/14@336px` from CLIP-as-service, `steps 100->200`.

- 🌟 **2022/5/6** DALL·E Flow just got updated! [Please _reopen_ the notebook in Google Colab!](https://colab.research.google.com/github/jina-ai/dalle-flow/blob/main/client.ipynb)

- Revised the first step: 16 candidates are generated, 8 from DALL·E Mega, 8 from GLID3-XL; then ranked by CLIP-as-service.

- Improved the flow efficiency: the overall speed, including diffusion and upscaling are much faster now!

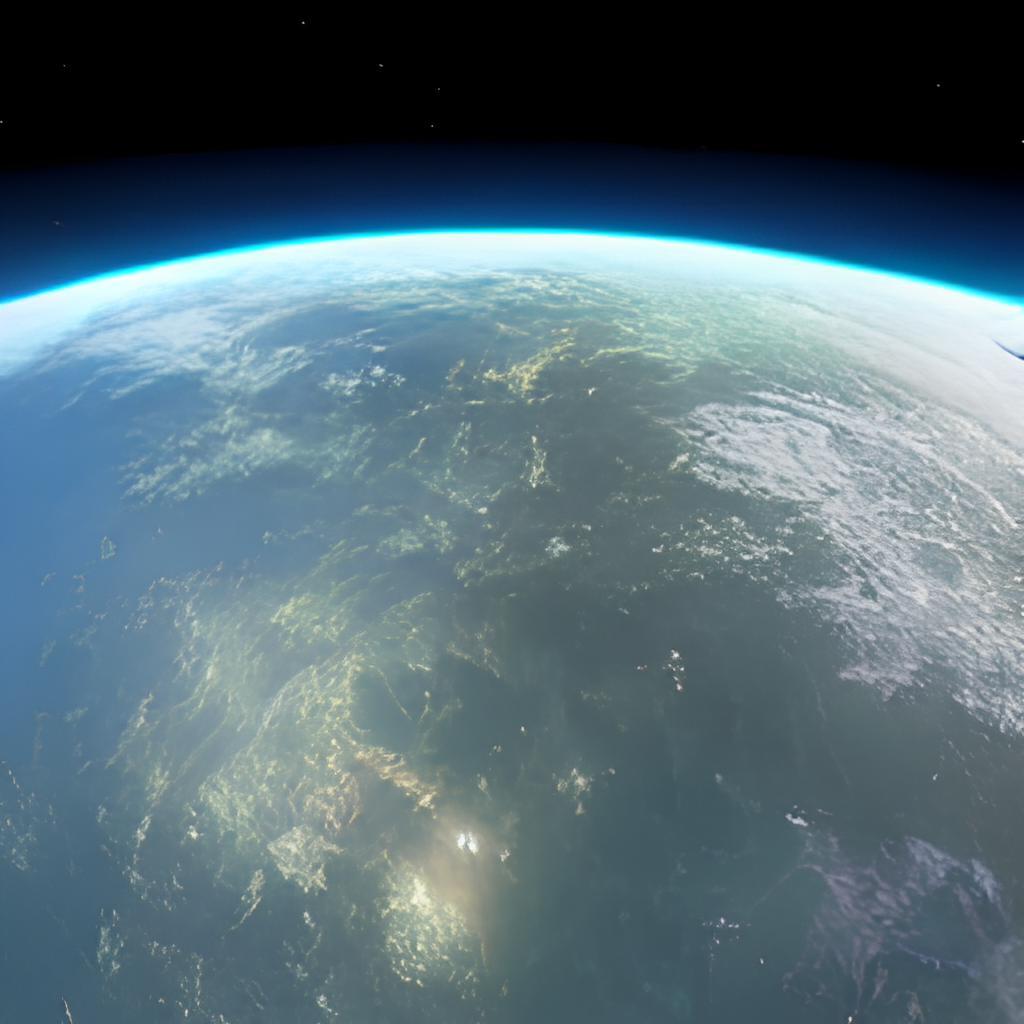

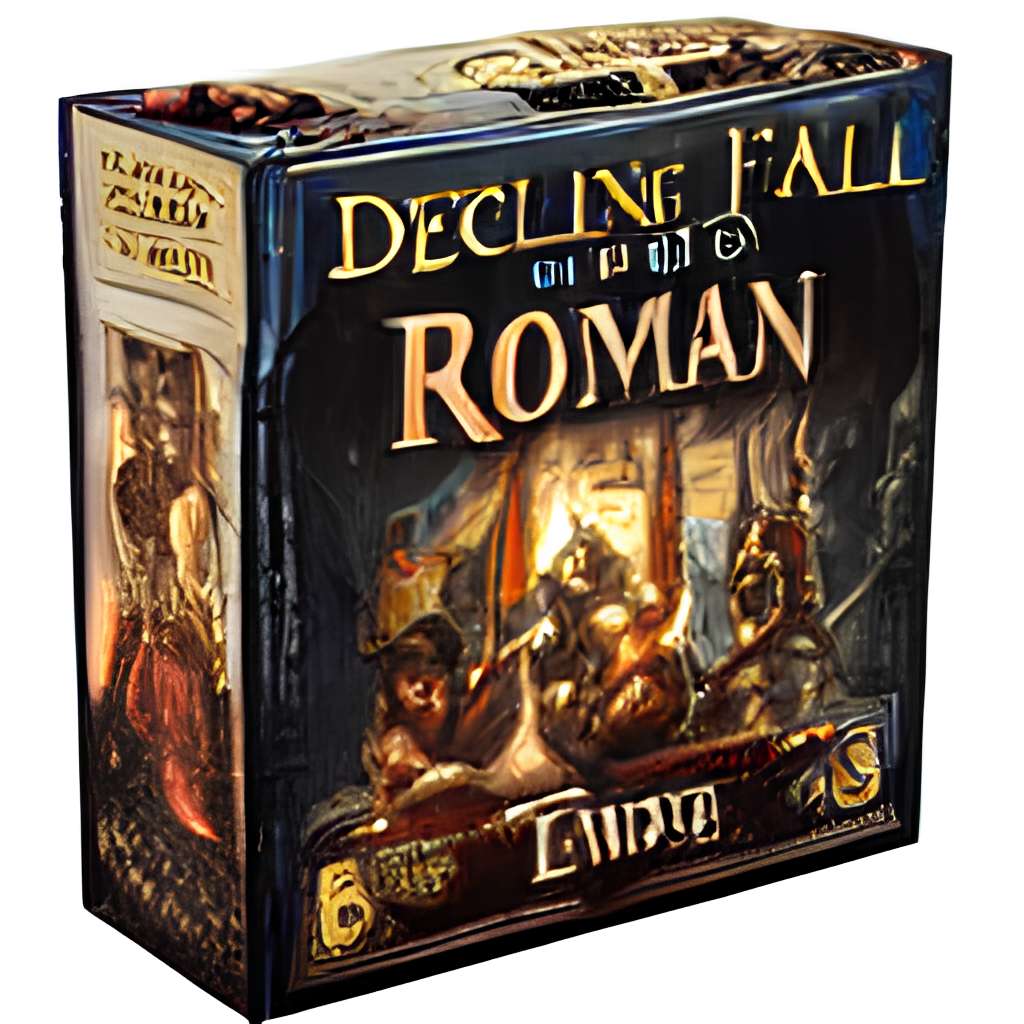

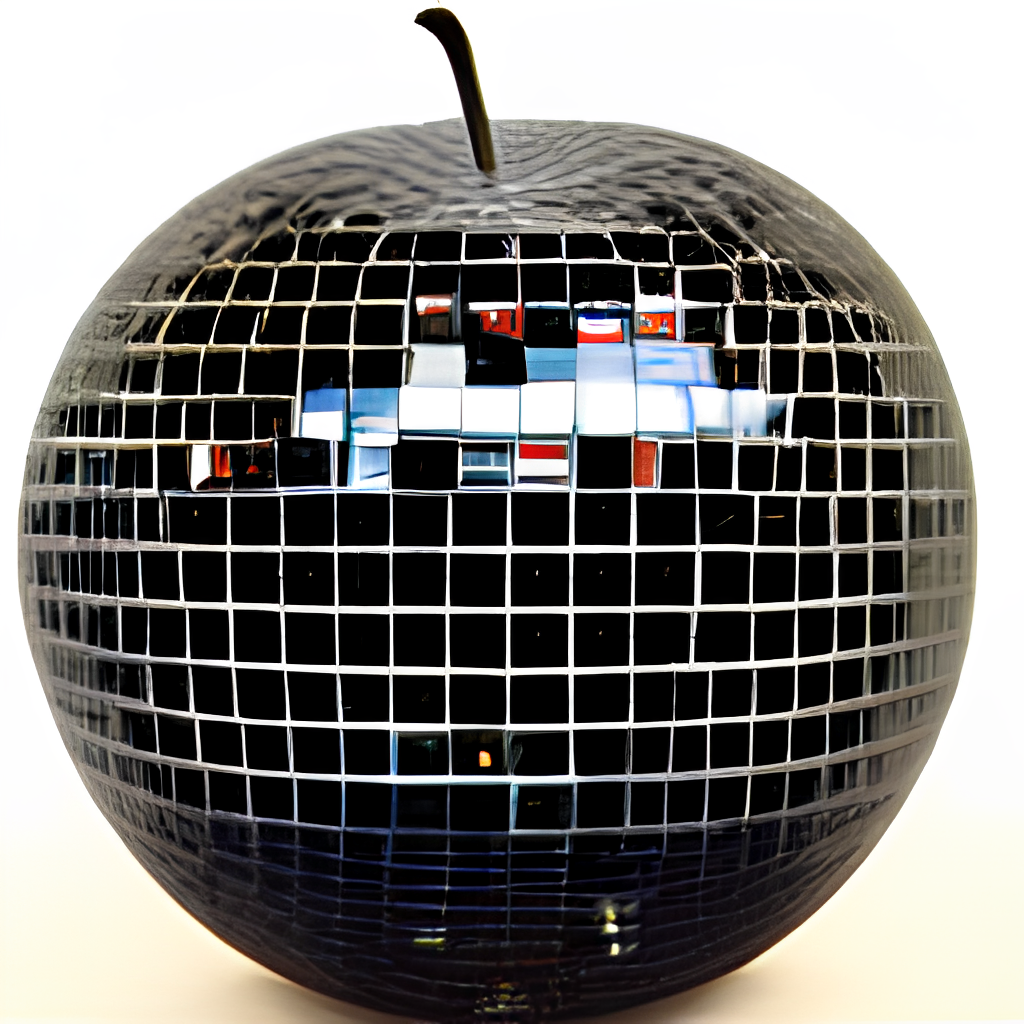

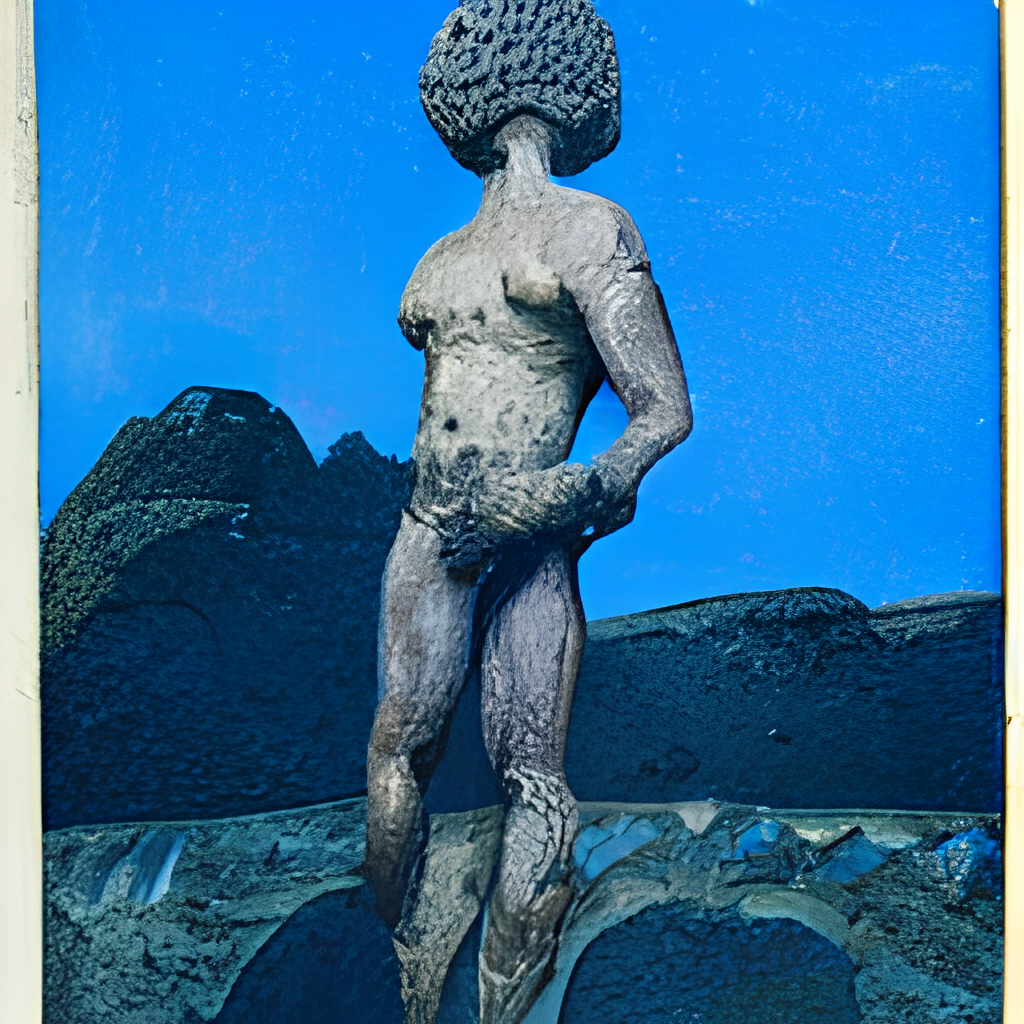

## Gallery

## Client

Using client is super easy. The following steps are best run in [Jupyter notebook](./client.ipynb) or [Google Colab](https://colab.research.google.com/github/jina-ai/dalle-flow/blob/main/client.ipynb).

You will need to install [DocArray](https://github.com/jina-ai/docarray) and [Jina](https://github.com/jina-ai/jina) first:

```bash

pip install "docarray[common]>=0.13.5" jina

```

We have provided a demo server for you to play:

> ⚠️ **Due to the massive requests, our server may be delay in response. Yet we are _very_ confident on keeping the uptime high.** You can also deploy your own server by [following the instruction here](#server).

```python

server_url = 'grpcs://dalle-flow.dev.jina.ai'

```

### Step 1: Generate via DALL·E Mega

Now let's define the prompt:

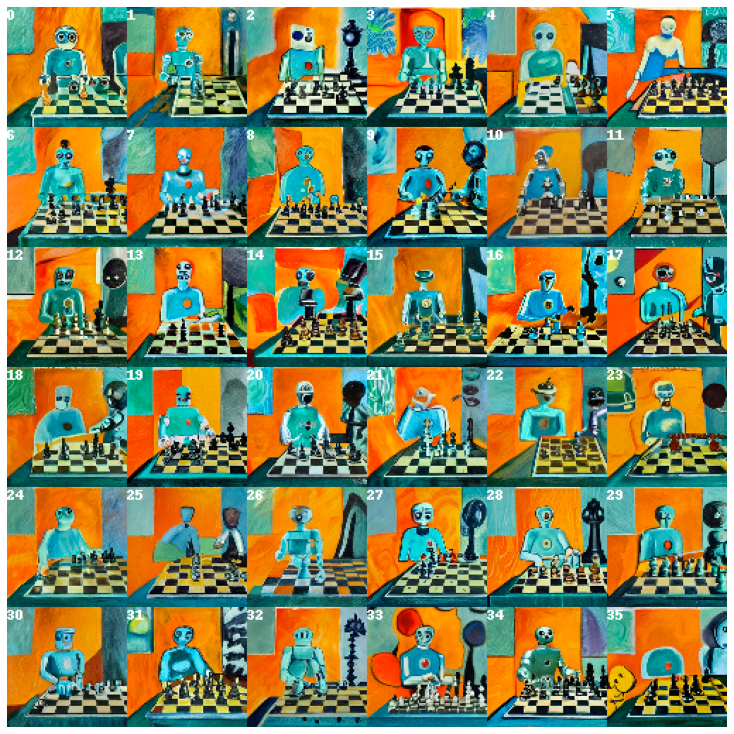

```python

prompt = 'an oil painting of a humanoid robot playing chess in the style of Matisse'

```

Let's submit it to the server and visualize the results:

```python

from docarray import Document

doc = Document(text=prompt).post(server_url, parameters={'num_images': 8})

da = doc.matches

da.plot_image_sprites(fig_size=(10,10), show_index=True)

```

Here we generate 24 candidates, 8 from DALLE-mega, 8 from GLID3 XL, and 8 from Stable Diffusion, this is as defined in `num_images`, which takes about ~2 minutes. You can use a smaller value if it is too long for you.

### Step 2: Select and refinement via GLID3 XL

The 24 candidates are sorted by [CLIP-as-service](https://github.com/jina-ai/clip-as-service), with index-`0` as the best candidate judged by CLIP. Of course, you may think differently. Notice the number in the top-left corner? Select the one you like the most and get a better view:

```python

fav_id = 3

fav = da[fav_id]

fav.embedding = doc.embedding

fav.display()

```

Now let's submit the selected candidates to the server for diffusion.

```python

diffused = fav.post(f'{server_url}', parameters={'skip_rate': 0.5, 'num_images': 36}, target_executor='diffusion').matches

diffused.plot_image_sprites(fig_size=(10,10), show_index=True)

```

This will give 36 images based on the selected image. You may allow the model to improvise more by giving `skip_rate` a near-zero value, or a near-one value to force its closeness to the given image. The whole procedure takes about ~2 minutes.

### Step 3: Select and upscale via SwinIR

Select the image you like the most, and give it a closer look:

```python

dfav_id = 34

fav = diffused[dfav_id]

fav.display()

```

Finally, submit to the server for the last step: upscaling to 1024 x 1024px.

```python

fav = fav.post(f'{server_url}/upscale')

fav.display()

```

That's it! It is _the one_. If not satisfied, please repeat the procedure.

Btw, DocArray is a powerful and easy-to-use data structure for unstructured data. It is super productive for data scientists who work in cross-/multi-modal domain. To learn more about DocArray, [please check out the docs](https://docs.jina.ai).

## Server

You can host your own server by following the instruction below.

### Hardware requirements

DALL·E Flow needs one GPU with 21GB VRAM at its peak. All services are squeezed into this one GPU, this includes (roughly)

- DALLE ~9GB

- GLID Diffusion ~6GB

- Stable Diffusion ~8GB (batch_size=4 in `config.yml`, 512x512)

- SwinIR ~3GB

- CLIP ViT-L/14-336px ~3GB

The following reasonable tricks can be used for further reducing VRAM:

- SwinIR can be moved to CPU (-3GB)

- CLIP can be delegated to [CLIP-as-service free server](https://console.clip.jina.ai/get_started) (-3GB)

It requires at least 50GB free space on the hard drive, mostly for downloading pretrained models.

High-speed internet is required. Slow/unstable internet may throw frustrating timeout when downloading models.

CPU-only environment is not tested and likely won't work. Google Colab is likely throwing OOM hence also won't work.

### Server architecture

If you have installed Jina, the above flowchart can be generated via:

```bash

# pip install jina

jina export flowchart flow.yml flow.svg

```

### Stable Diffusion weights

If you want to use Stable Diffusion, you will first need to register an account on the website [Huggingface](https://huggingface.co/) and agree to the terms and conditions for the model. After logging in, you can find the version of the model required by going here:

[CompVis / sd-v1-5-inpainting.ckpt](https://huggingface.co/runwayml/stable-diffusion-inpainting/blob/main/sd-v1-5-inpainting.ckpt)

Under the **Download the Weights** section, click the link for `sd-v1-x.ckpt`. The latest weights at the time of writing are `sd-v1-5.ckpt`.

**DOCKER USERS**: Put this file into a folder named `ldm/stable-diffusion-v1` and rename it `model.ckpt`. Follow the instructions below carefully because SD is not enabled by default.

**NATIVE USERS**: Put this file into `dalle/stable-diffusion/models/ldm/stable-diffusion-v1/model.ckpt` after finishing the rest of the steps under "Run natively". Follow the instructions below carefully because SD is not enabled by default.

### Run in Docker

#### Prebuilt image

We have provided [a prebuilt Docker image](https://hub.docker.com/r/jinaai/dalle-flow) that can be pull directly.

```bash

docker pull jinaai/dalle-flow:latest

```

#### Build it yourself

We have provided [a Dockerfile](https://github.com/jina-ai/dalle-flow/blob/main/Dockerfile) which allows you to run a server out of the box.

Our Dockerfile is using CUDA 11.6 as the base image, you may want to adjust it according to your system.

```bash

git clone https://github.com/jina-ai/dalle-flow.git

cd dalle-flow

docker build --build-arg GROUP_ID=$(id -g ${USER}) --build-arg USER_ID=$(id -u ${USER}) -t jinaai/dalle-flow .

```

The building will take 10 minutes with average internet speed, which results in a 18GB Docker image.

#### Run container

To run it, simply do:

```bash

docker run -p 51005:51005 \

-it \

-v $HOME/.cache:/home/dalle/.cache \

--gpus all \

jinaai/dalle-flow

```

Alternatively, you may also run with some workflows enabled or disabled to prevent out-of-memory crashes. To do that, pass one of these environment variables:

```

DISABLE_DALLE_MEGA

DISABLE_GLID3XL

DISABLE_SWINIR

ENABLE_STABLE_DIFFUSION

ENABLE_CLIPSEG

ENABLE_REALESRGAN

```

For example, if you would like to disable GLID3XL workflows, run:

```bash

docker run -e DISABLE_GLID3XL='1' \

-p 51005:51005 \

-it \

-v $HOME/.cache:/home/dalle/.cache \

--gpus all \

jinaai/dalle-flow

```

- The first run will take ~10 minutes with average internet speed.

- `-v $HOME/.cache:/root/.cache` avoids repeated model downloading on every docker run.

- The first part of `-p 51005:51005` is your host public port. Make sure people can access this port if you are serving publicly. The second par of it is [the port defined in flow.yml](https://github.com/jina-ai/dalle-flow/blob/e7e313522608668daeec1b7cd84afe56e5b19f1e/flow.yml#L4).

- If you want to use Stable Diffusion, it must be enabled manually with the `ENABLE_STABLE_DIFFUSION`.

- If you want to use clipseg, it must be enabled manually with the `ENABLE_CLIPSEG`.

- If you want to use RealESRGAN, it must be enabled manually with the `ENABLE_REALESRGAN`.

#### Special instructions for Stable Diffusion and Docker

**Stable Diffusion may only be enabled if you have downloaded the weights and make them available as a virtual volume while enabling the environmental flag (`ENABLE_STABLE_DIFFUSION`) for SD**.

You should have previously put the weights into a folder named `ldm/stable-diffusion-v1` and labeled them `model.ckpt`. Replace `YOUR_MODEL_PATH/ldm` below with the path on your own system to pipe the weights into the docker image.

```bash

docker run -e ENABLE_STABLE_DIFFUSION="1" \

-e DISABLE_DALLE_MEGA="1" \

-e DISABLE_GLID3XL="1" \

-p 51005:51005 \

-it \

-v YOUR_MODEL_PATH/ldm:/dalle/stable-diffusion/models/ldm/ \

-v $HOME/.cache:/home/dalle/.cache \

--gpus all \

jinaai/dalle-flow

```

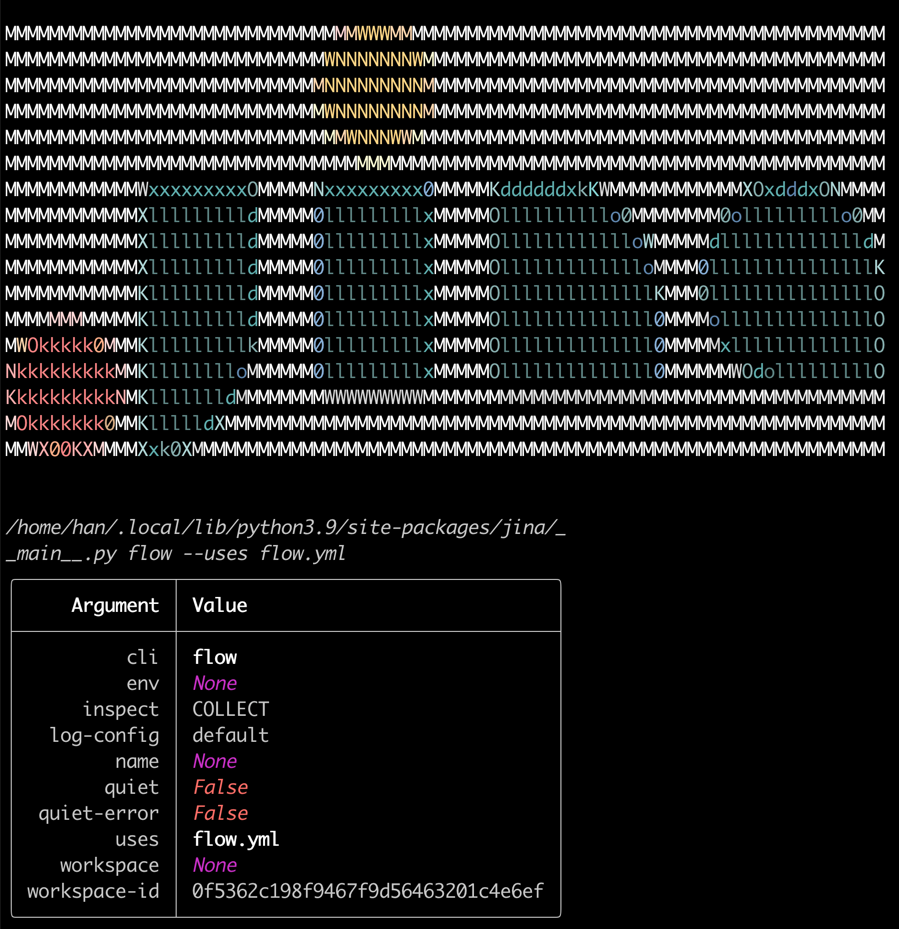

You should see the screen like following once running:

Note that unlike running natively, running inside Docker may give less vivid progressbar, color logs, and prints. This is due to the limitations of the terminal in a Docker container. It does not affect the actual usage.

### Run natively

Running natively requires some manual steps, but it is often easier to debug.

#### Clone repos

```bash

mkdir dalle && cd dalle

git clone https://github.com/jina-ai/dalle-flow.git

git clone https://github.com/jina-ai/SwinIR.git

git clone --branch v0.0.15 https://github.com/AmericanPresidentJimmyCarter/stable-diffusion.git

git clone https://github.com/CompVis/latent-diffusion.git

git clone https://github.com/jina-ai/glid-3-xl.git

git clone https://github.com/timojl/clipseg.git

```

You should have the following folder structure:

```text

dalle/

|

|-- Real-ESRGAN/

|-- SwinIR/

|-- clipseg/

|-- dalle-flow/

|-- glid-3-xl/

|-- latent-diffusion/

|-- stable-diffusion/

```

#### Install auxiliary repos

```bash

cd dalle-flow

python3 -m virtualenv env

source env/bin/activate && cd -

pip install torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cu116

pip install numpy tqdm pytorch_lightning einops numpy omegaconf

pip install https://github.com/crowsonkb/k-diffusion/archive/master.zip

pip install git+https://github.com/AmericanPresidentJimmyCarter/stable-diffusion.git@v0.0.15

pip install basicsr facexlib gfpgan

pip install realesrgan

pip install https://github.com/AmericanPresidentJimmyCarter/xformers-builds/raw/master/cu116/xformers-0.0.14.dev0-cp310-cp310-linux_x86_64.whl && \

cd latent-diffusion && pip install -e . && cd -

cd stable-diffusion && pip install -e . && cd -

cd SwinIR && pip install -e . && cd -

cd glid-3-xl && pip install -e . && cd -

cd clipseg && pip install -e . && cd -

```

There are couple models we need to download for GLID-3-XL if you are using that:

```bash

cd glid-3-xl

wget https://dall-3.com/models/glid-3-xl/bert.pt

wget https://dall-3.com/models/glid-3-xl/kl-f8.pt

wget https://dall-3.com/models/glid-3-xl/finetune.pt

cd -

```

Both `clipseg` and `RealESRGAN` require you to set a correct cache folder path,

typically something like $HOME/.

#### Install flow

```bash

cd dalle-flow

pip install -r requirements.txt

pip install jax~=0.3.24

```

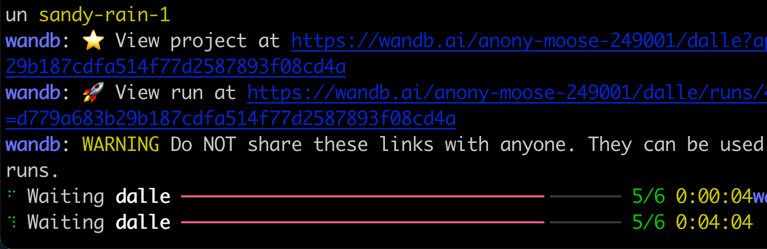

### Start the server

Now you are under `dalle-flow/`, run the following command:

```bash

# Optionally disable some generative models with the following flags when

# using flow_parser.py:

# --disable-dalle-mega

# --disable-glid3xl

# --disable-swinir

# --enable-stable-diffusion

python flow_parser.py

jina flow --uses flow.tmp.yml

```

You should see this screen immediately:

On the first start it will take ~8 minutes for downloading the DALL·E mega model and other necessary models. The proceeding runs should only take ~1 minute to reach the success message.

When everything is ready, you will see:

Congrats! Now you should be able to [run the client](#client).

You can modify and extend the server flow as you like, e.g. changing the model, adding persistence, or even auto-posting to Instagram/OpenSea. With Jina and DocArray, you can easily make DALL·E Flow [cloud-native and ready for production](https://github.com/jina-ai/jina).

### Use the CLIP-as-service

To reduce the usage of vRAM, you can use the `CLIP-as-service` as an external executor freely available at `grpcs://api.clip.jina.ai:2096`.

First, make sure you have created an access token from [console website](https://console.clip.jina.ai/get_started), or CLI as following

```bash

jina auth token create -e

```

Then, you need to change the executor related configs (`host`, `port`, `external`, `tls` and `grpc_metadata`) from [`flow.yml`](./flow.yml).

```yaml

...

- name: clip_encoder

uses: jinahub+docker://CLIPTorchEncoder/latest-gpu

host: 'api.clip.jina.ai'

port: 2096

tls: true

external: true

grpc_metadata:

authorization: ""

needs: [gateway]

...

- name: rerank

uses: jinahub+docker://CLIPTorchEncoder/latest-gpu

host: 'api.clip.jina.ai'

port: 2096

uses_requests:

'/': rank

tls: true

external: true

grpc_metadata:

authorization: ""

needs: [dalle, diffusion]

```

You can also use the `flow_parser.py` to automatically generate and run the flow with using the `CLIP-as-service` as external executor:

```bash

python flow_parser.py --cas-token "'

jina flow --uses flow.tmp.yml

```

> ⚠️ `grpc_metadata` is only available after Jina `v3.11.0`. If you are using an older version, please upgrade to the latest version.

Now, you can use the free `CLIP-as-service` in your flow.

## Support

- To extend DALL·E Flow you will need to get familiar with [Jina](https://github.com/jina-ai/jina) and [DocArray](https://github.com/jina-ai/docarray).

- Join our [Discord community](https://discord.jina.ai) and chat with other community members about ideas.

- Join our [Engineering All Hands](https://youtube.com/playlist?list=PL3UBBWOUVhFYRUa_gpYYKBqEAkO4sxmne) meet-up to discuss your use case and learn Jina's new features.

- **When?** The second Tuesday of every month

- **Where?**

Zoom ([see our public events calendar](https://calendar.google.com/calendar/embed?src=c_1t5ogfp2d45v8fit981j08mcm4%40group.calendar.google.com&ctz=Europe%2FBerlin)/[.ical](https://calendar.google.com/calendar/ical/c_1t5ogfp2d45v8fit981j08mcm4%40group.calendar.google.com/public/basic.ics))

and [live stream on YouTube](https://youtube.com/c/jina-ai)

- Subscribe to the latest video tutorials on our [YouTube channel](https://youtube.com/c/jina-ai)

## Join Us

DALL·E Flow is backed by [Jina AI](https://jina.ai) and licensed under [Apache-2.0](./LICENSE). [We are actively hiring](https://jobs.jina.ai) AI engineers, solution engineers to build the next neural search ecosystem in open-source.