https://github.com/jman4162/pytorch-vision-transformers-vit

Explore fine-tuning the Vision Transformer (ViT) model for object recognition in robotics using PyTorch. This tutorial covers setup, training, and evaluation processes, achieving impressive accuracy with practical resource constraints. Ideal for learners in AI and robotics.

https://github.com/jman4162/pytorch-vision-transformers-vit

ai-tutorial computer-vision deep-learning engineering image-classification machine-learning object-recognition pytorch pytorch-tutorial robotics vision-transformer vision-transformer-image-classification vit

Last synced: 8 months ago

JSON representation

Explore fine-tuning the Vision Transformer (ViT) model for object recognition in robotics using PyTorch. This tutorial covers setup, training, and evaluation processes, achieving impressive accuracy with practical resource constraints. Ideal for learners in AI and robotics.

- Host: GitHub

- URL: https://github.com/jman4162/pytorch-vision-transformers-vit

- Owner: jman4162

- License: mit

- Created: 2024-04-23T18:35:19.000Z (over 1 year ago)

- Default Branch: main

- Last Pushed: 2024-04-23T18:43:18.000Z (over 1 year ago)

- Last Synced: 2025-01-13T11:17:31.048Z (9 months ago)

- Topics: ai-tutorial, computer-vision, deep-learning, engineering, image-classification, machine-learning, object-recognition, pytorch, pytorch-tutorial, robotics, vision-transformer, vision-transformer-image-classification, vit

- Language: Jupyter Notebook

- Homepage:

- Size: 19.5 KB

- Stars: 0

- Watchers: 1

- Forks: 0

- Open Issues: 0

-

Metadata Files:

- Readme: README.md

- License: LICENSE

Awesome Lists containing this project

README

# PyTorch-Vision-Transformers-ViT

Explore fine-tuning the Vision Transformer (ViT) model for object recognition in robotics using PyTorch. This tutorial covers setup, training, and evaluation processes, achieving impressive accuracy with practical resource constraints. Ideal for learners in AI and robotics.

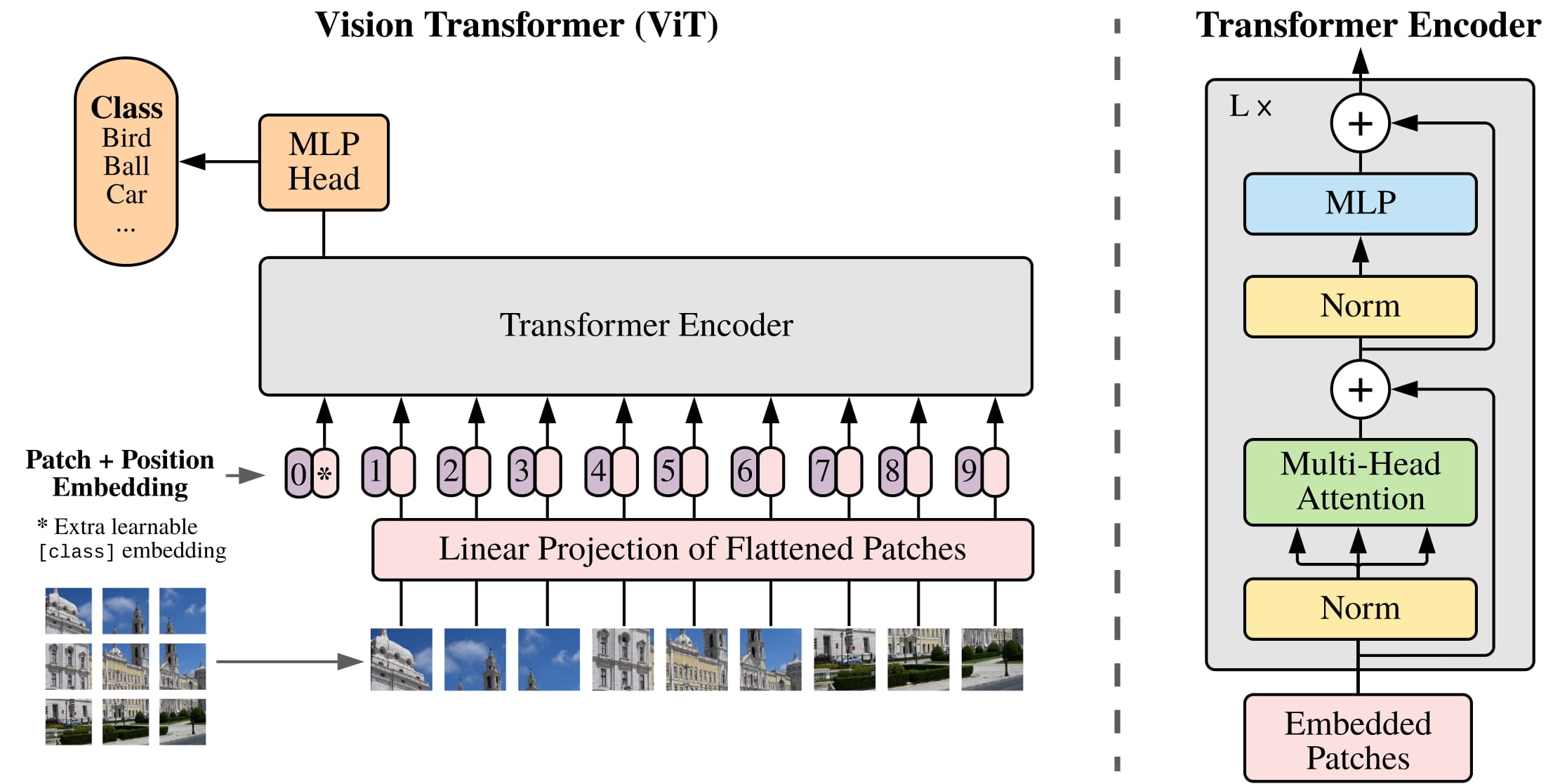

## Fine-Tuning a Vision Transformer (ViT) for Robotics Applications

This tutorial demonstrates how to fine-tune a Vision Transformer (ViT) model, specifically the `vit_b_16`, to recognize objects in the context of robotics applications using PyTorch.

### Overview

Vision Transformers have recently emerged as a powerful class of models in computer vision, rivaling traditional CNNs in various tasks. This tutorial aims to leverage the `vit_b_16` model, pre-trained on ImageNet, and fine-tune it for a specific object recognition task relevant to robotics.

### Prerequisites

Before you begin, ensure you have the following installed:

- Python 3.8 or later

- PyTorch 1.7 or later

- torchvision

- CUDA Toolkit (if utilizing GPU acceleration)

### Setup

Clone this repository to get started:

```bash

git clone https://github.com/jman4162/ViT-Robotics-Tutorial.git

cd ViT-Robotics-Tutorial

```

### Tutorial Structure

- **Data Preparation**: How to prepare and load data using PyTorch.

- **Model Fine-Tuning**: Adjust the Vision Transformer to better suit our specific task.

- **Training**: Run the training loop, implementing effective strategies for learning.

- **Evaluation**: Assess the model's performance on unseen data.

### Usage

To run the tutorial, execute the following command:

```bash

python train.py

```

### Results

Our model achieved an accuracy of 97.65% on the test set, showcasing the effectiveness of Vision Transformers when applied to robotics-related tasks, even with limited computational resources.

## Contributing

Contributions to this tutorial are welcome! Please feel free to fork the repository, make changes, and submit a pull request.

## License

Distributed under the MIT License. See `LICENSE` for more information.