https://github.com/joerihermans/constraining-dark-matter-with-stellar-streams-and-ml

Probing the nature of dark matter by inferring the dark matter particle mass with machine learning and stellar streams.


astrophysics dark-matter deep-learning likelihood-free-inference machine-learning simulation-based-inference


- Host: GitHub

- URL: https://github.com/joerihermans/constraining-dark-matter-with-stellar-streams-and-ml

- Owner: JoeriHermans

- License: bsd-3-clause

- Created: 2019-10-07T06:53:52.000Z (over 5 years ago)

- Default Branch: master

- Last Pushed: 2022-12-08T11:48:45.000Z (over 2 years ago)

- Last Synced: 2024-12-30T22:03:42.413Z (5 months ago)

- Topics: astrophysics, dark-matter, deep-learning, likelihood-free-inference, machine-learning, simulation-based-inference

- Language: Jupyter Notebook

- Homepage:

- Size: 12.8 MB

- Stars: 17

- Watchers: 6

- Forks: 3

- Open Issues: 12


Metadata Files:

- Readme: README.md

- License: LICENSE


README

[arXiv:2011.14923](https://arxiv.org/abs/2011.14923)

[Launch the overview notebook on Binder](https://mybinder.org/v2/gh/JoeriHermans/constraining-dark-matter-with-stellar-streams-and-ml/master?filepath=notebooks%2F01_overview.ipynb)

We put forward several techniques and guidelines for the application of (amortized) neural simulation-based inference to scientific problems.
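The following is a minimal sketch of what an amortized ratio estimator does. It assumes a toy Gaussian simulator rather than the GD-1 model, and uses a logistic regression on hand-picked quadratic features in place of a neural network. A classifier is trained to tell dependent pairs (θ, x) ~ p(θ)p(x | θ) apart from independent pairs (θ, x) ~ p(θ)p(x). With balanced classes, its logit approximates log r(x | θ) = log p(x | θ) - log p(x), so the same trained model yields a posterior for any new observation without retraining. None of the names below come from the repository.

```python
import numpy as np

def sigmoid(z):
    # Overflow-free logistic function.
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def simulate(theta, rng):
    # Hypothetical toy simulator (not the GD-1 model): x ~ Normal(theta, 0.5^2).
    return theta + 0.5 * rng.standard_normal(theta.shape)

def features(theta, x):
    # Quadratic features: for this Gaussian toy model the exact log-ratio is
    # close to quadratic in (theta, x), so a linear classifier on them suffices.
    return np.stack([np.ones_like(theta), theta, x, theta * x, theta**2, x**2], axis=1)

def train_ratio_estimator(n=20_000, seed=0):
    rng = np.random.default_rng(seed)
    theta = rng.uniform(-3.0, 3.0, n)          # samples from the prior p(theta) = U(-3, 3)
    x = simulate(theta, rng)                   # dependent pairs ~ p(theta, x): label 1
    theta_shuffled = rng.permutation(theta)    # independent pairs ~ p(theta) p(x): label 0
    X = np.concatenate([features(theta, x), features(theta_shuffled, x)])
    y = np.concatenate([np.ones(n), np.zeros(n)])
    w = np.zeros(X.shape[1])
    for _ in range(20):                        # Newton (IRLS) steps for logistic regression
        p = sigmoid(X @ w)
        grad = X.T @ (p - y)
        hess = (X * (p * (1.0 - p))[:, None]).T @ X
        w -= np.linalg.solve(hess, grad)
    # The classifier logit approximates log r(x | theta).
    return lambda theta, x: features(theta, x) @ w

log_ratio = train_ratio_estimator()

# Amortized inference: evaluate the trained estimator for an observation on a grid.
grid = np.linspace(-3.0, 3.0, 601)
x_obs = 1.0
log_post = log_ratio(grid, np.full_like(grid, x_obs))  # uniform prior: posterior ∝ ratio
theta_map = grid[np.argmax(log_post)]
```

Because the prior is uniform, the log-posterior on its support equals the log-ratio up to a constant. For `x_obs = 1` the exact posterior is a Gaussian centred at 1 (standard deviation 0.5) truncated to [-3, 3], so `theta_map` should land close to 1.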

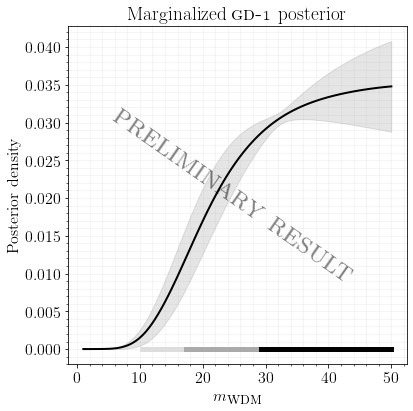

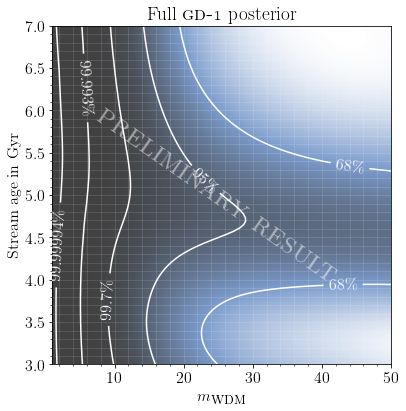

In this work we examine the relation between dark matter subhalo impacts and the observed stellar density variations in the GD-1 stellar stream to differentiate between [Warm Dark Matter](https://en.wikipedia.org/wiki/Warm_dark_matter) and [Cold Dark Matter](https://en.wikipedia.org/wiki/Cold_dark_matter).

> **Disclaimer**: Baryonic effects are not accounted for, see paper for details.

This repository contains the code to reproduce this work on a Slurm-enabled HPC cluster or on your local machine.

The Slurm arguments you would typically use in your batch submission scripts also run on your development machine, without requiring the Slurm binaries to be installed. Furthermore, our scripts automatically manage the Anaconda environment related to this work.
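How the scripts achieve this is not detailed here. One plausible approach, sketched purely as an assumption (all function names are hypothetical and not taken from the repository), is to read the `#SBATCH` directives of a batch script and emulate them locally. For example, a job array such as `--array=0-3` can be expanded into one run per task with `SLURM_ARRAY_TASK_ID` set. The sketch only understands comma-separated ids and ranges, and drops the `%` concurrency limit.

```python
import os
import re
import subprocess

def parse_sbatch_directives(script_text):
    """Collect '#SBATCH --key=value', '#SBATCH --key value' and '#SBATCH --flag' lines."""
    directives = {}
    for line in script_text.splitlines():
        match = re.match(r"#SBATCH\s+--([\w-]+)(?:[=\s]+(\S+))?", line.strip())
        if match:
            directives[match.group(1)] = match.group(2)  # None for bare flags
    return directives

def expand_array(spec):
    """Expand an array spec such as '0-3' or '1,4-5' into task ids ('%N' limits are dropped)."""
    spec = spec.split("%")[0]
    task_ids = []
    for part in spec.split(","):
        if "-" in part:
            first, last = part.split("-")
            task_ids.extend(range(int(first), int(last) + 1))
        else:
            task_ids.append(int(part))
    return task_ids

def local_task_environments(script_text):
    """Extra environment variables for each emulated task ([{}] if not an array job)."""
    spec = parse_sbatch_directives(script_text).get("array")
    if not spec:
        return [{}]
    return [{"SLURM_ARRAY_TASK_ID": str(i)} for i in expand_array(spec)]

def run_locally(script_path):
    """Run the batch script once per emulated task, sequentially, with plain bash."""
    with open(script_path) as handle:
        script_text = handle.read()
    for extra_env in local_task_environments(script_text):
        subprocess.run(["bash", script_path], env={**os.environ, **extra_env}, check=True)
```

For a script containing `#SBATCH --array=0-3`, `local_task_environments` returns four environments with `SLURM_ARRAY_TASK_ID` set to `"0"` through `"3"`, and `run_locally` executes the script four times. This works because bash treats the `#SBATCH` lines as ordinary comments. A fuller emulator would also need to interpret other directives, such as resource requests.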

## Table of contents

- [Demonstration notebooks](#demonstration-notebooks)

- [Requirements](#requirements)

- [Datasets and models](#datasets-and-models)

- [Usage](#usage)

- [Pipelines](#pipelines)

- [Notebooks](#notebooks)

- [Manuscripts](#manuscripts)

- [Citing](#citing-our-work)

## Demonstration notebooks

> **Note**. If you are viewing this repository shortly after release, the Binder links might not work yet. We are actively working on this.

In addition to the code related to the contents of this paper, we provide several demonstration notebooks to help you familiarize yourself with simulation-based inference.

| Short description | Render | Binder |
| ----------------- | ------ | ------ |
| Overview notebook with presimulated data and pretrained models | [[view]](notebooks/01_overview.ipynb) | [[launch]](https://mybinder.org/v2/gh/JoeriHermans/constraining-dark-matter-with-stellar-streams-and-ml/master?filepath=notebooks%2F01_overview.ipynb) |
| Toy problem to demonstrate the technique | [[view]](notebooks/02_toy.ipynb) | [[launch]](https://mybinder.org/v2/gh/JoeriHermans/constraining-dark-matter-with-stellar-streams-and-ml/master?filepath=notebooks%2F02_toy.ipynb) |
| Out-of-distribution or model misspecification detection | [[view]](notebooks/03_out_of_distribution.ipynb) | [[launch]](https://mybinder.org/v2/gh/JoeriHermans/constraining-dark-matter-with-stellar-streams-and-ml/master?filepath=notebooks%2F03_out_of_distribution.ipynb) |
| Changing the implicit prior of the ratio estimator through MCMC | [[view]](notebooks/04_prior.ipynb) | [[launch]](https://mybinder.org/v2/gh/JoeriHermans/constraining-dark-matter-with-stellar-streams-and-ml/master?filepath=notebooks%2F04_prior.ipynb) |
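The idea behind the last notebook can be sketched as follows. A ratio estimator approximates r(x | θ) = p(x | θ) / p(x), which equals the likelihood up to a factor that does not depend on θ. A posterior under a new prior p'(θ) can therefore be sampled by random-walk Metropolis-Hastings on log p'(θ) + log r(x | θ). In this sketch the trained estimator is replaced by the exact log-likelihood of a hypothetical toy model x ~ N(θ, 0.5²), and the new prior N(0, 1) is likewise an assumption. In practice the new prior should stay within the support of the prior used for training, since the estimator has never seen parameters outside it.

```python
import numpy as np

def log_ratio(theta, x):
    # Stand-in for a trained ratio estimator: exact log p(x | theta) for
    # x ~ N(theta, 0.5^2), without theta-independent terms (they cancel below).
    return -0.5 * ((x - theta) / 0.5) ** 2

def log_new_prior(theta):
    # Hypothetical new prior: standard normal, up to an additive constant.
    return -0.5 * theta**2

def metropolis_hastings(x_obs, n_steps=50_000, step=0.5, seed=0):
    rng = np.random.default_rng(seed)
    theta = 0.0
    log_target = log_new_prior(theta) + log_ratio(theta, x_obs)
    samples = np.empty(n_steps)
    for i in range(n_steps):
        proposal = theta + step * rng.standard_normal()  # symmetric random-walk proposal
        log_target_prop = log_new_prior(proposal) + log_ratio(proposal, x_obs)
        # Accept with probability min(1, target(proposal) / target(theta)).
        if np.log(rng.uniform()) < log_target_prop - log_target:
            theta, log_target = proposal, log_target_prop
        samples[i] = theta
    return samples
```

For this toy setup the exact posterior at `x_obs = 1` is N(0.8, 0.2). The mean and variance of `metropolis_hastings(1.0)[5_000:]` (after discarding burn-in) should therefore be close to 0.8 and 0.2.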

## Requirements

> **Required**. The project assumes you have a working Anaconda installation.

In order to execute this project, you need at least `40 GB` of available storage space. We do not recommend running the simulations on a single machine, as this would take about *60 years* to complete. On an HPC cluster, the simulations take about 2-3 weeks. Training all ratio estimators takes 1-2 days, depending on the availability of GPUs, and the diagnostics take roughly another day.
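Here is a back-of-the-envelope reading of these numbers. It assumes the speedup comes entirely from running simulations in parallel with perfect scaling, which is our interpretation rather than a figure from the paper. Under that assumption, compressing 60 years of single-machine time into 2-3 weeks corresponds to roughly a thousand to fifteen hundred concurrent simulations.

```python
DAYS_PER_YEAR = 365.25
DAYS_PER_WEEK = 7

def implied_speedup(single_machine_years, cluster_weeks):
    """Ratio of single-machine wall-clock time to cluster wall-clock time."""
    return single_machine_years * DAYS_PER_YEAR / (cluster_weeks * DAYS_PER_WEEK)

for weeks in (2, 3):
    print(f"{weeks} weeks on the cluster -> ~{implied_speedup(60, weeks):.0f}x speedup")
# 2 weeks on the cluster -> ~1565x speedup
# 3 weeks on the cluster -> ~1044x speedup
```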

### Installation of the Anaconda environment

If Anaconda is not installed yet, you can install Miniconda first:

```console
you@localhost:~ $ wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh
you@localhost:~ $ sh Miniconda3-latest-Linux-x86_64.sh
```

The project's environment can then be installed by executing the following in the root directory of the project:

```console
you@localhost:~ $ sh scripts/install.sh
```

This script installs several dependencies in a specific order to work around some quirks in Anaconda.

## Datasets and models

The required computational resources mentioned above might not be available to everyone. As such, the presimulated datasets and pretrained models can be made available on request by e-mailing [[email protected]](mailto:[email protected]), or by opening an issue in this GitHub repository.

## Usage

Simply execute `./run.sh -h` to display all available options, or `./run.sh` to install the Anaconda environment and dependencies related to this project.

A specific set of experiments can be executed by passing a comma-separated list of pipeline identifiers to the `-e` option:

```console
you@localhost:~ $ bash run.sh -e simulations,inference
```

If you update the `environment.yml` file by adding or removing dependencies, please run `bash run.sh -i` first. The script automatically synchronizes the changes with the Anaconda environment associated with this project.

## Pipelines

This section gives a quick overview of the pipelines (experiments) behind our results, with a link to a detailed description of each. As described in the [usage](#usage) section, the `identifier` plays an important role when the developer or end-user wishes to execute only a subset of the pipelines.

| Identifier | Short description | Link |
| -------------- | ----------------------------------------------------------- | --------------------------------------------------------- |
| *inference* | Analyses and plots. | [[details]](experiments/experiment-inference/pipeline.sh) |
| *simulations* | A pipeline for simulating the datasets and GD-1 mocks. | [[details]](experiments/experiment-simulations/pipeline.sh) |
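The following sketch makes the role of the identifiers concrete. It maps a comma-separated `-e` argument onto the pipeline scripts listed in the table and rejects unknown identifiers. It is a hypothetical Python illustration of the selection logic only. `run.sh` itself is a shell script and may behave differently, for example in how it orders or validates pipelines.

```python
# Identifier -> pipeline script, as listed in the table above.
PIPELINES = {
    "inference": "experiments/experiment-inference/pipeline.sh",
    "simulations": "experiments/experiment-simulations/pipeline.sh",
}

def select_pipelines(arg):
    """Turn e.g. 'simulations,inference' into the pipeline scripts to run, in the given order."""
    identifiers = [name.strip() for name in arg.split(",") if name.strip()]
    unknown = [name for name in identifiers if name not in PIPELINES]
    if unknown:
        raise ValueError(f"unknown pipeline identifier(s): {', '.join(unknown)}")
    return [PIPELINES[name] for name in identifiers]
```

With the argument from the usage example, `select_pipelines("simulations,inference")` returns the simulation pipeline first and the inference pipeline second. This order matters because the analyses consume the simulated datasets.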

## Notebooks

A non-exhaustive list of interesting notebooks in this repository that are not included in the main paper.

| Short description | Render |
| ----------------- | ------ |
| In this notebook we explore in an ad-hoc fashion how the neural network uses the high-level features in a stellar stream to differentiate between CDM and WDM. | [[view]](experiments/experiment-inference/edge-case.ipynb) |

## Manuscripts

The preprint is available at [`manuscript/preprint/main.pdf`](manuscript/preprint/main.pdf).

Our NeurIPS submission can be found at [`manuscript/neurips/main.pdf`](manuscript/neurips/main.pdf).

## Citing our work

If you use our code or methodology, please cite our paper:

```
TODO
```

and the original method paper published at ICML 2020:

```
@ARTICLE{hermansSBI,
  author = {{Hermans}, Joeri and {Begy}, Volodimir and {Louppe}, Gilles},
  title = "{Likelihood-free MCMC with Amortized Approximate Ratio Estimators}",
  journal = {arXiv e-prints},
  keywords = {Statistics - Machine Learning, Computer Science - Machine Learning},
  year = "2019",
  month = "Mar",
  eid = {arXiv:1903.04057},
  pages = {arXiv:1903.04057},
  archivePrefix = {arXiv},
  eprint = {1903.04057},
  primaryClass = {stat.ML},
  adsurl = {https://ui.adsabs.harvard.edu/abs/2019arXiv190304057H},
  adsnote = {Provided by the SAO/NASA Astrophysics Data System}
}
```