https://github.com/jolibrain/joliGEN

Generative AI Image and Video Toolset with GANs and Diffusion for Real-World Applications






- Host: GitHub

- URL: https://github.com/jolibrain/joliGEN

- Owner: jolibrain

- License: other

- Created: 2020-08-18T09:26:25.000Z (almost 5 years ago)

- Default Branch: master

- Last Pushed: 2025-02-12T16:39:53.000Z (4 months ago)

- Last Synced: 2025-04-12T12:54:33.909Z (2 months ago)

- Topics: augmented-reality, deep-learning, diffusion-models, gan, generative-model, image-generation, image-to-image, pytorch

- Language: Python

- Homepage: https://www.joligen.com

- Size: 13.5 MB

- Stars: 258

- Watchers: 8

- Forks: 37

- Open Issues: 22


Metadata Files:

- Readme: README.md

- Changelog: CHANGELOG.md

- License: LICENSE


README

[License: Apache 2.0](https://opensource.org/licenses/Apache-2.0)

[Docs options update workflow](https://github.com/jolibrain/joliGEN/actions/workflows/github-actions-doc-options-update.yml)

[Documentation](https://www.joligen.com/doc)

Generative AI Image & Video Toolset with GANs, Diffusion and Consistency Models for Real-World Applications

**JoliGEN** is an integrated framework for training custom generative AI image-to-image models.

Main Features:

- JoliGEN implements **GAN, Diffusion and Consistency models** for unpaired and paired image-to-image translation tasks, including domain and style adaptation with conservation of semantics such as image and object classes, masks, ...

- JoliGEN's generative AI capabilities target real-world applications such as **Controlled Image Generation**, **Augmented Reality**, **Dataset Smart Augmentation** and object insertion, and **Synthetic to Real** transforms.

- JoliGEN allows for fast and stable training with high-quality results. A [**server with REST API**](https://www.joligen.com/doc/server.html#running-joligen-server) is provided to simplify deployment and usage.

- JoliGEN exposes a large set of [options and parameters](https://www.joligen.com/doc/options.html). To avoid getting overwhelmed, start with the simple [Quickstarts](https://www.joligen.com/doc/quickstart_ddpm.html), which link to more detailed documentation on models, dataset formats, and data augmentation.
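Unpaired translation has no ground-truth target for each source image. One standard way to keep content consistent in that setting is the cycle-consistency loss from CycleGAN (one of the models listed under Features): translating A→B→A, and B→A→B, should give back the input. The following is a minimal, framework-free sketch of that loss, assuming flattened images stored as plain Python lists and generators given as arbitrary callables. The function names are hypothetical and this is not JoliGEN's implementation.

```python
from typing import Callable, List

# Toy stand-in for an image tensor: a flattened list of pixel values (assumption).
Image = List[float]


def l1_distance(a: Image, b: Image) -> float:
    """Mean absolute difference between two equally sized images."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(
    x_a: Image,
    x_b: Image,
    g_ab: Callable[[Image], Image],
    g_ba: Callable[[Image], Image],
) -> float:
    """CycleGAN-style loss: A->B->A and B->A->B should reconstruct the inputs."""
    forward_cycle = l1_distance(g_ba(g_ab(x_a)), x_a)
    backward_cycle = l1_distance(g_ab(g_ba(x_b)), x_b)
    return forward_cycle + backward_cycle
```

In a real trainer the images would be tensors and this term would be weighted against the adversarial losses; the list-based version only shows the structure of the computation.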

### Useful links

- [JoliGEN documentation](https://www.joligen.com/doc/)

- [GAN Quickstart](https://www.joligen.com/doc/quickstart_gan.html)

- [Diffusion Quickstart](https://www.joligen.com/doc/quickstart_ddpm.html)

- [Datasets](https://www.joligen.com/doc/datasets.html)

- [Training Tips](https://www.joligen.com/doc/tips.html)

## Use cases

- **AR and metaverse**: replace any image element with super-realistic objects

- **Image manipulation**: seamlessly insert or remove objects/elements in images

- **Image-to-image translation while preserving semantics**, e.g. existing source dataset annotations

- **Simulation to reality** translation while preserving elements, metrics, ...

- **Image generation to enrich datasets**, e.g. counter dataset imbalance, increase test sets, ...

This is achieved by combining powerful and customized generator architectures, bags of discriminators, and configurable neural networks and losses that ensure conservation of fundamental elements between source and target images.
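As a rough illustration of how several such losses can be combined, the sketch below sums the generator's adversarial losses against a bag of discriminators and adds a weighted semantic-conservation term. The discriminator names, the weights, and the `generator_objective` helper are hypothetical placeholders chosen for the example, not JoliGEN options; the semantic loss is assumed to be computed elsewhere, for instance by comparing labels predicted on the source and translated images.

```python
from typing import Dict


def generator_objective(
    adversarial_losses: Dict[str, float],
    semantic_loss: float,
    adv_weights: Dict[str, float],
    lambda_sem: float,
) -> float:
    """Weighted sum of per-discriminator adversarial losses plus a semantic term.

    adversarial_losses: generator loss against each discriminator in the bag
    semantic_loss: penalty for labels (classes, masks, boxes) drifting between
        the source and the translated image (assumed computed elsewhere)
    """
    missing = set(adversarial_losses) - set(adv_weights)
    if missing:
        raise KeyError(f"no weight given for discriminators: {sorted(missing)}")
    adversarial = sum(
        adv_weights[name] * loss for name, loss in adversarial_losses.items()
    )
    return adversarial + lambda_sem * semantic_loss


if __name__ == "__main__":
    # Hypothetical values for two discriminators named after the types in Features.
    total = generator_objective(
        adversarial_losses={"projected": 0.5, "vision_aided": 1.0},
        semantic_loss=2.0,
        adv_weights={"projected": 1.0, "vision_aided": 0.5},
        lambda_sem=0.25,
    )
    print(total)  # 1.5
```

Keeping the weights in a dictionary keyed by discriminator makes it easy to add or drop a discriminator from the bag without touching the rest of the objective.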

## Example results

### Satellite imagery inpainting

Filling in missing areas with a diffusion network
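A common step in diffusion inpainting is to paste generated content only into the missing region so that known pixels are left untouched: x_out = m · x_gen + (1 − m) · x_src, with m = 1 on the missing area. The sketch below applies that composite to flattened images stored as Python lists; it is a generic illustration under that assumption, and `composite_inpainting` is a hypothetical helper, not JoliGEN's inpainting code.

```python
from typing import List


def composite_inpainting(
    source: List[float], generated: List[float], mask: List[float]
) -> List[float]:
    """Blend per pixel: mask == 1 takes the generated value, mask == 0 keeps the source.

    Values between 0 and 1 (soft masks) produce a linear blend of the two images.
    """
    if not (len(source) == len(generated) == len(mask)):
        raise ValueError("source, generated and mask must have the same length")
    return [m * g + (1 - m) * s for s, g, m in zip(source, generated, mask)]
```

For example, `composite_inpainting([1, 2, 3], [9, 9, 9], [0, 1, 0])` keeps the first and last pixels and replaces only the masked middle one, giving `[1, 9, 3]`.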

### Image translation while preserving the class

Mario to Sonic while preserving the action (running, jumping, ...)

### Object insertion

Virtual Try-On with Diffusion

Car insertion (BDD100K) with Diffusion

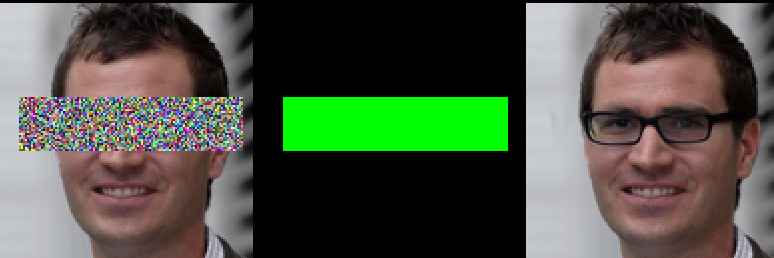

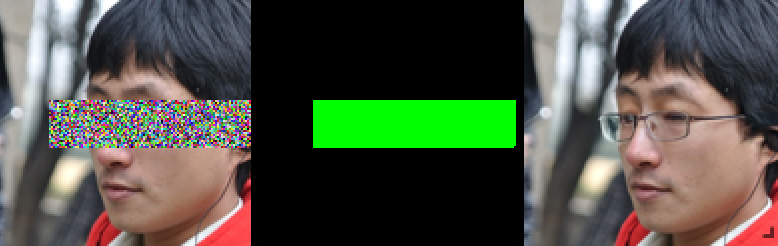

Glasses insertion (FFHQ) with Diffusion

### Object removal

Glasses removal with GANs

### Style transfer while preserving label boxes (e.g. cars, pedestrians, street signs, ...)

Day to night (BDD100K) with Transformers and GANs

Clear to snow (BDD100K) by applying a generator multiple times to add snow incrementally
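Applying a generator several times amounts to feeding it its own output. A minimal sketch, assuming the generator is any callable that maps an image to an image of the same kind; `apply_repeatedly` and the toy `add_snow` function are hypothetical and stand in for a trained model:

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")


def apply_repeatedly(generator: Callable[[T], T], image: T, steps: int) -> List[T]:
    """Feed the generator its own output `steps` times; return every intermediate result."""
    if steps < 0:
        raise ValueError("steps must be non-negative")
    outputs: List[T] = []
    current = image
    for _ in range(steps):
        current = generator(current)
        outputs.append(current)
    return outputs


if __name__ == "__main__":
    # Toy "generator": brightens pixels (clipped at 255) to mimic adding snow.
    def add_snow(img: List[int]) -> List[int]:
        return [min(p + 50, 255) for p in img]

    print(apply_repeatedly(add_snow, [0, 100, 230], 3))
    # [[50, 150, 255], [100, 200, 255], [150, 250, 255]]
```

Returning every intermediate result lets you choose afterwards how much of the effect to keep.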

Clear to overcast (BDD100K)

Clear to rainy (BDD100K)

## Features

- SoTA image-to-image translation

- Semantic consistency: conservation of labels of many types: bounding boxes, masks, classes.

- SoTA discriminator models: [projected](https://arxiv.org/abs/2111.01007), [vision_aided](https://arxiv.org/abs/2112.09130), custom transformers.

- Advanced generators: [real-time](https://github.com/jolibrain/joliGEN/blob/chore_new_readme/models/modules/resnet_architecture/resnet_generator.py#L388), [transformers](https://arxiv.org/abs/2203.16015), [hybrid transformers-CNN](https://github.com/jolibrain/joliGEN/blob/chore_new_readme/models/modules/segformer/segformer_generator.py#L95), [Attention-based](https://arxiv.org/abs/1911.11897), [UNet with attention](https://github.com/jolibrain/joliGEN/blob/chore_new_readme/models/modules/unet_generator_attn/unet_generator_attn.py#L323), [HDiT](https://arxiv.org/abs/2401.11605)

- Multiple models based on adversarial and diffusion generation: [CycleGAN](https://arxiv.org/abs/1703.10593), [CyCADA](https://arxiv.org/abs/1711.03213), [CUT](https://arxiv.org/abs/2007.15651), [Palette](https://arxiv.org/abs/2111.05826)

- GAN data augmentation mechanisms: [APA](https://arxiv.org/abs/2111.06849), discriminator noise injection, standard image augmentation, online augmentation through sampling around bounding boxes

- Output quality metrics: FID, PSNR, KID, ...

- Server with [REST API](https://www.joligen.com/doc/API.html)

- Support for both CPU and GPU

- [Dockerized server](https://www.joligen.com/doc/docker.html)

- Production-grade deployment in C++ via [DeepDetect](https://github.com/jolibrain/deepdetect/)
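Of the quality metrics listed above, PSNR has a simple closed form: PSNR = 10 · log10(MAX² / MSE), where MSE is the mean squared error between the reference and generated images and MAX is the largest possible pixel value. The sketch below is a plain-Python version for flattened images, assuming 8-bit pixels by default; it follows the textbook definition and is not the code JoliGEN uses. FID and KID, by contrast, need features from a pretrained network and are not shown.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], test: Sequence[float], max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(max_value**2 / MSE)."""
    if len(reference) != len(test) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical images: no noise at all
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    print(psnr([0, 0], [255, 255]))  # 0.0, the worst case for 8-bit images
```

Higher PSNR means the output is closer to the reference; for images normalized to [0, 1], pass `max_value=1.0`.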

---

## Code format and Contribution

If you want to contribute, please format your code with [black](https://github.com/psf/black).

Install:

```
pip install black
```

Usage:

```
black .
```

To format the code automatically before every commit:

```
pip install pre-commit
pre-commit install
```

## Authors

**JoliGEN** is created and developed by [Jolibrain](https://www.jolibrain.com/).

Code structure is inspired by [pytorch-CycleGAN-and-pix2pix](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix), [CUT](https://github.com/taesungp/contrastive-unpaired-translation), [AttentionGAN](https://github.com/Ha0Tang/AttentionGAN), [MoNCE](https://github.com/fnzhan/MoNCE), [Palette](https://github.com/Janspiry/Palette-Image-to-Image-Diffusion-Models) among others.

Elements from JoliGEN are supported by the French National AI program ["Confiance.AI"](https://www.confiance.ai/en/).

Contact: