https://github.com/josefalbers/e2tts-mlx

Embarrassingly Easy Fully Non-Autoregressive Zero-Shot TTS (E2 TTS) in MLX

- Host: GitHub

- URL: https://github.com/josefalbers/e2tts-mlx

- Owner: JosefAlbers

- License: apache-2.0

- Created: 2024-10-06T04:06:15.000Z (about 1 year ago)

- Default Branch: main

- Last Pushed: 2024-10-15T12:21:33.000Z (about 1 year ago)

- Last Synced: 2025-07-01T11:07:58.956Z (4 months ago)

- Topics: e2-tts, flow-matching, mlx, multistream, text-to-speech, transformer, tts

- Language: Python

- Size: 2.87 MB

- Stars: 27

- Watchers: 2

- Forks: 4

- Open Issues: 0

Metadata Files:

- Readme: README.md

- License: LICENSE

README

# e2tts-mlx: Embarrassingly Easy Fully Non-Autoregressive Zero-Shot TTS in MLX

A lightweight MLX implementation of the [Embarrassingly Easy Fully Non-Autoregressive Zero-Shot TTS](https://arxiv.org/abs/2406.18009) (E2 TTS) model, with minimal dependencies and efficient computation on Apple Silicon.

## Quick Start

### Install

```zsh
# Quick install (note: PyPI version may not always be up to date)
pip install e2tts-mlx

# For the latest version, you can install directly from the repository:
# git clone https://github.com/JosefAlbers/e2tts-mlx.git
# cd e2tts-mlx
# pip install -e .
```

### Usage

To use a pre-trained model for text-to-speech:

```zsh
e2tts 'We must achieve our own salvation.'
```

This will write `tts_0.wav` to the current directory, which you can then play.

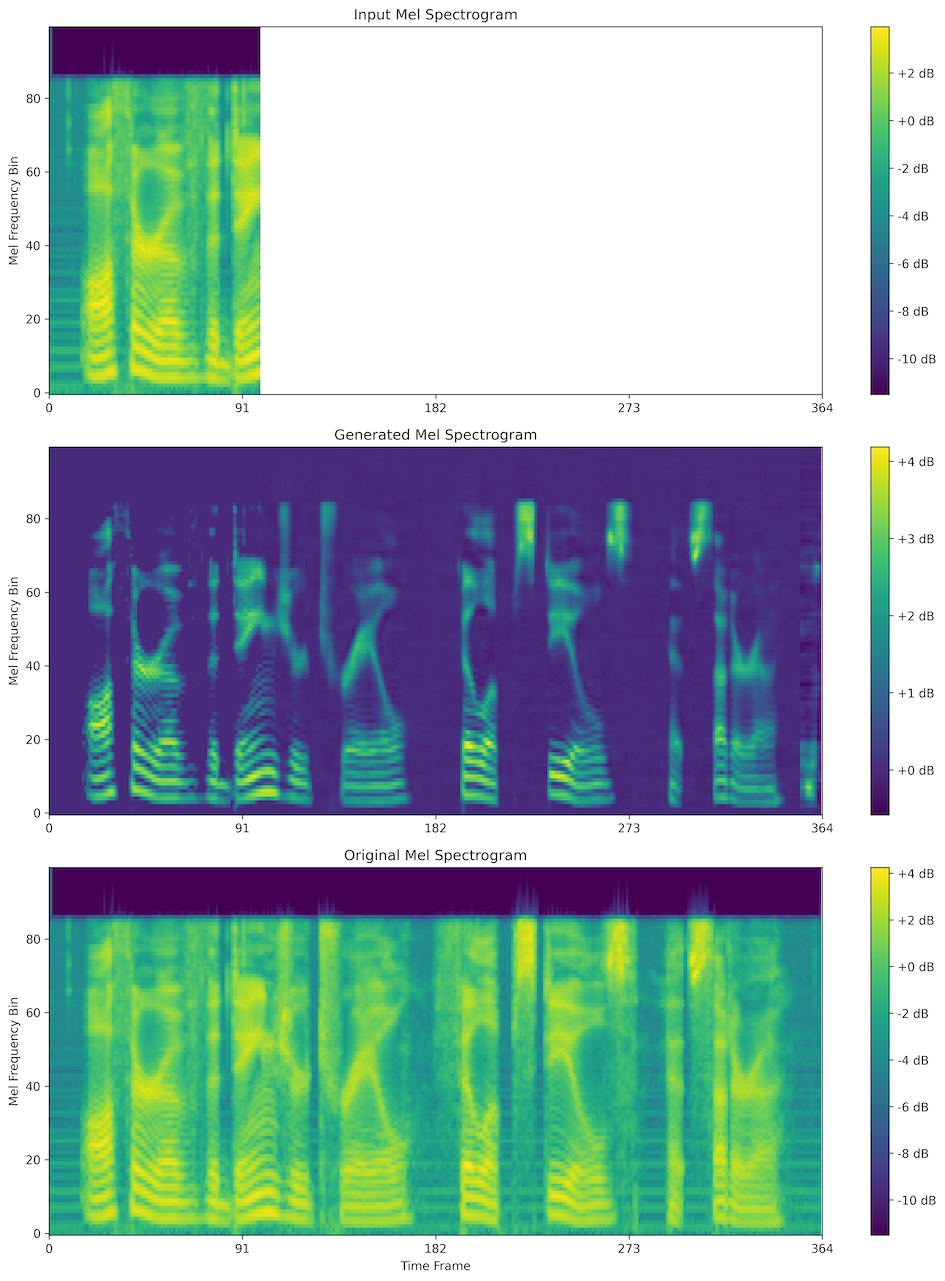

https://github.com/user-attachments/assets/89265113-0785-42f1-b867-c38c886acbef
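If you want to sanity-check `tts_0.wav` before playing it (for example, its sample rate and length), the Python standard library is enough. The sketch below is a hypothetical helper, `describe_wav`, that is not part of e2tts-mlx. It parses the RIFF header by hand because the stdlib `wave` module does not read IEEE-float WAV files, and this README does not say which sample format `e2tts` writes.

```python
import struct
from pathlib import Path


def describe_wav(path):
    """Return (sample_rate, channels, bits_per_sample, duration_seconds) for a RIFF/WAVE file.

    Hypothetical helper: reads the header with struct, so it accepts both
    integer PCM (format 1) and IEEE float (format 3) files.
    """
    data = Path(path).read_bytes()
    riff, _riff_size, wave_id = struct.unpack_from("<4sI4s", data, 0)
    if riff != b"RIFF" or wave_id != b"WAVE":
        raise ValueError(f"{path} is not a RIFF/WAVE file")

    fmt = None
    data_size = None
    offset = 12
    # Walk the chunk list: 4-byte id, 4-byte little-endian size, then the payload.
    while offset + 8 <= len(data):
        chunk_id, chunk_size = struct.unpack_from("<4sI", data, offset)
        body = offset + 8
        if chunk_id == b"fmt ":
            # format tag, channels, sample rate, byte rate, block align, bits per sample
            fmt = struct.unpack_from("<HHIIHH", data, body)
        elif chunk_id == b"data":
            data_size = chunk_size
        # Chunk payloads are padded to an even number of bytes.
        offset = body + chunk_size + (chunk_size & 1)

    if fmt is None or data_size is None:
        raise ValueError(f"{path} is missing a fmt or data chunk")
    _audio_format, channels, sample_rate, byte_rate, _block_align, bits = fmt
    return sample_rate, channels, bits, data_size / byte_rate
```

For example, `describe_wav("tts_0.wav")` returns a `(sample_rate, channels, bits_per_sample, seconds)` tuple. On macOS the file can be played with the built-in `afplay tts_0.wav`.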

To train a new model with default settings:

```zsh
e2tts
```

To train with custom options:

```zsh
e2tts --batch_size=16 --n_epoch=100 --lr=1e-4 --depth=8 --n_ode=32
```

Selected training options:

- `--batch_size`: Set the batch size (default: 32)

- `--n_epoch`: Set the number of epochs (default: 10)

- `--lr`: Set the learning rate (default: 2e-4)

- `--depth`: Set the model depth (default: 8)

- `--n_ode`: Set the number of ODE solver steps used for sampling (default: 1)

- `--more_ds`: Set the additional dataset used for [two-set training](https://arxiv.org/pdf/2410.07041) (default: 'JosefAlbers/lj-speech')
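`--n_ode` controls how many steps are taken when sampling. In flow-matching models such as E2 TTS, sampling integrates a learned velocity field from Gaussian noise (t = 0) to data (t = 1). The sketch below assumes a plain fixed-step Euler solver and substitutes a toy closed-form velocity field (the straight-line path toward a single target point) for the trained transformer. `euler_sample` and `toy_velocity` are hypothetical names, not functions from e2tts-mlx, and the repository may use a different solver.

```python
import random


def toy_velocity(x, t, target):
    """Toy stand-in for the trained network (hypothetical).

    Velocity of the straight-line path from x to a single target point,
    scaled by the remaining time 1 - t. Only valid for t < 1.
    """
    return [(y - xi) / (1.0 - t) for xi, y in zip(x, target)]


def euler_sample(velocity, x0, n_ode):
    """Integrate dx/dt = velocity(x, t) from t = 0 to t = 1 in n_ode Euler steps."""
    if n_ode < 1:
        raise ValueError("n_ode must be at least 1")
    dt = 1.0 / n_ode
    x = list(x0)
    for k in range(n_ode):
        t = k * dt  # always < 1, so the toy field never divides by zero
        v = velocity(x, t)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


# Demo: start from Gaussian noise and integrate toward a toy "mel frame".
rng = random.Random(0)
target = [0.5, -1.0, 2.0]
noise = [rng.gauss(0.0, 1.0) for _ in target]
for steps in (1, 8, 32):
    out = euler_sample(lambda x, t: toy_velocity(x, t, target), noise, steps)
    print(steps, [round(v, 6) for v in out])
```

With this toy field every step count lands on the target, because Euler integration is exact along a straight-line path. A trained network's field is only approximately straight, which is roughly why raising `--n_ode` can trade sampling speed for accuracy.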

## Acknowledgements

Special thanks to [lucidrains](https://github.com/lucidrains/e2-tts-pytorch) for the fantastic code that inspired this project, and to [lucasnewman](https://github.com/lucasnewman/vocos-mlx) for the Vocos implementation that made this possible.

## License

Apache License 2.0