https://github.com/josefalbers/whisper-turbo-mlx

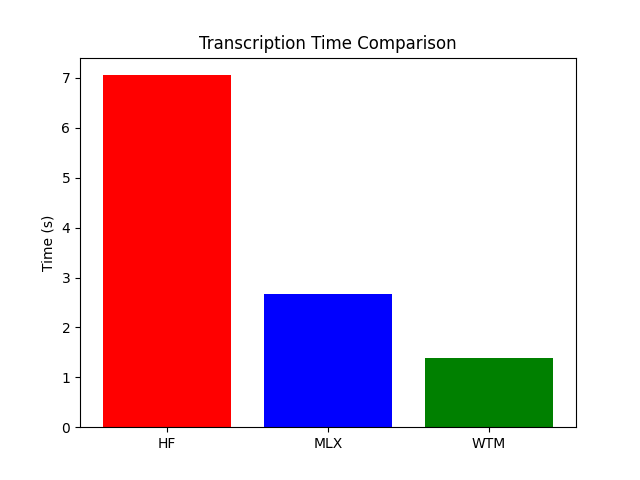

Blazing fast whisper turbo for ASR (speech-to-text) tasks

- Host: GitHub
- URL: https://github.com/josefalbers/whisper-turbo-mlx
- Owner: JosefAlbers
- License: MIT
- Created: 2024-10-17T09:31:49.000Z
- Default Branch: main
- Last Pushed: 2024-10-20T04:46:00.000Z
- Last Synced: 2025-04-03T12:07:45.467Z
- Topics: asr, deep-learning, mlx, speech-recognition, speech-to-text, whisper, whisper-turbo
- Language: Python
- Size: 527 KB
- Stars: 202
- Watchers: 7
- Forks: 9
- Open Issues: 0

Metadata Files:

- Readme: README.md

- License: LICENSE

# WTM (Whisper Turbo MLX)

This repository provides a fast, lightweight implementation of the [Whisper](https://github.com/openai/whisper/discussions/2363) turbo model in [MLX](https://github.com/ml-explore/mlx-examples/tree/main/whisper). It is designed for efficient audio transcription and is contained in a single file of under 300 lines.

## Installation

```zsh
brew install ffmpeg
git clone https://github.com/JosefAlbers/whisper-turbo-mlx.git
cd whisper-turbo-mlx
pip install -e .
```
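The `brew install ffmpeg` step suggests that audio decoding is delegated to ffmpeg, as in the reference Whisper implementations. The sketch below is modeled on the `load_audio` helper in OpenAI's reference Whisper code; it is an assumption that this repository does something similar, and the names `ffmpeg_command`, `pcm16_to_float`, and `load_audio` here are hypothetical. It shows how an arbitrary audio file can be decoded into the 16 kHz mono samples Whisper models expect:

```python
import subprocess
import sys
from array import array
from typing import List

SAMPLE_RATE = 16_000  # Whisper models expect 16 kHz mono audio


def ffmpeg_command(path: str, sr: int = SAMPLE_RATE) -> List[str]:
    """Build an ffmpeg command that decodes any audio file to raw 16-bit mono PCM on stdout."""
    return [
        "ffmpeg", "-nostdin", "-threads", "0", "-i", path,
        "-f", "s16le", "-ac", "1", "-acodec", "pcm_s16le", "-ar", str(sr), "-",
    ]


def pcm16_to_float(raw: bytes) -> List[float]:
    """Convert little-endian signed 16-bit PCM bytes to floats in [-1.0, 1.0)."""
    samples = array("h")
    samples.frombytes(raw)
    if sys.byteorder == "big":  # s16le is little-endian, so swap on big-endian hosts
        samples.byteswap()
    return [s / 32768.0 for s in samples]


def load_audio(path: str, sr: int = SAMPLE_RATE) -> List[float]:
    """Decode `path` with ffmpeg (which must be on PATH) and return mono float samples."""
    out = subprocess.run(ffmpeg_command(path, sr), capture_output=True, check=True).stdout
    return pcm16_to_float(out)
```

Resampling and down-mixing are left entirely to ffmpeg, so the Python side only has to reinterpret the raw bytes as integers and scale them.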

## Quick Start

To transcribe an audio file:

```zsh
wtm test.wav
```

To call it from an interactive Python session:

```python
>>> from whisper_turbo import transcribe
>>> transcribe('test.wav', any_lang=True)
```

## Quick Parameter

The `quick` parameter selects between two transcription methods:

- **`quick=True`**: Uses a parallel processing method for faster transcription. The output may be choppier, but it is significantly quicker, which makes it ideal when speed is the priority (e.g., feeding transcripts of many audio recordings into an LLM to collect quick summaries).

- **`quick=False`** (default): Uses a recurrent processing method that is slower but yields more faithful and coherent transcriptions (while still being faster than other reference implementations).
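The README does not spell out how the two methods work internally. As a hypothetical illustration of the general trade-off only, assume (as with standard Whisper) that audio is split into 30-second windows at 16 kHz. A parallel method can decode all windows in one batch, with no context across window boundaries, which is one plausible source of choppier output. A recurrent method decodes windows one at a time and feeds the previous text forward as a prompt, similar to the `condition_on_previous_text` behavior of OpenAI's reference Whisper. The `decode` callable below is a stand-in for the model, and none of these names are this repository's API:

```python
from typing import Callable, List, Optional

SAMPLE_RATE = 16_000
CHUNK_SECONDS = 30  # Whisper's encoder consumes fixed 30-second windows
CHUNK_SAMPLES = SAMPLE_RATE * CHUNK_SECONDS

# Hypothetical decoder signature: (batch of windows, optional previous text) -> one text per window
Decoder = Callable[[List[List[float]], Optional[str]], List[str]]


def split_into_chunks(samples: List[float], size: int = CHUNK_SAMPLES) -> List[List[float]]:
    """Split audio into consecutive fixed-size windows (the last one may be shorter)."""
    return [samples[i:i + size] for i in range(0, len(samples), size)]


def transcribe_parallel(samples: List[float], decode: Decoder) -> str:
    """quick=True style: decode every window in a single batch with no cross-window context."""
    return " ".join(decode(split_into_chunks(samples), None))


def transcribe_sequential(samples: List[float], decode: Decoder) -> str:
    """quick=False style: decode one window at a time, passing the previous text as a prompt."""
    texts: List[str] = []
    previous: Optional[str] = None
    for chunk in split_into_chunks(samples):
        (text,) = decode([chunk], previous)  # exactly one window in, one text out
        texts.append(text)
        previous = text
    return " ".join(texts)
```

The batched version makes one model call regardless of audio length, while the sequential version makes one call per window but gives each window the context of the text before it.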

You can set this parameter from the command line or when calling the `transcribe` function:

```zsh
wtm test.wav --quick=True
```

```python
>>> transcribe('test.wav', quick=True)
```
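For the many-recordings use case mentioned above, a small helper can run `quick=True` over a whole folder. This is a minimal sketch: `find_audio` and `transcribe_all` are hypothetical helpers, the `*.wav` pattern is an assumption, and the transcription function is passed in as a parameter so the helper can be exercised without the model. The README does not document what `transcribe` returns, so the sketch simply stores whatever it returns for each file:

```python
from pathlib import Path
from typing import Any, Callable, Dict, Iterable, List


def find_audio(folder: str, pattern: str = "*.wav") -> List[str]:
    """Return matching audio file paths in `folder`, sorted for a stable order."""
    return [str(p) for p in sorted(Path(folder).glob(pattern))]


def transcribe_all(paths: Iterable[str], transcribe_fn: Callable[..., Any]) -> Dict[str, Any]:
    """Call `transcribe_fn(path, quick=True)` for each path, keyed by file name."""
    return {Path(p).name: transcribe_fn(p, quick=True) for p in paths}
```

With the package installed, `from whisper_turbo import transcribe` followed by `transcribe_all(find_audio("recordings"), transcribe)` would transcribe every `.wav` file in a hypothetical `recordings` folder, ready to be passed on for summarization.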

## Acknowledgements

This project builds upon the reference [MLX implementation](https://github.com/ml-explore/mlx-examples/tree/main/whisper) of the Whisper model. Many thanks to the contributors of the MLX project for their exceptional work and inspiration.

## Contributing

Contributions are welcome! Feel free to submit issues or pull requests.