https://github.com/kirili4ik/stargan-pytorch

StarGAN model written in PyTorch

- Host: GitHub

- URL: https://github.com/kirili4ik/stargan-pytorch

- Owner: Kirili4ik

- Created: 2021-03-11T23:21:17.000Z (over 4 years ago)

- Default Branch: main

- Last Pushed: 2021-03-27T14:54:03.000Z (over 4 years ago)

- Last Synced: 2025-01-20T18:46:21.797Z (9 months ago)

- Topics: conditional-gan, pytorch, stargan

- Language: Jupyter Notebook

- Homepage:

- Size: 21.5 MB

- Stars: 0

- Watchers: 2

- Forks: 0

- Open Issues: 0

Metadata Files:

- Readme: README.md

README

# StarGAN model in PyTorch

This repository is an implementation of the model described in [StarGAN: Unified Generative Adversarial Networks for Multi-Domain Image-to-Image Translation](https://arxiv.org/pdf/1711.09020v3.pdf).
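
StarGAN uses a single generator for all domains by conditioning it on the target domain: the target label vector is replicated over the spatial grid and concatenated to the input image as extra channels. A minimal sketch of that conditioning, assuming RGB inputs and a 5-dimensional label vector. `concat_domain_labels` and `TinyConditionalGenerator` are hypothetical names, not the classes in main.ipynb, and the toy two-layer network only stands in for the real encoder/residual/decoder generator.

```python
import torch
import torch.nn as nn


def concat_domain_labels(images: torch.Tensor, labels: torch.Tensor) -> torch.Tensor:
    """Tile target-domain labels over the spatial grid and append them as channels.

    images: (N, C, H, W) batch of input images
    labels: (N, c_dim) target-domain vector (e.g. binary attributes)
    returns: (N, C + c_dim, H, W)
    """
    n, _, h, w = images.shape
    # (N, c_dim) -> (N, c_dim, 1, 1) -> (N, c_dim, H, W)
    label_maps = labels.reshape(n, -1, 1, 1).expand(-1, -1, h, w)
    return torch.cat([images, label_maps.to(images.dtype)], dim=1)


class TinyConditionalGenerator(nn.Module):
    """Hypothetical toy generator: its first layer sees image + label channels."""

    def __init__(self, img_channels: int = 3, c_dim: int = 5, hidden: int = 64):
        super().__init__()
        self.net = nn.Sequential(
            nn.Conv2d(img_channels + c_dim, hidden, kernel_size=7, padding=3),
            nn.ReLU(inplace=True),
            nn.Conv2d(hidden, img_channels, kernel_size=7, padding=3),
            nn.Tanh(),  # outputs in [-1, 1], matching images normalised to [-1, 1]
        )

    def forward(self, x: torch.Tensor, c: torch.Tensor) -> torch.Tensor:
        return self.net(concat_domain_labels(x, c))


if __name__ == "__main__":
    x = torch.randn(2, 3, 128, 128)
    c = torch.tensor([[1.0, 0.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 0.0, 1.0]])
    print(TinyConditionalGenerator()(x, c).shape)  # torch.Size([2, 3, 128, 128])
```

Because the labels enter as ordinary input channels, translating an image to another domain only means passing a different `c`; the same weights serve every attribute combination.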

The model was trained on the CelebA dataset, which is available in Torchvision (or via the link in main.ipynb).
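
A sketch of loading CelebA through torchvision and keeping only a few attributes as domain labels. Assumptions: the five attributes below are the ones used in the StarGAN paper's CelebA experiments, the 178-pixel center crop follows the official StarGAN code, and `SelectAttrs` / `build_celeba` are hypothetical helpers; the notebook's actual preprocessing may differ. torchvision's automatic CelebA download can fail, so `download` defaults to `False` here and the files can be placed under `root` manually.

```python
import torch

# Assumption: attributes from the StarGAN paper; main.ipynb may use a different set.
SELECTED_ATTRS = ["Black_Hair", "Blond_Hair", "Brown_Hair", "Male", "Young"]


class SelectAttrs:
    """Hypothetical target_transform keeping only the chosen CelebA attributes."""

    def __init__(self, attr_names, selected=SELECTED_ATTRS):
        names = list(attr_names)
        self.idx = torch.tensor([names.index(a) for a in selected])

    def __call__(self, attr: torch.Tensor) -> torch.Tensor:
        # torchvision returns a (40,) tensor of 0/1 values; pick our columns as floats
        return attr[self.idx].float()


def build_celeba(root: str, split: str = "train", image_size: int = 128, download: bool = False):
    # Imported here so SelectAttrs can be used without torchvision installed.
    import torchvision.transforms as T
    from torchvision.datasets import CelebA

    transform = T.Compose([
        T.CenterCrop(178),  # assumption: square face crop, as in the official StarGAN code
        T.Resize(image_size),  # 178x178 -> image_size x image_size
        T.ToTensor(),
        T.Normalize([0.5] * 3, [0.5] * 3),  # scale pixels to [-1, 1] for a tanh generator
    ])
    dataset = CelebA(root, split=split, target_type="attr", transform=transform, download=download)
    dataset.target_transform = SelectAttrs(dataset.attr_names)
    return dataset
```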

The main file is the notebook (main.ipynb) with the train/test loops. It also contains the config, so all hyperparameters can be found there.
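
For illustration, a config of the kind the notebook keeps could look like the dataclass below. Only `image_size` and `batch_size` come from this README; the remaining defaults are the values reported in the StarGAN paper and are assumptions, not the settings in main.ipynb.

```python
from dataclasses import asdict, dataclass


@dataclass
class Config:
    # From this README: images resized to 128x128, batch size 32.
    image_size: int = 128
    batch_size: int = 32
    # Assumptions: defaults from the StarGAN paper, not taken from main.ipynb.
    c_dim: int = 5  # number of target attributes
    lambda_cls: float = 1.0  # domain classification loss weight
    lambda_rec: float = 10.0  # reconstruction (cycle-consistency) loss weight
    lambda_gp: float = 10.0  # gradient penalty weight (WGAN-GP)
    g_lr: float = 1e-4  # generator learning rate
    d_lr: float = 1e-4  # discriminator learning rate
    betas: tuple = (0.5, 0.999)  # Adam betas
    n_critic: int = 5  # discriminator updates per generator update


cfg = Config(batch_size=16)
print(asdict(cfg)["batch_size"])  # 16
```

`asdict(cfg)` yields a plain dict, which is convenient for logging all hyperparameters alongside a run.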

WandB logging and the report can be found on the WandB website ([report](https://wandb.ai/kirili4ik/dgm-ht2/reports/-2-DGM-StarGan---Vmlldzo1MjE1NTk), [logging](https://wandb.ai/kirili4ik/dgm-ht2?workspace=user-kirili4ik)). They contain a lot of good and bad samples and graphs! (The report is in Russian.)

Here are some good samples from the trained models:

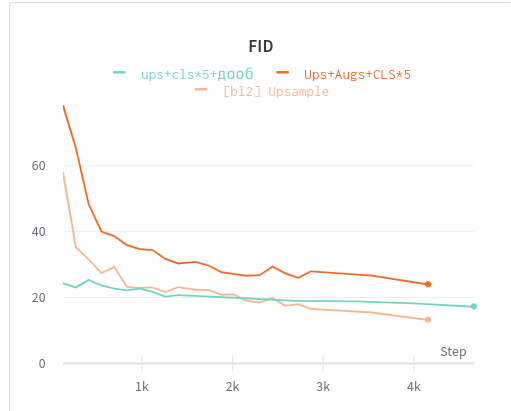

There were also some discoveries and experiments, e.g. a comparison of architectures by FID:

As a result of these experiments, InstanceNorm (IN) layers in the Generator have been replaced with BatchNorm (BN) for now. Also, ConvTranspose layers have been replaced with Upsample+Conv layers, as described [here](https://distill.pub/2016/deconv-checkerboard/). Training was performed on an Nvidia 2080 Ti with images resized to 128x128 and a batch size of 32.
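
A sketch of a decoder block after both changes: nearest-neighbour upsampling followed by a 3x3 convolution in place of a strided transposed convolution (e.g. `nn.ConvTranspose2d(in_ch, out_ch, kernel_size=4, stride=2, padding=1)`, as in the official StarGAN generator), with `BatchNorm2d` instead of `InstanceNorm2d`. The helper name `upsample_block` and the exact layer order are assumptions, not the code from main.ipynb.

```python
import torch
import torch.nn as nn


def upsample_block(in_ch: int, out_ch: int) -> nn.Sequential:
    """Hypothetical 2x upsampling block without ConvTranspose2d (resize, then convolve)."""
    return nn.Sequential(
        # Nearest-neighbour resize avoids the uneven kernel overlap that
        # produces checkerboard artifacts in strided transposed convolutions.
        nn.Upsample(scale_factor=2, mode="nearest"),
        # 3x3 conv with padding 1 keeps the (already doubled) spatial size.
        nn.Conv2d(in_ch, out_ch, kernel_size=3, stride=1, padding=1, bias=False),
        nn.BatchNorm2d(out_ch),  # BatchNorm instead of InstanceNorm
        nn.ReLU(inplace=True),
    )


if __name__ == "__main__":
    block = upsample_block(256, 128)
    print(block(torch.randn(4, 256, 32, 32)).shape)  # torch.Size([4, 128, 64, 64])
```

The convolution drops its bias because the following BatchNorm subtracts the per-channel mean anyway.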

Thx [mseitzer](https://github.com/mseitzer/pytorch-fid) for implementing Inception.py for FID calculation.
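
FID compares Gaussians fitted to Inception activations of real and generated images: `FID = ||mu1 - mu2||^2 + Tr(S1 + S2 - 2 (S1 S2)^(1/2))`. pytorch-fid computes the matrix square root with `scipy.linalg.sqrtm`; the numpy-only sketch below swaps in an eigendecomposition instead, using the fact that `S1 S2` has the same eigenvalues as `S1^(1/2) S2 S1^(1/2)`, which is symmetric positive semi-definite. Feature extraction with the Inception network is not shown, and `activation_stats` / `frechet_distance` are hypothetical names, not pytorch-fid's API.

```python
import numpy as np


def activation_stats(acts):
    """Mean and covariance of an (n_samples, n_features) activation matrix."""
    acts = np.asarray(acts, dtype=np.float64)
    return acts.mean(axis=0), np.cov(acts, rowvar=False)


def frechet_distance(mu1, sigma1, mu2, sigma2) -> float:
    """FID = ||mu1 - mu2||^2 + Tr(S1 + S2 - 2 (S1 S2)^(1/2))."""
    mu1, mu2 = np.atleast_1d(mu1), np.atleast_1d(mu2)
    sigma1, sigma2 = np.atleast_2d(sigma1), np.atleast_2d(sigma2)
    diff = mu1 - mu2

    # Symmetric square root of S1 via eigh: V diag(sqrt(w)) V^T.
    w1, v1 = np.linalg.eigh(sigma1)
    sqrt_s1 = (v1 * np.sqrt(np.clip(w1, 0, None))) @ v1.T
    # Tr((S1 S2)^(1/2)) = sum of sqrt of eigenvalues of S1^(1/2) S2 S1^(1/2).
    m = sqrt_s1 @ sigma2 @ sqrt_s1
    tr_covmean = np.sqrt(np.clip(np.linalg.eigvalsh(m), 0, None)).sum()

    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2 * tr_covmean)
```

For example, two 1-D Gaussians with means 0 and 1 and variances 4 and 1 give `1 + 5 - 2 * 2 = 2`.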

Thx [WandB](https://wandb.ai/) for the convenient logging and the beautiful report-writing tool.