https://github.com/kong36088/BaiduImageSpider

A super lightweight Baidu image crawler


baidu crawler python3 spider

Last synced: 3 months ago



- Host: GitHub

- URL: https://github.com/kong36088/BaiduImageSpider

- Owner: kong36088

- License: MIT

- Created: 2016-02-06T15:54:08.000Z (over 9 years ago)

- Default Branch: master

- Last Pushed: 2023-05-29T09:24:08.000Z (about 2 years ago)

- Last Synced: 2024-10-29T15:49:14.563Z (8 months ago)

- Topics: baidu, crawler, python3, spider

- Language: Python

- Homepage:

- Size: 52.7 KB

- Stars: 874

- Watchers: 24

- Forks: 392

- Open Issues: 4


Metadata Files:

- Readme: README.md

Awesome Lists containing this project

- awesome - kong36088/BaiduImageSpider - A super lightweight Baidu image crawler (Python)


README

# BaiduImageSpider

A Baidu image crawler, based on Python 3.

Built for personal learning and development.

Crawls Baidu Images in a single thread.

# Requirements

**Requires Python >= 3.6**

# Usage

```
$ python crawling.py -h
usage: crawling.py [-h] -w WORD -tp TOTAL_PAGE -sp START_PAGE
                   [-pp [{10,20,30,40,50,60,70,80,90,100}]] [-d DELAY]

optional arguments:
  -h, --help            show this help message and exit
  -w WORD, --word WORD  keyword to crawl
  -tp TOTAL_PAGE, --total_page TOTAL_PAGE
                        total number of pages to crawl
  -sp START_PAGE, --start_page START_PAGE
                        starting page number
  -pp [{10,20,30,40,50,60,70,80,90,100}], --per_page [{10,20,30,40,50,60,70,80,90,100}]
                        number of results per page
  -d DELAY, --delay DELAY
                        crawl delay (interval between requests)
```
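The help output above can be reproduced with a small `argparse` setup. The following is only a sketch inferred from the usage text, not the project's actual source: the function name `build_parser`, the argument types, and the defaults for `--per_page` (30) and `--delay` (0.05 seconds) are assumptions.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Build a parser that mirrors the flags shown in the help output."""
    parser = argparse.ArgumentParser(prog="crawling.py")
    # The three required options from the usage line.
    parser.add_argument("-w", "--word", type=str, required=True,
                        help="keyword to crawl")
    parser.add_argument("-tp", "--total_page", type=int, required=True,
                        help="total number of pages to crawl")
    parser.add_argument("-sp", "--start_page", type=int, required=True,
                        help="starting page number")
    # Optional page size, restricted to multiples of 10 up to 100.
    # The default and const value of 30 are assumptions.
    parser.add_argument("-pp", "--per_page", type=int, nargs="?",
                        choices=list(range(10, 101, 10)),
                        default=30, const=30,
                        help="number of results per page")
    # Optional delay between requests; the default is an assumption.
    parser.add_argument("-d", "--delay", type=float, default=0.05,
                        help="crawl delay (interval between requests)")
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args()
    print(args)
```

Restricting `--per_page` with `choices` makes `argparse` reject unsupported page sizes before any request is sent.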

Start crawling images:

```

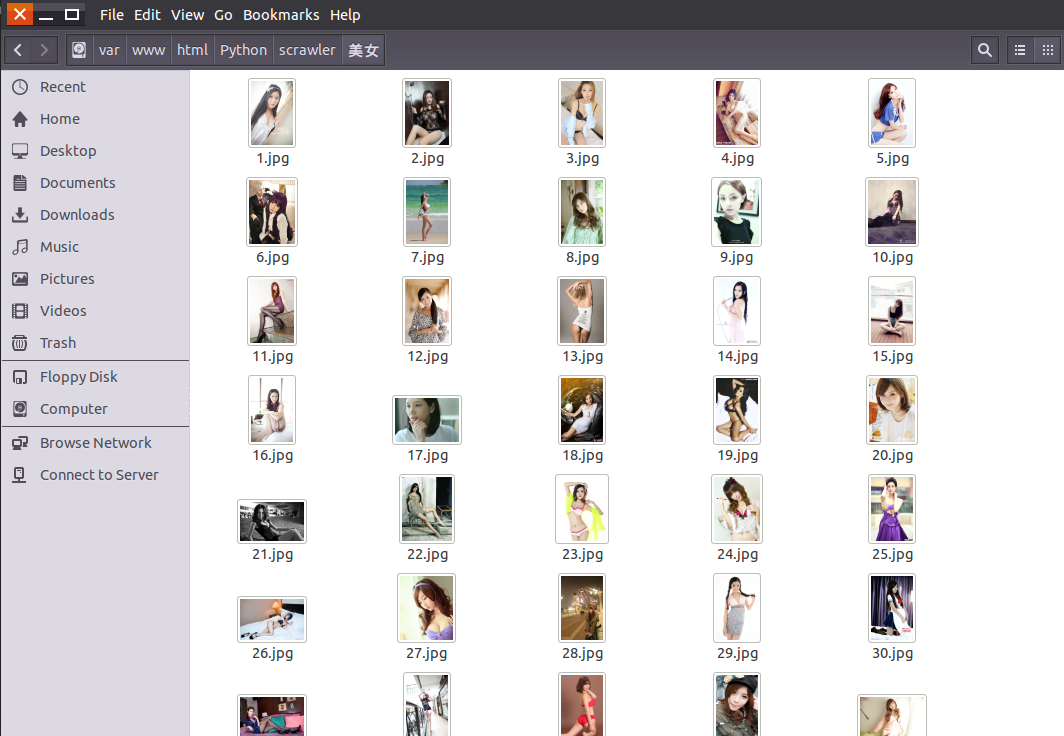

python crawling.py --word "美女" --total_page 10 --start_page 1 --per_page 30

```
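One plausible reading of the paging flags is that each page covers `per_page` results and that `start_page` is 1-based. Under that assumption, the result offsets to request can be computed as below. The helper `page_offsets` is hypothetical and may not match how `crawling.py` computes its offsets.

```python
from typing import List


def page_offsets(start_page: int, total_page: int, per_page: int) -> List[int]:
    """Return the zero-based result offset of each page to fetch.

    Assumes start_page is 1-based and every page holds per_page results.
    """
    if start_page < 1 or total_page < 0 or per_page <= 0:
        raise ValueError("invalid paging arguments")
    first = (start_page - 1) * per_page
    return [first + i * per_page for i in range(total_page)]


if __name__ == "__main__":
    # With the command above: 10 pages of 30 results, starting at page 1.
    print(page_offsets(start_page=1, total_page=10, per_page=30))
```

Under this reading, the command above would cover 10 × 30 = 300 results.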

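Alternatively, you can edit the search keyword on the last line of `crawling.py`.

Images are saved to the project directory by default.

Run the crawler: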

```
python crawling.py
```

# Blog

[Crawler write-up](http://www.jwlchina.cn/2016/02/06/python%E7%99%BE%E5%BA%A6%E5%9B%BE%E7%89%87%E7%88%AC%E8%99%AB/)

Screenshot:

# Donate

Your support is my greatest encouragement!

Thank you for treating me to some candy.