https://github.com/krishnan-r/sparkmonitor

Monitor Apache Spark from Jupyter Notebook

- Host: GitHub

- URL: https://github.com/krishnan-r/sparkmonitor

- Owner: krishnan-r

- License: apache-2.0

- Archived: true

- Created: 2017-05-31T09:13:01.000Z (over 8 years ago)

- Default Branch: master

- Last Pushed: 2022-05-16T18:45:37.000Z (over 3 years ago)

- Last Synced: 2025-01-13T00:27:10.439Z (9 months ago)

- Topics: extension, jupyter, spark

- Language: JavaScript

- Homepage: https://krishnan-r.github.io/sparkmonitor/

- Size: 3.97 MB

- Stars: 172

- Watchers: 9

- Forks: 55

- Open Issues: 31

Metadata Files:

- Readme: README.md

- License: LICENSE

Awesome Lists containing this project

- awesome-jupyter-resources (listed under Jupyter extensions)

README

# Spark Monitor - An extension for Jupyter Notebook

### Note: This project is now maintained at https://github.com/swan-cern/sparkmonitor

## [Google Summer of Code - Final Report](https://krishnan-r.github.io/sparkmonitor/)

For the Google Summer of Code final report of this project, [click here](https://krishnan-r.github.io/sparkmonitor/).

## About

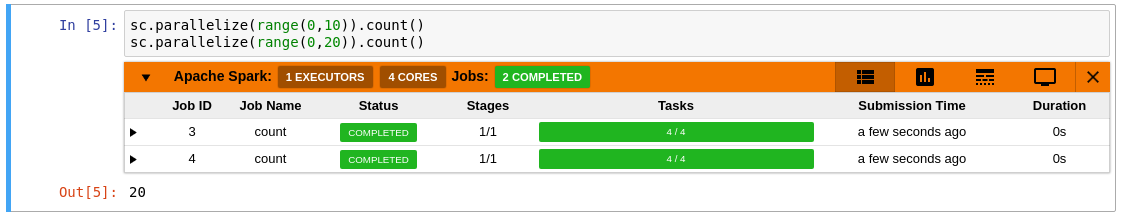

SparkMonitor is an extension for Jupyter Notebook that enables live monitoring of Apache Spark jobs spawned from a notebook. It provides several features for monitoring and debugging a Spark job from within the notebook interface itself.

***

## Features

* Automatically displays a live monitoring tool below cells that run Spark jobs in a Jupyter notebook

* A table of jobs and stages with progress bars

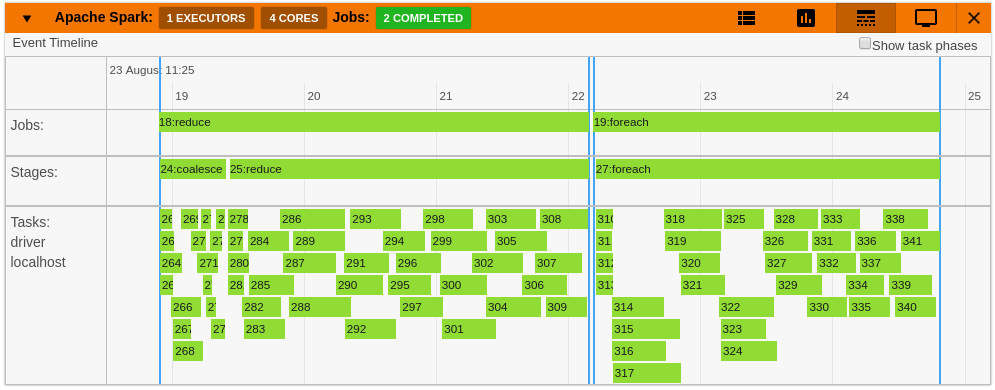

* A timeline which shows jobs, stages, and tasks

* A graph showing the number of active tasks and executor cores over time

* A notebook server extension that proxies the Spark UI and displays it in an iframe popup for more details

* For a detailed list of features, see the use case [notebooks](https://krishnan-r.github.io/sparkmonitor/#common-use-cases-and-tests)

* [How it Works](https://krishnan-r.github.io/sparkmonitor/how.html)

## Quick Installation

```bash

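# Install the Python package
pip install sparkmonitor

# Install and enable the notebook front-end extension
jupyter nbextension install sparkmonitor --py --user --symlink
jupyter nbextension enable sparkmonitor --py --user

# Enable the notebook server extension (proxies the Spark UI)
jupyter serverextension enable --py --user sparkmonitor

# Create the default IPython profile and load the kernel extension in it
ipython profile create && echo "c.InteractiveShellApp.extensions.append('sparkmonitor.kernelextension')" >> "$(ipython profile locate default)/ipython_kernel_config.py"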

```
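
The final installation command appends the kernel extension line to `ipython_kernel_config.py` unconditionally, so running the quick installation twice would leave a duplicate entry. The following is a minimal sketch of an idempotent variant. The helper name `ensure_line` is hypothetical and not part of sparkmonitor, and the sketch assumes a `grep` that supports the standard `-q`, `-x`, and `-F` options.

```bash
# Hypothetical helper (not part of sparkmonitor): append a line to a file
# only if the file does not already contain that exact line.
ensure_line() {
  local file="$1"
  local line="$2"
  # -q: quiet, -x: match the whole line, -F: treat the pattern as a fixed string.
  # A missing file makes grep fail, so the line is appended and the file created.
  if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$file"
  fi
}

# Usage (assumes IPython is installed and `ipython profile create` has been run):
#   ensure_line "$(ipython profile locate default)/ipython_kernel_config.py" \
#     "c.InteractiveShellApp.extensions.append('sparkmonitor.kernelextension')"
```

Matching the whole line as a fixed string avoids treating the parentheses and dots in the configuration line as regular-expression syntax.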

#### For more detailed instructions [click here](https://krishnan-r.github.io/sparkmonitor/install.html)

#### To do a quick test of the extension:

```bash

docker run -it -p 8888:8888 krishnanr/sparkmonitor

```

## Integration with ROOT and SWAN

At CERN, the SparkMonitor extension is aimed at two main use cases:

* Distributed analysis with [ROOT](https://root.cern.ch/) and Apache Spark using the DistROOT module. [Here](https://krishnan-r.github.io/sparkmonitor/usecase_distroot.html) is an example demonstrating this use case.

* Integration with [SWAN](https://swan.web.cern.ch/), a service for web-based analysis, via a modified [container image](https://github.com/krishnan-r/sparkmonitorhub) for SWAN user sessions.